Editor’s Note: This text course is an edited transcript of a live webinar.

Gus Mueller: This is our fourth year of our Vanderbilt Journal Club, which meets quarterly. Thanks for joining. At each session, our guest presenters track down the best journal articles that have been published in the last year, and talk about how they might relate to what many of you do on a daily basis. Our guest today is Todd Ricketts.

Dr. Ricketts, an associate professor, has been at Vanderbilt for about 10 years now. He has a very active research lab that publishes some of the top articles on hearing aids each year. He also is active with the Au.D. students and mentors his own Ph.D. students. In addition, over the past year he has taken on the huge task of serving as Director of Graduate Training at Vanderbilt University. Thank you, Todd, for talking to us today.

Todd Ricketts: Thank you, Gus. Welcome to our Journal Club. As Gus suggested, I tried to pick hearing aid articles that I thought had some interesting clinical relevance.

Article #1

The first article that I am going to review is titled “Real-Life Efficacy and Reliability of Training a Hearing Aid,” published by the group at National Acoustic Laboratories (NAL) in Australia (Keidser & Alamudi, 2013). There have been a few articles published on trainable hearing aids. The reason I selected this one is that it is one of the first articles that examines what I would consider second-generation trainable hearing aids. Also, the comparison prescriptive method was NAL-NL2, rather than NAL-NL1, making it a little more up-to-date.

The authors asked, “Do patients reliably train away from the verified and validated prescriptive method of NAL-NL2?” They were also interested in whether the actual listening environment affected the reliability of the training. They wanted to examine whether patients could train reliably and whether they exhibited a preference for the trained gain; the preference data were based on journals that the participants kept of their real-world experiences. This research matters for a number of reasons; most importantly, if some patients can reliably train to an individual preferred gain, this technology may be particularly useful once appropriate clinical guidelines for its use are established.

Methods

The authors recruited 26 individuals for this study, but only 25 experienced hearing aid wearers were able to complete the study. They were all older individuals with a median age of 79. They were fitted with prototypes of commercially available multi-memory devices that enabled training of the compression characteristics in four frequency bands and six sound classes. They initially were programmed to the NAL-NL2, which was clinically modified and verified. This, importantly, was how we would typically do things in the clinic, which is to first fit them to NAL-NL2 and then modify as needed. After that, individuals trained the devices for three weeks, and compared the trained settings to the NAL-NL2 prescribed settings for two weeks by using the two different settings on alternate days. Participants made daily diary satisfaction ratings and completed a structured interview after the comparison trial. The authors also wanted to see if the training was reliable, so they had 19 participants go back to the original NAL-NL2 settings and repeat the training and comparison trials.

Results

On average, there were not large changes in trained gain from the first to the second trials. For the low frequencies, the largest gain change was a gain reduction, which was, on average, about 1.5 dB for music signals. There were larger gain reductions in the high frequencies, because there was more gain to work with; the largest gain reductions were for signals classified as speech in noise, and for music. There were small changes in the compression ratio as well, with the biggest changes occurring in quiet, both for the low and high frequencies where we see increases in the compression ratio due to patients turning up the gain for low-level inputs or turning down the gain for high-level inputs.

Sometimes when you send research patients out into the field, they do what you ask and sometimes they do not. What the authors found when looking at the daily subject logs is that only 20 of 25 participants adhered to the protocol; that is, some of the patients were not changing their hearing aids every other day in the last trial. Two of the 20 that had adhered to protocol did not train their hearing aids at all. This left them with 18 participants. Of the remaining 18, eight exhibited no preference for either setting, eight preferred the trained gain, and two preferred NAL-NL2. There was a greater percentage of “no preference” in this study than we have seen in other studies. The authors suggested that this might be due to the fact that there were patients who had fewer adjustments and patients who had smaller adjustments than in previous studies.

One interesting finding is that the two individuals who said they preferred NAL-NL2 made greater changes during training than those who did not prefer it. I think this leads to a little bit of concern, because it suggests that a small set of subjects might do the training and train quite a ways away from the validated prescriptive method, but then go back and prefer the gain that they had originally. Again, this was only a very small number of subjects.

Recall that the authors also asked whether the participants could complete training reliably. Nineteen of the participants were asked to go through the entire process over again. Training occurred for 46% of potential cases in the first training period for these 19 subjects, but only 32% of potential cases in the second training period. There was less training, probably because participants got tired of training after completing the first portion of the study. For those subjects, 12 of 19 showed a significant correlation between their first and second training period. This means that training was both reliable, and the effect of training was significant. For the remaining seven, four were unreliable and three did not show significant training.

Finally, the authors wanted to know, “When individuals make these reliable judgments, do they lead to a reliable and valid preference after both comparison trials?” In this case, only ten of the individuals produced valid preferences after both comparison trials. Of these ten, six had consistent preferences: four had no preference, one preferred the trained gain, and one preferred the NAL-NL2. Of the other four, three participants changed from preferring the trained gain to having no preference, and one participant changed their preference from NAL-NL2 to that of the trained response.

Why is this important? It suggests that we have smaller changes compared to past studies, and that may be related to the fact that NAL-NL2 may be better liked as a starting place than the prescriptive methods used in previous studies, such as NAL-NL1. NAL-NL2 prescribes about 4 dB less gain than NAL-NL1, particularly for moderate inputs, for many common hearing thresholds. One of the things the authors also demonstrated is that individuals who trained more often and in more environments were more likely to prefer the trained response. Individuals who were generally happy with their NAL-NL2 first fit trained less. In other words, training is not for everyone, but those who used this feature and trained in a lot of different environments tended to prefer the trained response.

Recommendations

The authors concluded with some recommendations. First, the findings suggest that we should avoid training for those that are not interested in training the hearing aids. Those that are interested in training will fall into different groups. If we have a satisfied patient at the follow-up appointment that has trained significantly large changes, I agree with the authors that they should continue wearing the training devices as is. If we have satisfied patients who have little or no trained changes, we can assume that they are happy with their prescription and can deactivate the training. The authors suggest that if we left the training on, we might have inadvertent changes and end up with a patient who is less happy.

Patients who are unsatisfied and made little to no training changes should be encouraged to continue their training for a bit longer to obtain more desirable gain and output settings. Finally, if you have an unsatisfied patient who has trained significantly large changes, we need to figure out why they are not getting where they want to be. The authors suggest resetting the prescription and exploring why there is dissatisfaction.

Article #2

The next article I am going to review looked at the effect that hearing aid users’ voices could have on directional benefit. This follow-up article was done by Wu, Stangl, and Bentler (2013) at the University of Iowa. This article is particularly interesting because it speaks to differences in the laboratory versus the real world and the importance of the actual test settings.

These authors asked whether hearing aid users’ own voices affected directional benefit. This was for a newer type of directional hearing aid that has directional steering that includes backward-facing directional processing algorithms. The hearing aid automatically switches the direction of focus to behind the listener, which is what some manufacturers refer to as an anti-cardioid directivity pattern. The typical use-case for this type of processing would be while driving a car, carrying on a conversation with someone in the back seat.

The authors’ general research question matters in a broader scope because the magnitude of directional benefit has been shown to be affected by a large number of factors, and we need to make sure that we understand those factors - in this case, the listener’s own voice - particularly in some of the newer automatic switching systems. We want to make sure that laboratory-based measures of benefit do not over- or under-estimate the benefit achieved in specific real-world listening situations.

Wu et al. (2013) measured speech recognition performance for 15 listeners with sensorineural hearing loss. In this case, speech was presented behind the listener with the noise in front. The hearing aids were programmed either to an omnidirectional setting, a directional setting, or a new algorithm that automatically switched between a reverse directional, a directional, and an omnidirectional pattern. Noise was presented continuously.

In the first experiment, listeners repeated sentences from the standard Hearing in Noise Test (HINT). In the second experiment, the HINT was modified so that a carrier phrase was presented before each sentence in order to prime the processing after the patient finished repeating the previous sentence. The selection of microphone mode for this instrument was an automatic adaptive feature. That is, the omnidirectional pattern is activated when the noise level is low; when the overall sound level is higher than approximately 65 dB and the stimuli consist of speech and noise, the device selects a standard directional pattern when speech comes from the front and a reverse directional pattern when speech is detected from behind. Again, all of this is done automatically by the hearing aid.
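
The switching rule described above can be written out as a small decision function. The Python sketch below only illustrates the rule as it is summarized in this talk; it is not the manufacturer's algorithm. The function name, the argument names, and the choice to stay omnidirectional when the input is loud but is not classified as speech in noise are all assumptions made for the example.

```python
def select_microphone_mode(level_db, speech_in_noise, speech_from_behind,
                           threshold_db=65.0):
    """Pick a microphone pattern from the rule summarized in the talk.

    All names are hypothetical. threshold_db stands in for the
    "approximately 65 dB" switching level mentioned in the text.
    """
    # Low overall level: stay omnidirectional.
    if level_db <= threshold_db:
        return "omnidirectional"
    # Assumption: loud input that is not speech in noise also stays
    # omnidirectional (the talk does not cover this case).
    if not speech_in_noise:
        return "omnidirectional"
    # Loud speech in noise: steer toward the detected talker.
    if speech_from_behind:
        return "reverse directional"
    return "directional"


print(select_microphone_mode(55, True, True))    # omnidirectional
print(select_microphone_mode(70, True, False))   # directional
print(select_microphone_mode(70, True, True))    # reverse directional
```

In the test setup described here, with speech behind and continuous noise in front at a high enough level, the third call is the case of interest.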

For those of you not familiar with the HINT, a lower number means better performance. It means you are able to perform at a poorer (more adverse) signal-to-noise ratio. For example, a HINT score of 0 dB is better than a score of 4 dB. In the original condition with no carrier phrase, omnidirectional performance was better than directional performance when the speech was presented from behind and noise was presented from the front. However, reverse directional performance was not significantly different from omnidirectional performance. The results from the second experiment show that the new technology with reverse directional capability leads to significantly better performance than both directional and omnidirectional conditions.

The authors noted that the adaptation time for this new technology was approximately one to two seconds, and that HINT sentences are about two to three seconds long. When the listener responds, either it is speech in quiet, or in this case, speech in noise, but the speech comes from the front, and the hearing aid will return to omnidirectional mode. For experiment #1, the hearing aid would not switch back until halfway or two-thirds of the way through the HINT sentence following the response. That is especially critical for the HINT material, as the sentence is only scored correct if all key words of the sentence are repeated back correctly—if the person only misses the first key word (during the hearing aid’s adaptive transition to reverse cardioid), the sentence is scored incorrect. By playing a carrier sentence in noise prior to the HINT sentence, the switching occurred prior to the presentation of the HINT sentence in experiment #2, leading to this additional benefit.
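
The timing argument above is simple arithmetic, and a short Python sketch makes it concrete. It assumes, for illustration only, that the hearing aid starts switching at the moment the sentence (or the carrier phrase) begins. The function name and the 2.5-second carrier phrase duration are hypothetical; the talk does not give the carrier phrase length.

```python
def fraction_before_switch(adaptation_s, sentence_s, lead_in_s=0.0):
    """Fraction of a sentence that plays before the switch is complete.

    adaptation_s: time the hearing aid needs to change pattern.
    sentence_s:   duration of the test sentence.
    lead_in_s:    duration of any carrier phrase played first (assumed).
    """
    # Adaptation time still outstanding when the sentence itself starts.
    remaining_s = max(adaptation_s - lead_in_s, 0.0)
    return min(remaining_s / sentence_s, 1.0)


# Experiment 1 style: no carrier phrase.
print(fraction_before_switch(1.0, 2.0))             # 0.5
print(round(fraction_before_switch(2.0, 3.0), 2))   # 0.67

# Experiment 2 style: a carrier phrase longer than the adaptation time.
print(fraction_before_switch(2.0, 3.0, lead_in_s=2.5))  # 0.0
```

With a 1- to 2-second adaptation time and 2- to 3-second sentences, half to two-thirds of the sentence can pass before the reverse pattern is in place, which matches the figures quoted above.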

This suggests that you might see less benefit from this technology when going back-and-forth in active conversations, which is a realistic exchange in the real world, than in other real-world environments. The authors point out that both of those situations do occur commonly in the real world. It suggests that, in some real-world conversations, this technology may not produce the expected benefit. The main point is that the magnitude of benefit in the laboratory is only representative of real-world listening situations that are nearly identical to the situations that are presented in the lab.

Article #3

The next study (Magnusson, Claesson, Persson, & Tengstrand, 2013) asked what happens to the benefit from directional microphones and potential benefits to noise reduction in open-fit hearing aids. While I think the question is extremely important, there are some issues with this article that need to be addressed, which I’ll get to shortly. These authors asked, “Do directional microphones improve speech recognition in noise compared to the omnidirectional setting for open-fit hearing aids?” and, “To what extent might an open fitting reduce the benefit from directional microphones and noise reduction in comparison to a closed fitting?” Finally, after a period of wearing open-fit hearing aids, the authors asked: “How do the subjective hearing aid outcomes with a typical open fit compare to outcomes of the average hearing aid user?”

Methods

This is a clinically relevant topic, as open-fit hearing aids are popular for a number of good reasons such as reduced occlusion effect and overall listening comfort. However, we need to consider the negatives during the clinical fitting as well, and know whether the overall outcome is still positive. In this study, 20 adults with symmetric, mildly sloping to moderate sensorineural hearing loss and no prior experience with hearing aids were evaluated. All were fitted binaurally with the same model of hearing aids. Speech recognition in noise was evaluated for unaided, omnidirectional with and without noise reduction, as well as directional with and without noise reduction. This was conducted both for open and closed fittings. Moderate noise reduction and fixed directionality were applied across the fittings. One of the issues with the study, however, was that all fittings were conducted using the manufacturer’s first-fit default without any real-ear verification. What that means is that we have no idea whether gain, or the settings for other features, varied across the fittings (see Mueller, 2014 for a discussion of this topic and examples of manufacturers’ first-fit settings).

The speech recognition testing was completed using adaptive signal-to-noise ratio tasks, with the speech fixed at 65 dB. Speech was presented in front of the listeners with uncorrelated noise presented from four loudspeakers surrounding the listeners. After the patients had been wearing the open-fit hearing aids for about four to six weeks, they completed the International Outcome Inventory for Hearing Aids (IOI-HA) to assess the outcomes with the open-fit devices.

Results

If we compare omnidirectional for the open and the closed fittings, there is a slight trend towards a decrease in performance for open compared to closed fittings. Secondly, when the participants went from omnidirectional to directional, there was an increase in performance in noise for the open fitting, although we see a larger increase in directional benefit for the closed fitting.

To summarize, there is a trend of about 1 dB poorer performance for open versus closed fittings when in the omnidirectional mode. The much larger effect is that directional benefit increased from about 1.4 dB in open fittings to about 3.3 dB in closed fittings. There was a small benefit for noise reduction, but only in the closed fittings. This is in contrast to previous studies using this particular brand of hearing instrument; I think it is difficult to interpret because it is unclear whether the same gain was provided across the noise reduction on and off conditions. Recall that I mentioned earlier that no verification was conducted in this study.

In terms of the IOI-HA results, the authors point out that they were similar to normative values, other than a higher score for “impact on others” for the open fitting. This finding was suggested to reflect that they had good outcomes, or at least expected outcomes, for the open fittings.

Why are these results important? Certainly, there was greater benefit for closed fittings, but despite this benefit, 17 of the 20 participants with open-fit instruments chose to keep the open instruments after a 4 to 6 week trial, which we would expect relates to the positive IOI-HA findings. I should point out, however, that no trial with closed fitting was offered.

Finally, I think that if these laboratory results are due mainly to differences in coupling and not differences in gain, they suggest that we may want to consider fewer open fittings for listeners who exhibit particularly poor speech recognition in noise and for whom the directional microphone is a particularly important feature; they are going to obtain the best performance with a more closed fitting.

The Commodore Award

Gus Mueller: Okay—it’s time for the Commodore Award. This is a feature of every Vandy Journal club. This award is for an article that the presenter believes deserves special attention. The name originates from Commodore Cornelius Vanderbilt, who made a large donation to have the university founded many years ago. And of course, our sports teams are the Vandy Commodores, or just the ‘Dores if you’re a local.

Todd Ricketts: The article I chose for the Commodore Award today is by Harvey Abrams, Theresa Chisolm, Megan McManus and Rachel McArdle (2012). They compared a manufacturer’s first-fit approach to a verified prescription and looked at self-perceived hearing aid benefit. I like this article because they try to do things in a clinically applied manner; this was not a fit-it-and-forget-it type of procedure. They pointed out that, despite evidence suggesting inaccuracy in the default fittings provided by hearing aid manufacturers, probe-microphone measures are routinely used for verification by fewer than half of practicing audiologists. They wanted to know, “Does self-perception of hearing aid benefit, as measured by the Abbreviated Profile of Hearing Aid Benefit (APHAB), differ between a manufacturer’s initial-fit approach compared to a verified prescription of NAL-NL1?”

What is important for this study is that they did adjust the hearing aids based on participants’ request in both cases. This matters because verification of a validated prescriptive method requires clinical time and resources. We need evidence to support applying these resources consistent with evidence-based practice procedures if we are going to continue to suggest that this should be done. If evidence exists supporting this practice, it may be adopted by a greater percentage of practicing audiologists. Perhaps if we can show that there is a potential clinical benefit to verifying a validated prescriptive method, it may be more compelling to individuals who currently do not do so.

Methods

In this study (Abrams et al., 2012), there were 22 experienced hearing aid users. This was a cross-over design study: half of the participants were initially fit with new hearing aids via the manufacturer’s first fit, while the other half were fit to NAL-NL1 using probe-microphone verification. After the first wearing period of four to six weeks, the participants’ hearing aids were re-fit via the alternate method and worn for an additional four to six weeks. Each participant therefore experienced each condition. One of the things that was controlled for in this study was the potential bias that more time and effort spent with patients could have on their subsequent report of benefit. That is, the patient could rate the fitting better just because there was more contact with the audiologist during the fitting. In order to control for that, and to ensure that the outcome was not due to more time being spent with participants who received probe-microphone verification, the participants were blinded to which approach was being used, and probe-mic measures were applied in both approaches. For the NAL-NL1 fitting, the probe-mic findings were used to adjust the fitting to match ear canal SPL targets; for the initial fit, the probe mic was used only to measure the initial-fit settings. In both cases, they made changes based on the user’s input, as we would normally do in the clinical setting.

The APHAB was administered at baseline and at the end of each of the intervention trials. At the end of both of the trials, participants identified which fit they preferred. This study also employed a variety of manufacturers and different instruments, which resulted in multiple different first fits. They also used a variety of different hearing aid styles from behind-the-ear (BTE) to completely-in-the-canal (CIC).

Results

In terms of the real-ear gain, the NAL-NL1 targets gave the highest gain, with the probe-microphone adjusted measurements offering slightly less, followed by the first-fit output offering the least. I would like to point out that most hearing aids do not have substantial gain above 4000 Hz, so I think we can ignore the data points at 6000 and 8000 Hz, because it is not expected that most hearing aids would have much gain at that point. But they did end up with about 4 or 5 dB less gain than the NAL-NL1 targets for the final probe-mic fit (based on patient-driven adjustments), and about 4 or 5 dB below that for the manufacturer initial fit. Nine of the 44 fittings were modified for the NAL-NL1 fit, and 5 of the 44 were modified for the initial fit. These adjustments tended to be minimal, as they resulted in a change in average gain across all participants of no more than about 1.1 dB.

The benefit scores for the APHAB were higher for the verified prescription than the initial fit. For the Ease of Communication Profile subscale, we see that there is an improvement from initial fit to the verified prescription. That is also true for the Reverberation subscale, and for the Background Noise subscale. Across these three of four subscales, they saw significantly better outcomes for the validated prescriptive method in comparison to the verified first fit.

Not surprisingly, those who preferred the NAL fittings generally had better outcomes for the NAL fit, and those who preferred the initial fit generally had better outcomes for the initial fit. Those who preferred the NAL fit also tended to have better outcomes and show more benefit overall than those who preferred the first fit.

To summarize, the Ease of Communication, Reverberation, and Background Noise subscale scores obtained with the verified prescription were significantly higher than for the initial fit approach, indicating greater benefit. More importantly, of the 22 participants, only 7 preferred their hearing aids programmed to the initial fit, and 15 preferred their hearing aids programmed to the verified prescription.

Why is this important? These data add to and support the use of verification of a validated prescriptive fitting method, and applying it using standard clinical techniques. This is not saying that we need to be a slave to our prescriptive methods, but they give us a good starting point. If we make adjustments from that point, we end up with better outcomes than if we use the manufacturer’s initial fit as the starting point and make adjustments from that manufacturer’s first fit. I think this research also provides additional evidence-based support for the need for well-trained practitioners for the optimal fitting of hearing aids, and provides support for us as evidence-based practitioners. Finally, I think these data also provide some indirect support for NAL-NL2. If you look at the individualized probe mic fittings in the Abrams et al. (2012) study, they are 4 or 5 dB lower than the NAL-NL1 prescription and, in fact, are close to what would be the NAL-NL2 prescription, which is what is commonly used in clinics today.

Gus Mueller: Let me jump in with a few comments. I agree that verification is a critical aspect of fitting hearing aids. A few years back Erin Picou and I conducted a survey of over 500 audiologists, and asked if they used a validated prescription method (Mueller & Picou, 2010). Of the respondents, 78% said yes. Of that 78%, only 44% reported using probe mic measures. How did the other 56% know what they were using, given that a prescriptive method is defined by the sound pressure level at the eardrum, not by a button in the software? There are a couple of studies that looked directly at this disconnect.

Aazh and Moore (2007) used four different types of hearing aids from different manufacturers. They considered the fit to be adequate if you were within 10 dB of NAL targets. Only 36% of 42 ears were truly fit to NAL-NL1 using default settings. After reprogramming with probe mic measures, 83% were within 10 dB of targets for two-channel hearing aids and 100% for hearing aids with four channels or more.

Last year, another study from the same group (Aazh, Moore, & Prasher, 2012) examined open fittings. They had 51 fittings programmed to the NAL in the fitting software, but only 29% ended up being programmed to within 10 dB of the actual targets. After they reprogrammed, they obtained a match for 82% of the fittings. This is a huge problem. If we are going to see if these prescriptive fitting methods really work, we have to fit to that method (re: ear canal SPL).
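
The “within 10 dB of target” criterion used in both studies is easy to express as a check on probe-mic data. The Python sketch below is only an illustration under stated assumptions: it treats a fitting as a match when every measured frequency is within the tolerance, and the function names, frequencies, and dB values are hypothetical, not data from either study.

```python
def fits_target(measured_db, target_db, tolerance_db=10.0):
    """True if every measured value is within tolerance of its target.

    measured_db and target_db map frequency (Hz) to level (dB).
    """
    return all(abs(measured_db[freq] - target) <= tolerance_db
               for freq, target in target_db.items())


def percent_matched(fittings, tolerance_db=10.0):
    """Percentage of (measured, target) pairs that meet the criterion."""
    matched = sum(fits_target(m, t, tolerance_db) for m, t in fittings)
    return 100.0 * matched / len(fittings)


# Hypothetical values for illustration only.
targets = {500: 55, 1000: 60, 2000: 65, 4000: 70}
close_fit = {500: 50, 1000: 58, 2000: 60, 4000: 62}   # worst miss: 8 dB
poor_fit = {500: 50, 1000: 58, 2000: 52, 4000: 55}    # worst miss: 15 dB

print(fits_target(close_fit, targets))   # True
print(fits_target(poor_fit, targets))    # False
print(percent_matched([(close_fit, targets), (poor_fit, targets)]))  # 50.0
```

The point of the check is that it needs measured ear canal values; selecting a prescription in the fitting software supplies only the target side of the comparison.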

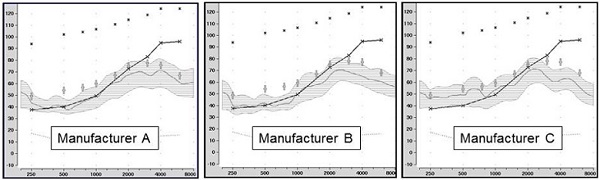

Finally, I’d like to comment on some data collected last month by Elizabeth Stangl at the University of Iowa, which are displayed in Figure 1. She selected the premier hearing aid from three of the six leading manufacturers for subjects with sloping hearing loss. In the fitting software, she selected NAL-NL2 and matched all the patient characteristics between the software and the probe microphone system for bilateral fittings. She used common speech mapping techniques to see how the fittings matched targets. Manufacturers A and B missed NAL targets by about 10 dB at many frequencies, and Manufacturer C also missed the mark. If you did not do probe microphone measurement, you could almost convince yourself that you were fitting to NAL-NL2. Recall that 70% of clinicians are not using probe mic measurements. The average output for the speech signal from 3000 to 4000 Hz should be approximately 78 dB; these data show around 62 dB, which is a 16 dB mistake. Could that make a difference in a person’s life? Absolutely.

Figure 1. Shown are the speechmapping findings for the premier hearing aid of three major manufacturers, programmed using the manufacturer’s NAL-NL2 option in the fitting software. The test stimulus was the male talker of the Verifit system, presented at 55 dB SPL. Also shown are the sample patient’s audiogram (upward sloping solid line) and the NAL-NL2 targets for this speech input (frequency-specific crosses). (Chart courtesy of Elizabeth Stangl, AuD. From Mueller, 2014).

Clinical Tidbits

Todd Ricketts: There were some other articles that offer some important clinical tidbits that I’ll discuss briefly. The first is from Caporali et al. (2013), who performed a study to see if a receiver-in-the-ear (RITE) instrument might be reasonable for infants. The notion was that because they have a smaller size and less weight, retention may be less of an issue. I think an important point of this article is that you have to modify the RITE to be specific to the infant, with an anchor to provide extra retention.

They fit 18 infants with mild to moderately-severe hearing loss. Sixteen infants were prior BTE users. The infants used the devices for a period of two to five months. Audiologists and parents completed questionnaires. At the end of the study, 11 of the 16 children were using the instant ear tip provided by the manufacturer, and 5 were fitted with the receiver mounted in a custom ear mold. Sixteen of the 18 children continued to use the RITE instruments, and the audiologists rated the RITE instrument as a safe, stable, and secure fit. Parents got used to them too, and by the end of the trial, really liked them. I think the outcome of this report is that we have mini-BTE styles that are very popular and well liked by many adult users, and with some modifications, they may be appropriate for children, even infants.

Another study I’d like to mention relates to placebo effects in hearing aid clinical trials. We have to be careful when we read research that discusses old hearing aids versus new hearing aids. That is a particularly popular thing to do in some of the articles we see in trade journals. Manufacturers will point out, “Compared to our old model, our new hearing aid is much better.” One study fit 16 participants with two devices that were acoustically identical (Dawes, Hopkins, & Munro, 2013). In fact, they took the same hearing aid and changed the shell color, and for half the subjects they said that one shell color was the new hearing aid and the other shell color was the conventional one. They fit these instruments to patients individually, and they achieved exactly the same target gain, on average.

One of the things that is telling in this study is that there was a significantly better mean speech-in-noise performance for the “new” hearing aid. We have seen significantly better sound quality ratings before for new hearing aids, but this is the first time I have seen a significant change in speech recognition. Not only can calling a hearing aid “new” bias patients to report that it is better, they may even try harder at speech recognition and give you better objective outcomes.

Finally, 75% of patients expressed an overall preference for the new hearing aid. Overall, I think this lends additional support to the need for a double-blinded methodology when we are trying to compare hearing aid technologies; otherwise, a placebo effect could be telling us that a new instrument is better.

Questions and Answers

I am not familiar with trainable hearing aids. Are these hearing aids with adaptation properties? What is actually meant by trainable?

Todd Ricketts: There are two types of self-adjusting hearing aids: automatic adaptation managers, which start at a low level of gain and then move the level of gain up over time, and trainable hearing aids, which change the gain based on patient input. The automatic adaptation/acclimatization systems are controlled by the audiologist; that is, the audiologist programs how he or she wants the gain to increase, and how fast it should occur (Over a week? Several weeks? Several months?). In the true trainable instruments, the patient is in control. The hearing aid “remembers” what gain setting the user selects for different input levels and listening situations, and eventually will train to that level. Once trained, the hearing aid will adjust to this gain level whenever the hearing aid detects that situation, be it speech-in-quiet, speech-in-noise, music, etc. There are several manufacturers that include both automatic adaptation managers and trainable features. Some people suggest starting with an automatic adaptation manager before using the trainable function. Other people suggest starting at the prescriptive method and allowing people to train. If you have patients who you do not think are going to tolerate the initial prescriptive method and you want to get them up to that as a starting point, it can be useful for some patients to apply an adaptation manager, followed by a period of training.
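
To make the idea of “remembering” user-selected gain concrete, here is a toy Python sketch. It is not any manufacturer's training algorithm: the class name, the sound-class labels, and the update rule (moving the stored gain a fixed fraction of the way toward each user selection) are all assumptions chosen for illustration. Real devices also train across input levels and frequency bands, which this sketch leaves out.

```python
class TrainableGain:
    """Toy model of a trainable hearing aid (hypothetical, illustration only)."""

    def __init__(self, prescribed_gain_db, learning_rate=0.5):
        # Start every sound class at the prescribed (e.g., NAL-NL2) gain.
        self.prescribed = dict(prescribed_gain_db)
        self.trained = dict(prescribed_gain_db)
        # Assumed update fraction: smaller values mean slower training.
        self.learning_rate = learning_rate

    def user_adjusts(self, sound_class, selected_gain_db):
        """Move the stored gain for this class toward the user's selection."""
        current = self.trained[sound_class]
        step = self.learning_rate * (selected_gain_db - current)
        self.trained[sound_class] = current + step

    def gain_for(self, sound_class):
        """Gain applied when this sound class is detected."""
        return self.trained[sound_class]


aid = TrainableGain({"speech_in_quiet": 20.0, "music": 18.0})
aid.user_adjusts("music", 14.0)      # user turns music down
aid.user_adjusts("music", 14.0)      # and does so again later
print(aid.gain_for("music"))            # 15.0
print(aid.gain_for("speech_in_quiet"))  # 20.0
```

Lowering the assumed `learning_rate` mimics training that takes more user adjustments before the stored gain moves appreciably.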

Do the results support not turning the training on until the second visit? If someone is going to be happy with the initial settings, why risk having them make it worse until they have tried it?

I think this is a good question. It is something that manufacturers have struggled with as well. I do not know if I made this clear for this particular brand and generation of trainable hearing aid, but manufacturers are making the training occur a little more slowly; it takes more input from the patient before the hearing aid trains. There is not much data to support which way to go and when training should begin. My own bias is that if you have a patient who seems very motivated to train, and wants to adjust the hearing aid themselves after the initial fitting, starting training at the initial fitting might be reasonable for that individual. If you have patients who are happy with the settings and do not want to change anything, they are probably not good candidates for trainable hearing aids. If they come back at their follow-up and are still very happy with how it sounds, they are likely not good candidates for trainable hearing aids at all.

Gus Mueller: This is very similar to what Catherine Palmer (2012) studied. She took two groups of new users, with 18 in each group. One group was fitted to the NAL and began training immediately for two months. The second group also was fitted to the NAL, but used the hearing aids for a month with this fitting, and then did training for the second month. So, one group trained for two months, the other group only one month. On average, both groups trained gain down a little. The speech intelligibility index (SII) for soft sounds went down ~4% for the group that trained two months and ~2% for the group that trained one month. There are a couple of reasons why we might see this slight difference. It could be because the first group had an extra month to train, or because the second group became acclimated to the NAL, and therefore did not train down as much. We don’t know what they would have done if they had been given an additional month to train. Either way, neither group trained their hearing aids down a lot, and consistent with what Todd mentioned earlier, the amount they trained down from NAL-NL1 actually put them closer to NAL-NL2, which is commonly used today.

I know that some audiologists are concerned that if they allow their patients to train the hearing aids, they will make the fitting considerably worse. I don’t think that happens very often. Consider that in Catherine’s study (Palmer, 2012), the HINT scores were the same for the trained gain as for the original NAL fitting. And, about 70% of the subjects preferred the trained gain after a real-world comparison to the NAL fitting.

You said that in the Abrams et al. (2012) study, 30% of the subjects preferred the initial fit over the verified fit. That seems like a lot. Is there any way to predict who those patients are? Were they under-fit previous users, for example?

Unfortunately, the individual data are not provided in that study, so it is unclear whether that is a possibility or not. The Abrams et al. (2012) study is similar to the Palmer (2012) study in that they forced people to say which one they preferred, and we do not really have a way of knowing whether a subset of both groups actually had no preference, or whether the preference for one over the other was so small that it was not meaningful. Also, recall that they used several manufacturers, and it could be that some manufacturers have a first-fit that is reasonably close to the NAL. I think there is some additional work that needs to be done on predicting who might prefer a little less gain versus who might not.

There was a slide that mentioned hearing aid users’ voices and the effect on directional benefit, but I missed the finding. Can you address that?

This study looked at a specific type of new technology: an automatic system that switches to a directional mode in noise if the primary talker is in front, and to a reversed directional mode if the primary talker is behind the listener. The study found that the hearing aid wearer’s own voice could switch the device to the front-facing directional mode, because the hearing aid treats the wearer’s voice as coming from the front. If a talker from behind then spoke immediately afterward, the hearing aid did not have enough time to adapt and focus on the speech from behind. Therefore, the listener might miss the first word or two of the following sentence. That might be a real clinical outcome, so for those specific environments where you have talkers going back and forth, you might have patients whose hearing aids have to be primed a little bit before they switch to the appropriate mode.

Gus Mueller: I have a real-world example that might add to what Todd is saying regarding “primed a little.” The point is that the switching of the speech focus of the directional algorithm might take a second or two. Let’s say that Bob is driving the car, and says to Mary sitting in the back seat, “Where should we stop for dinner?” Consider that the hearing aid detects that there is speech from the front (Bob’s voice) and noise from all around, so it goes to the traditional directional mode. This of course is a very poor polar pattern for understanding speech from the back. Now, if Mary responds quickly with “Applebee’s,” the benefit of the automatic rear-speech focus will be lost, as the word will be completed before the hearing aid adapts to the appropriate polar pattern. If, on the other hand, she says, “I’d like to go to Applebee’s,” Bob will maybe miss the first word or two, but by the time Mary gets to the most important word, “Applebee’s,” the rear focus would be active, and Bob’s chances of hearing and understanding the word would be better.

Was a questionnaire the only rating for the mini RITE study on infants?

Yes. There were questionnaires for both audiologists and for parents. This was not a comparison trial of any kind. This was just a trial with the RITE (or receiver in the canal, RIC) instrument, trying to determine whether the RITE instrument was a reasonable and acceptable fit both to parents and to clinicians. I would view that as very preliminary data.

On at least three of the major manufacturers’ first fit settings, you said that output may fall 10 dB or more below the true NAL-NL2 targets based on verification of the fitting. Even if you did not do probe microphone measurements, wouldn’t this shortfall be quickly apparent in the validation step, including the often-ignored speech perception testing?

Gus Mueller: First, this makes the bold assumption that the average clinician performed aided speech perception testing at all. Secondly, it would depend on the speech presentation level. If you were to deliver speech at 65 dB SPL, 50 dB HL, which is common, this may not show up. If you delivered speech at 50 to 55 dB SPL, yes it probably would. But even then, you’d have to know what the optimum score should be for that patient.

For example, suppose you just fit your uncle with hearing aids and performed the QuickSIN, which yielded an SNR-loss of 8 dB. Had you fit him correctly, maybe the SNR-loss would have been as good as 4 dB, but how do you know that an 8 dB SNR-loss is not your uncle’s best possible score for the QuickSIN? In many cases, when you are conducting speech perception testing, you do not know how good the score can get. This is why speech recognition is problematic when used for hearing aid verification, and why we prefer to use ear canal SPL verification of a prescriptive fitting as a starting point, followed by some form of validation of course.

Todd Ricketts: I would agree. Even if you do speech recognition correctly, and you show benefit by using low-level speech, you do not know whether this is just a person who already gets good benefit and could have done even better. It would only show up if you did a comparison of an accurate fit to the inaccurate fit.

Gus Mueller: As you probably know, Todd, there was a recent publication that addressed the very point you’re making, written by Leavitt and Flexer (2012) and published in the Hearing Review. They compared the proprietary first fit to a probe-mic verified NAL fitting for the premier hearing aid of the six major manufacturers. Testing a group of hearing-impaired individuals fitted bilaterally, they found an SNR improvement of up to 8 dB on the QuickSIN when programmed to the NAL versus the manufacturer first fit. This is an important area to consider when we are fitting patients with new technology.

References

Aazh, H., & Moore, B. C. (2007). The value of routine real ear measurement of the gain of digital hearing aids. Journal of the American Academy of Audiology, 18(8), 653-664.

Aazh, H., Moore, B. C., & Prasher, D. (2012). The accuracy of matching target insertion gains with open-fit hearing aids. American Journal of Audiology, 21(2), 175-180. doi: 10.1044/1059-0889(2012/11-0008).

Abrams, H. B., Chisolm, T. H., McManus, M., & McArdle, R. (2012). Initial-fit approach versus verified prescription: comparing self-perceived hearing aid benefit. Journal of the American Academy of Audiology, 23(10), 768-778. doi: 10.3766/jaaa.23.10.3.

Caporali, S. A., Schmidt, E., Eriksson, A., Skold, B., Popecki, B., Larsson, J., & Auriemmo, J. (2013). Evaluating the physical fit of receiver-in-the-ear hearing aids in infants. Journal of the American Academy of Audiology, 24(3), 174-191. doi: 10.3766/jaaa.24.3.4.

Dawes, P., Hopkins, R., & Munro, K. J. (2013). Placebo effects in hearing aid trials are reliable. International Journal of Audiology, 52(7), 472-477. doi: 10.3109/14992027.2013.783718.

Keidser, G., & Alamudi, K. (2013). Real-life efficacy and reliability of training a hearing aid. Ear and Hearing, 34(5), 619-629. doi: 10.1097/AUD.0b013e31828d269a.

Leavitt, R., & Flexer, C. (2012). The importance of audibility in successful amplification of hearing loss. Hearing Review, 19(13), 20-23.

Magnusson, L., Claesson, A., Persson, M., & Tengstrand, T. (2013). Speech recognition in noise using bilateral open-fit hearing aids: the limited benefit of directional microphones and noise reduction. International Journal of Audiology, 52(1), 29-36. doi: 10.3109/14992027.2012.707335.

Mueller, H.G. (2014, January). 20Q: Real-ear probe-microphone measures - 30 years of progress? AudiologyOnline, Article 12410. Retrieved from: https://www.audiologyonline.com

Mueller, H.G., & Picou, E.M. (2010). Survey examines popularity of real-ear probe-microphone measures. Hearing Journal, 63(5), 27-32.

Palmer, C. (2012, April). Siemens Expert Series: Implementing a gain learning feature. AudiologyOnline, Article 20424. Retrieved from https://audiologyonline.com

Wu, Y.-H., Stangl, E., & Bentler, R. A. (2013). Hearing aid users’ voices: a factor that could affect directional benefit. International Journal of Audiology, 52(11), 789-794. doi: 10.3109/14992027.2013.802381.

Cite this content as:

Ricketts, T., & Mueller, H.G. (2014, February). Vanderbilt Audiology’s Journal Club: Hearing aid features and benefits – research evidence. AudiologyOnline, Article 12439. Retrieved from: https://www.audiologyonline.com