Learning Outcomes

After this course, participants will be able to:

- List 4 recent journal articles that address the benefit of hearing aid features or fitting.

- Explain the findings of 4 recent journal articles that address the benefit of hearing aid features or fitting.

- Describe how the results of 4 recent journal articles may impact the clinical process of hearing aid fitting and support evidence-based practice.

Introduction and Overview

Gus Mueller: For today’s Vanderbilt Audiology Journal Club, Dr. Todd Ricketts will present research evidence to support the benefits of various hearing aid features.

Dr. Ricketts is a professor at Vanderbilt University, and directs graduate studies in both audiology and speech-language pathology. He is also Director of the Dan Maddox Hearing Aid Research lab, and has authored many publications from his research findings. In just the past four years he has co-authored the books Modern Hearing Aids Pre-fitting, a companion book Modern Hearing Aids – Verification, Outcome Measures and Follow Up, and a third clinical handbook Speech Mapping and Probe Microphone Measures. A fourth book, Essentials of Modern Hearing Aids, will be released shortly. I could go on and on about Dr. Ricketts’ professional accomplishments, but trust me when I say that you’re hearing from an expert today.

Dr. Todd Ricketts: Thanks, Gus. Today, I’m going to focus on four different journal articles that address hearing aid features, and the benefits of those features. In addition, I've selected a couple more articles with some valuable clinical tidbits that I will describe toward the end of the presentation.

Article 1: Fitting Noise Management Signal Processing Applying the American Academy of Audiology Pediatric Amplification Guideline: Verification Protocols (Scollie et al., 2016)

This article is from a special issue of the Journal of the American Academy of Audiology (JAAA) that addressed the American Academy of Audiology Pediatric Amplification Guidelines. It was authored by a group from the University of Western Ontario. It is fairly broad in purpose, and describes several smaller studies as well as some of the information in the guidelines. Today, I’ll focus on one of the investigations, which explores whether variation in noise reduction across different brands is perceived by children who have hearing loss.

We know from existing research that speech recognition is generally not affected much by digital noise reduction (DNR) processing in hearing aids (that is, noise reduction signal processing – we’re not referring to microphone-based noise reduction). However, people show differences in their preferences for DNR as it relates to factors such as listening effort and sound quality. While preference differences across manufacturers have been explored in adults, they had not previously been explored in children.

What They Did

In this study, the participants were 15 children and young adults (between 8 and 22 years of age). Two participants were unable to complete the task, which left 13, with a mean age of 12.5 years. The investigators evaluated four different BTE hearing aid models, and they devised their own classifications after conducting some measurements. They measured noise reduction systems in a few different ways. First, they used a clinical procedure with the Audioscan Verifit to look at the amount of noise reduction over time. Next, they used more of a laboratory-based procedure, called a spectral subtraction procedure, where they estimated the spectrum of the noise and speech, and subtracted the spectrum of the noise from the total spectrum.
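To make the spectral subtraction idea concrete, here is a minimal sketch in Python. It is not the authors' measurement code. The function names and the band levels are hypothetical, and it assumes the total (speech plus noise) level and the noise-alone level are already known for each frequency band in dB. The subtraction is done in the power domain, because decibel values cannot be subtracted directly.

```python
import math


def db_to_power(level_db):
    """Convert a level in dB to linear power."""
    return 10 ** (level_db / 10)


def power_to_db(power):
    """Convert linear power back to a level in dB."""
    return 10 * math.log10(power)


def spectral_subtraction(total_db, noise_db, floor_db=-100.0):
    """Estimate the speech level per band by subtracting noise power from total power.

    floor_db is a hypothetical lower limit that keeps the result defined
    when the noise estimate meets or exceeds the total level in a band.
    """
    floor_power = db_to_power(floor_db)
    speech_db = []
    for total, noise in zip(total_db, noise_db):
        residual = db_to_power(total) - db_to_power(noise)
        speech_db.append(power_to_db(max(residual, floor_power)))
    return speech_db


# Hypothetical band levels in dB (illustrative only, not data from the study).
total = [63.0, 60.0, 55.0]   # speech + noise
noise = [60.0, 50.0, 54.0]   # noise alone
speech = spectral_subtraction(total, noise)

# Per-band SNR estimate: estimated speech level minus noise level.
snr = [s - n for s, n in zip(speech, noise)]
for band, (s, r) in enumerate(zip(speech, snr), start=1):
    print(f"band {band}: speech ~ {s:.1f} dB, SNR ~ {r:.1f} dB")
```

Running the same estimate on recordings made with noise reduction off and then on would give a change in SNR, which is the kind of quantity that can be used to describe how strong a noise reduction system is.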

They evaluated four BTE hearing aid models:

- Hearing Aid 1: Fast noise reduction speed; medium effect (i.e., the amount of reduction was medium)

- Hearing Aid 2: Medium speed; medium to strong effect

- Hearing Aid 3: Medium speed; weak effect

- Hearing Aid 4: Slow speed; strong effect

The hearing aids were fitted and verified to DSL v5-quiet, and also to DSL v5-noise. They made recordings with noise reduction off and on. With noise reduction on, they set the noise reduction to maximum; for some of the hearing aids with a moderate noise reduction setting, they also set them to moderate. They made these recordings through all the hearing aids using the KEMAR. Following the recordings, they then played the various samples through speakers to the children and young adults.

They had the subjects evaluate the signals using the Multiple Stimuli with Hidden References and Anchors (MUSHRA; ITU, 2001) method for sound quality evaluation, which has been used in a large range of studies. The MUSHRA method includes one anchor and one reference. In this case, these were signals at +10 dB SNR and -10 dB SNR. Five groups of stimuli were created for evaluation from recordings of DNR on and off. The five groups included:

- Speech in babble at a +5 dB SNR

- Speech in babble at a 0 dB SNR

- Speech in speech-shaped noise at +5 dB SNR

- Speech in speech-shaped noise at 0 dB SNR

- Speech in quiet

The subjects were all listeners with hearing loss, so they fit each of them with a single model of hearing aids. All of them were fit with BTE hearing aids programmed individually to DSL v5 child targets, with no noise reduction active. All signal processing in the hearing aids was disabled, except for compression and feedback suppression. This way, the hearing aids were doing their own signal processing, but they were playing signals that had already been hearing aid processed in different ways. The MUSHRA method was used to judge the speech quality from poor (0%) to excellent (100%).

What They Found

When they performed sound quality ratings at +5 dB SNR, they found that for some brands, noise reduction had little to no average effect on sound quality. For other brands, noise reduction improved sound quality at least for some conditions. For example, for one of the hearing aids, sound quality was a little better in both babble and in speech-shaped noise when noise reduction was activated. For another hearing aid, sound quality was better in speech-shaped noise when noise reduction was on. And lastly, they found that some brands resulted in higher sound quality ratings regardless of whether noise reduction was on or off. For example, in speech-shaped noise one of the hearing aids had a rated sound quality of just above 50% whether noise reduction was on or off, whereas another hearing aid had a rated sound quality of nearly 70% in both noise reduction on and off conditions. These results are broadly consistent with those of Brons and colleagues (2013), which demonstrated differences in annoyance, listening effort, and speech intelligibility across DNR systems in adults.

Why is This Important?

The authors point out that these results might question some common clinical assumptions. Sometimes we think that noise reduction is all the same, but that doesn't appear to be the case when it comes to its effect on sound quality. Sound quality relative to noise reduction seems to be different across brands, and turning noise reduction on and off seems to have different effects across brands. The authors point out that considering the noise reduction function and how each individual reacts to the sound quality may be important for optimizing the sound quality of hearing aids in noisy environments.

There are a couple of considerations that the authors didn’t discuss but I would like to address here. First, the authors categorized the amount of noise reduction based on the reduction in noise level and change in SNR between noise reduction on, and noise reduction off. The noise reduction on and noise reduction off conditions were controlled via the clinician controls in the programming software.

There are a couple of different types of noise reduction that are often used together in hearing aids: modulation-based DNR and fast-filtering methods. Modulation-based DNR looks at the modulations in the signal: if the signal is a steady-state signal it acts upon it; if the signal is fluctuating it does not. Fast-filtering methods (such as adaptive Wiener filtering or spectral subtraction) try to subtract out the estimated noise from the signal. In this study, the authors didn't categorize the differences across these two types of noise reduction. That's fair in some ways because they're often used in tandem. However, sound quality differences for speech in noise in past research have typically been attributed to fast-filtering methods.
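The modulation-based decision can be sketched in a few lines of Python. This is only an illustration of the general idea, not any manufacturer's algorithm. The function names, the 10 dB modulation-depth threshold, and the 8 dB maximum reduction are all hypothetical values chosen for the example, and the input is assumed to be a short series of envelope levels (in dB) for one frequency band.

```python
def modulation_depth_db(frame_levels_db):
    """Peak-to-valley range of the band envelope, in dB."""
    return max(frame_levels_db) - min(frame_levels_db)


def dnr_gain_db(frame_levels_db, depth_threshold_db=10.0, max_reduction_db=8.0):
    """Return a gain change (0 or negative, in dB) for one band.

    A strongly fluctuating envelope is treated as speech-like and left alone.
    A nearly steady envelope is treated as noise and attenuated, with more
    attenuation the steadier the signal is.
    """
    depth = modulation_depth_db(frame_levels_db)
    if depth >= depth_threshold_db:
        return 0.0
    return -max_reduction_db * (1.0 - depth / depth_threshold_db)


# Hypothetical envelope levels for one band (illustrative only).
steady_noise = [60.0, 61.0, 60.0, 59.5]   # small fluctuations
speech_like = [45.0, 68.0, 50.0, 70.0]    # large fluctuations

print("steady noise gain change:", dnr_gain_db(steady_noise), "dB")
print("speech-like gain change:", dnr_gain_db(speech_like), "dB")
```

With speech and noise mixed together in the same band, the envelope still fluctuates, so a rule like this applies little or no reduction. That is one way to picture why modulation-based processing tends to matter most when the input is noise alone.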

Things get more complicated when you look at what happens when you turn noise reduction on and off across manufacturers. For some manufacturers, when you disable noise reduction, it turns all noise reduction off. For other manufacturers, the fast filtering is always on, so when you turn noise reduction off, you are really only turning off the modulation-based noise reduction. You may not have direct control over both types of noise reduction clinically when you turn noise reduction on and off. When we look back at the data in this study, we see that one hearing aid showed no differences between noise reduction off versus on. It's possible that there was some fast filtering that was active in both cases and only modulation-based noise reduction was adjusted in the ‘off’ setting. In situations where there is speech in noise (not just noise alone), modulation-based DNR has been shown previously to have minimal effects on sound quality ratings.

One other consideration is that compression and other signal processing differences between hearing aids can also affect sound quality. We can't assume that the differences in sound quality between hearing aids are purely due to how noise reduction is implemented. Unfortunately, it may not be straightforward to predict preference based only on test box measures of DNR function.

There is some potential clinical application if further research confirms the presence of preference differences after a period of experience (because people had limited experience in the study). We also would need to confirm that these differences are present in the real world, in the context of other hearing aid processing (such as directional microphones), and across other noise conditions including noise only, where we might see big changes in sound quality. Then, we need to examine what factors affect preference. If results are generalizable and reliable, there is the potential for development of individualized fitting methods for DNR that could lead to improved outcomes. That is, there are people who may prefer the sound quality of particular noise reduction settings compared with others, and we can take those preferences into account during fittings.

Article 2: The Effects of Frequency Lowering on Speech Perception in Noise with Adult Hearing Aid Users (Miller, Bates, & Brennan, 2016)

In this study, the authors looked at the effect of three different commercially available frequency lowering technologies on speech recognition in adult hearing aid users. A variety of frequency lowering technologies have been introduced and are currently available across the manufacturers. However, data demonstrating benefits, particularly in adult listeners, are fairly limited.

What They Did

The study had 10 participants. Some had experience with frequency lowering, mainly non-linear frequency compression, but overall, experience with frequency lowering technology was relatively limited.

The authors compared non-linear frequency compression (NFC), linear frequency transposition (LFT), and frequency translation (FT), which is sometimes referred to as frequency duplication and lowering. Each one of these is a technology that's available from at least one manufacturer. The highest audible frequency for the listeners in this experiment was around 4 kHz for a 65 dB HL speech signal across all of these technologies. The settings for each frequency lowering strategy were adjusted for each person to provide audibility for the average level of a 6300 Hz band pass filtered speech signal. The authors evaluated sentence recognition in noise and subjective measures of sound quality, and they calculated a modified version of the speech intelligibility index (SII) to determine whether there were any differences with the frequency lowering technologies on versus off.

One of the things they examined was the amount of lowering that each type of frequency lowering technology provided for different bands of signals. It’s important to understand that the authors made these measures band by band. For example, they introduced a 3000 Hz signal, and then looked at the output signal; they introduced a 4000 Hz signal, and then looked at the output signal, and so on. You would see very different results if you looked at a broadband signal. When you look band by band, you find that for non-linear frequency compression (NFC), there is a little bit more lowering as you go higher in frequency as compared to the linear frequency transposition (LFT). Frequency translation (FT) had the most lowering of the three technologies for these narrow bands.
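A band-by-band look at lowering can be sketched in Python. The two mapping functions below are simplified stand-ins, not the manufacturers' algorithms and not the settings used in the study. NFC is modeled with a commonly described log-domain compression above a cutoff frequency, and LFT is modeled as a fixed downward shift in Hz above a start frequency. The cutoff, ratio, start, and shift values are hypothetical.

```python
def nfc_output_hz(f_in, cutoff_hz=2000.0, ratio=2.0):
    """Non-linear frequency compression: compress (on a log scale) above the cutoff."""
    if f_in <= cutoff_hz:
        return f_in
    return cutoff_hz * (f_in / cutoff_hz) ** (1.0 / ratio)


def lft_output_hz(f_in, start_hz=2500.0, shift_hz=1000.0):
    """Simplified linear transposition: a fixed downward shift above the start frequency."""
    if f_in < start_hz:
        return f_in
    return f_in - shift_hz


# Introduce one narrow-band input at a time and look at where it ends up.
for f_in in (3000.0, 4000.0, 6000.0):
    nfc_out = nfc_output_hz(f_in)
    lft_out = lft_output_hz(f_in)
    print(
        f"input {f_in:.0f} Hz -> NFC {nfc_out:.0f} Hz (lowered {f_in - nfc_out:.0f} Hz), "
        f"LFT {lft_out:.0f} Hz (lowered {f_in - lft_out:.0f} Hz)"
    )
```

In this simplified model, the amount of lowering from compression grows as the input frequency goes up, while the fixed shift stays constant. A broadband input would look different, because lowered and unlowered components overlap at the output.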

What They Found

For speech recognition in noise, SNR-50 was not improved for any of the frequency lowering technologies. For LFT and FT, speech recognition in noise was decreased. One important detail is that none of the listeners had much experience with frequency lowering technologies, particularly with LFT or FT. There has been work suggesting that LFT requires experience and time to optimize speech recognition benefits. Additionally, when the authors did calculations of SII, they found no differences between frequency lowering on versus off.

Why is This Important?

There are several issues that limit generalization of these results. As I previously mentioned, these individuals had limited experience with frequency lowering. Also, the study had a small number of listeners (n=10). Optimal fitting methods for frequency lowering technologies are still being developed, and some of the manufacturers would suggest different fitting methods than were used in this study. The hearing loss of the subjects in this study, while significant, was not in the high frequency range where you'd expect frequency lowering technologies to provide benefit. However, this study is now one in a list of studies suggesting that frequency lowering may provide little or no significant improvement to sentence recognition in adult hearing aid listeners. We do see some benefits in the literature occasionally related to consonant recognition, particularly in quiet.

Article 3: A Randomized Control Trial: Supplementing Hearing Aid Use with Listening and Communication Enhancement (LACE) Auditory Training (Saunders et al., 2016)

The next study I’ll discuss focuses on a topic that has been of interest to hearing aid researchers for decades, and has had a recent resurgence in popularity. It is the notion that different types of audiologic rehabilitative techniques can supplement hearing aid fittings. This study was designed to determine whether the Listening and Communication Enhancement (LACE) Auditory Training program provided an effective supplement to standard-of-care hearing aid intervention in a veteran population.

The authors of this study note that hearing aid outcomes vary widely from listener to listener and that we can't restore the auditory system to normal. Increasingly, hearing aid processing is becoming more and more sophisticated. The hearing aid processed signals are acoustically very different than what listeners have been used to hearing before they wore hearing aids. In addition, many people who have acquired hearing loss have not had normal auditory input for some time, as they acquired the hearing loss slowly over many years. As such, auditory training like the LACE system relies on the assumption that there is the possibility for brain reorganization and restructuring; that adults with hearing loss can be “trained” or “retrained” to use bottom-up or top-down auditory processing skills to improve speech recognition after this systematic practice.

What They Did

One of the things I liked about this study is that there was a large group of subjects. This study involved 279 veterans and included those who were both new and experienced hearing aid users enrolled at three different VA sites. They were randomized into one of four different treatments after the fitting:

- LACE Training using a 10-session DVD format

- LACE Training using a 20-session computer-based format

- Placebo auditory training consisting of actively listening to digitized books on computer

- Control group, which received educational counseling, rather than any sort of listening training

Groups were compared and compliance was tracked across all the different methods. The data consisted of five behavioral and two self-report measures collected during three research visits: 1) baseline; 2) immediately following the intervention period; and 3) six months post-intervention.

The investigators evaluated:

- Speech in noise using words; the Words in Noise (WIN) test

- Speech understanding for rapid speech in noise

- Speech understanding with speech as a competing background

- Word memory using the Digit Span test

- Use of linguistic context using the R-SPIN test (i.e., how well people use linguistic context when they could guess the last word, versus not having context to guess the last word)

- Activity limitations and participation restrictions using the Abbreviated Profile of Hearing Aid Benefit (APHAB), and the Hearing Handicap Inventory for the Elderly (HHIE).

The investigators were very careful in randomizing the patients into the different groups. The number of withdrawals from the study was relatively small.

What They Found

Across all the different measures, there was no significant difference between any of the interventions at visit two (immediately following the intervention period) or at visit three (six months post-intervention), either for new or for experienced users.

Why is This Important?

The authors concluded that the LACE Training alone does not result in improved outcomes over standard-of-care hearing aid intervention. However, they suggest that benefit may exist using other tools. For example, using sentences in noise rather than words in noise might yield benefit, though they speculate that this is probably not a big factor. More importantly, they suggest the possibility that this type of auditory training might have potential benefits for some individuals and not others. There is a previous study (Sweetow & Sabes, 2006) that shows small but significant benefits for LACE. This previous study had a relatively high drop-out rate of about 20%. Even though there was potentially some self-selection bias, it does suggest the possibility that the training was beneficial for some individuals. In other words, the people who stayed in the program and continued to use this method recognized the potential of benefits for this type of auditory training.

Article 4: Wind noise within and across behind-the-ear and miniature behind-the-ear hearing aids (Zakis & Hawkins, 2015)

This study examined the effects of hearing aid wind noise. There have been a number of previous studies that examined wind noise in hearing aids. What's different about this particular study is that it analyzed how BTE hearing aid styles (traditional and mini-BTE) affect wind noise and how this might impact predicted speech intelligibility (SII). As many of you probably know, wind doesn't generate a noise per se; it generates turbulence. That fluctuating turbulence bounces against the microphone diaphragm, and that's what generates noise at the microphone output. Only about 58% of hearing aid users report satisfaction with their devices in wind (Kochkin, 2010), which is worse than any other noisy situation. Clearly, wind noise can be a significant problem. Mini BTEs are currently the most common style of hearing aid dispensed. However, prior to this study, properties of wind noise in mini BTEs were unknown. The authors point out that if we have a better understanding of wind noise, perhaps we could improve designs and improve satisfaction for wearers in windy environments.

What They Did

The investigators measured wind-noise levels across one BTE and two mini BTE devices, and between the front and rear omni-directional microphones within devices. Each device had two different microphone ports. Some had a little bit of shielding such as a screen or hood, while others didn’t have shielding. The investigators used an elaborate setup similar to one that was used at the National Acoustics Lab in Australia for some other measures of wind noise.* The setup included a fan generating wind noise, directing air down to impinge on a hearing aid placed on a KEMAR. Wind-noise levels were measured at two different wind speeds: 3 meters per second (m/s) and 6 m/s, and at 36 different wind azimuths. So, they turned the KEMAR in 10-degree increments and measured wind noise at each one.

*Comment from Gus Mueller: As rumor has it, in the early days of working with the wind machine at the NAL research lab, the researchers were having problems with the machine producing an even flow for all positions. To solve this, they used some highly acclaimed research supplies: drink straws from McDonalds. By stacking hundreds of straws on top of each other, they created a honeycomb effect for the wind to pass through.

What They Found

The authors calculated SII in quiet, and across the different conditions for two different hearing losses: 20 sloping to 50 dB HL hearing loss; and 35 sloping to 65 dB HL hearing loss. They found that at 3 m/s, there was a drop in SII in all of the hearing aids as compared to quiet. When wind speed increased to 6 m/s, important speech information was essentially masked out completely across all of the models.
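To show why rising wind noise pushes a predicted intelligibility value toward zero, here is a heavily simplified, SII-style audibility sketch in Python. It is not the standardized SII calculation and not the authors' modified version. It assumes equal band weights (the real SII uses standardized band-importance weights), a 30 dB speech dynamic range with peaks 15 dB above the average level, and that all levels are expressed on one common dB scale. Every level in the example is hypothetical.

```python
def band_audibility(speech_db, noise_db, threshold_db):
    """Fraction (0 to 1) of the 30 dB speech range that sits above the masker.

    The effective masker is whichever is higher: the noise or the threshold.
    """
    masker_db = max(noise_db, threshold_db)
    audible_range_db = (speech_db + 15.0) - masker_db
    return min(1.0, max(0.0, audible_range_db / 30.0))


def simple_sii(speech, noise, thresholds, weights=None):
    """Weighted sum of band audibility. Equal weights are an assumption."""
    if weights is None:
        weights = [1.0 / len(speech)] * len(speech)
    return sum(
        w * band_audibility(s, n, t)
        for w, s, n, t in zip(weights, speech, noise, thresholds)
    )


# Hypothetical four-band example, low to high frequency (illustrative only).
speech = [60.0, 55.0, 50.0, 45.0]
thresholds = [20.0, 30.0, 40.0, 50.0]      # sloping loss
no_wind = [0.0, 0.0, 0.0, 0.0]
moderate_wind = [80.0, 70.0, 55.0, 40.0]   # wind noise is strongest in the lows
strong_wind = [95.0, 85.0, 70.0, 65.0]

quiet_sii = simple_sii(speech, no_wind, thresholds)
moderate_sii = simple_sii(speech, moderate_wind, thresholds)
strong_sii = simple_sii(speech, strong_wind, thresholds)
print(f"quiet: {quiet_sii:.2f}, moderate wind: {moderate_sii:.2f}, strong wind: {strong_sii:.2f}")
```

The pattern, not the specific numbers, is the point: once the noise in a band exceeds the speech peaks, that band contributes nothing, and when that happens in every band the index falls to zero.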

The mini BTEs had similar wind noise levels between front and rear microphones. The BTE showed a slightly different pattern; wind noise at the rear microphone was at a much higher level than noise at the front microphone. When you compare the BTE to the mini BTEs, it gets a little complicated. One of the mini BTEs had higher mean wind noise levels at the front mic and lower mean wind noise levels at the rear mic, compared to the BTE. The other mini BTE had the same mean wind noise level at the front mic as the BTE, and a lower mean wind noise level at the rear mic. So, mean wind noise levels in the BTE were higher, lower, or similar to those of the mini BTE devices, depending on the mini BTE device, microphone (front or rear), and frequency band.

Why is This Important?

Mean wind noise levels were much different across the two mini BTE devices, particularly in the low frequencies. In general, small differences in microphone location, shell design and/or wind shielding could all result in large differences in wind noise levels across the mini BTE hearing aids. This study was conducted on the KEMAR, and so in the real world we might also expect large individual differences based on exactly how mini BTE and BTE devices fit on the ear, and how much the pinna shields the microphone ports. For the mini BTE devices, wind noise levels were lower at the rear microphone for about half of all the azimuths. For the BTE device, it was likely that wind shielding differences strongly affected level differences between the microphones. In other words, one of the microphones was shielded much better than the other. Reinforcing what I've already said, wind has the potential to significantly reduce speech intelligibility across BTE and mini BTE devices. Higher wind noise levels can wipe out speech intelligibility altogether. Design makes a large difference in how much wind noise we get at the output from the front and rear microphones. Today’s hearing aids of course do have wind reduction algorithms, and they too have been studied, but those are research findings to report on another day!

Article 5: The Effects of FM and Hearing Aid Microphone Settings, FM Gain and Ambient Noise Levels at the Tympanic Membrane (Norrix, Camarota, Harris & Dean, 2015)

This study looked at how FM and hearing aid microphone settings, including FM gain (mix ratio) and background noise levels, influence SNR at the tympanic membrane and speech recognition in noise. They were interested in closely examining how the FM was set up, how much the FM was turned up (FM gain, or sometimes called mix ratio), and if the ambient noise levels at the two microphones (the FM microphone and the hearing aid microphone) affected the SNR.

Many aspects of this experiment have been studied before, but perhaps not in as careful or controlled a manner as in this study. We do know that FM configuration (FM-only versus FM+EM), setting of the hearing aid microphones, and the FM gain relative to hearing aid gain (mix ratio) have all been shown to affect SNR. Understanding these trade-offs is important for clinical decision making, and for counseling about expectations. We also know that turning on the hearing aid microphone (the FM plus environmental mic, or FM+EM setting, also sometimes called FM+HA) greatly reduces speech recognition in noise compared to FM-only. However, it's oftentimes the default recommendation to allow for monitoring and overhearing. The reason I was particularly interested in this study is that we've seen a proliferation of remote microphone technologies with adult hearing aid users. Therefore, this study is relevant because the increased introduction of remote microphone technologies (e.g., spouse microphones) expands the utility of this information to new populations.

What They Did

The researchers calculated the estimated SNR as a function of noise level and microphone conditions based on a theoretical model. Then, they evaluated speech recognition in noise in 10 adults and 10 children. Importantly, they evaluated individuals with normal hearing across the aided conditions, which does somewhat limit generalizability. They used the same BTE hearing aid, FM transmitter, and FM receiver across all the participants. They did provide a little bit of high frequency gain, even though these are individuals with normal hearing, and they sealed the hearing aid into the ear using custom, fully-occluding acrylic full shell ear molds with an appropriate slim tube for each individual listener. The hearing aid was set to use an omnidirectional microphone, noise reduction was off, and a direct audio input setting was set to 0 dB. FM gain was set to +12 dB, and pre-emphasis and FM de-emphasis, as well as digital signal processing, were turned off. For behavioral testing a 55 dB SPL noise was presented from two different speakers. One was very close to the FM microphone, and one further away from the hearing aid.

What They Found

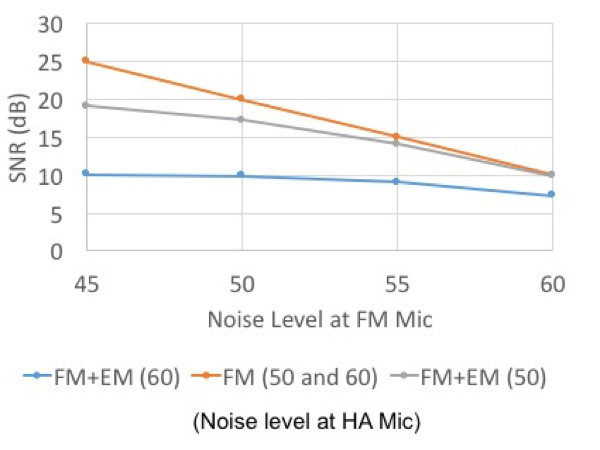

They compared FM-only to FM+HA and found that both children and adults have better performance with FM-only. This is something that has been shown a number of times before, including in individuals with hearing loss. In this study, the advantage was about 3.5 to 4 dB. They also calculated the signal-to-noise ratio as a function of noise level (Figure 1). First, speech was presented at 58 dB SPL at the hearing aid, and 70 dB SPL at the FM microphone. On the graph, the noise level at the FM mic (45, 50, 55, and 60) is on the x-axis, and the SNR in dB is on the y-axis. There were three conditions, FM+EM (60), FM (50 and 60), and FM+EM (50). The numbers in parentheses represent the noise level at the hearing aid microphone.

For the FM condition (the orange line), regardless of whether the noise level at the hearing aid mic was 50 or 60, there was a decrease in SNR as the noise level at the FM mic increased from 45 to 60. Every 5 dB increase in noise level results in a 5 dB decrease in SNR. This is not surprising for the FM-only condition; the SNR at the eardrum is primarily determined by the SNR at the FM mic.

We see something different when we look at the FM+EM conditions. For the FM+EM (50) condition (gray line), the SNR is poorer than the FM-only condition, and it decreases with increasing noise level at the FM mic. For the FM+EM (60) condition (blue line), we see that the 60-dB noise level at the hearing aid mic limits the SNR for 45 and 50, and then the SNR drops as the noise level at the FM mic increases. In other words, for the FM+EM condition, the SNR is determined by the highest level of the speech, which is typically at the FM mic, and the highest level of noise, which can be either at the FM mic, or at the hearing aid mic.

Figure 1. Calculated SNR as a function of noise level.

The investigators also calculated SNR as a function of FM gain, or what is also referred to as mix ratio. In this case, FM gain was set to 0, +6, +8, or +10 dB. For the FM-only conditions, there was no effect of increasing the FM gain. However, for the FM+EM settings, increasing FM gain improved SNR. When the noise levels at the FM and environmental microphones are similar, there is an SNR improvement of +2 dB. Greater improvement is seen when the level of the noise at the FM mic can be reduced relative to the level of noise at the hearing aid microphone.
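These SNR relationships can be reproduced with a simple two-path calculation, sketched below in Python. This is my own illustration, not necessarily the authors' theoretical model. It assumes that the FM path and the hearing aid microphone path add in power at the eardrum, that the hearing aid path has 0 dB relative gain, and that FM gain applies equally to the speech and noise picked up at the FM microphone. The speech levels (70 dB SPL at the FM mic, 58 dB SPL at the hearing aid) come from the description above; the function names are hypothetical.

```python
import math


def power_sum_db(levels_db):
    """Combine levels in dB by adding their powers."""
    return 10 * math.log10(sum(10 ** (level / 10) for level in levels_db))


def snr_at_eardrum(noise_fm_db, noise_ha_db, mode,
                   speech_fm_db=70.0, speech_ha_db=58.0, fm_gain_db=0.0):
    """SNR (dB) for 'FM' (FM-only) or 'FM+EM' (FM plus hearing aid microphone)."""
    speech_paths = [speech_fm_db + fm_gain_db]
    noise_paths = [noise_fm_db + fm_gain_db]
    if mode == "FM+EM":
        speech_paths.append(speech_ha_db)
        noise_paths.append(noise_ha_db)
    elif mode != "FM":
        raise ValueError("mode must be 'FM' or 'FM+EM'")
    return power_sum_db(speech_paths) - power_sum_db(noise_paths)


# SNR as a function of noise level at the FM microphone.
for noise_fm in (45.0, 50.0, 55.0, 60.0):
    fm_only = snr_at_eardrum(noise_fm, 60.0, "FM")
    fm_em_50 = snr_at_eardrum(noise_fm, 50.0, "FM+EM")
    fm_em_60 = snr_at_eardrum(noise_fm, 60.0, "FM+EM")
    print(f"noise at FM mic {noise_fm:.0f}: FM {fm_only:.1f}, "
          f"FM+EM(50) {fm_em_50:.1f}, FM+EM(60) {fm_em_60:.1f} dB")

# Effect of FM gain when noise is the same (55 dB) at both microphones.
gain_0 = snr_at_eardrum(55.0, 55.0, "FM+EM", fm_gain_db=0.0)
gain_10 = snr_at_eardrum(55.0, 55.0, "FM+EM", fm_gain_db=10.0)
print(f"FM+EM improvement from +10 dB FM gain: {gain_10 - gain_0:.1f} dB")
```

Under these assumptions, FM-only falls from 25 to 10 dB in 5 dB steps, FM+EM (60) sits near 10 dB at the two lowest noise levels before dropping to about 7 dB, and FM gain changes nothing for FM-only because it raises speech and noise at the FM mic equally. With equal noise at both microphones, going from 0 to +10 dB of FM gain improves the FM+EM SNR by a little over 2 dB in this sketch.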

Why is This Important?

We know that activation of the hearing aid microphone in the FM+EM condition can greatly limit FM benefit. This study points out that the SNR at the FM microphone also limits benefits, and that noise level at both the FM mic and the hearing aid microphone is important. If we want to improve signal-to-noise ratio in these FM conditions, or remote microphone conditions, it's important that we maximize the SNR at the FM microphone in order to achieve the most improvement possible.

Clinical Tidbits: Some Interesting Research Findings

As I mentioned at the beginning, there are a couple of other studies that I want to mention briefly, as they have important clinical points.

Probe Mic Measures – Insertion Depth

This first study analyzed what happens when we take probe mic measurements using different insertion depths (Vaisberg et al., 2016). The authors measured out various depths on the probe tube, and then inserted the probe tube in the ears of 14 subjects at these various depths (the depths were based on distance from the participants’ inter-tragal notch; consider that the average ear canal is about 25mm long, and the average distance from the opening of the ear canal to the inter-tragal notch is 10mm). They looked at test-retest reliability, and then compared levels across frequencies.

Not surprisingly, they found that at a shallow insertion depth of 24mm, the test-retest differences in dB were fairly large, 4-5dB on average, with quite a large spread in the individual data. At the 26mm depth, the test-retest reliability improved, and continued to get better in the high frequencies at the 28mm depth. At the 30mm depth, test-retest reliability was good through 10 kHz, or so.

This study suggests that we might be able to measure the signal level fairly reliably out through 10 kHz, by using a 30mm depth, which is already what some audiologists use. These authors also attempted to look at accuracy. One of the weaknesses of this study is they didn't measure the signal level at the eardrum. They compared results to the 30mm depth, and assumed that was a fairly accurate measure of the signal level at the eardrum. They did point out that the size and direction of error was predictable in this study. That is, the shallower the insertion depth, the more you would underestimate the level at the 30mm depth in the high frequencies. More work in this area is needed. However, I think this study points out that simply going to a slightly deeper insertion depth when taking probe mic measures may allow us to take more reliable measures in the high frequencies.

Frequency Compression & Music Listening

Finally, I wanted to briefly mention a study that looked at the effect of frequency compression on music perception in listeners with and without hearing loss, and with and without musical training (Mussoi & Bentler, 2015). This study looked at preference using paired comparisons and strength of preference ratings. Listeners were asked to compare frequency compression on and off, say which one they preferred, and then give a strength of preference rating.

Across all of the listeners, both those with and without hearing loss, those who had musical training and those who did not, less frequency compression was preferred for music. Listeners with musical training had stronger preferences for no frequency compression. Listeners with normal hearing had stronger preferences for no frequency compression than listeners with hearing impairment. However, the authors noted that for people with hearing loss, particularly those without musical training, the strength of preference for frequency compression on versus off was not that strong compared to their preferences for frequency compression on versus maximized. The authors suggested that mild amounts of frequency compression may not be detrimental to perceived sound quality for music, particularly in listeners with impaired hearing.

Questions and Answers

Considering the implications of the use of sentence materials in auditory training, can we say that the more the individual uses his hearing aids in conversational speech, listening, and responding, the greater the improvement he will realize? In other words, the more you use it, the more your hearing capabilities will improve. Is perhaps real-world practice the best practice for hearing aid users?

I think there are a lot of studies out there looking at how well different types of targeted training can be generalized. We've seen this in the popular brain training programs that have been out there, which try to improve your cognition by having you do things on a computer that may be translatable. One interesting thread in most of that research is that there is no practice better than doing the actual activity. I think that the advantage of auditory training is that it sort of forces the practice. I wouldn't be surprised if the research finds that the best type of training is the training that is most realistic to what we do every day.

References

Brons, I., Houben, R., & Dreschler, W.A. (2013). Perceptual effects of noise reduction with respect to personal preference, speech intelligibility, and listening effort. Ear and Hearing, 34, 29–41.

International Telecommunication Union (ITU). (2001). ITU-R Recommendation BS.1534: Method for the subjective assessment of intermediate quality level of coding systems (MUSHRA).

Kochkin, S. (2010). MarkeTrak VIII: Consumer satisfaction with hearing aids is slowly increasing. Hearing Journal, 63(1), 19-27.

Miller, C.W., Bates, E., & Brennan, M. (2016). The effects of frequency lowering on speech perception in noise with adult hearing-aid users. International Journal of Audiology, 55(5), 305–312. doi: 10.3109/14992027.2015.1137364

Mussoi, B.S.S., & Bentler, R.A. (2015). Impact of frequency compression on music perception. International Journal of Audiology, 54(9), 627-633.

Norrix, L.W., Camarota, K., Harris, F.P., & Dean, J. (2015). The effects of FM and hearing aid microphone settings, FM gain and ambient noise levels at the tympanic membrane. JAAA, 27, 117–125.

Saunders, G.H., Smith, S.L., Chisolm, T.H., Frederick, M.T., McArdle, R.A., & Wilson, R.H. (2016). A randomized control trial: supplementing hearing aid use with listening and communication enhancement (LACE) auditory training. Ear and Hearing, 37(4), 381–396.

Scollie, S., Levy, C., Pourmand, N., Abbasalipour, P., Bagatto, M., Richert, F., Moodie, S., Crukley, J., & Parsa, V. (2016). Fitting noise management signal processing applying the American Academy of Audiology Pediatric Amplification Guideline: Verification protocols. JAAA, 27(3), 237-251. doi: 10.3766/jaaa.15060

Sweetow, R. W., & Sabes, J. H. (2006). The need for and development of an adaptive listening and communication enhancement (LACE) program. JAAA, 17, 538–558.

Vaisberg, J.M., Macpherson, E.A., & Scollie, S.D. (2016). Extended bandwidth real-ear measurement accuracy and repeatability to 10 kHz. International Journal of Audiology, 55(10), 580-586.

Zakis, J.A., & Hawkins, D.J. (2015). Wind noise within and across behind-the-ear and miniature behind-the-ear hearing aids. Journal of the Acoustical Society of America, 138(4), 2291-2300. doi: 10.1121/1.4931442

Citation

Ricketts, T. (2017, June). Vanderbilt Audiology Journal Club - research examining benefit of hearing aid features. AudiologyOnline, Article 20408. Retrieved from www.audiologyonline.com