Learning Outcomes

After this course learners will be able to:

- Describe existing data on the topic of emotions and hearing loss.

- Explain how to better counsel patients and their families on the impact of hearing loss and emotions.

- Describe research tests used to assess emotional constructs.

Introduction

It is likely that we have all had conversations where we have felt annoyed or frustrated. Perhaps the person we were speaking with brought up a topic that made us uncomfortable, or told a joke that rubbed us the wrong way. When this happens, we may respond by saying, "OK fine, whatever" or make a similar remark to convey that we are annoyed or frustrated. What if our conversation partner ignored that cue and continued to carry on the conversation? Our annoyance would likely increase. What if this happened in conversations with other emotions, like when we have felt angry, for example? If your communication partner is not picking up on your emotions, it can take a toll on the relationship. Today, I'll be discussing research that suggests that individuals with hearing loss interpret emotions differently than individuals with normal hearing. This has nothing to do with audibility. The research takes into account that these individuals with hearing loss are hearing the signal, but they are interpreting it in a different way.

Emotion is defined as "an affective state of consciousness in which feelings (e.g., joy, sorrow, fear, hate) are experienced, as distinguished from cognitive and volitional states of consciousness". Emotions involve different components, such as subjective experience, cognitive processes, expressive behavior, psychophysiological changes, and instrumental behavior. All of these components of emotion are linked to our emotional environment. Our emotional environment involves not only our perception of the emotional world around us, but also the expression of our internal emotions, and how these two interact.

Why Do We Care?

As hearing health care providers, why should we care about emotions? We may not see an immediate connection between emotions and hearing loss. Through the course of our emotions study at the Phonak Audiology Research Center (PARC), the number one question that I received from participants was, "What does this have to do with hearing loss?" This demonstrates that there is a misunderstanding about the role hearing loss plays in everyday life. It's more than just a loss of audibility; it can have a deep effect on relationships and the connection to the physical and emotional world. As hearing health care providers, we need to act as educators and inform individuals with hearing loss and their families of these emotional implications, and the strain that hearing loss can put on the family.

Why does Phonak care about emotion? While many of our research studies revolve around hearing aid technology products and features, it's important that we stay connected to global audiology topics like emotions. Although not directly related to our product, it is vital to understand the people wearing our product in order to make better hearing aids. It's our goal to inform audiologists on this topic, so that they can more effectively counsel patients and families.

Our bodies are wired for emotion; emotions drive nearly all of our human behaviors. In 2014, a team of scientists in Finland asked 700 people (from Finland, Sweden and Taiwan) to map out the location on their bodies where they felt different emotions. The results were surprisingly consistent across cultures. The results were not based on any type of scan, but on each person's perception of the region of their body that they felt was the most activated by each emotion. This suggests that at least in perception, each emotion activates a distinct set of body parts, and the mind's recognition of those patterns helps us consciously identify the emotion within ourselves (Nummenmaa, Glerean, Hari & Hietanen, 2014).

Emotional Intelligence

Emotional intelligence plays an important role in many aspects of our well-being. It facilitates cognition, attention, happiness, cognitive performance and speech recognition. We know that the more socially engaged and connected people are, the happier they are. We also know that hearing loss can lead to feelings of disconnectedness and isolation. Additionally, research has shown that isolation increases depression and cardiovascular disease, and can have a significant impact on overall health. As hearing health care providers, it's important that we think holistically about the patient and their hearing loss, to help them understand these implications.

The topic of emotional intelligence is broad, with many definitions and differing theories. For the purposes of today's presentation, I will be focusing specifically on two domains of emotional intelligence: recognition and emotional range.

Recognition. Recognition is the act of one person expressing an emotion and the other person receiving that information. In this course, the individual with hearing loss is the person receiving the information. How accurately can they identify the emotion being conveyed by a conversational partner? As stated earlier, emotional recognition is important for relationships.

Emotional range. Emotional range is the act of exhibiting emotional feeling. If we think about the happiest and the saddest someone feels, how wide is the range between these two extremes? Do people experience the full range, or do they function within a more limited range of emotion, never truly experiencing the extremes (i.e., the highest highs or the lowest lows)? Research suggests that people with hearing loss have a more limited emotional range than those with normal hearing.

Effect of Hearing Loss and Age on Emotional Responses to Non-Speech Sounds

The first study we're going to review was conducted by Erin Picou from Vanderbilt University (Picou, 2016) and deals with emotional range. Picou focused on non-speech sounds, and how hearing loss affects this domain. The test she used is called the International Affective Digitized Sounds test (IADS). The IADS test involves presentations of non-speech sounds of a wide variety (e.g., environmental sounds, alarm sounds, animal sounds, human sounds). The researcher administering the test must remain expressionless and not impart any bias whatsoever. Based on a person's facial expression, you can tell how they are reacting to some of the sounds. Sometimes the participant can identify the sound and reacts very strongly to it; other times, they don't recognize it, and they perceive it as a random sound.

Picou's study is based on the dimensional view of emotion, which suggests that most variability in judgements can be accounted for with three dimensions: arousal, valence and dominance. Arousal relates to how exciting or calming the sound is. Valence means how pleasant or unpleasant the sound is. Finally, dominance has to do with the level of control someone feels over the sound (control vs. submissive). Today, we're going to focus primarily on Picou's findings related to arousal and valence.

Valence is a psychological term that means the intrinsic pleasantness/goodness or unpleasantness/badness of something - in the case of Picou's study we are referring to the sounds. The rating scale was between 1 and 9, with 9 being a very pleasant sound and 1 being a very unpleasant sound. Some examples of sounds that were played include bees buzzing and a human sneezing. This is a very subjective task. Depending on a participant's experience with and perception of each sound, they would rate the sounds on the 9-point scale.

Picou's main research question was: What are the effects of acquired hearing loss and age on these emotional ratings of non-speech sounds? Her hypothesis was based on the fact that older adults tend to exhibit a positivity bias. In other words, with age, people tend to see things more positively. Because Picou wanted to assess the effect of both hearing loss and age, she had three participant groups: a younger, normal hearing group; an older, normal hearing group (to assess the effect of age); and an older group with hearing loss (to assess the effect of hearing loss).

In addition to the main research question, Picou also wanted to examine the effect of presentation level. There were two participant groups (A and B) and four different presentation levels: Group A heard the sounds at 35 and 65 dB SPL; Group B heard the sounds at 50 and 80 dB SPL.

Results

The range of how pleasant participants rated the sounds stayed consistent for the young, normal hearing listeners and the older normal hearing listeners. Their ratings did not seem to be impacted much by presentation level. In contrast, the older group with hearing loss had a much more limited range compared to the other two participant groups, and their valence response ratings were lower than their peers with normal hearing. This seems to be an effect of hearing loss, and not necessarily age. This finding was especially true at the 80-dB presentation level. At this higher presentation level, the range of pleasantness is limited compared to the other two groups and compared to the other presentation levels.
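To make the idea of a "range of valence" concrete, here is a minimal Python sketch of how ratings on the 1-to-9 scale could be summarized as a mean and a range (highest rating minus lowest). The ratings below are invented for illustration and are not Picou's data; the function name `valence_summary` is hypothetical, and the actual study may have summarized ratings differently.

```python
from statistics import mean


def valence_summary(ratings):
    """Return (mean, range) for a list of valence ratings on the 1-9 scale.

    Range here is simply the highest rating minus the lowest rating,
    which is one plausible way to express "emotional range".
    """
    if not ratings:
        raise ValueError("ratings must not be empty")
    if any(r < 1 or r > 9 for r in ratings):
        raise ValueError("ratings must lie on the 1-9 scale")
    return mean(ratings), max(ratings) - min(ratings)


# Hypothetical ratings, invented for illustration only.
normal_hearing = [2, 3, 5, 7, 8]
hearing_loss = [3, 4, 4, 5, 5]

nh_mean, nh_range = valence_summary(normal_hearing)
hl_mean, hl_range = valence_summary(hearing_loss)

print(f"Normal hearing: mean {nh_mean}, range {nh_range}")
print(f"Hearing loss:   mean {hl_mean}, range {hl_range}")
```

In this made-up example, the second group shows both a lower mean and a narrower range, which is the pattern described above for the older group with hearing loss.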

Another main finding of this study is that listeners with hearing loss exhibited a reduced range of valence in responses. This could be due to recruitment, or possibly the loss of outer hair cells. With increasing presentation level, we're getting a reduced range of valence in responses, as well as a lower average pleasantness rating. When we extrapolate this to the field of audiology, we are commonly amplifying sounds for people with hearing loss (e.g., turning up the TV, turning up the telephone). These results suggest that by making things audible for people with hearing loss, we could be inadvertently causing more negative emotional responses.

This study left us with some questions. Can we generalize this study to speech sounds (as the sounds presented in the study were non-speech sounds)? What happens with frequency-specific amplification? In Picou's study, the stimulus levels were presented in sound field; they were not through personalized hearing aid fittings with frequency-specific amplification.

Relationship Between Emotional Responses to Sound and Social Function

From the 2016 study, Picou found this pattern of limited emotional range with hearing loss and lower feelings of pleasantness. A second investigation by Picou, Buono and Virts (2017) tied these findings into behaviors or patterns seen in everyday social behavior. They found a strong relationship between feelings of isolation and the ratings of pleasantness.

Picou asked the following research questions:

- If listeners perceive pleasant sounds as unpleasant, are they less likely to engage socially?

- Is there a relationship between lab measures of emotional responses to sound and social connectedness?

In this study, the authors used the IADS test, along with a couple of subjective ratings scales (Perceived Disconnectedness and Social Isolation Scale and Hospital Anxiety and Depression Scale).

Results

The main finding of this study is that there was a significant relationship between feelings of disconnectedness and the average rating of valence. We can't infer causation, or which comes first: the unpleasant interpretation of sound leading to social isolation, or social isolation leading to greater discomfort in sounds. However, there is a strong correlation. Regardless of the reason, it means that finding sounds less pleasant corresponds to greater social isolation. In Picou's previous study, we saw that hearing loss and lower ratings of pleasantness go hand-in-hand, and we can infer some connection between hearing loss, social isolation, and feelings of unpleasantness in the environment.
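As a rough illustration of what a relationship between two measures looks like numerically, the sketch below computes a Pearson correlation coefficient in Python. The paired scores are invented, the variable names are hypothetical, and it is an assumption here that higher scores on the disconnectedness scale mean greater disconnectedness. The source does not state which statistic the authors used, so this is only one plausible choice.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return covariance / sqrt(var_x * var_y)


# Hypothetical paired scores for five listeners, invented for illustration.
mean_valence = [6.1, 5.8, 5.2, 4.9, 4.3]   # average pleasantness rating (1-9)
disconnectedness = [10, 14, 15, 21, 24]    # assumed: higher = more disconnected

r = pearson_r(mean_valence, disconnectedness)
print(f"r = {r:.2f}")  # negative: lower pleasantness goes with more disconnectedness
```

A coefficient like this describes how closely two measures move together. As noted above, it says nothing about which one causes the other.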

In short, the findings strongly suggest that hearing loss has a significant impact on overall well-being, beyond a loss of audibility or hearing sensitivity. People with hearing loss experience a reduced dynamic range of emotions, occurring particularly at high sound levels, and correlating with social isolation.

Perception of Emotional Speech by Listeners with Hearing Aids

Next, we'll move to look at another domain of emotion: recognition. Huiwen Goy is a researcher at Ryerson University in Toronto. Goy and her team investigated the perception of emotional speech by listeners with hearing aids (Goy, Pichora-Fuller, Singh & Russo, 2016). They were trying to understand if hearing aids help, or possibly even harm, emotional perception.

Goy and team used the Toronto Emotions Speech Set (TESS). This was a closed set task requiring participants with hearing loss (unaided, without hearing aids) to listen to a sentence presented auditorily and identify the emotion being conveyed. The following seven emotions were provided as options to the listener:

- Happy

- Pleasant surprise

- Neutral

- Sad

- Disgust

- Angry

- Fear

In real life, we could imagine sad being very soft, angry possibly being louder, etc. In this scenario, the sounds were more balanced. The sentences were all read by the same female actor. There were no visuals for the listener; the sentences were only presented verbally, to test participants' auditory skills.

One of the limitations of this study is that it was a closed set task: listeners were given options to choose from, which simplified the task. Furthermore, the range of emotions represented was limited to seven basic emotions. There were not any nuanced emotions (e.g., jealousy, frustration) that may be more difficult to discern.

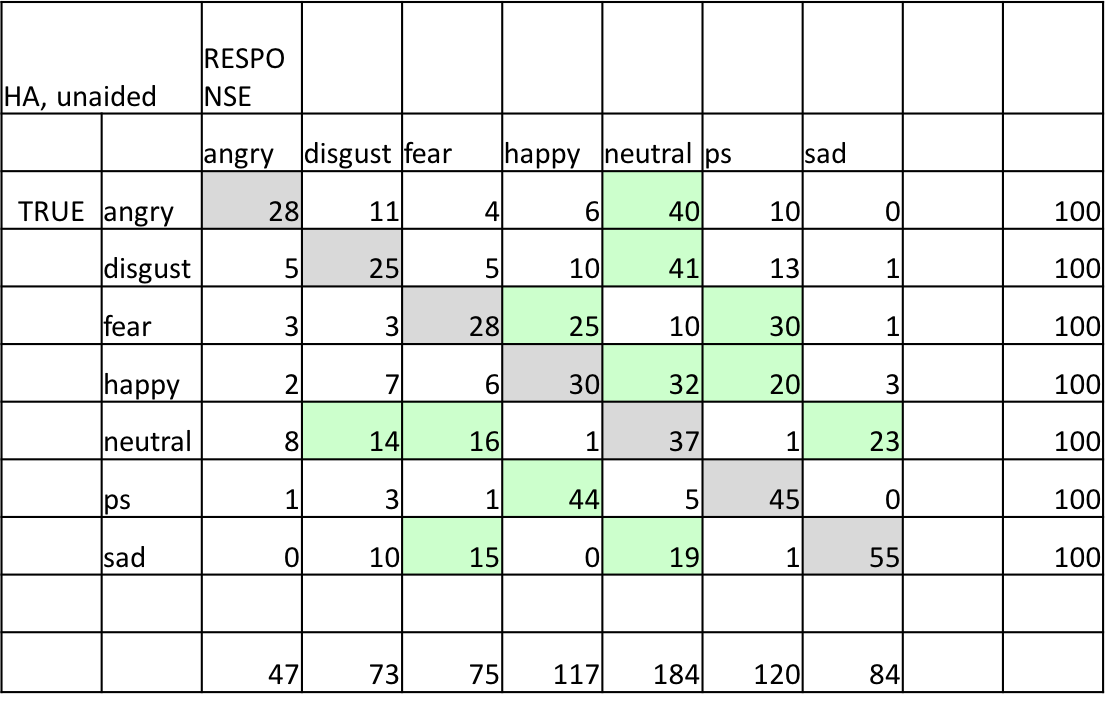

Results

The TESS responses of unaided hearing loss participants were plotted into a confusion matrix (Figure 1). The numbers in each box indicate the percentage of people that were confused about each emotion. When the emotion presented was angry, 40% of the participants perceived that emotion as neutral. Next, when presented with the emotion disgust, 41% responded neutral. Anger and disgust are both very strong emotions. To perceive them as neutral in real life situations could potentially cause social difficulties. There was another strong confusion between happy and pleasant surprise; however, those are both positive emotions, and the confusion between those two makes a bit more sense.

Figure 1. Confusions made by HL listeners, when unaided.
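For readers unfamiliar with confusion matrices, the following Python sketch shows one way such a matrix could be built from a closed set task like the TESS. The trial data are invented, the function name `confusion_percentages` is hypothetical, and it is an assumption that each cell is expressed as a percentage of the presentations of a given emotion. This is not the analysis code used by Goy and team.

```python
from collections import Counter

# The seven response options offered in the closed set task.
EMOTIONS = ["happy", "pleasant surprise", "neutral", "sad",
            "disgust", "angry", "fear"]


def confusion_percentages(trials):
    """Build a confusion matrix from (presented, responded) pairs.

    Returns a nested dict: matrix[presented][responded] is the percentage
    of presentations of `presented` that were labeled `responded`.
    """
    counts = {emotion: Counter() for emotion in EMOTIONS}
    for presented, responded in trials:
        counts[presented][responded] += 1

    matrix = {}
    for presented, row in counts.items():
        total = sum(row.values())
        matrix[presented] = {
            responded: (100.0 * row[responded] / total if total else 0.0)
            for responded in EMOTIONS
        }
    return matrix


# Hypothetical trials, invented for illustration only.
trials = (
    [("angry", "angry")] * 7
    + [("angry", "neutral")] * 3
    + [("sad", "sad")] * 10
)

matrix = confusion_percentages(trials)
print(matrix["angry"]["neutral"])  # share of "angry" sentences heard as neutral
```

Cells on the diagonal (presented and responded emotion match) are correct identifications; every other cell is a confusion.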

Role of Hearing Aids

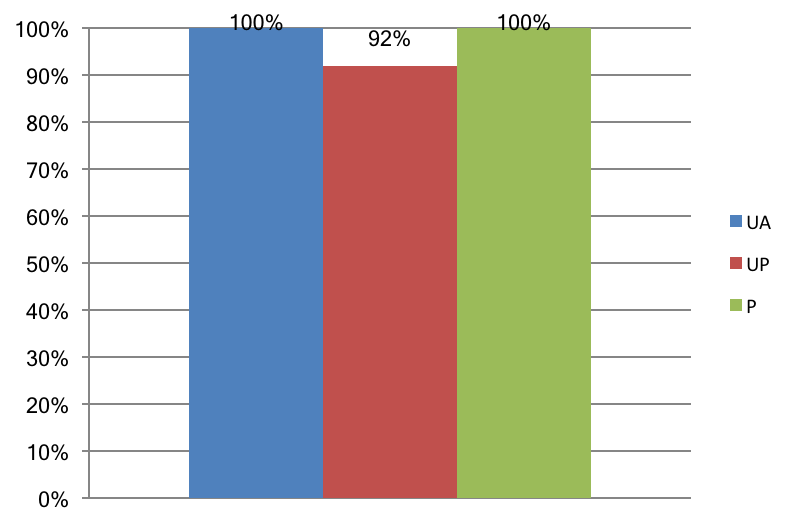

The next question that Goy and team wanted to address was whether or not hearing aids are beneficial in recognizing emotion. Young adults with normal hearing score about 90% on the TESS questions in quiet. Even in background noise, it doesn't affect the young, normal hearing listeners much. Similarly, with older normal hearing listeners, performance is fairly stable. There were no significant differences in this level of performance, suggesting that age does not have an impact on emotion recognition performance. In contrast, TESS scores dropped considerably in older listeners with hearing loss in the unaided condition (45%). This is an impact of hearing loss alone; this is not an age effect. Surprisingly, adding hearing aids for the older listeners with hearing loss improved their emotion recognition scores only slightly.

These participant groups demonstrated that unfortunately, hearing aids neither help nor significantly harm emotion recognition performance. There are a couple of reasons why this could be the case. First, the hearing aids may not be helping in identifying the cues necessary for emotion recognition. Although they may be increasing audibility, the user only receives a small amount of net benefit. In addition, the hearing aids may be distorting some of the cues needed for emotion recognition. There is also the possibility that perception of emotion is a purely cognitive skill, and may not be affected either positively or negatively by hearing aids. Hearing aids work at a peripheral level, and emotion recognition occurs at a much higher level in the brain, beyond the auditory system. At this point, these theories are speculative and require additional investigation. Whatever the case, we're not seeing any huge improvement with hearing aids, at least in the way they currently function.

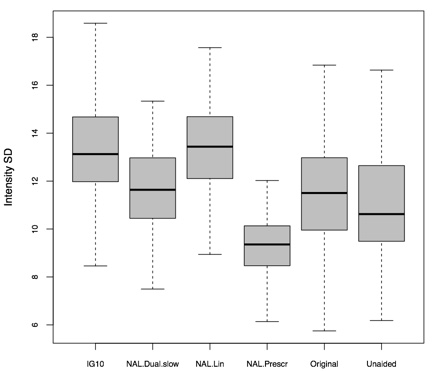

Huiwen Goy took her research a step further. She hypothesized that if we could compare the emotions on a raw recording level (i.e., an acoustic level), we could identify what acoustic cues people are using to identify emotions in speech, as well as what acoustic properties listeners are using. Her line of thinking was that if we could identify what's different between emotions, we'll not only understand the cues that are being missed by individuals with hearing loss, but also how we need to design hearing aids that are better attuned to these differences.

When recorded and plotted on a graph, emotions look different from one another, with varying frequency changes and different intensity and acoustic profiles. Some emotions appear flatter than others (e.g., sadness). Others have huge peaks (e.g., surprise). The wave form, the spectrum, the time domain, the intensity domain -- all of these characteristics could be used by the human brain and auditory system to identify one emotion from another.

Goy used linear discriminant analysis (a method used in statistics and pattern recognition) to find a linear combination of features that characterizes or separates two or more classes of objects or events. Goy determined that there were three main measures of emotions that correctly classify 96% of the emotional speech tokens:

- The mean of the fundamental frequency (F0)

- The standard deviation of the fundamental frequency (F0 SD)

- The spectral center of gravity
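The sketch below illustrates the general idea of linear discriminant analysis using the three measures listed above. It is a simplified, two-class version (Fisher's linear discriminant) written in plain Python, not Goy's analysis, which separated seven emotions. All acoustic values are invented, and the function names (`fit_fisher_lda`, `predict`, and the helpers) are hypothetical.

```python
def mean_vector(rows):
    """Column-wise mean of a list of feature vectors."""
    return [sum(r[j] for r in rows) / len(rows) for j in range(len(rows[0]))]


def within_class_scatter(groups):
    """Sum of the within-class scatter matrices of all groups."""
    d = len(groups[0][0])
    scatter = [[0.0] * d for _ in range(d)]
    for rows in groups:
        m = mean_vector(rows)
        for r in rows:
            diff = [r[j] - m[j] for j in range(d)]
            for a in range(d):
                for b in range(d):
                    scatter[a][b] += diff[a] * diff[b]
    return scatter


def solve(a, b):
    """Solve a * x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("scatter matrix is singular; need more varied data")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def fit_fisher_lda(class_a, class_b):
    """Return (weights, threshold) separating two classes.

    weights = Sw^-1 (mean_a - mean_b); the threshold is the projection of
    the midpoint between the two class means.
    """
    ma, mb = mean_vector(class_a), mean_vector(class_b)
    sw = within_class_scatter([class_a, class_b])
    weights = solve(sw, [ma[j] - mb[j] for j in range(len(ma))])
    threshold = sum(w * (a + b) / 2 for w, a, b in zip(weights, ma, mb))
    return weights, threshold


def predict(weights, threshold, token, labels):
    """Label a token by which side of the threshold its projection falls on."""
    score = sum(w * x for w, x in zip(weights, token))
    return labels[0] if score > threshold else labels[1]


# Hypothetical tokens: [F0 mean (Hz), F0 SD (Hz), spectral center of gravity (Hz)].
# Values are invented for illustration and are not taken from the TESS.
surprise_tokens = [[310, 70, 1500], [295, 62, 1420], [320, 75, 1580], [305, 66, 1390]]
sad_tokens = [[180, 15, 900], [170, 12, 950], [190, 18, 870], [175, 14, 990]]

LABELS = ("pleasant surprise", "sad")
weights, threshold = fit_fisher_lda(surprise_tokens, sad_tokens)
print(predict(weights, threshold, [300, 65, 1450], LABELS))
```

The point of the sketch is that a single weighted combination of the three measures can separate two emotion categories. A multi-class analysis extends the same idea to several such combinations.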

Goy found that these three measures in combination created a distinct profile that classifies 96% of the speech tokens. This led her to believe that these are the cues that could be used by listeners to identify emotions in speech, and therefore could be disrupted or helped by hearing aids. Figure 2 shows how these particular cues are disrupted by certain hearing aid processing strategies. These are the same emotions now presented through a hearing aid with fast amplitude compression and a NAL prescriptive formula. You can see how much closer together the emotions are. They are no longer spanning a wide range. Using this visual, we can clearly see the effect of hearing aid processing. These emotions are much closer together, potentially leading to more difficulty identifying each of these emotions as distinct, as well as difficulty identifying them accurately.

Figure 2. Less intensity variation with NAL fast amplitude compression.

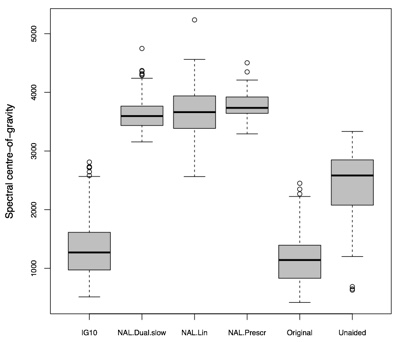

In Figure 3, we can also see more high frequency energy with NAL fittings. If we look at this spectral center of gravity domain, NAL could potentially disrupt the cues of this spectral profile that may be used by listeners.

Figure 3. More high frequency energy with NAL fitting.

Remaining Questions

Are we disrupting these emotion cues with hearing aids? Goy's research showed that older listeners with hearing loss have difficulty recognizing emotion, as compared to their normal hearing peers, pointing to an effect of hearing loss. One thing to consider about Goy's investigation of acoustic cues in the aided condition is that the individuals tested were wearing their own personal hearing aids. They came in with hearing aids that were fit by their audiologist or hearing health care provider. That calls into question whether they were receiving sufficient audibility with their existing instruments. Were they wearing hearing aids that had the appropriate coupling, the appropriate fit, appropriate prescription target and the appropriate style for their hearing loss? Finally, we have the remaining question of whether this is a cognitive issue or a peripheral issue. Is it something that we can make better with hearing aids? Or is it a higher-level issue that emerges over time with the introduction of hearing loss?

The Effect of Hearing Aids on Emotion Recognition

Next, we'll review the study from the Phonak Audiology Research Center (PARC). Similar to Goy's study, we were looking at emotion recognition using the TESS. Participants had to identify emotions in a closed set task. We aimed to answer the following questions:

- Would we get a different result than Goy et al. (2016) with hearing aid fittings that are verified? When we can ensure audibility in hearing aids that are appropriately fit, what would be the result: same, better or worse?

- Can we do anything to the hearing aid processing to make emotion recognition better?

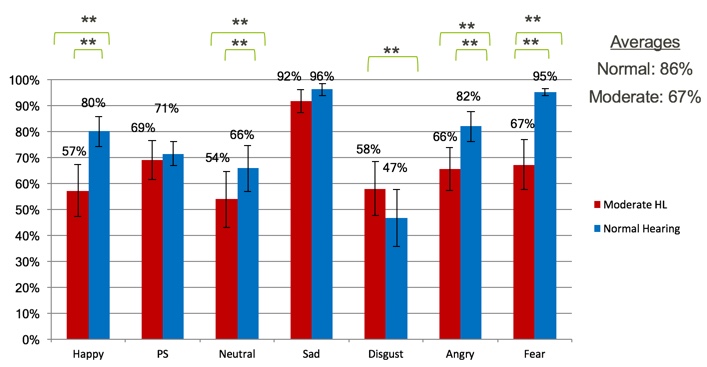

We used two groups of participants: a normal hearing group and a group with moderate hearing loss. The normal hearing group performed extremely well on the TESS, scoring the lowest percentage on the emotion disgust (78%) and the highest on sadness (99%), with an average performance of 90%. The experimental group consisted of 17 participants with moderate hearing loss, averaging 67 years of age. In Figure 4, we can see the comparison between the normal hearing group (blue) and the moderate hearing loss group (red). If we look at the average performance, normal hearing listeners had about 90% accuracy, while those with moderate hearing loss averaged 67% correct. There are numerous statistically significant differences at a P value of .01. It is evident that individuals with hearing loss exhibited significantly more difficulty in identifying emotions accurately than their normal hearing counterparts. The most difficult emotion for both groups to identify was disgust. In that case, the moderate hearing loss group did perform a little higher than the normal hearing group. Both groups performed their best in identifying sadness.

Figure 4. Unaided comparisons (normal hearing vs. moderate HL).

With regard to confusions, the normal hearing group made a small number of errors. With the moderate hearing loss group, we see the errors in the same places. For example, both groups confused neutral and disgust, with a higher percentage of errors for the moderate hearing loss group.

If we examine some of the most prominent errors (neutral/disgust), and we refer back to Goy's theory, we can see that neutral and disgust share a similar profile in these three domains:

- The mean of the fundamental frequency (F0)

- The standard deviation of the fundamental frequency (F0 SD)

- The spectral center of gravity

In the lower graph of Figure 5, neutral and disgust are clustered closely together on the spectral center of gravity. On the upper graph (the standard deviation of the fundamental frequency), neutral and disgust are also clustered together (the green and black circles). Now, we can see how some of these acoustic properties translate into confusion.

Figure 5. Combination of acoustic properties (unaided).

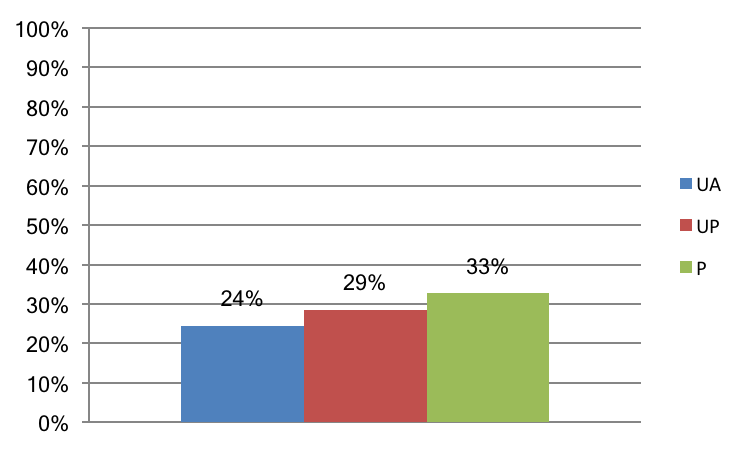

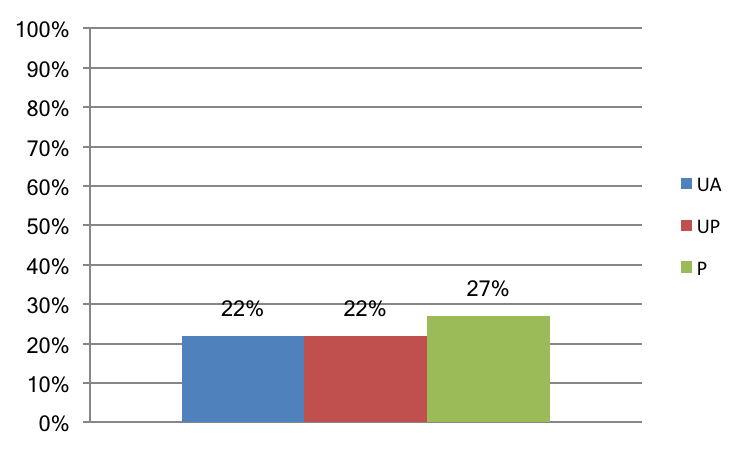

Aided Condition Results

Our next step was to fit the moderate hearing loss group with hearing aids and test them in the aided condition, as everything up to this point was unaided. We gave them two sets of hearing aids for this test. The first set of hearing aids was in a highly "processed" condition. In other words, we turned on all available sound cleaning features, we turned on frequency lowering, and we used prescribed wide dynamic range compression (WDRC). Essentially, we used all available technologies in the hearing aids. The second set of devices was in a lower, more "unprocessed" condition. We turned off all of the adaptive sound cleaning features. Anything adaptive that could be turned off, we turned off. We also turned off frequency lowering, and we switched into a linear amplification strategy for this condition. The purpose was to see whether turning off some of these adaptive features would allow us to achieve a better result, and whether emotion identification would be easier without some of the extra processing. Our intent was to compare these two extremes.

In terms of the percentage of correct responses on the TESS, normal hearing listeners exhibited a similar performance across all three conditions (unaided, using unprocessed hearing aids or processed hearing aids). We found the same trend for individuals with moderate hearing loss. The percentage of emotions correctly identified was virtually the same across the unaided, unprocessed and processed conditions. Even when we made these manipulations in the hearing aids, we did not see any difference in performance. This could mean that we did not make the proper changes in the hearing aid. It could also be possible that recognizing emotion in speech is more of a cognitive issue, where something is happening at a higher level that is not significantly impacted by changes in the hearing aid. With the Phonak study, we did find a correlation that was not revealed in the Goy study: a correlation with age. In our research, we found that as the age of participants increased, the performance on the TESS decreased.

At Phonak, we are working on distinguishing between processing that is done at a cognitive level, and that which is achieved at a more peripheral level. Our goal is to make hearing aids more attuned to cues necessary for emotion recognition.

Case Studies

Following the Phonak study, one participant returned for a routine research appointment. After the participant left, he telephoned to convey an apology to me, as he felt that he had offended me during the appointment. I never felt offended during the appointment, but his misunderstanding highlighted the purpose of our study: to ascertain the disconnect between an emotion conveyed by a speaker, and the emotion perceived by a hearing-impaired listener.

Next, I will present a few case studies and discuss what types of cues people are using for this emotion recognition task.

Are Participants Using Acoustic Cues (Pitch/Temporal)?

The first case is a gentleman who is a lifelong musician and conductor at a local orchestra (Figure 6). He plays multiple instruments. If we think about the acoustic characteristics and the prosody of someone speaking using each of these emotions, would a person who is more attuned to music and changes in melody be better at this task? As it turned out, this gentleman was not particularly great at this task, leading us to believe that there must be something else at play here, at least with this individual.

Figure 6. Lifelong musician.

Is Audibility a Factor?

Figure 7 shows a normal hearing listener who had no hearing thresholds below 35 dB. However, this person had a very difficult time on this task. At least for this individual, audibility is not an issue. It just seems to be a skill that people have.

Figure 7. Normal hearing listener.

Does Duration of Hearing Loss Make a Difference?

Finally, we had a participant who had hearing loss from the age of six (Figure 8). Although he had hearing loss for quite a long time, he was not a consistent hearing aid user. Despite his hearing impairment, this listener was stellar at this task. In all three conditions, he scored close to 100%. One might assume that a person with longer-term hearing loss and inconsistent hearing aid use would not perform this well, but he definitely had the ability to effectively do this task.

Figure 8. Long duration of hearing loss.

Next Phase of Emotions Study

In the next phase of Phonak's emotions study, we are seeking to find answers to the following research questions:

- Is there a relationship between emotion “recognition” performance and experienced emotional range?

- How do hearing aids impact emotional range?

- How closely do we approximate emotional range in the lab as compared to the real world?

In order to achieve this, we will be putting our participants through a series of visits, using a variety of outcome measures.

Visit 1: Subjective Measures

On their first visit, participants will be given the Lubben Social Network Scale (LSNS). Developed by Lubben and Gironda in 2004, this scale is used to gauge social isolation in older adults. We want to see how connected people perceive themselves to be, and relate this to their emotional dynamic range and their recognition ability. Perhaps if people are more social, and if they interact more with family members and friends, they're better at recognizing emotion.

We will also employ the Trait Emotional Intelligence Questionnaire (TEIQue), which was developed at the London Psychometric Laboratory (Petrides, 2009). This measure goes through 15 different facets of emotions, in order to assess the emotional world of each individual. We're also interested in whether or not the results of this questionnaire correlate with any of the other measures (e.g., emotion recognition).

Visit 2: Lab Measures

In their second visit, participants will complete the two tasks that we discussed previously: the IADS (to determine emotional range) and the TESS (emotional recognition). We are hoping to ascertain whether people with a more limited emotional range do not recognize emotion as easily.

Visit 3: Ecological Momentary Assessment

Finally, we will subject participants to a newer research tool called the Ecological Momentary Assessment (EMA). The EMA involves repeated sampling of subjects' current behaviors and experiences in real time, in their natural environments. The way the EMA will work in our study is that participants will be provided a cell phone that is paired to the hearing aids. They will be prompted to take a questionnaire in real time, based on the noise floor level and other considerations. They are going to be filling out real-time questionnaires of how pleasant the environment is, how loud the environment is, what they're listening to at that given point in time, to try to see if our lab measures of emotional range correspond to their emotional range in the real world. We will also try to determine if we are getting an accurate representation of their real life when we test in the lab. I think we're going to incorporate the EMA into more and more studies, so that we can have both the highly controlled lab research, as well as the real-world environment piece that brings everything together, because we know that people do not experience life in a sound booth.

Summary and Conclusion

In conclusion, hearing loss seems to correspond with lower ratings of pleasantness, as well as a more limited range of emotions, both of which correlate with greater feelings of disconnectedness and social isolation. As a result of our research at PARC, we found that hearing loss and age definitely impact emotion recognition ability. Additionally, emotion recognition seems to occur at a higher level, and may not be affected by hearing aids. In our studies, it seemed that hearing aids neither helped nor hindered emotion recognition, and audibility did not appear to be a confounding factor, although further research is needed in this area.

With regard to the relationship between emotions and hearing loss, we have a lot more work to do. I hope after participating in this presentation that you walk away with some additional information and insight to use when counseling patients with hearing loss and those who wear hearing aids. As hearing healthcare providers, we must be aware of these potential difficulties so we can better understand and serve our patient populations. We have a duty to convey these difficulties to families, to facilitate a better understanding of a more “holistic” effect of hearing loss. As a hearing aid manufacturer, Phonak will take these findings into consideration when we develop new hearing aid processing technologies.

References

Goy, H., Pichora-Fuller, M. K., Singh, G., & Russo, F. (2016). Perception of Emotional Speech by Listeners with Hearing Aids. Journal of the Canadian Acoustical Association, 44(3), 182-183.

Lubben, J., & Gironda, M. (2004). Measuring social networks and assessing their benefits. Social networks and social exclusion: Sociological and policy perspectives, 20-34.

Nummenmaa, L., Glerean, E., Hari, R., & Hietanen, J. K. (2014). Bodily maps of emotions. Proceedings of the National Academy of Sciences, 111(2), 646-651.

Petrides, K. V. (2009). Psychometric properties of the trait emotional intelligence questionnaire (TEIQue). Assessing Emotional Intelligence, 85-101. Springer US.

Picou, E. M. (2016). How hearing loss and age affect emotional responses to nonspeech sounds. Journal of Speech, Language, and Hearing Research, 59(5), 1233-1246.

Picou, E. M., Buono, G. H., & Virts, M. L. (2017). Can Hearing Aids Affect Emotional Response to Sound? The Hearing Journal, 70(5), 14-16.

Citation

Rakita, L. (2018, April). Hearing loss and emotions. AudiologyOnline, Article 22501. Retrieved from www.audiologyonline.com