From the desk of Gus Mueller

As more and more audiology journals and documents become digitally archived, many of us are realizing that we really don’t need all those floor to ceiling bookcases anymore, and stacks and stacks of old journals are “going to a new home.” As I continue to thin out my 40-year collection, there is one relic I just can’t seem to part with—my bound collection of the Maico Audiological Series.

If you’re not familiar with the Maico series, these were short, easy to read summary articles on a given topic—great for the busy clinician. Sort of like . . . 20Q at AudiologyOnline! One of the articles from my collection is considerably more tattered than the others. It’s a 1972 article by Bill Lentz, PhD, entitled, "Speech discrimination in the presence of background noise using a hearing aid with a directionally-sensitive microphone.” This article reviewed this new technology that had just been introduced by Maico and the aided speech recognition findings were pretty impressive. I received my copy of the article at a Maico workshop in Denver in 1973, just about the time I was looking for a dissertation topic, and, as they say, the rest is history.

A lot has changed with directional technology since Lentz’s 1972 article, and over the years, you’ve probably seen several summary articles about this feature. In fact, some guy named Mueller wrote a “Ten Year Review of Directional” for Hearing Instruments in 1981. I did some checking, and noticed that over the past 10-15 years, most of these directional summary articles have been written by people with names like Bentler, Ricketts and Dittberner, who strangely enough, all have a University of Iowa connection. Since we’re due for another directional summary article, I sent the 20Q Question Man to Iowa City to see what he could find.

Our Hawkeye guest at 20Q this month is Yu-Hsiang Wu, MD, PhD, who among friends and colleagues simply goes by “Wu.” With a name like Wu, it’s hard to resist a quick “Wu-Who!” Notice that this is with an exclamation point, not a question mark, because if you’ve been following the directional microphone hearing aid literature the past few years, you know Wu. And we’ll certainly give him a “woo-hoo” for his work in helping us understand directional microphone function, and the potential benefits of this technology in the real world.

Dr. Wu earned his MD with a specialty in otolaryngology in Taiwan, and worked as an otolaryngologist for several years. This led to an interest in research with the hearing impaired, which took him to the University of Iowa to pursue a Ph.D. Currently, he is an Assistant Professor in the Department of Communication Sciences and Disorders at the University of Iowa.

As you know from his presentations and publications, Wu’s research goals have been to develop accurate, sensitive, and efficient methodologies to determine success of hearing health care, including acoustic/electroacoustic measures for hearing devices and behavioral/perceptual outcome measures for human subjects following rehabilitative intervention. He’s much too young to remember the Maico Audiological Series or Lentz’s article, but you’ll want to remember the important clinical points he makes in this excellent 20Q.

Gus Mueller, Ph.D.

Contributing Editor

February 2013

To browse the complete collection of 20Q with Gus Mueller articles, please visit www.audiologyonline.com/20Q

20Q: Gaining New Insights about Directional Hearing Aid Performance

1. I keep seeing your name associated with all these articles about directional technology. What's the deal with that?

Well, for starters, take note that I've spent the last 10 years in the Hearing Aid Lab at the University of Iowa. We have a long history of directional hearing aid research here. I have been inspired by fellow Hawkeyes like Todd Ricketts and Andrew Dittberner, and, of course, Ruth Bentler. So I guess it’s not too surprising that I became interested in directional hearing aid research by learning all of the interesting facets of this technology from my predecessors. I wanted to continue their research to study the only hearing aid technology, other than FM systems, that can improve speech understanding in background noise.

2. But directional hearing aids have been around since the days we were doing the SISI and ABLB! Don't we sort of already know everything there is to know about them?

You might think so, but not really. For example, in the past few years we have been looking at some new, more accurate electroacoustic assessments of directional processing schemes, and the relationship—or discrepancy, really—between how they function one way in the laboratory and another way in the real world. Furthermore, directional processing has evolved over time, from fixed systems to active, dynamic, adaptive systems. These are factors that really haven't been researched thoroughly in the past.

3. Interesting. So where do we start?

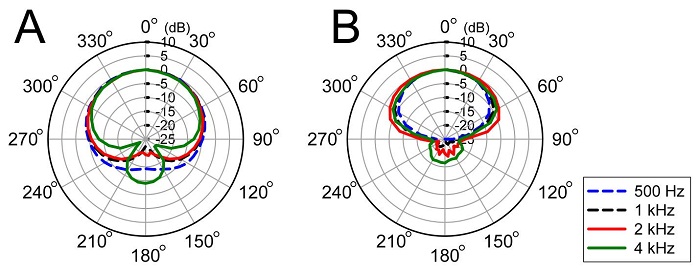

Well, first we could talk about the methods that I developed for evaluating the "goodness" of directional hearing aids (Wu & Bentler, 2007, 2009, 2011). It gets a little complicated, but I'll give you the short version. Traditionally, one useful way to evaluate directional systems is the polar plot. To obtain a polar plot, we present a signal sequentially and systematically to the hearing aid from different angles in an anechoic chamber (ANSI, 2010). The aid’s response to the signal arriving from each angle is recorded. The polar plot is a graph that shows the response of the hearing aid as a function of angle. As an example, Figure 1A shows several polar plots. In case you’re not familiar with looking at these types of patterns, the greater the distance the plot is from the center, the greater the output. As we would expect, these measures, taken in the anechoic sound field (not on the KEMAR head), have the greatest output at 0°. The polar plot of the 2 kHz band (red curve in Figure 1A) indicates that, relative to sounds arriving from 0°, the hearing aid is approximately 20 dB less sensitive for sounds arriving from 180°. The data shown in the polar plot can further be used to calculate another important metric for evaluating the goodness: the directivity index or DI (ANSI, 2010).

Figure 1. Polar plots measured from a hearing aid programmed to a fixed directional mode (A) and a directional adaptive mode (B).
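To make the directivity index mentioned above a little more concrete, here is a minimal sketch of how a DI could be computed from a polar pattern. It is not the ANSI procedure. It assumes an idealized free-field pattern that is rotationally symmetric about the 0° axis, so the DI reduces to 10·log10 of the on-axis power divided by the power averaged over the whole sphere. The function names and the first-order pattern formula `a + (1 - a)·cos(θ)` are illustrative assumptions, not taken from the studies cited here.

```python
import math


def first_order_pattern(theta_rad, a):
    """Idealized first-order sensitivity: a + (1 - a) * cos(theta).

    a = 1.0 is omnidirectional, a = 0.5 a cardioid, a = 0.25 a hypercardioid.
    (Textbook shapes used for illustration, not measured hearing aid data.)
    """
    return a + (1.0 - a) * math.cos(theta_rad)


def directivity_index_db(pattern, steps=3600):
    """DI in dB for a pattern that is rotationally symmetric about 0 degrees.

    DI = 10*log10(on-axis power / power averaged over the sphere).
    With axial symmetry the spherical average is
    0.5 * integral from 0 to pi of pattern(theta)^2 * sin(theta) d(theta).
    """
    d_theta = math.pi / steps
    integral = 0.0
    for i in range(steps):
        theta = (i + 0.5) * d_theta  # midpoint rule
        integral += pattern(theta) ** 2 * math.sin(theta) * d_theta
    mean_power = 0.5 * integral
    return 10.0 * math.log10(pattern(0.0) ** 2 / mean_power)


if __name__ == "__main__":
    shapes = [("omnidirectional", 1.0), ("cardioid", 0.5), ("hypercardioid", 0.25)]
    for name, a in shapes:
        di = directivity_index_db(lambda t, a=a: first_order_pattern(t, a))
        print(f"{name}: DI = {di:.2f} dB")
```

In this idealized setting the omnidirectional pattern comes out at about 0 dB, the cardioid at about 4.8 dB, and the hypercardioid at about 6 dB. A measured DI on a real hearing aid would differ because of head and body effects, frequency weighting, and the three-dimensional sampling the standard calls for.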

4. I’ve seen polar plots many times before. What are the new, more accurate methods you mentioned?

First let me explain the limitations of the traditional method. It only works with a fixed directional system—a time-invariant system. For newer adaptive directional systems that can steer the directivity null (the least sensitive angle) to the noise source, the traditional method would result in inaccurate polar patterns and inflated directivity indexes. Figure 1 shows the polar plots measured from the same hearing aid in a fixed mode (A) and adaptive mode (B). The reason for the big difference between the two plots is that the directivity of the adaptive system is changing during the measurement. Think of assessing the behavior of a directional system as painting the portrait of a girl. A fixed directional system can be represented by a girl who sits very still, while adaptive directional systems are more like a girl who is always moving. Using the traditional method, we simply cannot obtain a beautiful portrait for a “time-variant” girl. We need to make the time-variant girl sit still.

5. How do we make the girl sit still?

We need something to catch her attention. That is, we need to create a sound scenario (e.g., speech in front, several noise sources from the side and back). This speech and noise would “fix” the directivity of a system. Then we need a signal (we call it the “probe signal”), like the traditional method, to present to the hearing aid from different angles. We don’t want the girl to notice this probe signal. We have found that a low-level signal or a transient signal can achieve this goal (Wu & Bentler, 2007, 2009). If we can obtain the hearing aid’s response to this probe signal (i.e., the probe response), then we can construct a polar plot.

Figure 2. Much of Dr. Wu’s research over the last decade has been with his faithful companion, KEMAR.

6. Wait a minute. You're telling me you're going to measure the response of speech, noise AND a probe signal all at the same time. How can you do that?

Good observation. We do need some “magic” to separate the probe response and the responses to speech and noise. The magic we use to do this is a phase-inversion technique that was first described by Hagerman and Olofsson (2004). Although we don’t have time to get into the details of this technique, I can tell you that it works pretty well with most hearing aids to “extract” the probe response. We have created many sound scenarios in our anechoic chamber and used these new methods to measure the directivity patterns of many new directional technologies. The results are very influential when forming our hypotheses and predicting experiment results.
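For readers who want a feel for the phase-inversion idea, the sketch below shows the arithmetic under a strong simplifying assumption: the hearing aid behaves as a fixed linear system while the sound scenario holds its directivity in place. Two recordings are made, one with the probe added to the speech-and-noise scene and one with the probe inverted. Half the difference of the two outputs recovers the probe response, and half the sum recovers the response to the scene. The `toy_aid` filter and all signal values are hypothetical stand-ins, not the procedure or equipment used in the cited studies.

```python
import math


def toy_aid(signal, taps=(0.6, 0.3, 0.1)):
    """Hypothetical stand-in for a hearing aid held in a fixed state: a short FIR filter."""
    out = []
    for n in range(len(signal)):
        acc = 0.0
        for k, h in enumerate(taps):
            if n - k >= 0:
                acc += h * signal[n - k]
        out.append(acc)
    return out


def separate_by_phase_inversion(scene, probe, device):
    """Return (probe_response, scene_response) from two recordings.

    Recording 1: device(scene + probe). Recording 2: device(scene - probe).
    For a linear device, the scene cancels in the difference and the probe
    cancels in the sum.
    """
    plus = device([s + p for s, p in zip(scene, probe)])
    minus = device([s - p for s, p in zip(scene, probe)])
    probe_response = [(a - b) / 2.0 for a, b in zip(plus, minus)]
    scene_response = [(a + b) / 2.0 for a, b in zip(plus, minus)]
    return probe_response, scene_response


if __name__ == "__main__":
    n = 200
    scene = [math.sin(2 * math.pi * 5 * i / n) for i in range(n)]           # "speech + noise"
    probe = [0.01 * math.sin(2 * math.pi * 23 * i / n) for i in range(n)]   # low-level probe
    probe_resp, _ = separate_by_phase_inversion(scene, probe, toy_aid)
    worst = max(abs(a - b) for a, b in zip(probe_resp, toy_aid(probe)))
    print(f"largest error in recovered probe response: {worst:.2e}")
```

The separation is only as good as the linearity assumption. If the probe itself changes how the device processes the scene, the two recordings no longer differ by the probe alone, which is why a low-level or transient probe matters.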

7. So how does this apply to my clinic? I don’t have access to an anechoic chamber!

Although anechoic chambers are not readily accessible, clinical hearing aid test boxes are, and clinicians are encouraged to measure the directivity of a directional hearing aid to ensure that it meets reasonable and expected quality standards (American Academy of Audiology, 2006; American Speech-Language-Hearing Association, 1998). This verification is important because the directivity of directional systems could be easily eliminated if the microphone ports are clogged by debris or dust (Wu & Bentler, 2012a). Currently, at least one marketed hearing aid analyzer uses the traditional method (i.e., presenting a stimulus sequentially to the hearing aid from different angles) to measure the polar plot. In order to obtain an accurate result from this system, you must set the hearing aid to the fixed directional mode. True, this isn’t as elaborate as the method I have described, but it will give you good information for making clinical decisions.

8. There certainly are times when the patients say their hearing aids don’t provide benefit in background noise. Should I be conducting test box directional measures on these hearing aids?

I think so. It’s a good place to start. While directional systems cannot restore normal hearing, it could be that the microphone ports on their hearing aids have become clogged over time, causing dysfunction of the directional system. When the microphone port is clogged, the directivity could be neutralized (i.e., become an omnidirectional pattern), or even worse, be reversed (i.e., the directional microphone is more sensitive to sound arriving from behind the listener!) (Wu & Bentler, 2012a). Therefore, it is crucial for clinicians to verify and follow up on the functionality of directional systems. I’d recommend keeping a “reference curve” of the directivity measure in the patient’s file (obtained when the hearing aid was fitted), so that you will know if performance has decreased.

However, with all that said, even with well-functioning directional hearing aids, hearing aid users still frequently report limited directional benefit in the real world. In case you are not familiar with the term, directional benefit is the benefit provided by directional processing relative to omnidirectional processing in the same hearing aid.

9. Wait, earlier you said that directional processing is the only hearing aid technology that can improve speech understanding in noise. Now you're saying that it may only provide limited benefit? Which one is correct?

Sorry, I should clarify. In fact, both of my statements are correct. The key point is the difference between the laboratory and the real world. Specifically, laboratory studies have consistently shown that directional processing can improve speech understanding in noise relative to omnidirectional processing (e.g., Bentler, Palmer, & Dittberner, 2004; Bentler, Palmer, & Mueller, 2006; Ricketts & Henry, 2002; Valente, Fabry, & Potts, 1995). However, significant directional benefit has rarely been observed in field trials (e.g., Cord, Surr, Walden, & Dyrlund, 2004; Cord, Surr, Walden, & Olson, 2002; Gnewikow, Ricketts, Bratt, & Mutchler, 2009; Humes, Ahlstrom, Bratt, & Peek, 2009; Palmer, Bentler, & Mueller, 2006; Surr, Walden, Cord, & Olson, 2002; Walden et al., 2000; Wu & Bentler, 2010b). In other words, there is a discrepancy in the directional benefit observed between laboratory and real-world settings.

My favorite example of this laboratory-field discrepancy is the large-scale double-blind study conducted by Gnewikow and his colleagues (2009). They tested 94 hearing aid users in both the laboratory and the real world. The laboratory speech recognition tests indicated that listeners’ aided performance with the directional mode was better than that with the omnidirectional mode by 2 to 4 dB (Hearing in Noise Test; HINT) or by 10 to 20% (Connected Speech Test; CST), depending on the degree of hearing loss. However, these listeners did not report a clear advantage of the directional mode over the omnidirectional mode, as measured by two self-report questionnaires (Profile of Hearing Aid Benefit [PHAB] and Satisfaction with Amplification in Daily Life [SADL; Cox & Alexander, 1999]) after using each microphone mode for one month in the real world.

10. Wow! What do you think causes the discrepancy?

There are many reasons. One of them is that most questionnaires do not have sufficient sensitivity to detect directional benefit in the real world (Ricketts, Henry, & Gnewikow, 2003). For example, directional benefit is highly affected by the location/direction of speech and noise (Ricketts, 2000). For most directional technologies, directional processing could improve speech understanding when speech is from in front of the listener, while having a detrimental effect when speech is from the back. Now let’s take a look at a condition described in the Abbreviated Profile of Hearing Aid Benefit (APHAB) questionnaire: “When I am in a crowded grocery store, talking with the cashier, I can follow the conversation.” Because the locations of the talker and noises are not specified, directional processing actually could have a positive, neutral, or even negative effect on speech understanding in this listening situation. If you ask a listener to report his/her average perception in this kind of listening situation after a month-long field trial, you should not be surprised if no clear directional benefit is observed. In short, because directional benefit is very situational, most questionnaires will not be able to demonstrate it unless they describe the listening situation clearly.

11. So I can tell my patients to relax? The benefit is big and is there; we just can’t show it using questionnaires, right?

Well, not really. There also is evidence indicating that directional benefit observed in the laboratory may overestimate the benefit that occurs in the real world. My favorite example to illustrate this point is an interesting study conducted by Walden and his colleagues (Walden et al., 2005). In order to investigate the effect of environmental signal-to-noise ratio (SNR) on directional benefit, Walden et al. measured speech recognition performance with the directional and omnidirectional modes at a range of SNRs from -15 to +15 dB. They found that the directional benefit varied greatly across SNRs. The benefit was the largest (around 30% in speech recognition score) when the SNR was between -6 and 0 dB. Outside this SNR range, directional benefit decreased considerably. The reason for the reduced benefit is a ceiling or floor effect. Specifically, both modes yield good speech recognition when the SNR is high (ceiling effect), and neither mode does when the SNR is low (floor effect). This research clearly indicated that directional benefit is situation-dependent.
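The ceiling and floor effects described above can be illustrated with a toy model. The sketch below assumes that speech recognition follows a logistic function of SNR and that the directional mode acts like a fixed improvement in effective SNR. The midpoint, slope, and 4 dB advantage are hypothetical values chosen only so the curve has a plausible shape. They are not Walden et al.'s data or a fit to them.

```python
import math


def percent_correct(snr_db, midpoint_db=-1.0, slope_per_db=0.35):
    """Hypothetical omnidirectional psychometric function (logistic), in percent."""
    return 100.0 / (1.0 + math.exp(-slope_per_db * (snr_db - midpoint_db)))


def directional_benefit(snr_db, snr_advantage_db=4.0):
    """Benefit in percentage points, modeling directional mode as a fixed SNR gain."""
    return percent_correct(snr_db + snr_advantage_db) - percent_correct(snr_db)


if __name__ == "__main__":
    for snr in range(-15, 16, 3):
        omni = percent_correct(snr)
        benefit = directional_benefit(snr)
        print(f"SNR {snr:+3d} dB: omni {omni:5.1f}%, directional benefit {benefit:5.1f} points")
```

With these made-up parameters the benefit peaks at a few dB below 0 dB SNR and shrinks toward zero at both ends of the range, even though the modeled SNR advantage of the directional mode never changes. The size of the measured benefit depends on where on the curve the listener is tested.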

12. How would this cause the discrepancy between the lab and real world? I still don’t get it.

To understand this, you first have to know the difference between efficacy and effectiveness studies. According to Cox (2005), an efficacy study examines whether a given treatment (in this case hearing aid technology) provides the desired benefit under optimal conditions: does it do what it was designed to do? An effectiveness study asks whether the treatment does what it was designed to do under typical conditions, which for hearing aid research, should include a real-world field trial. Therefore, when researchers conduct a laboratory efficacy study to evaluate directional technologies, they typically test subjects at the “optimal” SNR (for example, -3 dB SNR, based on Walden’s data) so that the directional benefit can be more readily observed. However, the large directional benefit (for example, 30%, based on Walden’s data) observed by efficacy studies may not be realized in the real world if the optimal SNR used in the laboratory rarely occurs in hearing aid users’ everyday environments. For example, if a hearing aid user’s everyday environments are not terribly noisy (e.g., better than +3 dB most of the time), this user is unlikely to obtain the large directional benefit in the real world. In short, if the optimal conditions used in the laboratory do not match the typical conditions of listeners’ everyday lives, the laboratory-field discrepancy occurs. I should also mention that the speech test that has been most frequently used with directional hearing aid research is the HINT. Because this test is adaptive, it drives the SNR to the point where directional benefit is most likely to occur, which may not be a common listening condition for the patient in the real world.
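The point about adaptive testing can be sketched with a simple one-up, one-down track. The code below is only loosely modeled on an adaptive sentence test and is not the HINT scoring procedure. It assumes an idealized listener who repeats a sentence correctly whenever the presented SNR is at or above a fixed threshold, and the thresholds, step size, and trial counts are hypothetical.

```python
def run_adaptive_track(threshold_db, n_trials=20, start_snr_db=0.0, step_db=2.0):
    """Return the SNRs presented on each trial of a one-up, one-down track.

    Idealized listener (an assumption): correct if and only if SNR >= threshold_db.
    After a correct response the next sentence is made harder (lower SNR);
    after an error it is made easier (higher SNR).
    """
    snr = start_snr_db
    presented = []
    for _ in range(n_trials):
        presented.append(snr)
        correct = snr >= threshold_db
        snr += -step_db if correct else step_db
    return presented


def estimate_threshold(presented, skip=4):
    """Average the presented SNRs after discarding the first few approach trials."""
    tail = presented[skip:]
    return sum(tail) / len(tail)


if __name__ == "__main__":
    omni = run_adaptive_track(threshold_db=-2.5)   # hypothetical omnidirectional listener
    direc = run_adaptive_track(threshold_db=-6.5)  # same listener with a 4 dB advantage
    print("omnidirectional track:", omni)
    print("directional track:    ", direc)
    print("estimated benefit (dB):", estimate_threshold(omni) - estimate_threshold(direc))
```

Whatever SNR the track starts at, it settles within one step of each mode's own threshold and stays there. The test therefore always compares the two modes on the steep part of the performance curve, regardless of the SNRs the listener actually meets in daily life.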

13. Hmmm, it sounds like the benefit of directional technology is affected by lifestyle?

It is very likely that this is the case. In one of our studies (Wu, 2010), we investigated whether age could affect obtained and perceived directional benefit. Our participants were 24 hearing-impaired adults who ranged in age from 36 to 79 years and were fitted with hearing aids equipped with both omnidirectional and directional microphone programs. The directional benefit for each participant was assessed in the laboratory using the HINT, and then the participants were sent into the field for four weeks, wearing the hearing aids. During the field trial, a paired-comparison technique (participants switched between both microphone settings in any given listening situation) was used and they recorded their perceptions using a paper and pencil journal. The laboratory HINT results indicated that there was no effect of age on directional benefit. The field data, however, showed a significant association between older age and lower perceived directional benefit. Furthermore, the disparity between the laboratory and real-world results was greater for older than younger listeners. One reason that might explain this age-dependent discrepancy is that older listeners have different social lifestyles than younger listeners. Specifically, our data have shown that older listeners who are less active tend to encounter quieter listening situations than younger listeners (Wu & Bentler, 2012b). In a quiet listening situation (e.g., an SNR of +10 dB), they have already reached their maximum speech recognition, and thus the directional hearing aid will not further improve performance. In contrast, the typical SNRs of younger listeners’ environments may be closer to the SNRs used in laboratory testing, resulting in less of a discrepancy between the laboratory and real world for these listeners.

14. You keep talking about benefit. But today’s patients don’t really have the chance to assess the benefit per se, as most all directional hearing aids automatically switch to directional. Why are you concerned with benefit?

I think I see what you’re getting at. It isn’t like the old days when the patients would switch back and forth between programs themselves and then tell us whether speech understanding got better (like we had them do in the study that I just mentioned). I guess what you’re asking is why don’t we just measure absolute performance for directional processing, and forget about benefit? There are several reasons for that, but I think the main reason is generalizability. Let’s say we have two hearing aid models, A and B. If we want to know whether model A is better than model B (e.g., product evaluation), we will treat the hearing aid as a whole and measure the listener’s absolute performance with each model. Although the result (let’s say A is better than B) could help clinicians to select the better model for patients, it does not tell us why model A is better than B. This is because the design/setting of many hearing aid components, including channel numbers, chip, and bandwidth, could affect absolute performance. Therefore, if now we have a third model, C, we will not be able to use the result of A better than B to know if A is better than C; the generalizability is limited. In contrast, because the benefit measure eliminates the effect of hearing aid components other than the one under investigation, the generalizability is better. For example, if we know a directional technology provides benefit on model A, this technology should provide similar benefit when it is implemented on models B and C. Therefore, although both absolute performance and benefit are important, researchers are more concerned about benefit.

15. OK. I think that makes sense. Now let’s get back to SNR. So is the SNR of the real-world environment the only thorny issue?

No, nothing is that simple. As I said, directional benefit is very situational. Therefore, if laboratory testing does not include all factors that could impact directional benefit, laboratory data will not predict real-world results. One factor that is common in the real world, but suspiciously absent in many laboratory studies, is visual cues.

It’s maybe something you haven’t thought much about, but let me explain why the effect of visual cues, or lipreading, is closely related to directional benefit. In most noisy situations where directional technology can work effectively (i.e., the talker is in front of and close to the listener), visual cues are almost always available. By utilizing visual cues, the level of a listener’s speech recognition performance can increase substantially (Hawkins, Montgomery, Mueller, & Sedge, 1988; MacLeod & Summerfield, 1987; Sumby & Pollack, 1954). Now imagine that you are a hearing aid user and you are in a noisy restaurant. You wish to take advantage of your directional technology, so you sit in front of your friend. Because you want to know to what extent this technology could help you, you switch between directional and omnidirectional modes during the conversation. Chances are, you will find out that your speech understanding is not very different between the two microphone modes. This is because, with the help of visual cues, your speech understanding with the omnidirectional mode has already approached your inherent limitation of the speech recognition ability (ceiling). In this case, you will not perceive much directional benefit when switching from omnidirectional to directional mode. Please note that you may still have a lot of difficulty in this face-to-face conversation because your ceiling performance may not be 100% correct, but rather your maximum attainable performance. If you recall the Walden et al. study that I mentioned before, you will remember that the effect of visual cues is identical to the above-mentioned effect of high SNR on directional benefit.

16. Interesting. I hadn’t really thought about this interaction before. Does this effect vary from person to person?

To some extent, yes. Let me tell you about another study we conducted. We measured directional benefit at several SNRs with and without visual cues (Wu & Bentler, 2010a, b). We found that listeners obtained significantly less directional benefit in the audio-visual condition than in the auditory-only condition at most SNRs. For SNRs higher than -2 dB, the directional benefit never exceeded 10% in audio-visual listening. More importantly, the optimal SNR for directional benefit in audio-visual listening was below (poorer than) -6 dB, which is not commonly experienced in everyday listening situations. We also found that listeners who had better lipreading skills tended to obtain less directional benefit. We then compared the laboratory testing data to field trial data. The results revealed that, across participants, laboratory directional benefit measured in the audio-visual condition could predict the real-world result, while the benefit measured in the auditory-only condition could not.

17. This is starting to get depressing. From what you have said, it sounds like directional technology isn’t all that beneficial?

Don’t get me wrong. I am not saying that directional technologies provide no benefit in real-world audio-visual listening. They may still provide benefit, but the benefit is much smaller than what we observe in most laboratory testing (i.e., without visual cues). If you consider that listeners may not be able to perceive this small benefit, and that most questionnaires are not sensitive enough to detect directional benefit, it is not surprising that there is a discrepancy between the laboratory and the real world.

18. Are there any other factors that are different from the lab to the real world?

There probably are several, but one that immediately comes to mind is the hearing aid user’s voice. Let me use one of our recent studies to explain this. Traditional directional systems use polar patterns which are most sensitive in front of the listener. Recently, new algorithms can automatically employ backward-facing polar patterns when speech arrives from behind the listener. In an efficacy study designed to evaluate this new algorithm, we tested subjects in a speech-180°/noise-0° configuration using the HINT. Our initial results indicated that the HINT score obtained with the new algorithm was not significantly different from that with omnidirectional processing. This result was unexpected and was not consistent with electroacoustic measure results (obtained by the method I mentioned in the beginning). After investigating, we found that the non-significant result was an effect of the listeners’ voices. Specifically, we found that the new algorithm would employ the backward-facing polar pattern when the HINT sentence was presented from 180°. However, when listeners started repeating the HINT sentences, the algorithm responded to listeners’ own voices and adapted to an omnidirectional directivity pattern. When the next sentence was presented, the algorithm did not switch fast enough to the backward-facing pattern. As a result, listeners were listening via the omnidirectional pattern, instead of the backward-facing pattern. In a follow-up study, we modified the HINT by presenting a carrier phrase from 180° before each HINT sentence. Because the carrier phrase triggered the algorithm to employ the backward-facing pattern before the HINT sentences were presented, significant benefit was observed with the new algorithm.

19. I see the problem, but how would this cause the disparity between the lab and the real world?

This, again, is related to how efficacy studies evaluate hearing technologies. Because the research question of our efficacy study was “Is the new algorithm better than the old technology in the optimal condition,” we chose to report the results of the follow-up study, wherein the listeners’ voice did not affect the benefit of the new algorithm (Chalupper, Wu, & Weber, 2011). This reported benefit may be realized in the real-world scenario wherein hearing aid users simply listen to speech without responding—a scenario that is close to the test condition used in the follow-up study. However, according to the result of the original study, it is likely that the new algorithm will only provide limited benefit in the real-world scenario wherein both conversational partners are speaking back and forth fairly rapidly. Therefore, if listeners in a field trial are asked to summarize their perception regarding the new algorithm, they may report a benefit that is much smaller than that reported by the laboratory efficacy study.

20. Got it. You can bet I’ll use a lot of this information counseling my patients—and by the way, I’m still going to fit most all of them with directional technology! Do you have any final words for us?

To summarize, using traditional methods to measure the effects of modern hearing aid directional technologies is a bit like trying to paint a portrait of a subject that is in constant motion. When conducting electroacoustic measures, we need to force the technology to sit still (switch to the fixed directional mode) or we need to use new methods to catch its attention. When evaluating these technologies perceptually, we need to keep in mind that their effect is highly situational. This situational effect is hard to detect with traditional questionnaires, which typically collect average perception data and do not provide detailed information about the listening situation. Because the effect is dependent on the situation, the result obtained from one laboratory test condition should only be generalized to real-world conditions that share nearly identical characteristics. Therefore, clinicians and researchers must exercise caution when generalizing laboratory efficacy study results to real-world effectiveness. To minimize the laboratory-field discrepancy, it is necessary to develop laboratory test protocols/environments that can better represent/simulate the real world, and to develop field assessment tools that have sufficient sensitivity to detect the situation-dependent effect. The bottom line is this: when a laboratory efficacy study suggests that a new hearing technology is better than older technologies, don’t get too excited. This benefit may not be realized in the real world. On the other hand, when a field trial says that a new technology is not different from the older technology, don’t be too frustrated. The benefit might be present in the real world, but our current field assessment tools might not be sensitive enough to detect it.

Acknowledgement

The author thanks collaborators Ruth Bentler and Elizabeth Stangl for helpful conversations about hearing aid outcomes. The author also thanks Elizabeth Stangl for helping prepare this article. Preparation of this article was supported by the National Institutes of Health (1R03DC012551-01) and Department of Education/National Institute on Disability and Rehabilitation Research (DoEd-NIDRR 84.133E-1).

References

American Academy of Audiology. (2006). Guidelines for the audiologic management of adult hearing impairment. Retrieved January 28, 2013, from https://www.audiology.org/resources/documentlibrary/Documents/haguidelines.pdf

American Speech-Language-Hearing Association. (1998). Guidelines for hearing aid fitting for adults. Retrieved January 28, 2013, from https://www.asha.org/docs/pdf/GL1998-00012.pdf

ANSI. (2010). Method of measurement of performance characteristics of hearing aids under simulated in-situ working conditions (ANSI S3.35). New York: American National Standards Institute.

Bentler, R. A., Palmer, C., & Dittberner, A. B. (2004). Hearing-in-noise: Comparison of listeners with normal and (aided) impaired hearing. Journal of the American Academy of Audiology, 15(3), 216-225.

Bentler, R. A., Palmer, C., & Mueller, H. G. (2006). Evaluation of a second-order directional microphone hearing aid: I. Speech perception outcomes. Journal of the American Academy of Audiology, 17(3), 179-189.

Chalupper, J., Wu, Y. H., & Weber, J. (2011). New algorithm automatically adjusts directional system for special situations. Hearing Journal, 64(1), 26-33.

Cord, M. T., Surr, R. K., Walden, B. E., & Dyrlund, O. (2004). Relationship between laboratory measures of directional advantage and everyday success with directional microphone hearing aids. Journal of the American Academy of Audiology, 15(5), 353-364.

Cord, M. T., Surr, R. K., Walden, B. E., & Olson, L. (2002). Performance of directional microphone hearing aids in everyday life. Journal of the American Academy of Audiology, 13(6), 295-307.

Cox, R. M. (2005). Evidence-based practice in provision of amplification. Journal of the American Academy of Audiology, 16(7), 419-438.

Cox, R. M., & Alexander, G. C. (1999). Measuring satisfaction with amplification in daily life: The SADL scale. Ear and Hearing, 20(4), 306-320.

Gnewikow, D., Ricketts, T., Bratt, G. W., & Mutchler, L. C. (2009). Real-world benefit from directional microphone hearing aids. Journal of Rehabilitation Research & Development, 46(5), 603-618.

Hagerman, B., & Olofsson, A. (2004). A method to measure the effect of noise reduction algorithms using simultaneous speech and noise. Acta Acustica united with Acustica, 90(2), 356-361.

Hawkins, D. B., Montgomery, A. A., Mueller, H. G., & Sedge, R. (1988). Assessment of speech intelligibility by hearing impaired listeners. In B. Berglund (Ed.), Noise as a public health problem: Proceedings of the 5th International Congress on Noise as a Public Health Problem (pp. 241-246). Stockholm: Swedish Council for Building Research.

Humes, L. E., Ahlstrom, J. B., Bratt, G. W., & Peek, B. F. (2009). Studies of hearing aid outcome measures in older adults: A comparison of technologies and an examination of individual differences. Seminars in Hearing, 30(2), 112-128.

MacLeod, A., & Summerfield, Q. (1987). Quantifying the contribution of vision to speech perception in noise. British Journal of Audiology, 21(2), 131-141.

Palmer, C., Bentler, R. A., & Mueller, H. G. (2006). Evaluation of a second-order directional microphone hearing aid: II. Self-report outcomes. Journal of the American Academy of Audiology, 17(3), 190-201.

Ricketts, T. (2000). Impact of noise source configuration on directional hearing aid benefit and performance. Ear and Hearing, 21(3), 194-205.

Ricketts, T., & Henry, P. (2002). Evaluation of an adaptive, directional-microphone hearing aid. International Journal of Audiology, 41(2), 100-112.

Ricketts, T., Henry, P., & Gnewikow, D. (2003). Full time directional versus user selectable microphone modes in hearing aids. Ear and Hearing, 24(5), 424-439.

Sumby, W. H., & Pollack, I. (1954). Visual contribution to speech intelligibility in noise. Journal of the Acoustical Society of America, 26(2), 212-215.

Surr, R. K., Walden, B. E., Cord, M. T., & Olson, L. (2002). Influence of environmental factors on hearing aid microphone preference. Journal of the American Academy of Audiology, 13(6), 308-322.

Valente, M., Fabry, D., & Potts, L. G. (1995). Recognition of speech in noise with hearing aids using dual microphones. Journal of the American Academy of Audiology, 6(6), 440-449.

Walden, B. E., Surr, R. K., Cord, M. T., et al. (2000). Comparison of benefits provided by different hearing aid technologies. Journal of the American Academy of Audiology, 11(10), 540-560.

Walden, B. E., Surr, R. K., Grant, K. W., et al. (2005). Effect of signal-to-noise ratio on directional microphone benefit and preference. Journal of the American Academy of Audiology, 16(9), 662-676.

Wu, Y. H. (2010). Effect of age on directional microphone hearing aid benefit and preference. Journal of the American Academy of Audiology, 21(2), 78-89.

Wu, Y. H., & Bentler, R. A. (2007). Using a signal cancellation technique to assess adaptive directivity of hearing aids. Journal of the Acoustical Society of America, 122(1), 496-511.

Wu, Y. H., & Bentler, R. A. (2009). Using a signal cancellation technique involving impulse response to assess directivity of hearing aids. Journal of the Acoustical Society of America, 126(6), 3214-3226.

Wu, Y. H., & Bentler, R. A. (2010a). Impact of visual cues on directional benefit and preference: Part I-laboratory tests. Ear and Hearing, 31(1), 22-34.

Wu, Y. H., & Bentler, R. A. (2010b). Impact of visual cues on directional benefit and preference: Part II-field tests. Ear and Hearing, 31(1), 35-46.

Wu, Y. H., & Bentler, R. A. (2011). A method to measure hearing aid directivity index and polar pattern in small and reverberant enclosures. International Journal of Audiology, 50, 405-416.

Wu, Y. H., & Bentler, R. A. (2012a). Clinical measures of hearing aid directivity: Assumption, accuracy, and reliability. Ear and Hearing, 33, 44-56.

Wu, Y. H., & Bentler, R. A. (2012b). Do older adults have social lifestyles that place fewer demands on hearing? Journal of the American Academy of Audiology, 23, 697-711.

Cite this content as:

Wu, Y. (2013, February). 20Q: Gaining new insights about directional hearing aid performance. AudiologyOnline, Article #11617. Retrieved from https://www.audiologyonline.com/