Since the introduction of the directional hearing aid over 40 years ago, this technology has proven to be one of the most successful solutions for improving speech understanding in background noise. Well-performing, bilaterally fitted directional instruments can improve the signal-to-noise ratio (SNR) by 6 dB or more (Powers and Beilin, 2013). This degree of improvement is substantial, and often determines whether a patient can follow a conversation. Moreover, the benefit is present for individuals with a wide range of hearing losses, audiometric configurations and listening needs.

Historically, directional amplification has been designed so that maximum amplification is applied to signals from the front of the user, with maximum attenuation applied to signals originating from the back. This of course makes sense, as in most listening situations the wearer faces the speaker of interest. Even when a conversation originates from a location other than the front, most listeners will turn their head to face the talker. In this position, the fundamental design of directional technology is working at or near its maximum efficiency.

So while we would expect an SNR improvement using traditional directional microphone technology for most listening situations, there are some cases when the wearer is unable to face the speaker. One such commonplace situation is when the hearing aid user is driving a car and a passenger in the back seat is speaking. Here, the noise comes from the sides and front while speech comes from the back, yet the hearing aid user cannot turn to face the speaker. In fact, in this situation we would predict that traditional directional technology would actually perform worse than omnidirectional. Similar communication situations might occur in group events, at large gatherings, when the wearer is in a wheelchair, or when having a conversation while walking.

Directional Steering

An ideal microphone system should thus be able to steer maximum directivity toward speech, regardless of the direction from which it originates, even if it originates from behind the listener. To respond to this technology need, in 2010 Siemens developed a new “full-directional” algorithm, termed SpeechFocus, for steering directionality. The SpeechFocus algorithm addressed the limitations of traditional directional microphones by adding further polar patterns as options for certain listening environments. In addition to offering all the functionalities of an adaptive directional microphone, when necessary SpeechFocus can automatically suppress noise coming from the front of the wearer and focus on speech coming from a different direction, such as from behind. The result is a polar pattern referred to as reverse-cardioid or anti-cardioid. The algorithm continuously scans sounds in the listening environment for speech patterns. When it detects speech, it selects the directivity pattern most effective for focusing on that dominant speech source, which includes selecting an omnidirectional pattern if noise is not present at a sufficiently high level.
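To make the polar-pattern terminology concrete, the following is a minimal sketch of idealized first-order directional patterns, using the textbook form r(θ) = a + (1 − a)·cos θ. It is not the SpeechFocus implementation: the coefficient values are the standard free-field ones, the function names are illustrative, and a hearing aid worn on the head will deviate from these ideal shapes.

```python
import math

# Idealized first-order pattern: r(theta) = a + (1 - a) * cos(theta),
# with theta measured from the front (0 degrees). Textbook coefficients,
# not values taken from any hearing aid.
PATTERN_COEFFS = {
    "omnidirectional": 1.0,
    "cardioid": 0.5,
    "hypercardioid": 0.25,
}

def sensitivity(a, theta_deg):
    """Magnitude of the first-order response at angle theta_deg."""
    return abs(a + (1.0 - a) * math.cos(math.radians(theta_deg)))

def anti_cardioid(theta_deg):
    """A cardioid mirrored front to back: null at 0 degrees, maximum at 180."""
    return sensitivity(0.5, theta_deg + 180.0)

def to_db(x, floor_db=-60.0):
    """Convert a linear magnitude to dB, clamped at floor_db for nulls."""
    return max(floor_db, 20.0 * math.log10(x)) if x > 0 else floor_db

for angle in (0, 90, 180):
    print(angle,
          round(to_db(sensitivity(PATTERN_COEFFS["hypercardioid"], angle)), 1),
          round(to_db(anti_cardioid(angle)), 1))
```

In this idealized picture the hypercardioid attenuates a talker at 180° by about 6 dB while passing frontal noise unattenuated, whereas the anti-cardioid does the opposite, which is the situation described for the talker-in-back condition.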

Early Research

Shortly after the introduction of the SpeechFocus algorithm, an efficacy study was conducted by Mueller, Weber and Bellanova (2011). In this research, hearing-impaired participants were fitted bilaterally and tested using three different hearing aid settings: 1) fixed omnidirectional; 2) automatic directional without SpeechFocus enabled (which results in a hypercardioid pattern for a typical diffuse noise field); and 3) automatic directional with SpeechFocus enabled, which allowed for automatic switching to the SpeechFocus (anti-cardioid) pattern based on the signal classification system. When enabled in the software, this anti-cardioid polar pattern was designed to activate automatically when three conditions existed simultaneously:

- Speech and noise both present in the listening environment

- Speech originating from behind the listener, and

- An overall signal level of ~65 dB SPL or greater
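The three activation conditions listed above can be read as a simple conjunction. The sketch below expresses that rule under stated assumptions: the `SceneEstimate` fields are hypothetical classifier outputs, the rear-hemisphere definition of "behind" is an assumption, and the 65 dB SPL figure is the approximate value quoted in the text. The actual signal classification system is not described at this level of detail.

```python
from dataclasses import dataclass

LEVEL_THRESHOLD_DB_SPL = 65.0  # approximate threshold quoted in the text

@dataclass
class SceneEstimate:
    # Hypothetical classifier outputs; the names are illustrative only.
    speech_present: bool
    noise_present: bool
    speech_azimuth_deg: float  # 0 = front, 180 = directly behind
    level_db_spl: float

def speech_from_behind(azimuth_deg, half_width_deg=90.0):
    """True if the azimuth lies in the rear hemisphere (assumed definition)."""
    offset = abs((azimuth_deg % 360.0) - 180.0)
    return offset < half_width_deg

def anti_cardioid_enabled(scene):
    """All three conditions must hold at the same time."""
    return (scene.speech_present
            and scene.noise_present
            and speech_from_behind(scene.speech_azimuth_deg)
            and scene.level_db_spl >= LEVEL_THRESHOLD_DB_SPL)

# Talker behind the listener, noise present, 72 dB SPL overall level.
print(anti_cardioid_enabled(SceneEstimate(True, True, 180.0, 72.0)))
```

If any one condition fails (for example, speech from the front, or a quiet room), the rule returns False and the system would fall back to its other directional or omnidirectional choices.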

The speech material used by Mueller et al. (2011) was the Hearing In Noise Test (HINT), which was conducted in the soundfield with the participants seated and aided bilaterally. All testing was carried out with the HINT sentences presented from the loudspeaker at 180° azimuth and the HINT noise presented from 0° azimuth. The noise was presented at a constant level of 72 dBA; the level of the sentences was adaptive (2-dB steps). The authors conducted the HINT measures for the three different microphone modes under two conditions. In Condition 1, the participants responded to the sentences in the conventional test manner. In Condition 2, while repeating back the HINT sentences, the participants simultaneously performed a second task. The task consisted of watching a video monitor directly in front of them and using a hand control to drive a car on a road in a computer video game. Performance on the driving task in Condition 2 was not scored; it was simply included as a distraction.
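The adaptive presentation described above can be illustrated with a simplified up-down track. This is a sketch only, assuming a plain rule in which the sentence level drops by one step after a correct repetition and rises by one step after an incorrect one; the published HINT protocol has additional rules that are not reproduced here, and the response sequence is invented for illustration.

```python
def adaptive_levels(responses, start_level_db, step_db=2.0):
    """Return the presentation level used for each sentence.

    After a correct repetition the next sentence is presented step_db
    lower; after an incorrect one it is presented step_db higher.
    """
    levels = []
    level = start_level_db
    for correct in responses:
        levels.append(level)
        level += -step_db if correct else step_db
    return levels

def threshold_snr(levels, noise_level_db):
    """Mean sentence level minus the fixed noise level, in dB."""
    return sum(levels) / len(levels) - noise_level_db

# Invented response sequence (True = sentence repeated correctly).
responses = [True, True, False, True, False, False]
levels = adaptive_levels(responses, start_level_db=72.0)
print(levels)
print(round(threshold_snr(levels, noise_level_db=72.0), 2))
```

A lower (more negative) threshold SNR means the listener tolerated more noise, so a microphone mode that lowers this value by 5 dB has improved performance by 5 dB.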

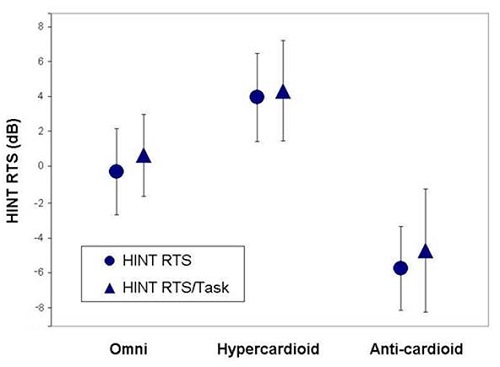

The results of the Mueller et al. (2011) research are shown in Figure 1.

Figure 1. The mean HINT reception threshold for sentences (RTS) for three different hearing aid conditions: omnidirectional, directional (hypercardioid pattern) and directional (anti-cardioid pattern). Performance is shown for the standard HINT procedure, and also when participants were performing a driving task during the speech recognition measure (adapted from Mueller et al., 2011).

First, observe that performing the driving task had little effect on the participants’ sentence recognition for any of the three hearing aid settings. What is clear, however, is the difference in performance among the three settings. Note that when the SpeechFocus algorithm was enabled, HINT scores improved by over 5 dB relative to omnidirectional. Also observe that when the hearing aid was allowed to switch to traditional directional (as some products will do in this listening environment), HINT scores were nearly 10 dB poorer than with SpeechFocus. The authors report that when individual HINT RTS scores were compared for the SpeechFocus versus omnidirectional microphone modes, all 21 participants showed an advantage for the SpeechFocus setting, and for all but one the advantage was 2.6 dB or larger. Moreover, 81 percent had a benefit of at least 4 dB, and three participants had a SpeechFocus benefit of over 9 dB.

These early findings using the SpeechFocus algorithm were encouraging. While they clearly show that a large SNR advantage is possible, they also support the automatic function, as these results could only have occurred if the anti-cardioid pattern was effectively steered by the signal classification system.

Follow-up Clinical Evaluation

In the past year, two new research studies have emerged from the University of Iowa, examining the Siemens SpeechFocus algorithm. In general, these studies have supported the earlier reported benefit of this technology. The first of these papers is from Wu, Stangl and Bentler (2013a).

Wu et al. (2013a) used a clinical design similar to that used by Mueller et al. (2011), which we described earlier. The HINT speech material was used, and the testing was conducted in a sound-treated booth, with the speech and noise signals presented at 180 and 0 degrees azimuth, respectively. The noise (standard HINT noise signal) was fixed at 72 dB SPL. For each participant, the Siemens Pure 701 RIC instruments were fitted to the NAL-NL1 prescriptive algorithm, and programmed to three different microphone conditions, saved in different programs: omnidirectional, conventional directional and directional with SpeechFocus enabled. The two directional programs operated automatically, steered by the signal classification system.

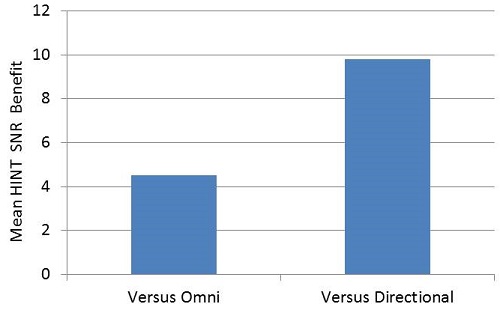

The results from this study were similar to those of Mueller et al. (2011), and the benefit of SpeechFocus is shown in Figure 2.

Figure 2. The mean HINT benefit for the SpeechFocus (anti-cardioid) algorithm when compared to the omnidirectional and the traditional directional microphone modes. (Adapted from Wu et al., 2013a).

The main effect of microphone mode was significant, and follow-up analysis revealed that the differences between all microphone modes were also significant (p<0.0001). Note that when compared to omnidirectional, the benefit is around 4.5 dB, and when compared to conventional directional, the advantage is nearly 10 dB. Analysis of individual data revealed the SpeechFocus SNR benefit was at least 2 dB for 100% of the participants, and 4 dB or greater for 67%.

In this publication, Wu and colleagues discuss an experimental variable that could have negated the measured SpeechFocus advantage. They observed that when the hearing aid user repeated the HINT sentence aloud, his or her own voice would switch the program back to traditional directional (the signal classification system detected speech from the front). When the next HINT sentence was then presented from behind the listener, it would take approximately one second to switch back to the SpeechFocus algorithm. This minimal delay is seldom a factor for real-world conversations, yet it was for the sentences of the HINT. For a sentence to be scored as correct, the participant must correctly identify all key words, and one of these is always the first or second word of the sentence. In this study, the researchers solved the problem by adding a short introductory phrase to the HINT sentences. Since the time of this study, the SpeechFocus transition time has been shortened to less than one second, which provides an even faster, yet smooth, transition to the anti-cardioid polar pattern when speech originates from the back.

The overall findings of Wu et al. (2013a) were in agreement with those of Mueller et al. (2011), and again confirmed the efficacy of the SpeechFocus algorithm. However, one shortcoming was that for both of these studies, performance was measured in a controlled clinical setting. Would the SpeechFocus benefits be the same for real-world listening?

Real-world Study of Directional Steering

In a separate University of Iowa study, Wu, Stangl, Bentler and Stanziola (2013b) examined the effectiveness of the SpeechFocus algorithm in a real-world setting. Because a common use case for this special directional algorithm is sitting in the front seat of a car while someone talks to you from the back seat, this was the listening situation selected. As pointed out by the authors, the acoustic characteristics of this situation differ substantially from what was used in previous SpeechFocus research. For example, the noise encountered in motor vehicles includes the noise of the vehicle itself, other traffic, wind, and the tires against the pavement. Moreover, the reverberation in the interior of an automobile is greater than that observed in the clinical test settings used in previous research.

Because it would be very difficult to conduct aided speech testing while someone was riding in a car, an alternative approach was used. In a moving van traveling 70 mph on the Iowa Interstate highway, the automobile/road noise, together with the Connected Speech Test (CST; Cox et al., 1987) sentences, were recorded bilaterally from hearing aids coupled to the KEMAR manikin. The KEMAR was positioned on the passenger side of a customized Ford van. The loudspeaker that presented the CST sentences was placed at 180 degree azimuth relative to the head of the KEMAR. The noise levels at KEMAR’s head were ~75-78 dB. Recordings in the van were made for three hearing aid conditions: omnidirectional, conventional directional and directional with SpeechFocus enabled. The two directional programs operated automatically, steered by the signal classification system.

The participants (n=25) all had bilateral downward-sloping sensorineural hearing loss. The recorded stimuli from the van were played to the participants via a pair of insert earphones. The signal was shaped for each individual’s hearing loss by applying the gain that would be prescribed for a NAL-NL1 fitting. During testing, the listener was asked to repeat the CST sentences. Each passage consisted of nine or ten sentences. Scoring was based on the number of key words repeated by the listener out of 25 key words per passage, for a total of 50 key words per condition.

In addition to speech recognition measures, the authors employed a second task. The three programs (omni, traditional directional and SpeechFocus) were compared using a forced-choice paired-comparison paradigm. In each comparison, two recordings of the same CST sentence, made through two different programs of the hearing aid, were presented. The participants were asked which hearing aid program they would choose to use if they were driving an automobile for a long period of time. For reliability purposes, the comparisons were repeated 10 times.

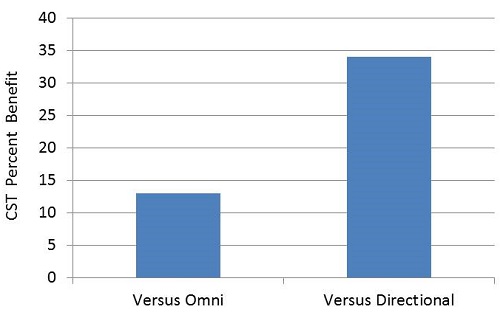

The results of the CST speech recognition measures are shown in Figure 3.

Figure 3. The mean CST word recognition benefit for the SpeechFocus (anti-cardioid) algorithm when compared to the omnidirectional and the traditional directional microphone modes. (Adapted from Wu et al., 2013b).

The mean percent improvement obtained for the SpeechFocus algorithm when compared to the other two microphone modes is displayed. The benefit was 16 percent relative to omnidirectional and 36 percent relative to traditional directional, and both differences were significant (p<0.0001). Consistent with the previous clinical studies, the benefit was substantially greater when compared to the traditional directional setting than when compared to omnidirectional processing.

It is difficult to directly compare these Wu et al. (2013b) data to the Wu et al. (2013a) findings, as the clinical study expressed benefit as an SNR improvement, whereas these data, based on real-world recordings, expressed benefit as percent correct. If we roughly consider that every dB of SNR improvement results in an 8-10 percent increase in speech recognition, then we would conclude that the real-world noises and the reverberation present in the van as it traveled down the highway reduced the overall SpeechFocus benefit by about one half. Again, this is a rough approximation, as different speech material was used (with potential floor and ceiling effects), and other factors easily could have contributed to the differences. We do know, however, that reverberation will reduce directional benefit, regardless of what algorithm is used. Fortunately, most hearing aid users will be in a vehicle with less noise and reverberation than the cargo van used in this study, so even greater benefit may be possible.
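The rough comparison above can be written out as a short calculation. This sketch uses only the approximate figures quoted in this article (about 4.5 dB and nearly 10 dB in the clinic, 16 and 36 percent in the van) and the assumed rule of thumb of 8-10 percent per dB; the variable and function names are illustrative, and the linear conversion ignores floor and ceiling effects.

```python
# Approximate SpeechFocus benefits quoted in the text (not new data).
CLINICAL_BENEFIT_DB = {"omnidirectional": 4.5, "traditional directional": 10.0}
IN_VAN_BENEFIT_PERCENT = {"omnidirectional": 16.0, "traditional directional": 36.0}
PERCENT_PER_DB = (8.0, 10.0)  # assumed rule-of-thumb slope range

def projected_percent_range(benefit_db, slope_range=PERCENT_PER_DB):
    """Project a dB benefit onto percent correct using a linear slope range."""
    low_slope, high_slope = slope_range
    return benefit_db * low_slope, benefit_db * high_slope

def retained_fraction_range(observed_percent, projected_range):
    """Fraction of the projected benefit that was actually observed."""
    low, high = projected_range
    return observed_percent / high, observed_percent / low

for mode, benefit_db in CLINICAL_BENEFIT_DB.items():
    projected = projected_percent_range(benefit_db)
    retained = retained_fraction_range(IN_VAN_BENEFIT_PERCENT[mode], projected)
    print(f"{mode}: projected {projected[0]:.0f}-{projected[1]:.0f} percent, "
          f"retained fraction {retained[0]:.2f}-{retained[1]:.2f}")
```

Under these assumptions the in-van benefit comes out at somewhat under half of the projected value for both comparisons. The 80-100 percent projection for the traditional directional comparison would in any case run into the ceiling effect mentioned above, which is one reason the estimate should be treated as approximate.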

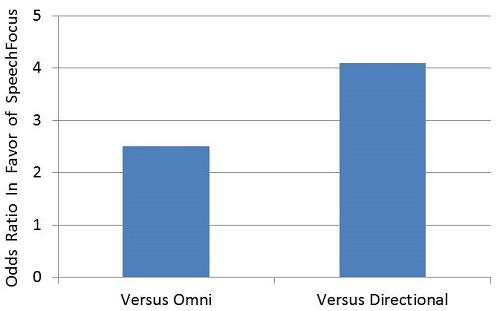

Recall that Wu et al. (2013b) also examined the participants’ preferences for the different microphone modes. The findings were analyzed using the odds ratio of a hearing aid user preferring a specific microphone setting. For example, if for the ten trials omnidirectional was preferred five times, and SpeechFocus was preferred five times, then the odds of SpeechFocus being favored would be 1:1. The analysis showed a significant difference in preferences, in favor of SpeechFocus (see Figure 4).

Figure 4. Based on individual preferences (10 trials), the odds ratio that a person would prefer the SpeechFocus (anti-cardioid) algorithm compared to either omnidirectional or traditional directional. (Adapted from Wu et al., 2013b).

When compared to omnidirectional, SpeechFocus was favored by 2.5:1, and when compared to traditional directional, the odds were 4.1:1. More specifically, for the SpeechFocus versus omnidirectional comparisons (10 A-B comparisons for each participant), 20 of the 25 participants favored SpeechFocus, four did not have a significant preference, and one favored omnidirectional. When SpeechFocus was compared to traditional directional, 24 of the 25 participants favored SpeechFocus, and one participant did not have a significant preference. These findings are consistent with the benefits in speech understanding shown in Figure 3.
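The 1:1 example given earlier for the paired comparisons can be reproduced with a simple odds calculation. This is a sketch of the basic arithmetic only, with illustrative function and parameter names; the odds ratios reported by Wu et al. (2013b) came from their own statistical analysis across participants, which is not reproduced here.

```python
def preference_odds(times_preferred, total_trials):
    """Odds of a program being preferred: preferred trials / other trials."""
    if not 0 <= times_preferred <= total_trials:
        raise ValueError("times_preferred must lie between 0 and total_trials")
    times_not_preferred = total_trials - times_preferred
    if times_not_preferred == 0:
        return float("inf")  # preferred on every trial
    return times_preferred / times_not_preferred

# Five of ten trials in favor of SpeechFocus gives even odds (1:1).
print(preference_odds(5, 10))
# Eight of ten trials in favor gives odds of 4:1.
print(preference_odds(8, 10))
```

Note that odds differ from proportions: a program chosen on 8 of 10 trials has a preference proportion of 0.8 but odds of 4:1.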

In Closing

The Siemens SpeechFocus algorithm has been available since 2010, and research with this product is starting to emerge in refereed publications. In this paper, we review three of these articles. The clinical efficacy studies (Mueller et al., 2011; Wu et al., 2013a), which consisted of behavioral measures under relatively stable and listener-friendly conditions, show a large benefit for the SpeechFocus algorithm: an SNR advantage of approximately 4.5-5.0 dB when compared to omnidirectional processing, and of ~10 dB when compared to traditional directional. As expected, when a real-world reverberant environment was employed, the benefit was reduced, but still substantial. For example, when compared to standard directional, the benefit was approximately 35 percent for speech recognition. While it might seem obvious that a large benefit would be present for SpeechFocus for the talker-in-back condition when compared to traditional directional, this is a very important and relevant comparison. This is because when some models of hearing aids are set to the “automatic” mode, which is the most commonly programmed setting, traditional directional is the microphone mode that will result for listening to speech in a car.

What is critical, and sometimes forgotten, about the design of all the studies discussed here is that the activation of the SpeechFocus algorithm was steered by the decision matrix of the signal classification system. That is, SpeechFocus was not simply fixed in a given hearing aid program; rather, it was automatically triggered only when certain acoustic conditions were detected. Hence, the positive findings observed in these studies are not only a tribute to this unique directional algorithm itself, but also a strong reflection of the accuracy of the hearing aid’s signal classification system.

References

Cox, R.M., Alexander, G.C., & Gilmore, C. (1987). Development of the Connected Speech Test (CST). Ear and Hearing, 8(5 Suppl), 119S-126S.

Mueller, H.G., Weber, J., & Bellanova, M. (2011). Clinical evaluation of a new hearing aid anti-cardioid directivity pattern. International Journal of Audiology, 50(4), 249-254.

Powers, T.A., & Beilin, J. (2013). True advances in hearing aid technology: what are they and where’s the proof? Hearing Review, 20(1), 32-39.

Wu, Y., Stangl, E., & Bentler, R. (2013a). Hearing-aid users’ voices: A factor that could affect directional benefit. International Journal of Audiology, 52, 789-794.

Wu, Y., Stangl, E., Bentler, R., & Stanziola, R. (2013b). The effect of hearing aid technologies on listening in an automobile. Journal of the American Academy of Audiology, 24(6), 474-485.

Cite this content as:

Powers, T., Branda, E., & Beilin, J. (2014, September). Directional steering for special listening situations: benefit supported by research evidence. AudiologyOnline, Article 12974. Retrieved from: https://www.audiologyonline.com