Editor’s Note: This text course is an edited transcript of a live seminar.

I titled this presentation "A Time-Compressed Overview" as it is impossible to cover auditory processing in just one hour. I have uploaded many more slides than I will present, but I wanted you to have the information for your reference. Here is an overview of what I will talk about today: basic anatomy and physiology, definitions of auditory processing, tests that are available for assessment, how to interpret them, and then I will touch on management.

Neuroanatomy and Neurophysiology of the Central Auditory Nervous System

We are all familiar with the central auditory nervous system (CANS). When we assess children for processing disorders, it is important to know whether they are right- or left-handed, because handedness is related to which hemisphere is dominant for language. Ninety-six percent of right-handed people are left-hemisphere dominant for language, while only 4% are right-hemisphere dominant. Of those who are left-handed or ambidextrous, 70% are still left-hemisphere dominant for language and right-hemisphere dominant for music, math, and similar skills. The important areas that we look at when assessing auditory processing are the frontal and prefrontal areas, as well as the Brodmann areas in the left temporal lobe. By no means, however, are those the only areas that contribute to auditory processing.

The ultimate comprehension of a signal, such as speech or tones, depends upon the extraction of information at multiple levels throughout the auditory nervous system, with very complex interactions between sensory processing and higher-order cognitive and linguistic operations. The way we are usually taught the auditory nervous system, with sound traveling from the cochlea to the cochlear nucleus, the superior olivary complex, and on up to the cortex, is an oversimplified view. In reality, processing is simultaneous, sequential, and hierarchical, and it also runs in reverse, from higher centers back down the pathway.

The encoding of the auditory signal from the nerve to the brain is called bottom-up processing. Bottom-up denotes the mechanisms that occur in the system before higher-order cognitive or linguistic operations. However, bottom-up processing is influenced by higher-order factors such as attention, memory, and linguistic competence, and these higher-order functions are considered top-down. Bottom-up and top-down processing work together because of the complexity of the CANS; we do not process in a strictly hierarchical manner.

Information processing theory states that information is processed through both bottom-up factors (the sensory encoding of the message) and top-down factors (cognition, language, and other higher-order functions), which work together to shape the ultimate processing of auditory input. This complexity of the CANS makes auditory processing difficult to define.

What is Auditory Processing Disorder?

Auditory processing disorder (APD) is very hard to define, but that has not stopped many experts from proposing definitions. Each child presents with different symptoms, and no single definition or theory will fit every child. What all the definitions seem to have in common is that the disorder manifests in the auditory modality. The best-known definitions have come from the Bruton Consensus conference (Jerger & Musiek, 2000), the American Speech-Language-Hearing Association (ASHA, 2005), Katz et al. (2002), Tallal (1980), Chermak (1998), Flexer (1994), Bellis (2003), and Keith (1977; 1981).

The Bruton Consensus conference took place in 2000 in Dallas, Texas, following the first ASHA conference on auditory processing. The Bruton group defined an auditory processing disorder as a deficit in the processing of information that is specific to the auditory modality, and described it as being associated with difficulties in listening, speech understanding, language, and learning. In its purest form, it was conceptualized as a deficit in the processing of auditory information.

ASHA revisited the topic in 2005, and its definition is probably the most commonly used. It refers to the efficiency and effectiveness with which the central nervous system utilizes auditory information. According to ASHA (2005), this includes the auditory mechanisms underlying sound localization and lateralization, auditory discrimination, auditory pattern recognition, and temporal aspects of audition, among others; APD is a deficit in one or more of these. Paula Tallal (1980) describes APD as an inability to accurately perceive auditory signals of brief duration when they are presented at rapid rates. Her definition refers to speech. Building on this work, she helped develop the well-known remediation program Fast ForWord, and she has a body of research supporting the view that temporal processing is the basis for much of auditory processing.

A very simplistic view that is good for describing auditory processing to parents is related to the theory of intrinsic and extrinsic redundancy. If the speech signal is normal and the subject has a normal processing system, then speech understanding is good. If we compromise the signal in some manner, but the subject has a normal auditory processing system, then performance would be fair to good. If the signal is normal, but the subject has a compromised auditory nervous system, then understanding would be fair to good. If both the signal and the auditory system are compromised in some way, then understanding would be very poor. This is a great tool for explaining processing to parents, although it is certainly not all-encompassing.
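The redundancy model above is simple enough to write down as a lookup. The sketch below is only an illustration, assuming just two states (normal or compromised) for each factor; the enum and function names are hypothetical, and real listeners fall along a continuum rather than into four boxes.

```python
from enum import Enum


class Status(Enum):
    NORMAL = "normal"
    COMPROMISED = "compromised"


def expected_understanding(signal: Status, cans: Status) -> str:
    """Qualitative outcome predicted by the intrinsic/extrinsic redundancy model.

    signal: extrinsic redundancy of the speech signal (normal or degraded).
    cans:   intrinsic redundancy of the listener's auditory nervous system.
    """
    if signal is Status.NORMAL and cans is Status.NORMAL:
        return "good"
    if signal is Status.COMPROMISED and cans is Status.COMPROMISED:
        return "very poor"
    # Only one source of redundancy is reduced, so the other can compensate.
    return "fair to good"


# Print all four cells of the model.
for sig in Status:
    for cans in Status:
        print(f"signal={sig.value:11} CANS={cans.value:11} -> {expected_understanding(sig, cans)}")
```

The asymmetric cases return the same answer on purpose: the model's point is that either form of redundancy alone can largely carry understanding, and only losing both produces a severe breakdown.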

According to Gail Chermak (1998), neurobiological connections are key to auditory processing. This perspective includes the biology of the nervous system, the study of its development and function, and an emphasis on how nerve cells generate and control behavior. Jack Katz, in his Buffalo Model, describes auditory processing as “what we do with what we hear,” which is a simple but useful description of what happens. Carol Flexer (1994) reminds us that an auditory processing disorder is not a hearing impairment; it is a problem that causes difficulty in understanding the meaning of incoming sounds. Bob Keith (1977) defined auditory processing disorder as the inability or impaired ability to attend to, discriminate, recognize, remember, or understand auditory information. Again, the common thread through all of these definitions and descriptions is the auditory modality. I will refer to Teri Bellis’ work throughout this presentation, as I look to her work, among others, for guidance on the assessment and remediation of auditory processing.

When you consider the complexity of the CANS, the notion that APD is completely modality-specific (auditory only) is neurophysiologically untenable. We can, however, think of APD as primarily an auditory disorder. Patients with APD present with difficulties, deficits, and complaints that are more pronounced in the auditory modality, but a purely auditory processing problem is probably not realistic given the complexity of the system.

Test Principles

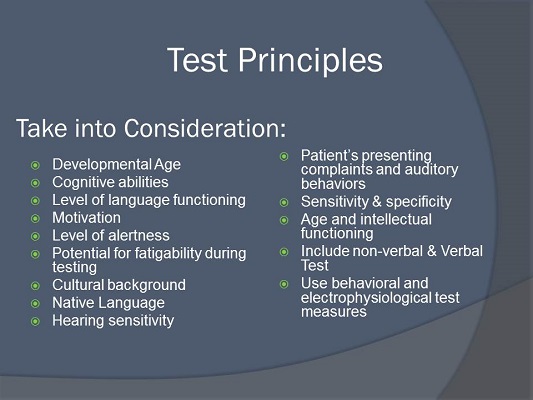

Let's talk about what we need to consider before we test a patient. Some considerations are developmental age, cognitive abilities, language, motivation, and others listed in Figure 1. You need to pay attention to the patient’s level of alertness. You need to know the native or dominant language and hearing sensitivity. What are the complaints? You should never have a standard APD test battery that you give to everyone. The test battery has to be tailored and customized to the patient and to the presenting symptoms. APD can never be assessed in isolation. We rely heavily upon information from other professionals, especially speech-language pathologists, neuropsychologists, neurologists, and teachers. The comorbidity of processing problems and cognitive and linguistic problems is very high.

Figure 1. Factors to consider in assessment of auditory processing disorder.

When I test children for auditory processing, I prefer to have the results of other professionals' assessments available to me whenever possible. Ideally, by the time the patient comes to me, they have already had their speech and language evaluation, neuropsychology evaluation, and attention deficit evaluation, among others. If I do not have those evaluations, I put a cautionary note in my report stating that my testing may be somewhat limited because I do not have all of the information.

What do we need to keep in mind when we are assessing the patient? Because these test sessions can be very long, you should administer the tests that require the greatest amount of attention and mental effort early in the session. I keep juice and goldfish crackers nearby and give children a lot of breaks; if they do not ask for a break, I offer one. I find that if I do not, the chances of them becoming fatigued and giving me suboptimal results are very high. Any patient I see who takes medication for a cognitive or behavioral condition needs to have taken it as scheduled before testing. I want those factors controlled so that I can ensure valid test results.

Keep in mind that testing is completed in a quiet, sound-treated room with no extraneous distractions. I see APD patients only in the morning, so that they come in well rested. However, testing a child who is medicated, well rested, and not worn out from a school day does not always provide a realistic picture of how they process information in the real world. The results may not show the full effects of the processing disorder because the testing conditions do not reflect real-world situations. That is why it is very important to have evaluations from other professionals, as well as input from parents and teachers.

I like Teri Bellis’ recommendation for a test battery, which includes a dichotic listening task, temporal patterning, monaural low-redundancy speech tests, gap detection, binaural interaction, and electrophysiologic measures. Again, this is just a recommendation; you should never administer all of these tests arbitrarily, but you should ensure that you cover these areas. In my opinion, we should not always have to do electrophysiology, and I will get to that in a moment.

We should assess four general processes or behaviors that occur in the CANS: binaural separation, binaural integration, monaural separation/closure, and auditory pattern/temporal ordering and temporal resolution. Binaural separation is assessed by delivering two competing stimuli at the same time and asking the individual to ignore one side. Binaural integration is assessed by presenting two stimuli simultaneously and asking the person to repeat both. Monaural separation/closure tests reduce the extrinsic redundancy of the speech signal, as in speech-in-noise and low-pass filtered speech tests. Auditory pattern/temporal ordering is typically evaluated with frequency or duration pattern tests.

Binaural separation refers to our ability to process the auditory message in one ear while ignoring a different message presented to the opposite ear at the same time. Listening on the phone while someone else is talking is an example, and a classroom is a real-life situation where this skill is important. As I said before, binaural integration is the ability to process different information presented to both ears simultaneously. Monaural separation is our ability to listen to a target message when it is presented in the same ear as a competing message. This almost never happens in real life; rarely do we have two things going on in one ear at the same time. Auditory closure is our ability to use intrinsic and extrinsic redundancy to fill in the missing or distorted portions of the signal. It plays a very important role in everyday life, such as when listening in a noisy classroom or restaurant, or in many other situations where we hear multiple messages and have to pick out the important one. Auditory closure is a function we use every day.

Auditory pattern/temporal ordering involves the ability to recognize the acoustic contours of speech, discrimination of the stimuli, sequencing, gestalt pattern perception, and trace memory. All of these are very important in processing. Recognizing the acoustic contours of speech helps us extract and use prosodic aspects of speech such as rhythm, stress, and intonation. For example, the sentence “You can’t go with us” has very different meanings depending on which word is stressed. Children who have difficulties with the temporal aspects of auditory processing are often socially awkward. They do not understand humor or sarcasm, and they do not understand where the emphasis is in what is said to them.

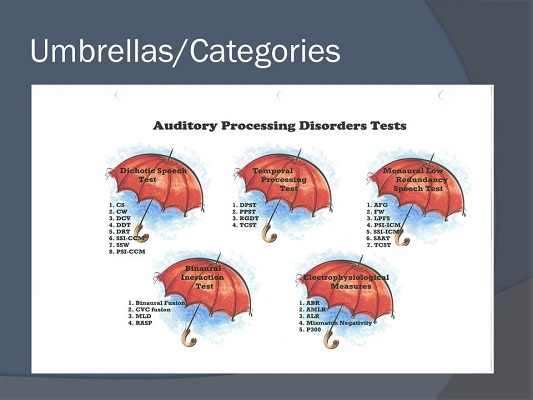

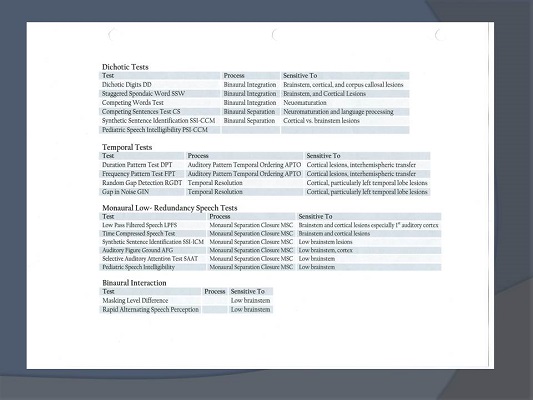

Figure 2 shows a handout I give to audiology students that categorizes auditory processing tests into five areas: dichotic speech tests, temporal processing tests, monaural low-redundancy speech tests, binaural interaction tests, and electrophysiology. Figure 3 provides much of the same information in a different format, with the exception of electrophysiology. I encourage you to use both as a reference for the types of APD tests currently available.

Figure 2. Five umbrellas of auditory processing tests.

Figure 3. Auditory processing tests, broken down categorically.

Review of Behavioral Tests

Dichotic Listening

Let’s start with dichotic listening. Information presented to the left ear must travel to the right hemisphere and then cross the corpus callosum in order to be perceived and labeled in the left hemisphere. The left side is the language-dominant hemisphere 96% of the time in right-handed persons and 70% of the time in left-handed persons. Information presented to the right ear is transmitted directly to the left hemisphere, without any need for the right hemisphere or the corpus callosum. Processing information from either ear therefore ultimately relies upon the integrity of the left hemisphere, because it is language dominant. Dysfunction of the right hemisphere or the corpus callosum, by contrast, would affect only the message coming from the left ear. Doreen Kimura (1961a; 1961b) theorized that the contralateral pathways are stronger and more numerous than the ipsilateral pathways, so that when dichotic or competing auditory stimuli are presented, the ipsilateral pathways are suppressed by the stronger contralateral pathways. This is an incredibly important concept, and it underlies the right-ear advantage. Dr. Kimura has a recent article on this topic on AudiologyOnline. Keep in mind, however, that the right-ear advantage can also appear in tests that are not dichotic, although it is more pronounced in dichotic tests.

When we refer to the right-ear advantage, we are referring to ear asymmetry, i.e., the scores for the right ear are consistently higher than the scores for the left ear, because the right ear has the direct pathway to the language-dominant hemisphere. The right-ear advantage becomes stronger as linguistic content increases, from consonant-vowel syllables to sentences.
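Ear asymmetry is usually summarized from the two ears' percent-correct scores. The sketch below is a hypothetical helper, not taken from any particular test manual: it reports the raw right-minus-left difference and a laterality index computed with one common convention, 100 × (R − L) / (R + L). It deliberately applies no pass/fail cutoffs, because interpreting either value requires the age-appropriate norms for the specific test administered.

```python
def ear_asymmetry(right_pct: float, left_pct: float) -> dict:
    """Summarize dichotic ear asymmetry from percent-correct scores.

    Returns the raw right-minus-left difference and a laterality index
    computed as 100 * (R - L) / (R + L). Positive values mean a
    right-ear advantage. No normative cutoffs are applied here.
    """
    for score in (right_pct, left_pct):
        if not 0 <= score <= 100:
            raise ValueError("scores must be percentages between 0 and 100")
    difference = right_pct - left_pct
    total = right_pct + left_pct
    # Guard against division by zero when both ears score 0%.
    laterality_index = 100 * difference / total if total else 0.0
    if difference > 0:
        advantage = "right"
    elif difference < 0:
        advantage = "left"
    else:
        advantage = "none"
    return {
        "difference": difference,
        "laterality_index": laterality_index,
        "advantage": advantage,
    }


# Hypothetical scores: 90% correct in the right ear, 70% in the left.
print(ear_asymmetry(right_pct=90, left_pct=70))
```

The index normalizes the difference by overall performance, so a 20-point gap means more when both ears score poorly than when both score near ceiling.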

What kinds of tests can assess this? Here are some dichotic speech tests: Dichotic Digits Test, which is a binaural integration task; Dichotic Consonant Vowel Test for binaural integration; Staggered Spondaic Word test (SSW); Competing Sentences Test for binaural separation; the Dichotic Sentence Identification for binaural separation; and the Synthetic Sentence Identification with Contralateral Competing Message (SSI-CCM), which is a binaural separation task. With this test, you have the Davy Crockett story in one ear and nonsense sentences in the other ear. There is also the competing words segment on the SCAN-3. There are others, but these are the most prevalent.

Temporal Processing

Temporal processing is necessary to understand speech and music. In speech, it lets us discriminate subtle cues such as voicing and tell similar words apart. The voicing distinction between “dime” and “time,” and the distinction between “boost” and “boots,” are based on our temporal or timing skills. In music, we need temporal processing skills to perceive the order of the notes or chords and to determine whether the frequencies of the notes are ascending or descending relative to the adjacent notes or chords. Despite its importance in speech and music understanding, however, very few tests are available for widespread clinical use.

One test of temporal processing is the Random Gap Detection Test (RGDT). This is a very common test, and a shortened version is now included in the SCAN-3. Other tests include the Gaps in Noise (GIN) test, the Pitch Pattern Sequence Test (PPST), and the Duration Patterns Sequence Test (DPST). These are the most commonly used tests of temporal processing, but keep in mind that the RGDT and the GIN test actually assess temporal resolution, whereas the PPST and DPST assess temporal ordering (patterning). Tasks such as the PPST and DPST may also provide information about neuromaturation in children with learning disabilities by indicating the degree of myelination of the corpus callosum.

These tests can be sensitive to both right- and left-hemisphere dysfunction, and you can use them, specifically the pattern tests, to determine which hemisphere has the problem. For example, if you are administering a pattern test and the patient correctly labels the patterns (high-low-high, long-short-long), you can reasonably assume that their cortical function is okay. If a patient can respond only by humming the pattern or by gesturing high-low-high with their hand, that is a fairly specific sign that the patient probably has a left-hemisphere dysfunction: the right hemisphere is able to discern the pattern, but the left hemisphere cannot label it.
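The reasoning about response modes can be laid out as a small decision rule. The sketch below encodes only the two cases the text describes; the function name and the wording of its outputs are hypothetical, and the third branch (the patient can neither label nor hum the pattern) is not addressed in the text, so it simply defers to the rest of the battery rather than guessing at a site of lesion.

```python
def interpret_pattern_response(can_label: bool, can_hum_or_gesture: bool) -> str:
    """Rough reading of pattern-test response modes, following the text.

    can_label:          patient verbally labels the pattern ("high-low-high").
    can_hum_or_gesture: patient reproduces the pattern by humming or hand motion.
    """
    if can_label:
        # Pattern perceived (right hemisphere) and labeled (left hemisphere).
        return "pattern perceived and labeled; cortical function likely adequate"
    if can_hum_or_gesture:
        # Pattern perceived but not labeled: points toward the left hemisphere.
        return "pattern perceived but not labeled; suggests left-hemisphere dysfunction"
    # Case not covered in the text: no conclusion drawn here.
    return "pattern not reproduced; interpret together with the rest of the battery"


for label, hum in [(True, True), (False, True), (False, False)]:
    print(label, hum, "->", interpret_pattern_response(label, hum))
```

A rule like this is only a reminder of the logic; in practice the result is weighed against the dichotic and other findings before any hemisphere is implicated.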

Monaural Low-Redundancy Speech Tests

Monaural low-redundancy speech tests are the oldest tests used to assess the CANS. They are administered monaurally with degraded stimuli. We can degrade the stimuli by altering their frequency or spectral content (for example, with low-pass filtering), by time compression, or by adding background noise or reverberation. Each of these reduces the extrinsic redundancy of the signal.
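Adding background noise is the easiest of these degradations to quantify, because it is specified as a signal-to-noise ratio (SNR). The sketch below assumes white Gaussian noise and defines SNR from root-mean-square amplitude, SNR (dB) = 20 log10(rms(signal) / rms(noise)). A pure tone stands in for a recorded speech token, and the function names are hypothetical; clinical tests use calibrated recordings and specific competing signals, which this does not reproduce.

```python
import math
import random


def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def add_noise_at_snr(signal, snr_db, seed=0):
    """Mix white Gaussian noise into `signal` at the requested SNR in dB.

    Returns (degraded_signal, scaled_noise), where
    20 * log10(rms(signal) / rms(scaled_noise)) equals snr_db up to rounding.
    """
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in signal]
    # Noise RMS needed so that the signal-to-noise ratio equals snr_db.
    target_noise_rms = rms(signal) / (10 ** (snr_db / 20))
    scale = target_noise_rms / rms(noise)
    scaled_noise = [scale * n for n in noise]
    degraded = [s + n for s, n in zip(signal, scaled_noise)]
    return degraded, scaled_noise


# Hypothetical stand-in for a speech token: 100 msec of a 1 kHz tone at 16 kHz.
fs = 16000
tone = [math.sin(2 * math.pi * 1000 * n / fs) for n in range(fs // 10)]

for snr_db in (10, 0, -5):
    degraded, noise = add_noise_at_snr(tone, snr_db)
    measured = 20 * math.log10(rms(tone) / rms(noise))
    print(f"target {snr_db:>3} dB -> measured {measured:.2f} dB")
```

Lowering the SNR removes more of the extrinsic redundancy: at 0 dB the noise is as strong as the signal, and at negative SNRs it is stronger.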

The degree of redundancy associated with speech will affect performance. For example, sentences have a lot of redundancy, so we can usually understand them even if parts are missing or distorted. Nonsense syllables have low redundancy; we cannot predict or close on them because they are nonsense. Extrinsic redundancy comes from the multiple, overlapping acoustic and linguistic cues in speech and language, such as phonemes, prosody, and syntactic and semantic cues. Intrinsic redundancy arises from the structure and physiology of the CANS. Because multiple parallel pathways are active all the time, bottom-up and top-down, a healthy CANS is intrinsically redundant. If there is a processing problem, however, that intrinsic redundancy is reduced, and as soon as we degrade the extrinsic redundancy of the signal, the person's performance will decline.

Some of the most common monaural low-redundancy speech tests are the Time Compressed Sentences Test (TCST), which can sometimes also be considered a temporal test because it compresses the speech signal in time; low-pass filtered speech; the SSI with Ipsilateral Competing Message (SSI-ICM), which presents the Davy Crockett story and the nonsense sentences in the same ear; the Pediatric Speech Intelligibility (PSI) test; the Selective Auditory Attention Test (SAAT); and any auditory figure-ground test, including those of the SCAN-3. In my opinion, monaural low-redundancy speech tests are only mildly sensitive to disorders of the CANS, and they are not as sensitive as other measures. They do, however, give us a picture of how the person performs in the real world.

Binaural Interaction

Binaural interaction is not widely used clinically, and there are not many tests available. It differs from dichotic listening in that, although stimuli are presented to both ears, the information presented to each ear is only a portion of the entire message, and in some tests the portions are presented sequentially rather than simultaneously. Binaural interaction occurs in the low brainstem, particularly the superior olivary complex (SOC). The primary responsibilities of the low brainstem are sound transmission, integration of sounds, reflexive behavior, localization/lateralization, and hearing speech in noise.

We have good tests available to evaluate the low brainstem. Auditory brainstem response (ABR) testing gives us a great picture of the brainstem, as do the masking level difference (MLD) test and the acoustic reflex paradigm. The need for additional behavioral tests of the brainstem integrity is limited, in my opinion. That being said, there are some behavioral tests of binaural interaction available, such as the Rapidly Alternating Speech Perception (RASP) test, and binaural fusion tests such as the Ivey, NU-6 and CVC (consonant-vowel-consonant) fusion. If I can get a good picture of the brainstem function via acoustic reflexes and/or ABR and/or MLD, which are highly sensitive to brainstem lesions, I do not feel the need to do additional testing.

Electrophysiology

This brings us to the question, “Should we routinely be doing electrophysiology in our assessment of auditory processing disorders?” The answer is maybe. Electrophysiological results can validate behavioral data when you find abnormalities on both. One benefit is that evoked potentials can be elicited with non-speech signals, which permits processing disorders to be validated independent of language status, although you have to verify that peripheral hearing is normal. The electrophysiological tests available include the ABR, which has never been shown to be very sensitive to auditory processing disorders, the middle latency response (MLR), the auditory late latency response (ALR), mismatch negativity (MMN), and the P300. There is also the BioMark, which was popular several years ago.

BioMark

The theory behind the BioMark was to use very brief speech signals to assess the brainstem: instead of recording an ABR to tones or clicks, the idea was to record it to speech. What we found, though, was that it was only about 33% sensitive to abnormalities, so it has largely fallen out of use.
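“33% sensitive” means that the test flags only about a third of the patients who truly have the disorder. The sketch below shows the standard sensitivity calculation, true positives divided by all truly affected patients; the function name and the cohort counts are hypothetical and chosen only to reproduce the 33% figure, not taken from any BioMark study.

```python
def sensitivity(true_positives: int, false_negatives: int) -> float:
    """Proportion of truly affected patients that the test flags as abnormal."""
    affected = true_positives + false_negatives
    if affected == 0:
        raise ValueError("need at least one affected patient")
    return true_positives / affected


# Hypothetical cohort: 100 children with confirmed APD, 33 flagged abnormal.
print(f"{sensitivity(33, 67):.0%}")
```

At that level of sensitivity, two out of three affected children would produce a normal result, which is why a normal finding on such a test cannot rule out a processing disorder.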

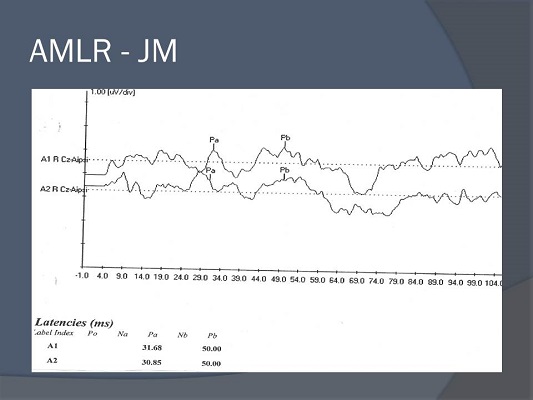

AMLR

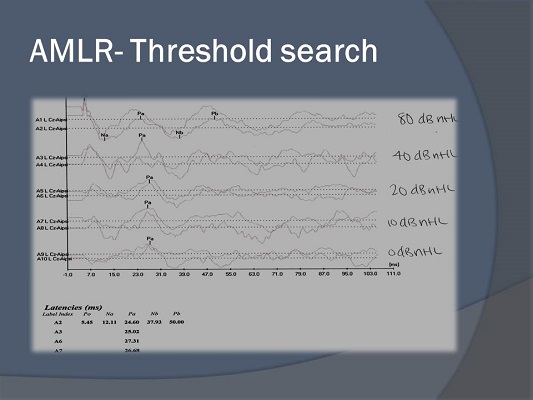

The auditory middle latency response (AMLR) has a lot of research behind it for persons with auditory processing disorders. It originates from the thalamus, A1, the temporo-parietal lobe, and the lemniscal auditory pathways, and it is definitely affected by maturation. We want to look at amplitude, not necessarily latency. The best approach is to record the AMLR over both temporal lobes and compare them to see if there is a difference. We are looking at the Na, Pa, Nb, and Pb components, which usually occur between 10 and 80 msec. The AMLR is also useful for frequency-specific threshold estimation if, for whatever reason, you cannot do an ABR. In fact, the AMLR was used for threshold estimation before the ABR became popular.

The AMLR is exogenous, meaning we do not need a patient response. It is negatively affected by noise, drugs, sleep, sedation, and especially postauricular muscle movement. One of my students did a threshold estimation with the AMLR, which you can see in Figure 4. The amplitude does not decrease much as we approach threshold. Latency does not shift very much either. That is typical, because we are not assessing the brainstem, which we know has a latency shift as we decrease intensity. We are assessing the thalamocortical pathways, so it is a great tool for assessing threshold.

Figure 4. AMLR threshold search.

When it comes to the AMLR, the research is conflicting. Some studies have shown it to be different in children with language problems (Arehole, Augustine, & Simhardi, 1995), while others have shown no difference in the Pa component between children with and without language impairment (Kraus, Smith, Reed, Stein & Cartee, 1985). In general, the literature shows that children with processing problems, language delays, and/or learning disabilities may have prolonged latency, significantly reduced amplitude, and poor morphology compared to their normal peers. Keep in mind that among the evoked potentials we can use for APD assessment, the AMLR and P300 are abnormal most often. However, the AMLR's usefulness in young children is questionable, because the waveforms are not adult-like until about 10 years of age. That is the very population we are assessing for processing disorders, so it is a bit of a catch-22: although the AMLR may show you something, the response is not always mature at the ages you wish to test.

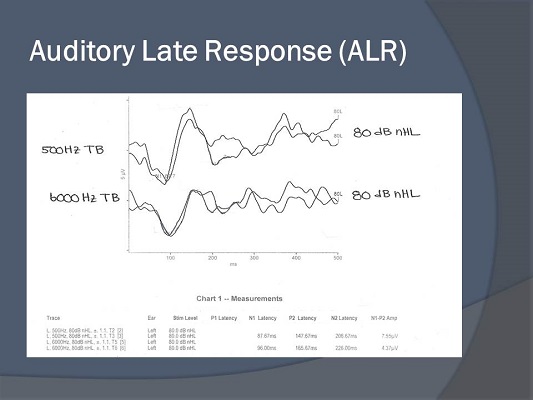

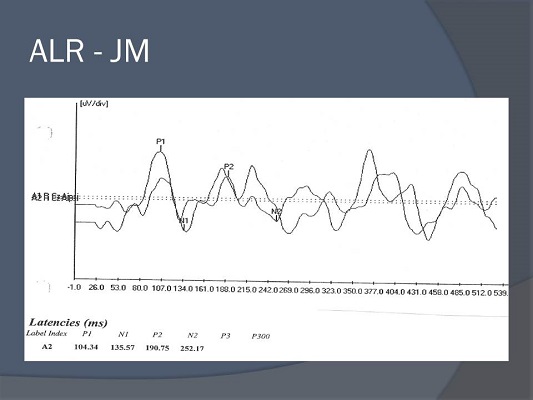

ALR

We do not know the precise generators of the ALR, but they likely include A1. We do know that the most commonly occurring components between 100 and 300 msec are N1 and P2. We can also obtain threshold responses with the ALR, and it gives us a picture of the cortex. It has been shown to be very effective in demonstrating benefit before and after hearing aid and cochlear implant fitting. It is also exogenous, meaning that you do not need the person to respond, but it is affected by drugs, sleep, and sedation. The literature shows that the latency and morphology of the P1 response can reveal that an individual’s auditory pathway is changing (Sharma, 2005), demonstrating neuroplasticity before and after hearing aid and cochlear implant fitting. Overall, the latency, amplitude, and morphology of the late response are good indicators of processing problems (Tremblay, Kraus, McGee, Ponton, & Otis, 2001).

Figure 5 shows ALRs recorded in my clinic, one to a 500 Hz tone burst and one to a 6000 Hz tone burst. Both are clean, and the peaks are marked if you look closely. We have N1 and P2, which are the most commonly occurring components.

Figure 5. ALR morphology.

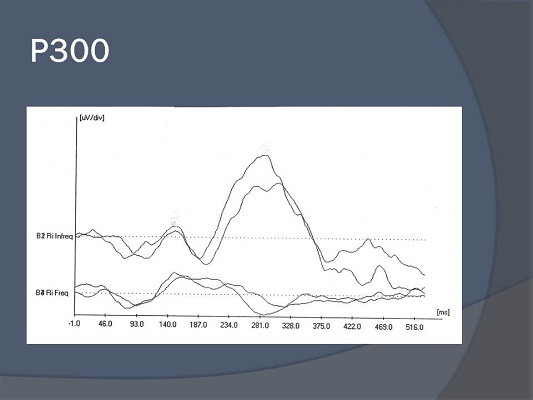

P300

The P300 is probably the most researched evoked potential. Its generators are the medial temporal lobe, the hippocampus and other centers within the limbic system, and the auditory cortex, so we can use it to assess cortical function. The major component is the P3, which occurs at approximately 300 msec in a young, normal adult. It is very sensitive to lesions of the CANS. We can use the P300 to monitor progress during therapy, as well as in demyelinating disease, schizophrenia, and Alzheimer's disease; it has been studied more in patients with those disorders than in auditory processing. The P300 is endogenous, meaning that the person has to pay attention to the stimuli in order for us to record the response. Figure 6 shows a P300 recorded in my lab, with a robust waveform right around 300 msec. There is no mistaking a P300 when you see it; it is enormous compared to ABR waveforms. The literature shows that we can record the P300 before and after intervention for auditory processing and see an improvement, thus documenting neuroplasticity (Jirsa, 2010).

Figure 6. P300 response.

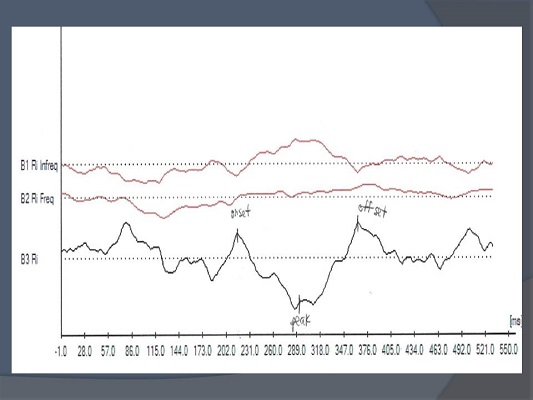

MMN

MMN stands for mismatch negativity. There was a flurry of interest in MMN about 20 years ago, mostly from the lab at Northwestern University. It had potential, and I think some audiologists still use it. Its biggest drawback is that it is not present in all normal listeners, which makes it a poor monitoring tool. The MMN reflects the brain's detection of a change in the stimulus; the listener does not have to do anything or even pay attention. It lets us record that the brain recognized a difference between “pa” and “ba,” which has implications for monitoring progress in persons with language processing problems. Figure 7 shows a recorded MMN.

Figure 7. Mismatch negativity recording.

To record it, you deliver a frequent stimulus (pa, pa, pa, pa, pa) interspersed with an infrequent one (ba), as in pa, pa, pa, ba, pa, pa, pa, pa, ba, and so on. When the response to the frequent stimulus is subtracted from the response to the infrequent one, you will see a clear dip, or negativity, which tells us that the brain has recognized the difference between the two stimuli. Again, MMN is not used very much today because it is not present in all normal listeners.
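The subtraction step can be sketched numerically. The example below uses simulated epochs (one sample per millisecond, 0 to 399 msec) rather than real recordings: both stimuli share the same obligatory response, and only the deviant adds a negative deflection peaking at 200 msec, which is an assumption built into the simulation. Averaging each stimulus type and subtracting the standard average from the deviant average recovers that negativity while the shared response cancels. All names, amplitudes, and trial counts are hypothetical.

```python
import math
import random


def average(epochs):
    """Point-by-point mean of equal-length epochs (lists of samples)."""
    n = len(epochs)
    return [sum(samples) / n for samples in zip(*epochs)]


def mismatch_waveform(standard_epochs, deviant_epochs):
    """Difference wave: average deviant response minus average standard response."""
    standard_avg = average(standard_epochs)
    deviant_avg = average(deviant_epochs)
    return [d - s for d, s in zip(deviant_avg, standard_avg)]


# --- Simulated data (hypothetical values, not real recordings) ---
rng = random.Random(1)
times = list(range(400))  # one sample per msec, 0-399 msec


def shared_response(t):
    # Obligatory response evoked by both "pa" and "ba" (arbitrary shape, microvolts).
    return 2.0 * math.sin(2 * math.pi * t / 200)


def extra_negativity(t):
    # Assumed deviant-only negativity, peaking at 200 msec.
    return -1.5 * math.exp(-(((t - 200) / 30) ** 2))


def noisy(wave):
    # Gaussian noise standing in for background EEG on a single epoch.
    return [v + rng.gauss(0.0, 0.5) for v in wave]


# An 80/20 oddball split: 400 standard ("pa") and 100 deviant ("ba") epochs.
standard = [noisy([shared_response(t) for t in times]) for _ in range(400)]
deviant = [noisy([shared_response(t) + extra_negativity(t) for t in times]) for _ in range(100)]

diff = mismatch_waveform(standard, deviant)
peak_ms = min(times, key=lambda t: diff[t])
print(f"Most negative point of the difference wave: {peak_ms} msec ({diff[peak_ms]:.2f} uV)")
```

Averaging many epochs is what makes the small negativity visible: the random noise shrinks roughly with the square root of the number of trials, while the stimulus-locked responses do not.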

Differential Diagnosis of (C)APD

The behavioral characteristics of children with auditory processing disorders can be very similar to those of children who have attention deficits, language problems, learning disabilities, or reading and spelling problems. These similarities in behavioral manifestations have led some to question whether these disorders might reflect a single developmental disorder. There is not nearly enough time to go into that theory, but there are ways to tease out the differences. We need to be able to distinguish APD from attention deficit-hyperactivity disorder (ADHD), language impairment, cognitive problems, memory issues, pervasive developmental delays, and behaviors that are simply typical for the child's chronological age. That is one of the reasons I find it very important to have evaluations from other professionals, to give the appropriate tests for the complaints and symptoms, and to focus as much as we can specifically on the auditory modality. A differential diagnosis is not possible in every case, but I try to make sure that I have as much information as possible to cover all my bases.

Interpretation of AP Assessment Results

Data from an AP assessment may be analyzed for four general purposes: to identify whether the child has APD, to identify which underlying processes are affected, to provide site-of-lesion information, and to develop an APD sub-profile. Placing patients into a profile can help you create a management program.

Profiles. According to Bellis and Ferre (Bellis, 2003), there are three primary subtypes or profiles of APD based upon the presumed anatomical site of dysfunction: auditory decoding deficit, with the region of dysfunction being the left auditory cortex; prosodic dysfunction stemming from the right auditory cortex; and integration dysfunction, which is a problem with the corpus callosum. Under each of these profiles is a list of how the child will perform on behavioral tests and electrophysiological tests, classroom manifestations, and then recommendations. If I did not have a profile to classify my identified patients, it would be very difficult to make management recommendations. For example, you cannot make blanket recommendations such as every child needs to use an FM system, because if they do not have difficulties processing in noise but still have difficulties with the temporal aspects of speech, an FM system will serve no purpose.

Katz (2002) has four categories or profiles: decoding, tolerance-fading memory, integration, and organization. I find the SSW to be an invaluable tool in helping me recommend academic modifications for a child. It is sensitive to auditory processing problems, but I think it is also very sensitive to learning and memory problems. I like that about the SSW and Katz's whole battery, because it can truly guide me in developing a management profile.

Neuroplasticity and Remediation

Let's think about management and neuroplasticity for a moment. We talked briefly about using evoked potentials to monitor neuroplasticity before and after intervention for APD. We know the CANS is plastic. This is most obvious during a young child’s development, but the CANS remains malleable throughout life, or we could never learn. Plasticity gives us the opportunity to change the system, but only when intervention is intensive and timely. Frequency matters more than duration: therapy once a week for an hour is not as effective as therapy twice a week for half an hour, even though the total time is the same. The more therapy you can give, the better. Because it is hard to quantify how much time the system will need to remap, it is hard to know when to change or maintain what we are doing. That is why we need tools to monitor efficacy.

Management for Children with Auditory Processing Disorders

APD management in the educational setting can be divided into three main categories: we can change the environment, we can directly remediate the disorder and we can improve the child's learning and listening skills. For environmental management, we need to consider both acoustic and non-acoustic factors. An FM system, preferential seating, earplugs and note takers are a few examples of environmental management.

Improving learning and listening skills through compensatory strategies means teaching the child things to do for himself or herself. When a situation is noisy and listening is hard, the child needs to self-advocate and pay attention when he or she can. We can also teach the child that when someone says, “Lastly, I'd like to present this…,” the last piece of information is coming. Cognitive and metacognitive strategies, such as mnemonics and reauditorization, are top-down skills taught by speech-language pathologists or aural rehabilitation professionals. Combining bottom-up (usually direct treatment) and top-down strategies maximizes the capacity of the system to change.

Gauging Efficacy

How do we know it works? We talk to the teachers. We do educational performance evaluations, including the SIFTER (Screening Instrument for Targeting Educational Risk), the CHAPS (Children’s Auditory Performance Scale), and the LIFE. We do behavioral speech perception testing. Evoked potentials are a great tool. We re-evaluate with the same behavioral tests of auditory processing. We also use journaling. I like to have older children write down what strategies worked, what did not work, what they noticed, and so on; for younger children, I have the parents do the writing. It is a great way to get a qualitative assessment. You have the hard, objective measures of the evoked potentials and the more qualitative measures, like classroom questionnaires and journaling. Taken together, they let you judge the efficacy of treatment.

Factors that Influence Success

What is going to influence treatment? The treatment schedule has an impact: the time of day, the length of each session, and the duration and frequency of training. The auditory environment also influences treatment. Is the patient wearing headphones, or are we training in the sound field? The patient’s motivation will also affect treatment.

Computer-Assisted Management

I have put up a few Web sites that can be used for remediation (Figure 8). Not all of them are appropriate for all types of APD. If I place a child in the prosodic profile for temporal processing problems, Earobics is probably not appropriate, as it is better suited to a child with a decoding deficit. Mind Dabble is wonderful for strengthening the brain all the way around; it has fun, interactive brain training activities that work on memory and processing. I frequently recommend that website to parents.

Figure 8. Web sites for training and remediation techniques.

Case Studies

Case 1 - JM

The first case I am going to present is JM, a 10-year-old male. He did not have a significant history of otitis media, which is often associated with later auditory processing difficulties. He has definite difficulties with math and reading, and he repeated first grade. He has had tutoring services, and his current teacher modifies his in-class assignments. He was diagnosed with attention deficit disorder, for which his mother gives him an herbal supplement. He had a complete psychoeducational evaluation both privately and through his school. I gave him the SCAN-C, and he was normal on all subtests. He was normal on gap detection and on time-compressed sentences in the right ear. He was abnormal on the duration pattern test and on time-compressed sentences in the left ear, which is typically the weaker ear.

He had a very difficult time with rapid speech presented to his left ear and with recognizing duration. Those weaknesses can sometimes cause difficulty understanding the pragmatics of language. On the DPST (duration pattern), he could not respond correctly to any of the stimuli verbally, which tells us something language-based is going on. When he hummed his responses, his score remained in the abnormal range, but he got approximately half of the items correct.

Figure 9 shows his MLR. You can see some waveforms, but their latencies are somewhat delayed. Figure 10 shows his ALR. His P1 looks okay, but the rest of the response does not look good, and the AMLR has poor morphology. Taken together with his behavioral test results, his evoked potentials indicate auditory processing problems in very specific areas, namely the temporal domain. These problems probably involve both the right and left hemispheres, because he has so much difficulty labeling the responses.

Figure 9. MLR of case study JM.

Figure 10. ALR of case study JM.

What recommendations do we have for JM? First, he needs a language evaluation. Those results are not back yet, but I suspect they will show difficulties with pragmatics and decoding, as well as some phonemic problems. I would recommend tutoring at home and at school to increase comprehension. He needs visual augmentation, not only auditory support; we need to put vision, motor, and audition all together. He also needs to work on top-down skills, especially memory. These children typically have poor memory skills because they have difficulty with patterning and sequencing.

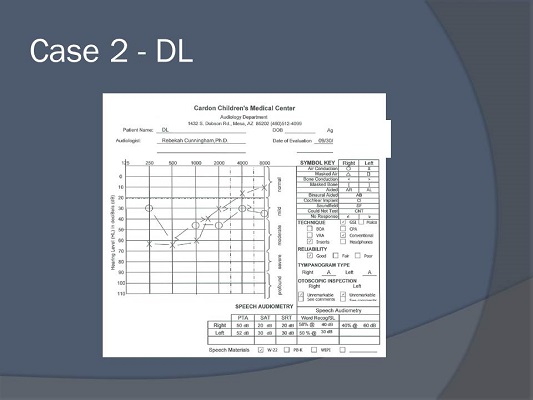

Case 2 - DL

This is a very interesting case of a 12-year-old girl referred by a neurotologist for an ABR. The primary complaint was her inability to hear in noise. She had had several audiograms, and they were very inconsistent over the last few years. She had hearing loss and tinnitus, which is always alarming in a young person. She has migraines. She has a lot of difficulty in public school; she had been homeschooled for most of her life. Her mother reported that she did not speak until she was three years old, but everything else was developmentally appropriate. There was no significant history of otitis media and no family history of hearing loss. Her MRI was normal. Figure 11 shows her audiogram. It looks very unusual, and there is an asymmetry. If you look closely, you can see that her word recognition was poor at a presentation level you would have expected to be audible.

Figure 11. Audiometric data for case study DL.

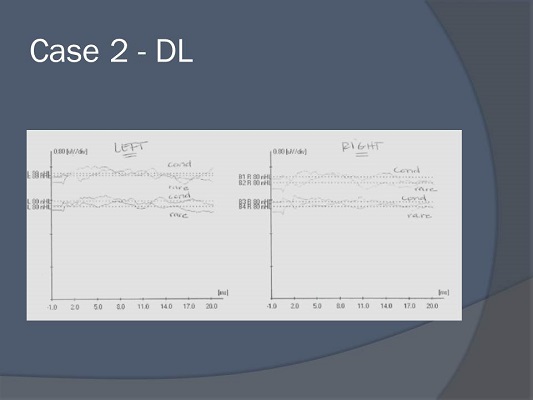

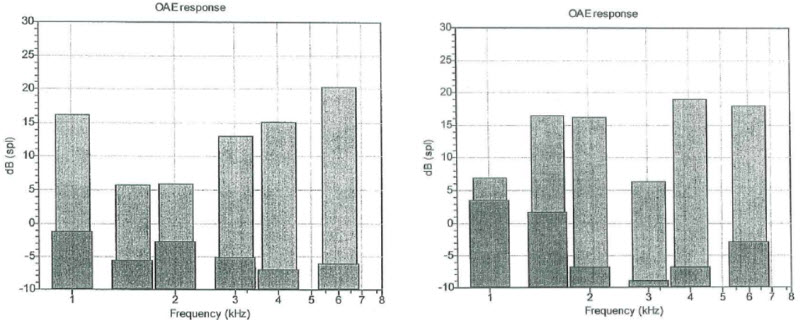

There are robust otoacoustic emissions in both ears (Figure 12). Her ABR is shown in Figure 13. You may not be able to see it very well, but there is nothing there. Based on these results, I suspect the child has auditory neuropathy spectrum disorder.

Figure 12. Otoacoustic emissions for case study DL.

Figure 13. ABR tracings of right and left ear for case study DL.

I went ahead and did her auditory processing evaluation. If a person has a peripheral hearing loss, however, I do not like to do auditory processing testing unless it is absolutely necessary. Some tests purport to be relatively insensitive to peripheral hearing loss, and some claim that if you present the stimuli loudly enough, the test still performs with high sensitivity. I am wary of that. Too many times I have seen results you would not expect for the degree of hearing loss, so I prefer not to test people with hearing loss for APD.

This child, though, came to me with an order for the testing, so I did it. Surprisingly, she had normal gap detection, normal digit scores in her right ear, and normal duration pattern test results. That does not make sense with her hearing loss, right? Remember, she had present OAEs. She could not do anything on the SCAN-A. Her digit scores in the left ear were abnormal, and her time-compressed sentences were abnormal at 40% time compression, so I did not even try 60% compression. This was a child for whom I wanted evoked potentials.
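For readers less familiar with time-compressed speech, the compression percentage is conventionally the proportion of the sentence's original duration that is removed, which is why the 60% condition is harder than the 40% one. A worked example, assuming a hypothetical 2.0-second sentence, where $d_0$ is the original duration, $c$ the compression proportion, and $d_c$ the compressed duration (symbols introduced here for illustration only):

$$
d_c = (1 - c)\,d_0
\qquad\Rightarrow\qquad
d_0 = 2.0\ \text{s}:\quad
c = 0.40 \;\Rightarrow\; d_c = 0.60 \times 2.0 = 1.2\ \text{s},
\qquad
c = 0.60 \;\Rightarrow\; d_c = 0.40 \times 2.0 = 0.8\ \text{s}
$$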

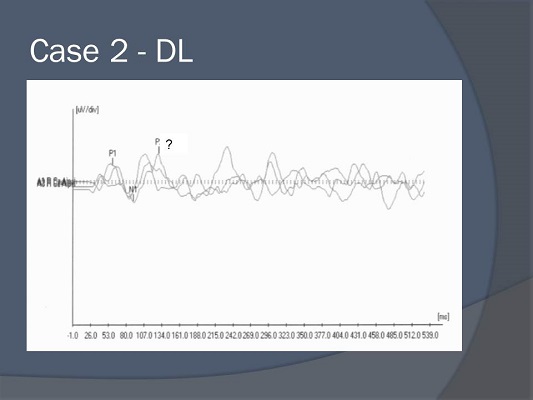

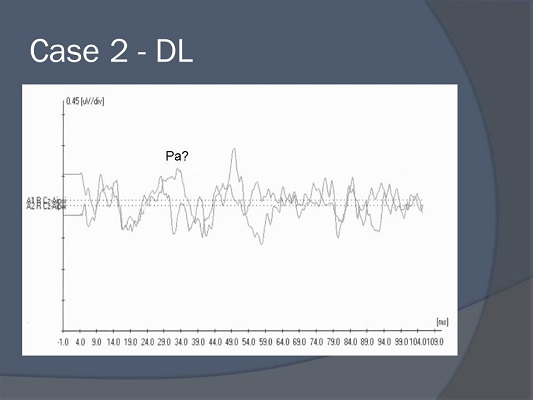

I got poor results on her late potentials (Figure 14). Figure 15 shows her MLRs; I think that peak is probably Pa, but the morphology is very poor. In summary, she had absent ABR waveforms, normal tympanograms, present otoacoustic emissions, no acoustic reflexes, an odd hearing loss, spondee thresholds that did not correlate with her pure-tone thresholds, and poor word recognition. I still see this child three years later. She has had a trial with an FM trainer, and she uses speech-to-text programs like Dragon as well as computer-based auditory training programs. She was referred back to neurotology and neurology for a complete workup for auditory neuropathy spectrum disorder.

Figure 14. ALR tracings for case study DL.

Figure 15. MLR tracings for case study DL.

Question and Answer

If JM has timing issues, might he respond to Interactive Metronome?

Yes, but I do not think it is a comprehensive approach to his management. The Interactive Metronome measures how many milliseconds someone is off when clapping along to the signal, and it can be used during therapy sessions. It addresses timing issues and can be used in addition to, and alongside, other therapeutic interventions.

If a patient has a hearing loss and is diagnosed with an auditory processing disorder, what do you recommend for amplification? If we are unable to slow down time constants in a hearing aid, what do you recommend from there?

In my opinion, every patient with a peripheral hearing loss already has an inherent processing problem, because the periphery is compromised by the hearing loss. I think it would be very wise for us to start using some very simple measures of dichotic listening, something like dichotic digits, on most of the patients who come to us for hearing aids. That is very simple, and we can increase the presentation level. Again, the accuracy is questionable, but if we can get a sense of the person’s right-ear advantage with a bilateral hearing loss, we can judge whether they are really going to do well with binaural amplification. I am not crazy about testing people with hearing loss, but if I am going to do it, I want to know whether they maintain a strong right-ear advantage, because if they do, they are not going to be a very good candidate for bilateral amplification. I know that goes against everything we have always said, but I have seen it too many times, especially with the degradation of the auditory system as we age. It gets very complicated.
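The answer above talks about gauging a patient's right-ear advantage from a simple dichotic digits test but does not give a formula. One common way to express ear asymmetry in the dichotic listening literature is a laterality index, 100 × (R − L) / (R + L), reported alongside the raw right-minus-left difference. The sketch below assumes percent-correct scores per ear; the function names are hypothetical, and any cutoff for calling an advantage "strong" would have to come from age-appropriate norms, not from this code.

```python
def interaural_difference(right_pct: float, left_pct: float) -> float:
    """Right-ear minus left-ear score in percentage points (positive = right-ear advantage)."""
    return right_pct - left_pct


def laterality_index(right_pct: float, left_pct: float) -> float:
    """Laterality index in percent: 100 * (R - L) / (R + L).

    Ranges from -100 (all correct responses from the left ear) to +100
    (all from the right ear); positive values indicate a right-ear advantage.
    """
    total = right_pct + left_pct
    if total == 0:
        raise ValueError("index is undefined when both ear scores are zero")
    return 100.0 * (right_pct - left_pct) / total


if __name__ == "__main__":
    # Hypothetical dichotic digits scores, for illustration only.
    right, left = 90.0, 60.0
    print(interaural_difference(right, left))  # 30.0
    print(laterality_index(right, left))       # 20.0
```

Normalizing by the total keeps the index comparable across patients whose overall scores differ, for example when elevated presentation levels are used with a hearing loss; the raw difference is easier to read at a glance.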

Do you think auditory steady-state response (ASSR) has the potential to look at auditory processing disorders?

I think ASSR has come and gone. I know some people are still using it, but I do not think we have ever really proven that it is better than the ABR at estimating thresholds. I do not know about a future for it in APD, and I have never really considered it. Off the top of my head, I would have to say no, I do not.

What frequency or duration of therapy is required for sustained therapeutic effect?

I do not know that I can answer that, because it is so hard to gauge. I try to do classroom questionnaires every four to six weeks, and I do not re-evaluate evoked potentials until about four to six months post-therapy. The earliest I will re-evaluate with behavioral tests is six months; sometimes it is a year.

What is the SSI-CCM?

It is the Synthetic Sentence Identification test with Contralateral Competing Message. The SSI, in general, has a story about Davy Crockett on one track and nonsense sentences on another. CCM means the competing message is contralateral: the Davy Crockett story goes to one ear and the nonsense sentences to the other. The patient is instructed to ignore the Davy Crockett story and identify only the nonsense sentences. Therefore, it is a test of binaural separation.

Do you have a requirement for diagnosis of auditory processing disorder? ASHA says it is performance deficits on one test if scores are at least three standard deviations below the mean.

I guess I would have to say I look at it more qualitatively. If a child does very poorly on every temporal test I administer, then I am going to say yes, he probably does. The same goes for a child who does poorly on every dichotic test, or on all tests. I would say something like three out of five, but I usually do far more than five tests. If a child does poorly on one test, slightly poorly on another, normally on a third, and is all over the place, I am probably not going to diagnose APD. Instead, I will try to address the specific area or areas in which he did not do well. If I am doing eight tests, I need to see at least four or five of them that are pretty poor.
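The question quotes an ASHA criterion stated in standard-deviation units, and the answer describes counting how many tests in a battery come out poor. A minimal sketch of both checks follows, assuming each score is converted to a z-score against age-appropriate norms and that higher raw scores are better (percent correct). For measures where lower is better, such as a gap detection threshold in milliseconds, the sign would need to be flipped. The class and function names and the example numbers are hypothetical, not published norms.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class TestResult:
    name: str
    score: float      # patient's score, e.g. percent correct (higher = better)
    norm_mean: float  # normative mean for the patient's age group
    norm_sd: float    # normative standard deviation for that group


def z_score(result: TestResult) -> float:
    """Number of standard deviations the score lies from the normative mean."""
    return (result.score - result.norm_mean) / result.norm_sd


def any_test_below(results: Sequence[TestResult], cutoff_sd: float) -> bool:
    """True if at least one test is `cutoff_sd` or more SDs below the mean."""
    return any(z_score(r) <= -cutoff_sd for r in results)


def count_below(results: Sequence[TestResult], cutoff_sd: float) -> int:
    """How many tests in the battery fall `cutoff_sd` or more SDs below the mean."""
    return sum(1 for r in results if z_score(r) <= -cutoff_sd)


if __name__ == "__main__":
    # Hypothetical scores and norms, for illustration only.
    battery = [
        TestResult("test A", 70.0, 90.0, 5.0),  # z = -4.0
        TestResult("test B", 85.0, 90.0, 5.0),  # z = -1.0
    ]
    print(any_test_below(battery, cutoff_sd=3.0))  # True: test A alone meets a 3-SD rule
    print(count_below(battery, cutoff_sd=2.0))     # 1
```

The count-based check only loosely mirrors the "four or five out of eight" rule of thumb: the answer does not say what counts as "pretty poor," so `cutoff_sd` is left as a clinician-chosen parameter.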

What is the youngest age at which you do testing?

I frequently have people call me and say, “My speech pathologist said that I should have my three-year-old child tested for APD.” I try to go out there and educate, educate, educate. Normative data for children under the age of eight are weak, and I will not diagnose APD based upon the results of one test. Eight years old is the youngest age at which I will test. I will do screeners or discuss information with parents of children who are younger than that.

In addition, I did want to say that the auditory system matures around 12 years of age; that is when we should start to see adult-like performance. However, I think recent research indicates that maturation may continue a little beyond age 12. Where we stand right now is 12, which is the cutoff for adult normative data.

What further reading in auditory processing disorders do you recommend?

I am very fond of the original Teri Bellis book that came out in 2002. I also like the two-volume series by Chermak and Musiek (2006a; 2006b). Volume 1 covers auditory neuroscience and diagnosis, and Volume 2 covers intervention. They are pretty technical, but they contain good information.

References

American Speech-Language-Hearing Association. (2005). Central auditory processing disorders. Retrieved from https://www.asha.org/docs/html/TR2005-00043.html

Arehole, S., Augustine, L. E., & Simhadri, R. (1995). Middle latency responses in children with learning disorder: Preliminary findings. Journal of Communication Disorders, 28, 21-38.

Bellis, T. J. (2002). When the brain can’t hear: Unraveling the mystery of auditory processing disorder. New York, NY: Atria Books.

Bellis, T. J. (2003). Assessment and management of central auditory processing disorders in the educational setting: From science to practice (2nd ed.). Clifton Park, NY: Delmar Learning.

Chermak, G. D. (1998). Managing central auditory processing disorders: Metalinguistic and metacognitive approaches. Seminars in Hearing, 19(4), 379–392.

Chermak, G. D., & Musiek, F. E. (1997). Central auditory processing disorders: New perspectives. San Diego, CA: Singular Publishing Group.

Chermak, G. D., & Musiek, F. E. (2006a). Handbook of (central) auditory processing disorders, Volume 1: Auditory neuroscience and diagnosis. San Diego, CA: Plural Publishing.

Chermak, G. D., & Musiek, F. E. (2006b). Handbook of (central) auditory processing disorder, Volume 2: Comprehensive intervention. San Diego, CA: Plural Publishing.

Flexer, C. (1994). Facilitating hearing and listening in young children. San Diego, CA: Singular Publishing Group.

Jerger, J., & Musiek, F. E. (2000). Report of the consensus conference on the diagnosis of auditory processing disorders in school-age children. Journal of the American Academy of Audiology, 11(9), 467-474.

Jirsa, R. R. (2010). Clinical efficacy of electrophysiological measures in APD management programs. Seminars in Hearing, 34(4), 349-355.

Katz, J., Johnson, C. D., Tillery, K., Bradham, T., Brandner, S., Delagrange, T., Ferre, J., et al. (2002). Clinical and research concerns regarding the 2000 APD consensus report and recommendations. Audiology Today, 14(2), 14-17.

Keith, R. W. (1977). Central auditory dysfunction. New York, NY: Grune & Stratton.

Keith, R. W. (1981). Central auditory and language disorders in children. San Diego, CA: College-Hill Press.

Kimura, D. (1961a). Some effects of temporal-lobe damage on auditory perception. Canadian Journal of Psychology, 15, 156-165.

Kimura, D. (1961b). Cerebral dominance and the perception of verbal stimuli. Canadian Journal of Psychology, 15, 166-170.

Kraus, N., Smith, D., Reed, N., Stein, L., & Cartee, C. (1985). Auditory middle latency responses in children: effects of age and diagnostic category. Electroencephalography and Clinical Neurophysiology, 62, 343-351.

Sharma, A. (2005). P1 latency as a biomarker for central auditory development in children with hearing impairment. Journal of the American Academy of Audiology, 16, 564-573.

Tallal, P. (1980). Auditory temporal perception, phonics, and reading disabilities in children. Brain and Language, 9, 182-198.

Tremblay, K., Kraus, N., McGee, T., Ponton, C., & Otis, B. (2001). Central auditory plasticity: changes in the N1-P2 complex after speech-sound training. Ear and Hearing, 22, 79-90.

Cite this content as:

Cunningham, R. (2013, July). APD in children: A time-compressed overview. AudiologyOnline, Article 11953. Retrieved from: https://www.audiologyonline.com/