From the Desk of Gus Mueller

At one time or another, most all of us have been in a listening situation where we are seated in the back of an average-sized room for a meeting or a social event. The person talking at the front of the room is soft-spoken, there is no microphone, and the room has poor acoustics. You try to position yourself to see the speaker to maybe get some visual cues, and cock your head from side to side, to maybe pick up some phase advantages. At some point, however, when nothing is working, you simply sit back and give up.

Now, if you’re simply attending your neighborhood’s annual business meeting, and the content you were trying to hear was simply a rehash of your leash laws, not much lost. But what if that person in the back was an elementary school child, and what they were trying to hear was the math assignment for tomorrow? What if that person has a hearing loss or an auditory processing deficit?

There is considerable research to show that, with just a little bit of work, we can make huge improvements in the understanding of speech in classrooms, and these efforts result in long-term benefit for the children. I lived in Colorado for many years, and it was great to have one of the U.S. experts on this topic just down the road. He’s this month’s guest author here at 20Q.

Daniel Ostergren, AuD, is the managing member of Audiology Resources, LLC, Fort Collins, CO., providing general consulting services in clinical audiology and classroom acoustics. You’ve no doubt seen Dr. Ostergren’s name associated with classroom amplification, as he has been the liaison to the American National Standards Institute/Acoustical Society of America for the American Academy of Audiology. In addition, he has served as Chair of the Academy’s Task Force on Classroom Acoustics and currently is a candidate for the Academy’s Board of Directors. He also is a charter member of the Colorado Academy of Audiology, and recently served as President of this organization.

As you might expect from an audiologist who has dedicated much of his career to room acoustics, Dan’s living room loudspeakers were once part of a performance art installation at the Guggenheim Museum in New York, and are custom made of dryer lint, recycled newsprint, and shellac. He’s also a musician, and a certified voting member of the Grammy Awards. Are you listening, Lorde?

I don’t know about you, but my vote goes to this 20Q article as a timely review of how we are continuing to work to improve speech understanding in the classroom.

Gus Mueller, Ph.D.

Contributing Editor

November 2013

To browse the complete collection of 20Q with Gus Mueller articles, please visit www.audiologyonline.com/20Q

20Q: Improving Speech Understanding in the Classroom - Today's Solutions

Daniel Ostergren

1. I haven’t heard that much about classroom acoustics, or “classroom amplification” of late. I assumed this was because most of the issues had been addressed?

Not entirely true, unfortunately. There have indeed been meaningful advances made in both areas, and in some unexpected ways. Still, significant issues remain.

2. I have to ask, what’s the big deal about classroom acoustics anyway – my friends and I seemed to do just fine in school, and we didn’t have any acoustic treatments or soundfield systems?

Well, it could be that you and your friends were attentive and motivated students. But, out of curiosity, how many of your peers spoke English as a second language? How many had persistent or permanent significant hearing loss? How many had other receptive language or developmental challenges? Chances are those students were not in your peer group because they were often educated in an environment separate from the mainstream school. In 1970, less than 1 in 5 children with so-called disabilities received their education in typical public schools. That changed in 1975 with the passage of Public Law 94-142, the Education for All Handicapped Children Act. This law went on to become the Individuals with Disabilities Education Act (IDEA), and has helped to ensure that all students have access to a free and appropriate education (FAPE) – audiologists aren’t the only ones fond of acronyms. The keyword though is access.

3. Access? If the teacher is speaking, or if peers are participating in the class discussion, everyone has access to these interactions.

One would think so. However, just as physical barriers limit physical access in schools and other public places for those with mobility challenges (think ramps vs stairs), poor acoustical conditions such as noise from ventilation systems or reverberation from high ceilings and reflective wall surfaces limit access to both direct and indirect instruction for students with receptive communication challenges. This concept of “acoustical access” came almost 20 years after PL 94-142 was passed. It took researchers some time to realize that noise and reverberation in the general education classroom were compromising acoustical access for students who were Deaf/Hard of Hearing, were second language learners, had other receptive communication challenges, etc. Only then did audiologists take action. I had the privilege of working with Joe Smaldino and Carl Crandell in writing an early set of guidelines for classroom acoustics that were published by ASHA in 1995. The research conducted up until that time suggested that for students with hearing loss, a signal-to-noise ratio (SNR) of 15 dB (note: it’s actually a “difference”, not a “ratio”) and a maximum reverberation time of roughly 0.5 seconds were needed in a typical classroom of 10,000 cubic feet or less to ensure that neither direct nor indirect instruction was being compromised. This implied that the unoccupied sound level should be approximately 35 dBA (which we can talk about later). Since that time, various guidelines have been published. The most recent and relevant is the ANSI/ASA S12.60-2010, Acoustical Performance Criteria, Design Requirements, and Guidelines for Schools, Part 1: Permanent Schools (ANSI/ASA, 2010), which I’ll simply refer to as the “ANSI Standard.”

4. That all sounds familiar. Are you saying that if the classroom is fairly quiet, and not too reverberant, we’re good to go?

It sounds pretty simple, except the ANSI Standard is referencing an unoccupied classroom - there are no students in the room yet, and we haven’t examined the nature of the heating/ventilation/air conditioning (HVAC) systems that might be running, or projectors, or other teaching technologies being used. What was being described originally was simply the acoustics of a large, unoccupied box, and what, theoretically, was needed to ensure acoustical access. The active classroom is a much more complicated acoustic landscape. Fortunately, if we consider three variables in the context of the dynamics of an active classroom we’ll be on our way to knowing whether acoustical access is favorable and/or whether the use of a soundfield system might be advisable. Those variables are:

- The acoustical impact of masking by background noise

- Temporal masking of the teacher’s voice by reverberation

- The distance between the teacher and student

Those are the “big three” that combine to compromise acoustical access for children.

5. Tell me more about the “big three”.

The first, background noise, is simply acoustical energy that is not the desired signal. Don’t forget that the “desired signal” may be the teacher’s direct instruction, discussion from peers (indirect instruction), or perhaps material that is being presented via media sources such as Internet content, etc. Noise sources, on the other hand, typically include the HVAC system in the room, noise from hallways or adjacent rooms, and external traffic sounds; even air traffic contributes to unwanted noise in classrooms located in urban areas.

6. Makes sense that these would be potential problems with communication. What about reverberation?

To a large extent, reverberation is a form of noise. That sounds strange, doesn’t it? It is a type of noise because if the desired signal does not decay fast enough, the reflected energy interacts temporally with the desired signal and interferes with accurate receptive communication. So, some reverberant energy becomes noise that began as desired signal! Of course, other ambient noise culprits are contributing too.

The effects of reverberation can be influenced by the third factor, the distance between the desired signal and the student. As the distance increases between the listener and the source of instruction, the sound pressure level decreases. It decreases in a rough approximation of the Inverse Square Law, which states that for every doubling of distance, sound pressure level decreases by 6 dB. So, it may not be that uncommon for a student to be sitting in a noisy and reverberant classroom, and at such a distance that direct or indirect instruction is not intelligible in any significant way. It would be accurate to say that this student is outside the critical distance for accurate receptive communication. Critical distance is the distance from the source at which the direct and reflected energy are equal, and it varies as a function of noise and reverberation. Combine this with the fact that children do not have the vocabulary and auditory closure skills that adults may have and the process of communication, and therefore education, has been significantly undermined.
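As a rough illustration of the Inverse Square Law described above, the following Python sketch computes how a talker's level falls with distance. It assumes free-field conditions (no reflections, so it ignores the reverberant energy a real classroom adds) and a talker level of 65 dBA at 1 meter; the function names are illustrative only.

```python
import math


def level_at_distance(level_at_ref_db, distance_m, ref_distance_m=1.0):
    """Free-field level at distance_m, given the level at ref_distance_m.

    Each doubling of distance lowers the level by about 6 dB.
    """
    return level_at_ref_db - 20.0 * math.log10(distance_m / ref_distance_m)


def distance_for_level(level_at_ref_db, target_db, ref_distance_m=1.0):
    """Distance at which the free-field level has fallen to target_db."""
    return ref_distance_m * 10 ** ((level_at_ref_db - target_db) / 20.0)


teacher_at_1m = 65.0  # dBA at 1 meter (assumed talker level)

for d in (1, 2, 4, 8):
    print(f"{d} m: {level_at_distance(teacher_at_1m, d):.1f} dBA")

# Distance at which the voice has dropped to 50 dBA, i.e. +15 dB over 35 dBA noise
print(f"50 dBA reached at {distance_for_level(teacher_at_1m, 50.0):.1f} m")
```

Under these assumptions, a 65 dBA voice falls to 50 dBA at a little under 6 meters, which is why seating distance matters even in a quiet room.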

7. You’re right, things can go downhill quickly! How does one know if the desired SNR of 15 dB or better is in place?

Fortunately, a little math gets us most of the way there and the technology needed to accurately measure noise level and reverberation time may already be in your hand – your smart phone! I’ll come back to that. First, we need to measure and calculate the dimensions and total volume of the classroom. A laser distance measurement device, easily obtained at a hardware store, will provide these data. It doesn’t hurt to take a few photos too – just for the record. Next, a dedicated sound level meter (SLM) or calibrated SLM app for your smart phone (yes, they’re available and can be quite accurate) will get us through the next step. Theoretically, if the unoccupied noise level in a typical classroom (10,000 cubic feet or less) is no greater than 35 dBA, the teacher’s voice will not be less than approximately 50 dBA anywhere in the room as predicted by the Inverse Square Law. (This is a simplification, but we’re sticking with it for now.) So, there’s our +15 dB signal-to-noise ratio. So far, so good. Reverberation measurement is another matter. There are reverb time calculators that rely on knowing the room dimensions, and surface materials, etc. Yet, believe it or not, there are smart phone apps for measuring reverberation time that are very accurate. The unit of measurement we are using is RT-60, which is the amount of time in milliseconds required for a sound to decay by 60 dB. An impulse sound (e.g., a loud hand clap works fine) is introduced into the room, and the RT-60 is calculated.
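For readers curious about what a reverb time calculator does with room dimensions and surface materials, here is a minimal sketch based on the Sabine equation (RT-60 ≈ 0.161 × volume ÷ total absorption, in metric units). The room dimensions and absorption coefficients below are hypothetical values chosen for illustration, not measurements from any classroom, and a real calculator would work per octave band.

```python
def sabine_rt60(volume_m3, surfaces):
    """Estimate RT-60 in seconds with the Sabine equation.

    surfaces is a list of (area_m2, absorption_coefficient) pairs.
    """
    total_absorption = sum(area * alpha for area, alpha in surfaces)
    return 0.161 * volume_m3 / total_absorption


# Hypothetical classroom: 10 m x 9 m x 3 m (270 cubic meters, about 9,500 cubic feet)
length, width, height = 10.0, 9.0, 3.0
volume = length * width * height

# Absorption coefficients are illustrative assumptions only
surfaces = [
    (length * width, 0.70),                 # ceiling: acoustic tile
    (length * width, 0.05),                 # floor: hard surface
    (2 * (length + width) * height, 0.05),  # walls: painted drywall
]

rt60 = sabine_rt60(volume, surfaces)
print(f"Estimated RT-60: {rt60 * 1000:.0f} msec")
print("At or below 0.5 s" if rt60 <= 0.5 else "Above 0.5 s")
```

An estimate like this is only a starting point; a measured RT-60 reflects furnishings, occupants, and room geometry that the formula does not capture.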

8. You’re moving a little fast—this isn’t a measurement I’ve ever conducted.

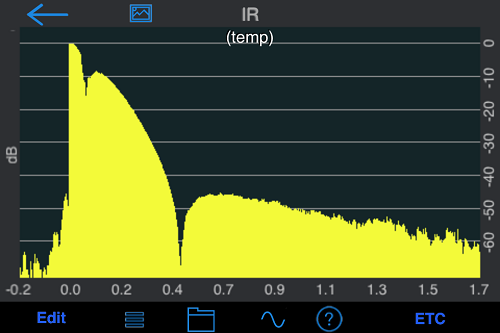

Okay—I’ll show you an example. In Figure 1 is an Energy/Time Curve screen shot of an impulse (handclap) that I measured in my office using my smart phone just now.

Figure 1. Energy/Time Curve (ETC) of a hand clap in my office. Shown on the Y-axis is sound energy referenced to 0 dBFS (Digital Audio Maximum), on the X-axis is time, in seconds. The yellow display shows the variations in the decay of the sound energy of the hand clap over time. Also shown are various measurement options of the instrument used.

You can see interesting variations in the reflected energy in the room (in dB on the Y axis; represented with the yellow shading) as a function of time (in seconds on the X axis). The handclap occurred at 0.0 seconds; the first reflection occurs at about .1 seconds (100 msec); the second reflection occurs at about .55 seconds (550 msec), with gradual decline after that. Well, as you can see, that doesn’t tell us RT-60, or the amount of time it takes for a sound to decay by 60 dB in my office. But . . . a simple press of a button yields Figure 2.

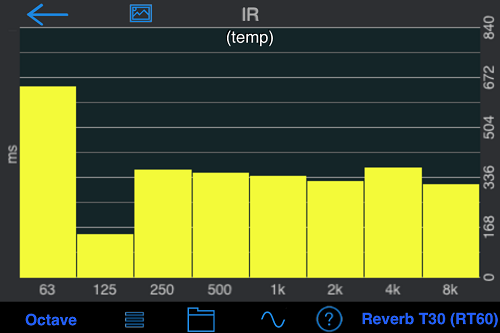

Figure 2. Octave band RT-60 analysis of my office. Shown on the Y-Axis is the decay time in milliseconds, on the X-Axis are octave bands from 63 Hz to 8 kHz.

Now we can see how quickly sound decays in milliseconds (Y axis) as a function of octave band (X axis) in my office – perfect! You’ll notice that, for the most part, RT-60 is roughly 330 msec for the six higher frequency octave bands shown. My office is quite “dry”, or non-reverberant. What about the longer decay in the low frequency octave bands? My office is a rather minimally appointed cube of a space, and there is some flutter echo present. Hence the anomalies in the low frequencies – it is what it is.
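One common way to turn a decay curve into an RT-60 value is to fit a straight line to the decaying portion and extrapolate to a 60 dB drop. The sketch below shows that idea in Python. It is not necessarily how the app used for Figure 2 works, and the decay data are synthetic, generated to fall at a constant 180 dB per second.

```python
def rt60_from_decay(times_s, levels_db):
    """Least-squares line fit to a decay curve, extrapolated to a 60 dB drop."""
    n = len(times_s)
    mean_t = sum(times_s) / n
    mean_l = sum(levels_db) / n
    numerator = sum((t - mean_t) * (l - mean_l) for t, l in zip(times_s, levels_db))
    denominator = sum((t - mean_t) ** 2 for t in times_s)
    slope_db_per_s = numerator / denominator
    if slope_db_per_s >= 0:
        raise ValueError("levels do not decay over time")
    return -60.0 / slope_db_per_s


# Synthetic decay: starts at -10 dBFS and falls 180 dB per second
times = [i * 0.01 for i in range(20)]
levels = [-10.0 - 180.0 * t for t in times]

print(f"RT-60: {rt60_from_decay(times, levels) * 1000:.0f} msec")
```

Real measurements are noisier than this, so the fit is usually restricted to a clean part of the decay, and the analysis is repeated for each octave band.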

9. What about the “unoccupied noise level?”

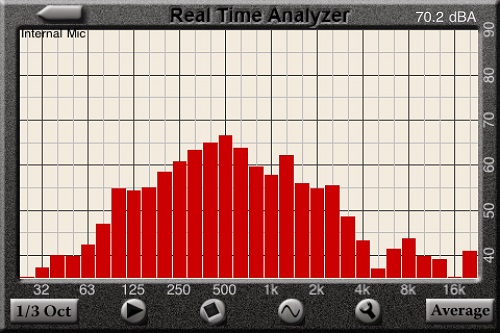

I was just getting to that. Figure 3 shows an SPL graph indicating an overall level of just over 30 dBA – pretty quiet! Again, these are data I collected using my cell phone app.

Figure 3. SPL Graph of my office. The Y-Axis is dBSPL A-Weighted, on the X-Axis is duration of the measurement.

Let me point out that the SPL Graph is time stamped, has LEQ data, and duration of measurement data. I was able to take all of these acoustical measures, email them from my phone, and include them in this article in less than 5 minutes! The dimensional measurements might require one extra minute, and perhaps two minutes for the photos (if I wanted to include them). My point is that there’s no reason we can’t accurately and affordably assess the acoustical properties of classrooms and compare these measures with published guidelines.
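The LEQ value mentioned above is an energy average, not an arithmetic average of the decibel readings. A minimal Python sketch of the calculation follows; it assumes equally spaced readings, and the sample values are made up for illustration.

```python
import math


def leq(levels_db):
    """Equivalent continuous level of equally spaced dB readings (energy average)."""
    mean_energy = sum(10 ** (level / 10.0) for level in levels_db) / len(levels_db)
    return 10.0 * math.log10(mean_energy)


# Illustrative readings: mostly quiet, with one brief louder event
readings = [30.0, 30.0, 30.0, 40.0]

print(f"Arithmetic mean: {sum(readings) / len(readings):.1f} dBA")
print(f"LEQ:             {leq(readings):.1f} dBA")
```

Because louder moments carry far more energy, the LEQ sits above the arithmetic mean whenever the level fluctuates.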

10. I agree, that’s impressive, but is it really necessary? Why not just put soundfield systems in all classrooms regardless of their acoustical properties?

That does sound like an easy solution, but there is a problem with this approach. Recall that I mentioned that at least some of the reverberant noise in the classroom started out as desired or direct signal. In rooms with excessive reverberation times, adding more sound energy from a soundfield system may actually create additional problems, not solve them. We may increase audibility at the expense of intelligibility. For example, have you ever struggled to understand the public address system in a large, reverberant, and noisy airport? It may have been loud enough, but reverberation made it less intelligible.

11. I get your point but I’m not so sure how it applies to a classroom?

Remember that our primary interest in measuring classroom acoustics and implementing soundfield systems is to ensure acoustical access to both direct and indirect instruction. Let’s just consider direct instruction for a moment. When the teacher is speaking, we know that the lower frequency acoustical energy is carrying what I would call the “audibility content” of the signal. For the listener, this is the “Did I hear that?” aspect of receptive communication. The higher frequency acoustical energy is carrying the “intelligibility content” of the signal. This is the “Did I understand that?” aspect of receptive communication. Frankly, audibility is rarely a problem. You know the PA announcer in the airport is speaking because the lower frequencies are being propagated quite nicely, thank you. However, the high frequency or intelligibility cues are lost due to a variety of factors such as reverberation, absorption by the large volume of air, and upward spread of masking in the ear, among others.

12. Whoa! Upward spread of masking?

Yes. I hate to say it, but the ear is involved. When you boil it all down, there are masking influences rooted in both the temporal and frequency domains of speech that compromise the intelligibility of speech for students in classrooms all across the country. This is the essence of what many of us have been wrestling with for decades. It is rarely an audibility issue; it is an intelligibility issue. It is ensuring that all students have access to intelligible direct and indirect instruction, from all sources, in all settings, at all times.

13. That takes us back to your examples of acoustic measures. Your office looked great, but what do you do if the classroom doesn’t meet the ANSI Standard?

Before I answer that, let me ask you a question: Do you think that the more common “violation” of the ANSI Standard is excessive background noise, or excessive reverberation? Or, maybe a roughly even split? Background noise is generally the biggest culprit. And this brings us to a fascinating discussion point: the difference between “passive” and “active” acoustical treatments. If reverberation were the biggest problem, designing classrooms that include sound absorbing materials, or retrofitting existing classrooms with these materials, would solve the problem in a fairly cost-effective fashion. Sound absorbing materials are “passive” in the sense that their physical properties alone accomplish their intended job. You don’t have to plug them into an electrical outlet. However, because background noise appears to be the bigger problem, we are faced with different challenges. Changing the type of HVAC systems in use, or adding insulation to walls, for example, can be very expensive. A soundfield system, or more accurately, a Classroom Audio Distribution System (CADS), the term officially adopted by the ANSI Standard, might be a more desirable choice. CADS are considered “active” systems, meaning that one plugs them into an electrical outlet, and active changes occur to the source signals. The objective with CADS is to help us approach the goal I mentioned earlier – access to direct and indirect instruction, from all sources, in all settings, at all times. However, they are not, nor were they ever intended to be, a replacement for an acoustically favorable classroom. Assessing and addressing the room acoustics is always the first priority. However, a properly placed basic CADS unit (specifically when used for direct instruction) does do something remarkable. It creates a second acoustical source that interacts with the critical distance in the room. This is key, as we can now provide a more even distribution of the desired signal throughout a larger area in the room.

14. I’m okay with your new CADS label, but what’s wrong with the term “soundfield system” that we’ve used for years?

Nothing really. I imagine you have been conducting soundfield measures in your test booth for years. And that’s where the term belongs – in the audiometric test environment. However, in a classroom, active systems are designed to distribute audio from any source as evenly as possible throughout the enclosure. They are not strictly amplification systems like a public address system. And they generally must be able to reproduce the signal from the teacher, or a handheld mic being used by a student, or a media source, or even an FM transmitter in use with an ear level FM system that a student is using. So, we now have a system for distributing audio (not just “sound”, but audio from a variety of sources), throughout a classroom. This is rather sophisticated as compared to our rather antiquated notion of a soundfield system, don’t you agree?

15. You’re making it sound a little mysterious. If I had a CADS sitting in front of me, in simple terms, what would I be looking at?

In its most basic configuration, you would be looking at a microphone/transmitter worn by the instructor, and a speaker/receiver system that would be placed in the classroom so that the output of the speaker provides good audibility and intelligibility cues throughout the room. The signal from the microphone/transmitter might be an FM or infrared signal, or even a digital signal that carries additional system control information. And the speaker/receiver would likely have various input and output capabilities to provide flexibility and connectivity for various interfacing purposes. It is also common to include a second microphone/transmitter for students to use to ensure that student comments and questions are accessible to the class as a whole.

16. Got it, and thanks for clearing that up. You mentioned critical distance once again. When using CADS, do we now have two critical distances?

Actually, all sound sources in an enclosure will have a critical distance for audibility and intelligibility. Remember that the acoustical properties of the room dictate critical distance, not the nature of the CADS. For our purposes though, consider the example I have in Figure 4. My friend, Steve, tends to provide large group instruction from the front of the class, near the white board. I have measured his room and it actually meets ANSI Standards. To be clear, Figure 4 does not represent the actual critical distance(s) in his room; however, you’ll note that the signal from the CADS near the far wall is providing a second source of direct acoustical support. As Steve provides instruction, the CADS is distributing and supporting his signal throughout the classroom. Additionally, Steve is certainly going to be moving about to some extent as he teaches. He may turn toward the whiteboard, limiting the visual cues some students rely upon. Intelligibility cues (high frequency energy) may also be reduced when not facing the class directly. A CADS ensures a stable distribution of that energy as Steve teaches, which is exactly what we want.

You may be familiar with a computer speech analysis program called Language ENvironment Analysis (LENA), available from lenafoundation.org. Among the many capabilities of this program is the ability to measure intelligible adult speech using a metric called estimated Adult Word Count (AWC). What you can do is have LENA score the estimated Adult Word Count throughout an entire school day with and without a CADS in use. I’m telling you this, because I used this technology to do a computer-assisted language analysis of intelligible adult speech in Steve’s classroom, and on days when the CADS was used, estimated AWC increased by an average of approximately 5,000 words per day! Steve didn’t speak 5,000 more words; the computer scored about 5,000 words as being intelligible compared to those days when CADS was not in use. Therefore, students appeared to have access to 5,000 more intelligible words per day with a CADS than without. That’s quite significant, and I hope to expand on this initial pilot project.

Figure 4. Approximate sound distribution patterns of multiple sources in a classroom. The red arrows depict an approximate critical distance from the direct source. The blue arrows depict the approximate critical distance from the CADS.
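To give a feel for the size of the critical distance, here is a Python sketch using a standard room-acoustics approximation, Dc ≈ 0.057 × √(Q × V ÷ RT-60), with volume in cubic meters and RT-60 in seconds. This classical formula accounts only for reverberation, not background noise. The room volume, RT-60 values, and directivity factor Q are assumptions for illustration, not measurements from Steve's classroom.

```python
import math


def critical_distance_m(volume_m3, rt60_s, directivity_q=2.0):
    """Approximate distance at which direct and reverberant energy are equal."""
    return 0.057 * math.sqrt(directivity_q * volume_m3 / rt60_s)


room_volume = 270.0  # cubic meters, roughly 9,500 cubic feet (assumed)

for rt60 in (0.5, 1.0):
    dc = critical_distance_m(room_volume, rt60)
    print(f"RT-60 {rt60:.1f} s -> critical distance about {dc:.1f} m")
```

Even in a room with a 0.5 second RT-60, the estimate comes out at only about two meters, which helps explain why a second source placed elsewhere in the room can support listeners seated far from the teacher.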

An issue to consider is the appropriate output of the CADS. I view CADS as a second, “acoustical version” of the teacher. Meaning, if I could clone the acoustical output of the source and place the new source anywhere in the room, I would simply set the level such that it was roughly equal to the SPL of the teacher at a distance of one meter – roughly 65-70 dBA. This should be an output level that will provide support without distraction. Another great use for the calibrated SLM in your smartphone!

I hope you’re starting to get a good sense of the big picture: Measure the room, address what you can through passive measures, and use CADS as needed to ensure students receive audibility and intelligibility cues anywhere in the room.

17. I think I’m there, but I do have a more practical question. I’ve noticed that manufacturers differ in the kinds of speakers they use, each claiming theirs is better. Is one speaker really better than another?

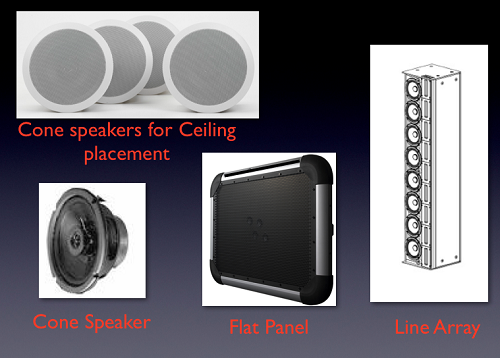

I like to say that “everything in audio is a tradeoff”. That’s true here too. Let’s look at Figure 5. There are essentially three types of speakers used in CADS: cone or piston motion speakers, flat panel/forced resonance speakers, and line array speakers (which are essentially a column of cone speakers).

Figure 5. Types of speakers used in classroom audio distribution systems - traditional cone or piston motion speakers, flat panel/forced resonance transducers, and line array cone speaker columns.

Each of the speakers has strengths and weaknesses. For example, a cone speaker has good dynamic range, and a fairly wide frequency response. However, it is quite directional at high frequencies. In other words, they have a tendency to “beam” the high frequency intelligibility cues of speech straight ahead. This limits access for those listening off axis to the speaker. Therefore, it is common to see multiple cone speakers mounted in the ceiling of a classroom to ensure good coverage.

A flat panel/forced resonance speaker isn’t really a speaker. “Transducer” is the more accurate term. It works the way a vibrating tuning fork works on a table surface – it causes the entire surface to vibrate. Flat panels tend to work great within the frequency and dynamic range of speech, but you might not want one for your home theater as low frequency extension and very wide dynamic range signals are tough for flat panels to handle. That said, they excel at being very non-directional, particularly in the crucial high frequency, speech intelligibility range. This can be great for CADS as the intelligibility cues of speech are distributed quite evenly.

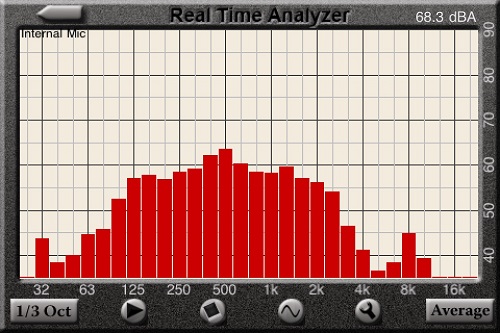

Take a look at Figures 6 and 7, which are averaged speech spectrum analyses of our audiology friend, Carol Flexer, speaking through a flat panel/forced resonance speaker during a lecture not long ago. Note that the high frequencies are still quite intact even 90 degrees off axis. What this means is that flat panel speakers can be quite versatile in their placement as their dispersion is very wide.

Figure 6. Average speech spectrum at 1 Meter, On Axis. On the Y-Axis is sound pressure level in dBA. On the X-axis are 1/3 octave bands from 32 Hz to 16 kHz.

Figure 7. Average speech spectrum at 1 meter, 90 Degrees Off Axis. On the Y-Axis is sound pressure level in dBA. On the X-axis are 1/3 octave bands from 32 Hz to 16 kHz.

Finally, we come to line array speaker systems. Line arrays have the advantages of cone speakers, but by stacking the speakers in a tower, the vertical spread of the signal is limited through acoustical interference (a topic for another day). In a nutshell, the sound from a line array spreads nicely horizontally, but not vertically. It projects well, but placement height is an important variable. Imagine a sort of “pancake” of sound propagation and you’ll have the right idea. Proponents of line array speaker systems also point out that because there is greater vertical control of the wavefront, there is less reduction of sound pressure level over distance. In essence, line arrays are claimed to beat the Inverse Square Law. Hmm… physics is physics in my view, but line arrays certainly have their place in the CADS world.

I haven’t really answered your question regarding which one is best. The easy answer is: The one that gets used. Ok, that’s a smarty-pants answer. Yet honestly, if you know the properties of the room, and know the tradeoffs and proper setup of each type of CADS, you can’t go wrong with any of them. In fact, my guess is that the speaker type will be less important than some of the other features and functions that are now available. Knowing how the systems work acoustically in the classroom is the foundation though, and that’s why I wanted to take some time to discuss these basic differences.

18. What about students using personal FM systems in the classroom? How do those systems work with CADS?

Yes, some students with hearing instruments are also using personal FM systems to achieve access to direct and indirect instruction. We need to ensure that CADS can integrate with personal FM systems as seamlessly and as transparently as possible. The signal being received by the student using a personal FM system should not be degraded by using CADS in the classroom as well. Every CADS manufacturer can provide detailed information regarding interfacing their systems with personal FM systems. As a general reference, check out: Remote Microphone Hearing Assistance Technologies for Children and Youth from Birth to 21 Years Supplement B: Classroom Audio Distribution Systems – Selection and Verification (American Academy of Audiology, 2011). You’ll find great information to ensure best practice regarding CADS use with personal FM systems, and more.

19. I will check it out. One last thing, what about those new features and functions you hinted at earlier?

It goes without saying that occupied noise levels in classrooms vary. Depending on the activities both within the classroom, as well as noise that may be entering the room from outside sources, the acoustical landscape varies and is dynamic. Yet, many CADS are set to a certain level, and remain at that level irrespective of the variations of noise level in the room. At least one manufacturer has addressed this issue by allowing monitoring of the noise level in the room via the teacher’s mic, and electronically varying CADS output accordingly. This approach is designed to ensure that our goal of +15 dB signal-to-noise ratio is reached even as noise levels vary in the classroom. Pretty clever! Yet, this is still an acoustical feature, if you will, and things are getting much more interesting – let me explain.
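The general idea of noise-tracking output can be sketched in a few lines of Python. This is a hypothetical illustration of the concept, not any manufacturer's algorithm: the function name, the output limits, and the noise values are all assumptions.

```python
def cads_output_level(noise_dba, target_snr_db=15.0, min_out_dba=60.0, max_out_dba=75.0):
    """Output level that aims for the target SNR, kept within assumed limits."""
    desired = noise_dba + target_snr_db
    return max(min_out_dba, min(max_out_dba, desired))


# Illustrative occupied noise levels over a school day (assumed values)
for noise in (35.0, 50.0, 55.0, 70.0):
    out = cads_output_level(noise)
    print(f"noise {noise:.0f} dBA -> output {out:.0f} dBA (SNR {out - noise:+.0f} dB)")
```

Note the upper limit in this sketch: once the room is noisy enough, the output stops rising and the +15 dB target is no longer met, which is one more reason to address room noise first.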

So far, everything I’ve talked about has been based on the assumption that CADS use has been a way to help ensure that all students have access to direct and indirect instruction in all settings, at all times. In other words, CADS have been traditionally seen as being an augmentative technology. Curriculum delivery remained exactly as it was without CADS in place. CADS were simply an active acoustical enhancement for students in the classroom. The “new frontier” is using CADS as an integrated technology for content delivery and interactions with students – very cool! For example, what if CADS could record and store instructional content as well as improve acoustical access? Even better, what if CADS could upload that content to a school Internet server or cloud for students to access as needed, even from home? Wow – the word “access” just took on a whole new meaning! It’s not restricted to simple acoustical access now, but expands to include “on-demand” access of the curriculum - quite a departure from CADS' original goals. The term for this expanded role for CADS is lesson capture, and it is being integrated into CADS in various ways by various manufacturers. It is here to stay, and teachers are finding innovative ways to use lesson capture to improve their content delivery and to foster student engagement.

Here’s another development that is now coming into its own: differentiated instruction. This is a flexible teaching strategy that is designed to maximize each individual student's capabilities as a learner. In essence, a teacher skilled at differentiated instruction can reach each student at their unique educational readiness level and structure the curriculum accordingly. At least one classroom audio distribution system has been designed specifically for differentiated instruction by providing multiple flat panel transducers throughout the classroom. The transducers, or pods, can be individually selected by the teacher for directed instruction, and provide audio monitoring from each pod site as well – two way communication! In practice, this means that the instructor can select zones within the classroom for differentiated instruction, and students can participate by transmitting their responses and insights to the whole class. “Reciprocal access” – wow!

20. The future looks bright?

You bet it does. CADS are becoming true instructional technology systems, not just augmentative systems to address acoustical access issues. The root word of education is “educe”, which is “to bring forth from”. Classroom audio distribution systems are helping educe students’ potential in ways that were not imagined even a few short years ago. Audiologists should be quite proud to be making such an impact on the educational future for all children. I know I am.

References

American Academy of Audiology. (2011). Remote microphone hearing assistance technologies for children and youth from birth to 21 years Supplement B: Classroom audio distribution systems – selection and verification. Retrieved from: https://www.audiology.org/resources/documentlibrary/Documents/HAT_Guidelines_Supplement_A.pdf

American National Standards Institute/Acoustical Society of America. (2010). ANSI/ASA S12.60-2010/Part 1 Acoustical performance criteria, design requirements, and guidelines for schools, Part 1: Permanent schools. Available from the Acoustical Society of America via https://scitation.aip.org/.

American Speech-Language-Hearing Association. (1995). Guidelines for addressing acoustics in educational settings [Guidelines]. Available from www.asha.org/policy.

Cite this content as:

Ostergren, D. (2013, November). 20Q: Improving speech understanding in the classroom - today's solutions. AudiologyOnline, Article 12285. Retrieved from: https://www.audiologyonline.com