From the Desk of Gus Mueller

In the world of clinical decision analysis, audiologic or otherwise, two important concepts are the sensitivity and specificity of the tests that we use to detect a specific disorder or pathology. To refresh your memory, sensitivity is the percentage of positive findings in a group known to have the pathology (true positives), whereas specificity is the percentage of negative findings in patients known not to have the pathology (true negatives). The test’s cut-off value for normal vs. abnormal can be raised or lowered in an attempt to optimize both values; however, as one goes up, the other nearly always goes down.
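To make the cut-off trade-off concrete, here is a minimal Python sketch. The scores are invented for illustration (not data from any study) for a hypothetical test on which a higher score is more abnormal and a score at or above the cut-off counts as a positive finding. The names `with_pathology`, `without_pathology`, and the cut-off values are illustrative assumptions.

```python
# Hypothetical test scores (higher = more abnormal); invented for illustration only.
with_pathology = [4, 6, 7, 8, 9, 10, 11, 12]     # people known to have the pathology
without_pathology = [1, 2, 3, 4, 5, 5, 6, 7]     # people known not to have it


def sensitivity(scores_with, cutoff):
    """Percent of people with the pathology who test positive (score >= cutoff)."""
    positives = sum(1 for s in scores_with if s >= cutoff)
    return 100.0 * positives / len(scores_with)


def specificity(scores_without, cutoff):
    """Percent of people without the pathology who test negative (score < cutoff)."""
    negatives = sum(1 for s in scores_without if s < cutoff)
    return 100.0 * negatives / len(scores_without)


# Raise the cut-off step by step and watch the two values move in opposite directions.
for cutoff in (4, 6, 8):
    print(cutoff, sensitivity(with_pathology, cutoff), specificity(without_pathology, cutoff))
```

With these made-up numbers, raising the cut-off from 4 to 8 drops sensitivity from 100% to 62.5% while specificity climbs from 37.5% to 100%.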

We also need to consider the predictive value of a given test. Positive and negative predictive values are influenced by the prevalence of the disorder in the population being tested. If your caseload has a higher prevalence, then it’s more likely that patients who test positive truly have the disorder. For example, a large right vs. left QuickSIN score asymmetry is more likely to be a “hit” for a retrocochlear pathology in a major otology office than at the neighborhood hearing aid shop.
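The effect of prevalence follows from Bayes’ theorem. The sketch below assumes a hypothetical test with 80% sensitivity and 90% specificity and two invented prevalence values (20% for a specialty otology caseload, 1% for a general hearing aid practice); none of these figures come from QuickSIN data.

```python
def ppv(sens, spec, prevalence):
    """Positive predictive value: P(disorder | positive test), via Bayes' theorem."""
    true_pos = sens * prevalence
    false_pos = (1 - spec) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


def npv(sens, spec, prevalence):
    """Negative predictive value: P(no disorder | negative test)."""
    true_neg = spec * (1 - prevalence)
    false_neg = (1 - sens) * prevalence
    return true_neg / (true_neg + false_neg)


SENS, SPEC = 0.80, 0.90  # hypothetical test characteristics, held constant
for setting, prevalence in [("specialty otology office", 0.20), ("general practice", 0.01)]:
    print(f"{setting}: PPV = {ppv(SENS, SPEC, prevalence):.2f}, "
          f"NPV = {npv(SENS, SPEC, prevalence):.3f}")
```

With these assumed values, the positive predictive value is about 67% at 20% prevalence but only about 7% at 1% prevalence, even though the test itself has not changed.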

There are several published charts, going back over 40 years, that provide the sensitivity and specificity of common diagnostic audiologic tests, some of which we still use today. In most cases, the pathology of interest was an nVIII disorder. Patients were placed in the group with the pathology based on CT scans or surgical findings. But what if we’re testing to detect an auditory processing disorder (APD) that is not related to a specific lesion? How is it possible to calculate the “goodness” of a given test if we don’t have a true positive or true negative reference? Fortunately, many researchers have thought about this, and one of them is here this month to talk not only about clinical decision analysis but also about several other issues surrounding APD diagnosis and testing.

Andrew J. Vermiglio, AuD, is an associate professor at East Carolina University, where he is the director of the Speech Perception Lab. You’re probably familiar with his research and publications in the areas of speech recognition in noise testing, diagnostic accuracy studies, and the central auditory processing disorder (CAPD) construct.

Prior to entering academia, Dr. Vermiglio worked for several years as a senior research associate at the House Ear Institute in Los Angeles, California. He is one of the developers of past commercial versions of the Hearing in Noise Test (HINT), and was part of the team that developed the HINT in 18 languages.

Over the years, Andy has encouraged careful thought regarding how we identify and test for APD. In this engaging 20Q, he reviews the many issues surrounding this sometimes elusive entity, questioning whether we have, or ever will have, a “gold standard.”

Gus Mueller, PhD

Contributing Editor

20Q: Auditory Processing Disorders - Is there a Gold Standard?

Learning Outcomes

After reading this article, professionals will be able to:

- Describe the origins of the APD construct.

- Describe the implications of the APD construct for diagnostics.

- Evaluate the quality of diagnostic accuracy studies for APD tests and test batteries.

1. I find this topic to be rather confusing. Can you give me a clear and simple description of exactly what an auditory processing disorder is?

At an American Academy of Audiology conference in 2009, Dr. James Jerger commented, “there seem to be more descriptions of APD than there are children who actually have it.” Wilson (2019) wrote a very interesting article on the definition of auditory processing disorders (APD). He stated that the “ongoing failure to produce a unified definition” has contributed to APD lacking a few critical scientific elements. According to Wilson, these elements include an account of the essence of APD, an account of the properties of APD, and a demonstration of APD. Now, I know this probably doesn’t seem very helpful when you are trying to understand the basics, but knowing that there are problems with the definition of APD is a critical part of our discussion. I can say that, based on the APD guidelines from the American Academy of Audiology (AAA, 2010), the APD construct is as follows: tests that are sensitive to the presence of a lesion of the central auditory nervous system (CANS) are also sensitive to an APD in the absence of a CANS lesion. Perhaps we should examine the history that led up to the APD construct. This will provide some answers for you.

2. Before we get to the history, what do you mean by the APD construct?

The word “construct” is defined as “an idea or a belief that is based on various pieces of evidence that have not always been proved to be true” (Oxford, 2023). As we review the history, we’ll note various pieces of evidence that have led to the idea of APD. Now, as you probably realize, this may seem like an impossible task because there are so many different definitions of APD, and there is apparently no clear avenue for finding a “unified definition.” However, a historical view of the APD construct will offer some clarity.

3. This all sounds confusing. Please tell me about the history that led to the APD construct.

A few years back, I reviewed the history of the classic research often cited as the roots of APD (Vermiglio, 2016). There are a series of studies that demonstrated that some patients with normal pure-tone thresholds had lesions of the CANS, such as a tumor in the temporal lobe. Now it seems odd that a disruption of the CANS would not affect the patients’ pure-tone thresholds. In one of the earliest studies, Bocca et al. (1954) evaluated a patient with a temporal lobe tumor and found that both pure-tone threshold and speech perception in quiet results were within normal limits. This indicated that the pure-tone threshold and speech perception in quiet tests were not sensitive to the presence of the tumor. The authors decided to try a new speech perception test to see if it might be more sensitive to the presence of the disorder. In this new test, the high-frequency content of the speech stimulus was filtered out. The results of this filtered speech test demonstrated that the ear opposite the tumor had poorer speech perception than the ear ipsilateral to the tumor. For this patient, the filtered speech test appeared to be sensitive to the presence of a temporal lobe tumor. In a subsequent study, Bocca et al. (1955) demonstrated that the filtered speech test was more sensitive than pure-tone threshold testing for most of their patients (n=18) with temporal lobe tumors.

4. That is interesting, but what does this have to do with APD?

We’re getting there! As we continue with this 1950s history, there were a number of other studies that demonstrated that certain behavioral tests are more sensitive to lesions of the CANS than pure-tone threshold testing. These tests include a sound localization test used for the detection of temporal lobe lesions (Sanchez Longo et al., 1957); tonal pattern discrimination and verbal story recall were found to be sensitive to the presence of a temporal lobectomy (Milner, 1958); and speech recognition in noise testing was used for the detection of temporal lobe lesions (Sinha, 1959). Once we get to the 1960s, dichotic listening testing was found to be sensitive to the presence of a temporal lobectomy in the classic work of Kimura (1961); distorted speech was found to be sensitive to a left temporal glioblastoma and left temporal epilepsy (Jerger, 1960); staggered spondaic words were found to be sensitive to the presence of unilateral head trauma (Katz et al., 1963); and in later years, time compressed speech was found to be sensitive to diffuse and discrete cortical lesions (Kurdziel et al., 1976). These tests should sound familiar since many of these, or various versions of these tests, are commonly used as part of an APD test battery today. Notice that in all of these classic studies, the target disorders (or target conditions) were known, and their presence was verifiable. In a diagnostic accuracy study, the test used for the verification of a target disorder is called the “gold” or reference standard test.

5. APD is the same as a lesion of CANS, correct?

Well, yes and no! An APD is sometimes referred to as a central auditory processing disorder (CAPD) or a (central) auditory processing disorder, (C)APD. According to the guidelines on (C)APD from the American Academy of Audiology (AAA, 2010), “(C)APD has been linked to a number of different etiological bases, including frank neurological lesions…” The authors also wrote, “Since (C)APD is defined as dysfunction within the CANS (ASHA, 2005; Bellis, 2003; Jerger & Musiek, 2000) the test battery used in diagnosis and assessment should include tests known to identify lesions of the CANS (including diffused lesions) and to define its functional auditory deficits (e.g., listening deficits).” In other words, tests with documented sensitivity to the presence of lesions of the CANS are relevant for the detection of a (C)APD (or APD). According to the AAA (2010), APD may exist with and without a lesion of the CANS.

6. Well, this sounds reasonable. Tests sensitive to lesions of the CANS should be used to detect APD in the absence of a lesion of the CANS. Do I have this right?

This is the main presumption of the APD construct put forth by the AAA (2010). An early description of this idea was presented by Jack Willeford (Willeford, 1976). He administered tests with “established diagnostic value in brain-damaged adults” to children with learning disabilities and “presumed auditory dysfunction.” These tests included dichotically competing messages, filtered speech, binaural fusion (dichotic), and alternating speech tests—this became known among clinicians as the “Willeford Battery.” Willeford thought that these tests would be useful in the study of disabilities in reading, spelling, mathematics, language, and “a wide variety of functions observed in children who experienced difficulty with the academic process and/or social accommodation.”

7. APD tests that are sensitive to lesions of the CANS can give us insight into learning difficulties in children. If this is the case, why does there seem to be disagreement about APD among professionals in audiology and speech-language pathology?

This is where the heavy lifting comes in. At first glance, this seems like a reasonable idea. However, the scientific method involves not only the formulation of ideas (or hypotheses) but also the testing of those ideas (Meyer, 2021). An investigation of the APD construct requires an examination of its philosophical foundation. This involves the concept of the legitimacy of disorders (a legitimate disorder is called a clinical entity) and the basics of diagnostics. We also need a clear understanding of the diagnostic system used to determine the ability of the test under evaluation (the index test) to identify the presence and absence of a target disorder. A diagnostic system includes the index test, the target disorder, and the “gold” or reference standard test or test battery.

8. Well, that’s all well and good, but I’m really only interested in knowing what APD is and how to diagnose it so that I can help children and adults with auditory problems not detected by pure-tone threshold testing.

I’m all for helping patients too, and that includes understanding the evidence behind the tests that we use. As an audiologist, I believe that it’s important for you to understand the controversy surrounding APD. You will need to explain these issues to your patients when they ask about this testing. You will need to be able to discuss these issues with your patients’ family members and your colleagues in speech-language pathology, along with physicians, schoolteachers, school psychologists, occupational therapists, and others. In this age of the internet, there is easy access to vast amounts of information on APD. A lot of this information is, well, let’s just say it’s not very helpful. In the presence of competing points of view, your patients and colleagues will look to you for clarity and guidance supported by research evidence.

9. What are some of the controversial issues that I should know about?

According to the British Society of Audiology (BSA, 2011), “APD diagnosis is based on a large number of tests, none of which have robust scientific validity, not least because there is no agreed ‘gold standard’ [aka reference standard] against which validity can be assessed. Management strategies are consequently and similarly under-informed.” The insurance company Aetna (2023) published a report on this topic stating: “Aetna’s policy on APD is based upon the limited evidence for APD as a distinct pathophysiologic entity, upon a lack of evidence of established criteria and well validated instruments to diagnose APD and reliably distinguish it from other conditions affecting listening and/or spoken language comprehension, and upon the lack of evidence from well-designed clinical studies proving the effectiveness of interventions for treating APD. The reported frequent co-occurrence of APD with other disorders affecting listening and/or spoken language comprehension suggests that APD is not, in fact, a distinct clinical entity.”

10. Wait a minute, now why should we believe anything from an insurance company? What do they know about APD?

A reasonable question; however, it appears that Aetna did their homework. They based their conclusions on a review of over 70 research articles and books in audiology and neuroscience. Their review is well written. You can read it in Aetna’s Medical Clinical Policy Bulletin, “Auditory Processing Disorder (APD).”

11. Okay, I’ll take a look. What does Aetna mean when they say that APD is not a distinct clinical entity? I’m unfamiliar with that term.

I’m glad you noticed that. This is part of the heavy lifting I was talking about. After reading Aetna’s policy on APD, I too wanted more information on the concept of the clinical entity. I had never heard of this term before. After a lengthy search, I found an old research article titled “On the Clinical Entity” by Guttentag (1949). The story behind Guttentag’s article is compelling (California, 2000). Otto Ernst Guttentag was a German physician with a Jewish background who lived during the time of Hitler’s rise to power. Dr. Guttentag fled to the United States, where he eventually landed a position as a professor of the philosophy of medicine at the University of California at San Francisco (UCSF). He joined the U.S. Army, and at the conclusion of WWII, Guttentag was asked by the American Military Government to help with the rehabilitation of the German medical schools. You can only imagine how chaotic this time must have been. It was during this period that Guttentag wrote his article on the clinical entity (Guttentag, 1949).

12. What did Guttentag say about the clinical entity and what does it have to do with APD?

Guttentag (1949) described the problems with the identification of legitimate disorders (also called clinical entities) in medicine. Following a very interesting review of the literature, he presented the criteria for the identification of a clinical entity. I wrote about these criteria in an article called “On the Clinical Entity in Audiology: (Central) Auditory Processing and Speech Recognition in Noise Disorders” (Vermiglio, 2014). The criteria are based on the writings of Guttentag (1949), the Food and Drug Administration (FDA, 2000), and the writings of Sydenham in 1676 as translated by Meynell (2006). The Sydenham-Guttentag criteria for the clinical entity are as follows:

- The clinical entity must possess an unambiguous definition.

- It must represent a homogeneous patient group.

- It must represent a perceived limitation.

- It must facilitate diagnosis.

- It must facilitate intervention.

In the article, these criteria were applied to APD to determine if it is a legitimate disorder (clinical entity).

13. What did you find? Is APD a clinical entity?

We’ll take it one criterion at a time. First, APD does not have an unambiguous definition. As noted earlier there are many descriptions of APD (Jerger, 2008). Recall that Wilson (2019) wrote about the ongoing failure to provide a unified definition of APD. Second, because APD can mean so many different things it does not represent a homogeneous patient group (ASHA, 2005).

Third, it is unclear if APD represents a perceived limitation for the patient. Dillon et al. (2012) questioned the relationship between APD test results and a problem in real life for the patient. They wrote that “a fail does not necessarily indicate that the patient actually has a problem.” This is consistent with Chermak et al. (2017) who wrote, “Since central auditory measures show a significant degree of construct validity in neurological models of CAPD, [the] lack of agreement between observer-report and performance-based measures indicates that subjective report of listening difficulties is not predictive of true CAPD.” This represents a disconnect between an APD diagnosis and the presence of a limitation for the patient.

Fourth, APD does not facilitate diagnosis. As previously noted by the British Society of Audiology (BSA, 2011) and Aetna (2023), APD tests are of questionable validity. The Excellus medical policy (Excellus, 2021) states, “Based upon our criteria and assessment of the peer-reviewed literature, auditory processing disorder (APD) testing is considered not medically necessary, as it does not improve patient outcomes, and there is insufficient evidence to support the validity of the diagnostic tests utilized in diagnosing an [APD].” The authors also wrote, “Although APD testing may be accepted among some practitioners, an evidence-based approach to testing is limited, due to the many different tests utilized, the lack of a ‘gold standard’ test for comparison, the heterogeneous nature of patients who have been tested (based both on age and symptoms), and the uncertain impact on the overall health of the patient.”

Fifth, APD does not facilitate intervention. In a study commissioned by ASHA, Fey et al. (2011) reviewed the literature and concluded, “The evidence base is too small and weak to provide clear guidance to speech-language pathologists faced with treating children with diagnosed APD...” The bottom line is that using the Sydenham-Guttentag criteria, APD is not a clinical entity. That is, it is not a legitimate disorder.

14. I know some audiologists who would disagree with your conclusion. But moving on, what is the concern with the lack of a “gold standard” test for APD? Is this really an important issue?

I certainly think so. This brings us to the controversial area of diagnostics and APD. In the literature, we find a poor understanding of APD and of diagnostics in general. Without a clear understanding of diagnostics, it is not possible to know the accuracy of the tests or test batteries for APD. Problems with the procurement of a “gold” or reference standard test for the diagnosis of APD have been noted by several authors (Aetna, 2022; ASHA, 2005; Chermak et al., 2017; Schow et al., 2020; Vermiglio, 2018). Schow et al. (2021) wrote, “Unfortunately, all of us doing work in this area have difficulty finding an ideal reference (gold) standard and, therefore, finding rock solid sensitivity numbers remains elusive.” The absence of a “gold” or reference standard test produces uncertainty in diagnostics. It also makes it impossible to validate the very presence of the disorder, and therefore verification of an APD diagnosis is not possible.

15. What exactly is a “gold standard” test, and how does it relate to diagnostics?

The “gold” or reference standard test is an integral part of a diagnostic system used in diagnostic accuracy studies. It is used to evaluate the accuracy of the test under evaluation, which is called an index test or index test battery. A diagnostic accuracy study is used to determine the ability of the index test to correctly identify the presence and absence of the target disorder (or clinical entity). Positive and negative index test results indicate that the disorder is present or absent, respectively. The results of the index test are compared to the results of a “gold” or reference standard test to determine if the positive and negative index test results are true or false. According to Bossuyt et al. (2003), the “gold” or reference standard test is considered the best available method to establish the presence and absence of the target disorder. It may be a single test or test battery, often referred to as a “lax” or “strict” approach (Wilson et al., 2004). According to Papesh et al. (2023) “no gold standard currently exists for the diagnosis of APD in that there is currently no agreed-upon test measure that conclusively indicates an auditory processing deficit. Rather, clinical diagnoses of APD generally depend upon the results of a battery of tests that assess several areas of auditory function.” APD diagnoses, therefore, are based on positive test or test battery results without the possibility of verification.
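When a battery rather than a single test is used, the rule for combining results changes the battery’s accuracy. The Python sketch below calls a battery positive when at least `k` of its tests are positive; `k=1` is a lenient rule and `k` equal to the number of tests is a strict one. These “lenient” and “strict” labels are our illustrative assumptions and may not match the definitions used by Wilson et al. (2004). The per-test sensitivity and specificity values are hypothetical, and the sketch assumes that test results are independent given disorder status, which real tests tapping related skills are unlikely to be. Note also that per-test accuracy figures are themselves only knowable if a reference standard exists.

```python
from itertools import product


def battery_accuracy(tests, k):
    """Return (sensitivity, specificity) of a test battery, as proportions.

    tests: list of (sensitivity, specificity) pairs for the individual tests.
    k: the battery is called positive when at least k tests are positive.
    Assumes (hypothetically) that test results are independent given
    disorder status.
    """
    battery_sens = 0.0    # P(battery positive | disorder present)
    false_positive = 0.0  # P(battery positive | disorder absent)
    # Enumerate every pattern of positive/negative results across the tests.
    for outcome in product([True, False], repeat=len(tests)):
        if sum(outcome) < k:
            continue  # this pattern yields a negative battery result
        p_disorder = 1.0
        p_control = 1.0
        for positive, (sens, spec) in zip(outcome, tests):
            p_disorder *= sens if positive else 1 - sens
            p_control *= (1 - spec) if positive else spec
        battery_sens += p_disorder
        false_positive += p_control
    return battery_sens, 1 - false_positive


# Three hypothetical tests, each 70% sensitive and 90% specific.
tests = [(0.7, 0.9)] * 3
lenient = battery_accuracy(tests, k=1)  # positive if at least one test is failed
strict = battery_accuracy(tests, k=3)   # positive only if all three are failed
```

Under these assumptions, the lenient rule gives roughly 97% sensitivity and 73% specificity, while the strict rule gives roughly 34% sensitivity and 99.9% specificity. The same three tests can therefore look very different depending on how the battery is scored.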

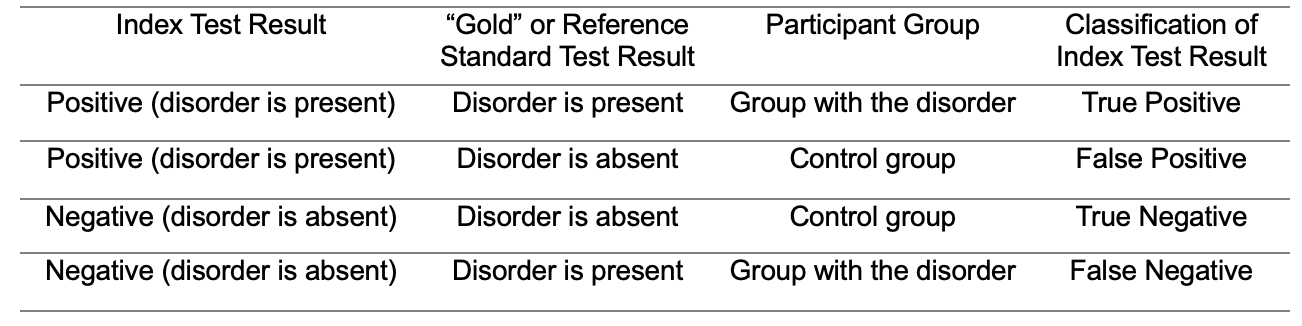

In a diagnostic accuracy study, sensitivity refers to the ability of the index test to correctly identify the presence of the target disorder, and specificity refers to its ability to correctly identify the absence of the target disorder. The “gold” or reference standard test is used to place the participants into groups with and without the target disorder. This is very important to know, since not all diagnostic accuracy studies adequately identify the “gold” or reference standard test. The group without the disorder is called the control group. Table 1 shows the classification of index test results. This table shows the index test result, the “gold” or reference standard test result, and the participant’s group. This information is used to classify the index test result. If a positive index test result is found for a participant from the group with the disorder, it is classified as a true positive index test result. If a positive index test result is found for a participant from the control group, it is classified as a false positive index test result. If a negative index test result is found for a participant from the control group, it is classified as a true negative index test result. If a negative index test result is found for a participant from the group with the disorder, it is classified as a false negative index test result. Sensitivity, sometimes referred to as the “hit rate,” is the percentage of participants in the group with the disorder whose index test results are true positives. Specificity is the percentage of participants in the control group whose index test results are true negatives (subtracting the specificity from 100% gives the “false alarm” rate). Again, unless a “gold” or reference standard test is used, it is not possible to know the ability of our tests to determine the presence and absence of the target disorder.

In clinical audiology, we use sensitivity and specificity values to determine what should or should not be included in our test battery. For example, Koors et al. (2013) conducted a meta-analysis of several studies on the diagnostic accuracy of the auditory brainstem response (ABR) for the detection of vestibular schwannomas. The authors noted that their review of 43 studies revealed a pooled sensitivity of 93.4% for the detection of vestibular schwannomas. This is useful information for clinicians when deciding whether or not to recommend ABR testing for patients with potential retrocochlear lesions.
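The classification rules in Table 1 can be written out as a short Python sketch. The participant list is invented for illustration, and the function names `classify` and `summarize` are hypothetical. The `has_disorder` flag stands for the group assignment made by the “gold” or reference standard test.

```python
from collections import Counter


def classify(index_positive, has_disorder):
    """Classify one index test result following the logic of Table 1.

    has_disorder is the group assignment from the "gold" or reference
    standard test (True = group with the disorder, False = control group).
    """
    if index_positive:
        return "true positive" if has_disorder else "false positive"
    return "false negative" if has_disorder else "true negative"


def summarize(results):
    """results: list of (index_positive, has_disorder) pairs."""
    counts = Counter(classify(i, d) for i, d in results)
    tp, fn = counts["true positive"], counts["false negative"]
    tn, fp = counts["true negative"], counts["false positive"]
    sensitivity = 100 * tp / (tp + fn)  # "hit rate" within the disorder group
    specificity = 100 * tn / (tn + fp)  # correct negatives within the control group
    false_alarm = 100 - specificity
    return sensitivity, specificity, false_alarm


# Invented example: five participants with the disorder, five controls.
results = ([(True, True)] * 4 + [(False, True)]
           + [(False, False)] * 3 + [(True, False)] * 2)
print(summarize(results))  # (80.0, 60.0, 40.0)
```

Without the reference standard column (`has_disorder`), none of the four cells can be filled in, which is the core problem for APD.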

Table 1. Classification of index test results.

16. I get it, but didn’t AAA provide adequate guidance for the accurate diagnosis of APD?

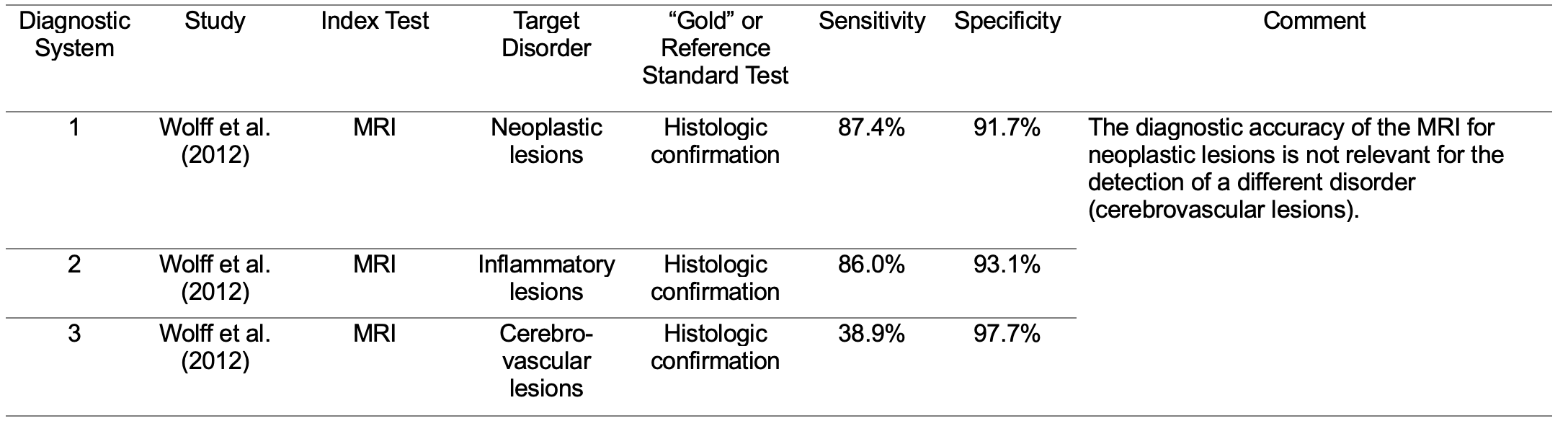

No, and this is a significant problem! According to the AAA (2010), the diagnostic accuracy of a test used to detect one disorder (e.g., a lesion of the CANS) is considered relevant for the detection of a separate disorder (e.g., an APD in the absence of a CANS lesion). This presumption is a form of what is often referred to as target displacement. The study by Wolff et al. (2012) indicates that target displacement in diagnostics is not necessarily appropriate. Wolff and colleagues investigated the diagnostic accuracy of magnetic resonance imaging, or MRI (in this case, the index test), for the detection of three different brain lesions: neoplastic, inflammatory, and cerebrovascular (Table 2, diagnostic systems 1-3). Histologic confirmation was used as the “gold” or reference standard test. The sensitivity of the MRI for the detection of neoplastic lesions was 87.4%, but only 38.9% for the detection of cerebrovascular lesions. The sensitivity of an index test for the detection of one disorder (e.g., cerebrovascular lesions) must be evaluated directly and not inferred from the sensitivity of the same index test for the detection of a separate disorder (e.g., neoplastic lesions). Target displacement in diagnostics is risky. I believe that the “accurate diagnosis” of APD based on the AAA (2010) document rests on the logical fallacy of target displacement.

Table 2. Examples of diagnostic systems used to evaluate the accuracy of the MRI when used to determine the presence and absence of three different brain lesions.

17. I see your point, but isn’t this just an issue of competing professional opinions?

That is a very important question. Chermak et al. (2017) have argued that the inclusion of APD in the International Classification of Diseases, 10th revision (ICD-10; code H93.25) “confirms the physiological nature of this disorder and supports the medical necessity for care.” The ICD is a classification system developed by the World Health Organization and 10 international centers so that medical terms can be grouped for statistical purposes (CDC, 2001). However, according to Aetna (2023), even though APD is listed in the ICD-10, “Aetna considers any diagnostic tests or treatments for the management of auditory processing disorder (APD) (previously known as central auditory processing disorder (CAPD)) experimental and investigational because there is insufficient scientific evidence to support the validity of any diagnostic tests and the effectiveness of any treatment for APD.”

Chermak et al. (2018) wrote that “a clinically useful and widely adopted gold standard for the diagnosis of APD is in use across the world, and this efficient test battery leads to verifiable diagnosis of APD…” Let’s carefully examine this statement. There are multiple tests used for the diagnosis of APD. There is no standardized test battery. It is not clear which test battery Chermak and colleagues are referring to. Should any test battery with any combination of tests be considered a “gold standard”? This is a presumption that must be tested. When Chermak et al. state that it is efficient, this implies that a diagnostic accuracy study was conducted and that the sensitivity and specificity of the “gold standard” test battery are known. However, Chermak and colleagues did not present evidence to support this idea.

18. But aren’t there studies where an APD test battery is used as a “gold” standard?

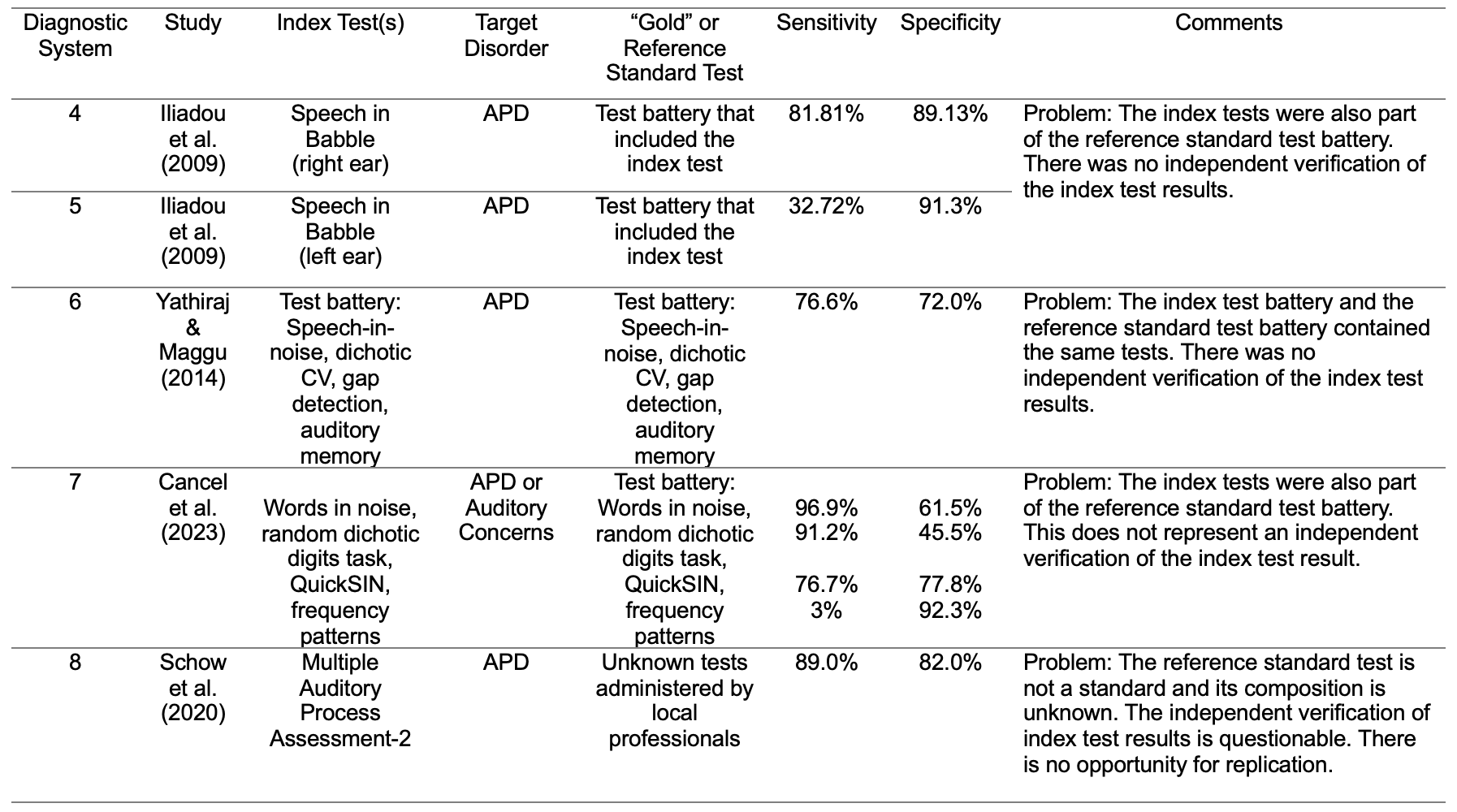

Unfortunately, you’re right. One approach to circumvent the lack of a gold standard test for APD has been to arbitrarily select an APD test battery and call it a “gold standard,” even in the absence of empirical support (Cancel et al., 2023; Iliadou et al., 2009; Yathiraj & Maggu, 2014). In some diagnostic accuracy studies, the “gold” or reference standard test battery for an APD was unknown or not specified (Keith, 2012; Schow et al., 2020). In these types of studies, it is not uncommon to find that the index test is also part of the gold standard test battery. Table 3 shows the diagnostic systems (4-8) from four diagnostic accuracy studies (Cancel et al., 2023; Iliadou et al., 2009; Schow et al., 2020; Yathiraj & Maggu, 2014). In each study, the target disorder was an APD.

- Iliadou and colleagues evaluated the diagnostic accuracy of various index tests including a speech in babble test for the detection of an APD (diagnostic systems 4 and 5). However, the index tests were also used as part of the reference standard test battery.

- Yathiraj and Maggu (2014) investigated the diagnostic accuracy of an index test battery (diagnostic system 6) for the detection of an APD. However, the tests in the index test battery were also part of the reference standard test battery.

- Cancel et al. (2023) investigated the ability of individual behavioral tests to detect the presence of an APD or an auditory concern (diagnostic system 7). The authors appear to have included the index tests as part of the reference standard test battery (personal communication: Parthasarathy, corresponding author, on September 17, 2023).

- Schow et al. (2020) reported on the diagnostic accuracy of the Multiple Auditory Processing Assessment-2 (MAPA-2) test battery for the identification of an APD (diagnostic system 8). However, the “gold” or reference standard test batteries were unknown and were administered by “local professionals.” Whether the index test(s) also appeared as part of the “gold” or reference standard test or test battery is unknown but probable.

Ransohoff and Feinstein (1978) wrote that incorporation bias occurs when the results of the index test are incorporated into the evidence (from the “gold” or reference standard test) used to diagnose the disease. They also stated that since the evidence used for the “true” diagnosis should be independent of the index test result, such incorporations will “obviously bias the apparent accuracy of the [index] test.”
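A small Python sketch shows how incorporation bias inflates apparent accuracy. It assumes a hypothetical reference battery that is positive when any of its tests is positive, and a set of eight invented participants covering every pass/fail pattern on three tests, A, B, and C. Test A plays the role of the index test and, by construction, carries no real information about the other tests. The function name `accuracy` is illustrative, not taken from any of the cited studies.

```python
from itertools import product

# Hypothetical participants: every combination of results on three tests
# (True = abnormal/positive). Test "A" plays the role of the index test.
participants = [dict(zip("ABC", results))
                for results in product([True, False], repeat=3)]


def accuracy(index, reference_battery, people):
    """Sensitivity and specificity (%) of the index test against a reference
    battery that is positive when ANY of its tests is positive (an assumed rule)."""
    tp = fn = tn = fp = 0
    for person in people:
        has_disorder = any(person[test] for test in reference_battery)
        positive = person[index]
        if has_disorder and positive:
            tp += 1
        elif has_disorder:
            fn += 1
        elif positive:
            fp += 1
        else:
            tn += 1
    return 100 * tp / (tp + fn), 100 * tn / (tn + fp)


# Index test incorporated into the reference battery vs. kept independent of it.
incorporated = accuracy("A", ["A", "B", "C"], participants)
independent = accuracy("A", ["B", "C"], participants)
```

Against the independent reference (B and C only), test A scores 50% sensitivity and 50% specificity, which is no better than chance. Once A is folded into the reference battery, every A-positive participant is automatically placed in the disordered group, so false positives become impossible: specificity jumps to 100% and sensitivity rises to about 57%, even though the index test has not improved at all.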

Across the studies in Table 3, even if the index tests were not included in the gold standard test batteries, the authors would still need to provide evidence of the accuracy of their selected gold standard tests. This is because the legitimacy of these gold standard tests is not self-evident.

Table 3. Examples of diagnostic systems for tests used to determine the presence and absence of an APD. Problems with each of these diagnostic systems are noted in the comments.

19. Well, this is all a little disturbing. Should we simply abandon the idea of APD testing?

I’m glad you brought this up. To my way of thinking, we have a choice to make. Either we can assess an APD in the absence of a CANS lesion, that is, an ambiguous disorder that represents a heterogeneous patient group, where it’s not clear if a functional limitation exists, and one without the benefit of an empirically based gold standard test, or we can identify and diagnose legitimate disorders (clinical entities) using tests with known and reasonable diagnostic accuracy. These legitimate disorders include lesions of the CANS. They may also include some disorders currently associated with the APD construct. For example, according to the Sydenham-Guttentag criteria that we discussed earlier, a speech recognition in noise (SRN) disorder is a clinical entity. Unambiguously defined clinical entities lend themselves to the procurement of reasonable “gold” or reference standard tests. These tests may then be evaluated in diagnostic accuracy studies in order to determine their diagnostic efficiency.

An SRN disorder may be defined simply as difficulty recognizing speech in the presence of a competing signal. An SRN disorder in the presence of normal pure-tone thresholds has been called by many names, including psychogenic deafness (King, 1954), obscure auditory dysfunction (Saunders & Haggard, 1989), and the King-Kopetzky Syndrome (Hinchcliffe, 1992). It has also been called an APD (Pryce et al., 2010); however, APD is ambiguous, and it may or may not include SRN difficulties (Kuk et al., 2008; Vermiglio, 2014). An SRN disorder, on the other hand, is unambiguous.

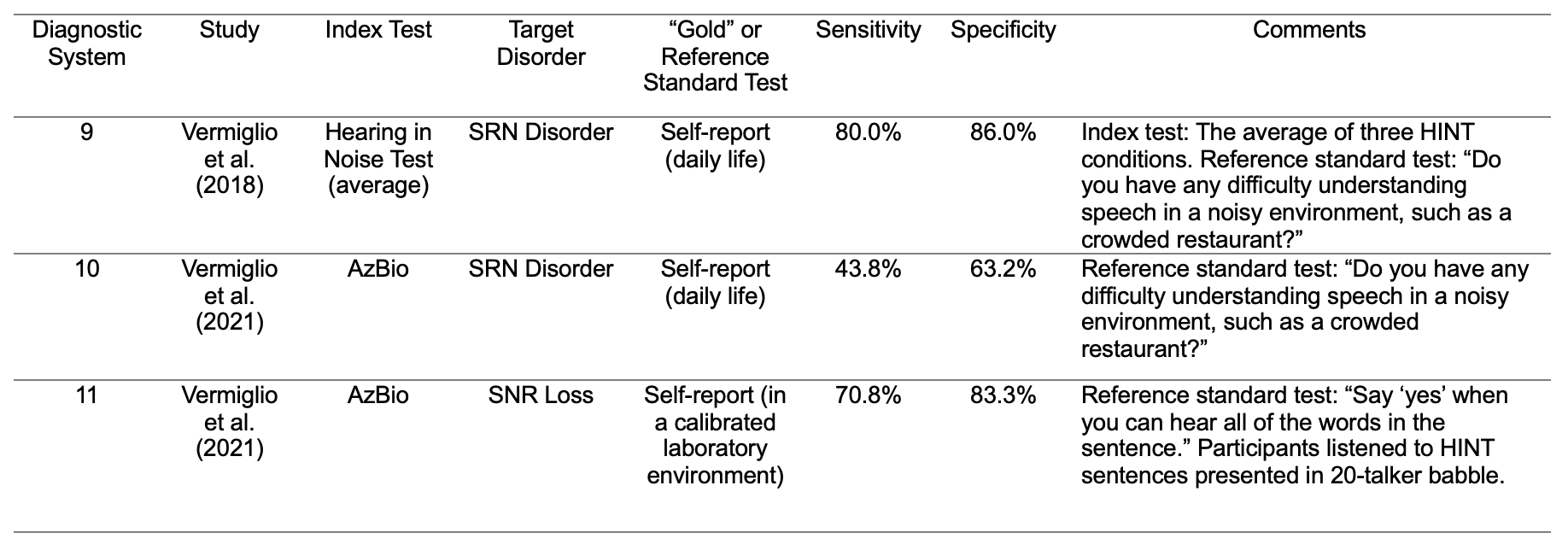

My colleagues and I have argued that self-report is a reasonable gold or reference standard test for an SRN disorder (Vermiglio et al., 2018). Previous diagnostic accuracy studies have used self-report as a reference standard test to distinguish between the presence and absence of various target disorders. These include tinnitus (Schaette & McAlpine, 2011), pain (Stilma et al., 2015), hearing loss (Beasley, 1940; Steinberg et al., 1940), and an SRN disorder (Middelweerd et al., 1990; Saunders & Haggard, 1992; Vermiglio & Fang, 2022; Vermiglio et al., 2021; Zhao & Stephens, 2006). In these studies, the “gold” or reference standard tests were self-reports of sensory perception, so their legitimacy is self-evident. In the field of audiology, hearing questionnaires require self-report to convey sensory perception (listening experiences) in daily life. Pure-tone threshold testing and psychoacoustic tasks in the lab (or clinic) also require self-report to convey sensory perception. Vermiglio et al. (2021) used both types of self-report as “gold” or reference standard tests.

As shown in Table 4, diagnostic systems 9-11 were used to evaluate how well two different SRN tests detect the presence and absence of an SRN disorder or a signal-to-noise ratio (SNR) loss (Vermiglio, 2018; Vermiglio et al., 2021). In these studies, the “gold” or reference standard test was self-report of the ability to hear speech in noise. For diagnostic systems 9 and 10, this self-report concerned daily life. For diagnostic system 11, it concerned a calibrated laboratory environment. Participants who reported the presence of the target disorder (e.g., an SRN disorder) were placed in the disordered group, and all other participants were placed in the control group. The index tests were the Hearing in Noise Test (HINT; Nilsson et al., 1994; Vermiglio, 2008) and the AzBio SRN test (Spahr et al., 2012).

The sensitivity and specificity varied across index tests and across target disorders. In audiology, these types of evaluations should be replicated to establish the consistency of diagnostic accuracy results across studies. An SRN disorder is not the only potential clinical entity associated with the APD construct. Others include a temporal resolution disorder (Tremblay et al., 2003), amblyaudia (Moncrieff, 2011), and a spatialized speech-in-noise disorder (Cameron et al., 2006).
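
In diagnostic systems like these, sensitivity and specificity come from cross-tabulating each participant's index-test result against the group assigned by the reference standard. The sketch below is a minimal Python illustration under several assumptions. First, it assumes a threshold-type index test scored in dB SNR, where a threshold above a chosen cutoff counts as positive. For a percent-correct test such as AzBio, the comparison would flip. Second, the `Participant` fields, the cutoff, and the participant values are hypothetical and are not data from the studies cited. Third, "efficiency" here means the proportion of participants classified correctly.

```python
from dataclasses import dataclass


@dataclass
class Participant:
    # Hypothetical record: group assignment from the reference standard
    # (self-reported SRN difficulty) plus a score on the index test.
    reports_srn_difficulty: bool
    index_score_db: float  # assumed SNR threshold in dB; higher is worse


def diagnostic_accuracy(participants, cutoff_db):
    """Return (sensitivity, specificity, efficiency) for one cutoff.

    A threshold above cutoff_db is scored as a positive index result
    (an assumption suited to threshold-type tests, not percent-correct tests).
    """
    tp = fn = fp = tn = 0
    for p in participants:
        test_positive = p.index_score_db > cutoff_db
        if p.reports_srn_difficulty:  # disordered group
            if test_positive:
                tp += 1
            else:
                fn += 1
        else:  # control group
            if test_positive:
                fp += 1
            else:
                tn += 1
    sensitivity = tp / (tp + fn)  # true-positive rate in the disordered group
    specificity = tn / (tn + fp)  # true-negative rate in the control group
    efficiency = (tp + tn) / len(participants)  # proportion correctly classified
    return sensitivity, specificity, efficiency


# Invented values for illustration only.
sample = [
    Participant(True, 1.0),
    Participant(True, -1.5),
    Participant(True, 0.5),
    Participant(False, -3.0),
    Participant(False, -2.5),
    Participant(False, 0.2),
    Participant(False, -4.0),
]
print(diagnostic_accuracy(sample, cutoff_db=-1.0))
```

Raising or lowering `cutoff_db` trades sensitivity against specificity. The grouping, and therefore every number the function returns, depends entirely on the reference standard that assigned participants to groups.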

Table 4. Examples of diagnostic systems for the evaluation of tests used to determine the presence and absence of an SRN disorder or a signal-to-noise ratio (SNR) loss.

Again, a path forward is to use the clinical entities approach to identify legitimate disorders (clinical entities) currently associated with the APD construct, find appropriate “gold” or reference standard tests, and determine the diagnostic accuracy of behavioral tests used for the detection of these legitimate target disorders.

20. You've really given me a lot to think about. Any closing words?

Sure, here is a fairly concise summary of what we've been talking about. The APD construct is based on the untested and untestable presumption that tests relevant for the detection of a lesion of the CANS are also relevant for the detection of an APD in the absence of a CANS lesion. This presumption cannot be evaluated because there is no “gold” or reference standard test for APD in the absence of a CANS lesion. Without a gold standard, there is no way to determine whether the disorder is even present, and no way to determine whether the test results and diagnoses are correct. This creates a breeding ground for widespread speculation in the form of “expert opinion” or “professional judgment” that lacks empirical support. This runs counter to the scientific method, in which ideas (or hypotheses) are tested. The value of diagnostic tests in audiology rests on their ability to correctly identify the presence and absence of a target disorder. A critical analysis of a diagnostic accuracy study requires a review of the diagnostic system, including the index test, target disorder, and the “gold” or reference standard test. This will provide clarity as we replace APD diagnostics with the diagnosis of legitimate auditory disorders (clinical entities) in the presence of normal pure-tone thresholds.

References

American Academy of Audiology. (2010). Clinical Practice Guidelines: Diagnosis, Treatment and Management of Children and Adults with Central Auditory Processing Disorder. Reston, VA: American Academy of Audiology. http://www.audiology.org/resources/documentlibrary/Documents/CAPD Guidelines 8-2010.pdf

Aetna. (2022). Auditory Processing Disorder (APD) Clinical policy bulletin. No. 0668. Retrieved 3/25/2022 from http://www.aetna.com/cpb/medical/data/600_699/0668.html

Aetna. (2023). Auditory Processing Disorder: Experimental and Investigational. Retrieved from https://www.aetna.com/cpb/medical/data/600_699/0668.html

ASHA. (2005). (Central) Auditory Processing Disorders. Retrieved from www.asha.org/policy

Beasley, W. C. (1940). Characteristics and distribution of impaired hearing in the population of the United States. J. Acoust. Soc. Am., 12.

Bellis, T. J. (2003). Assessment and management of central auditory processing disorders in the educational setting: From science to practice (Second ed.). San Diego: Singular.

Bocca, E., Calearo, C., & Cassinari, V. (1954). A new method for testing hearing in temporal lobe tumours; preliminary report. Acta Otolaryngol, 44(3), 219-221.

Bocca, E., Calearo, C., Cassinari, V., & Migliavacca, F. (1955). Testing "cortical" hearing in temporal lobe tumours. Acta Otolaryngol, 45(4), 289-304. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/13275293

Bossuyt, P. M., Reitsma, J. B., Bruns, D. E., Gatsonis, C. A., Glasziou, P. P., Irwig, L. M., . . . Lijmer, J. G. (2003). The STARD statement for reporting studies of diagnostic accuracy: explanation and elaboration. The Standards for Reporting of Diagnostic Accuracy Group. Croat Med J, 44(5), 639-650.

BSA. (2011). Position Statement: Auditory processing disorder (APD). Berkshire, UK: British Society of Audiology.

California (2000). Otto Ernst Guttentag Somatotypes Project Records, MSS 80-8, Archives & Special Collections, UCSF Library & CKM. Retrieved from https://oac.cdlib.org/findaid/ark:/13030/tf5v19p12s/entire_text/

Cancel, V. E., McHaney, J. R., Milne, V., Palmer, C., & Parthasarathy, A. (2023). A data-driven approach to identify a rapid screener for auditory processing disorder testing referrals in adults. Sci Rep, 13(1), 13636. doi:10.1038/s41598-023-40645-0

CDC. (2001). International Classification of Diseases 10th Revision (ICD-10). Retrieved from https://www.cdc.gov/nchs/data/dvs/icd10fct.pdf

Chermak, G. D., Iliadou, V. M., Bamiou, D. E., & Musiek, F. (2018). Letter to the Editor: Response to Vermiglio, 2018, “The Gold Standard and Auditory Processing Disorder”. Perspectives of the ASHA Special Interest Groups, SIG 6, 3(Part 2), 77-82. doi:10.1044/persp3.SIG6.77

Chermak, G. D., Musiek, F., & Weihing, J. (2017). Beyond controversies: The science behind central auditory processing disorder. Hearing Review, 24(5), 20-24.

Dillon, H., Cameron, S., Glyde, H., Wilson, W., & Tomlin, D. (2012). An opinion on the assessment of people who may have an auditory processing disorder. J Am Acad Audiol, 23(2), 97-105. doi:10.3766/jaaa.23.2.4

Excellus. (2021). Medical Policy: Auditory Processing Disorder (APD) Testing. Retrieved from https://www.excellusbcbs.com/documents/20152/127121/Auditory+Processing+Disorder+(APD)+Testing.pdf/532d7138-d7a2-daa7-48c0-71998dd160a5?t=1641497732139

FDA. (2000). Division of Neuropharmacological Drug Products (DNDP) Issues Paper for March 9, 2000 Meeting of the Psychopharmacological Drugs Advisory Committee Meeting on the Various Psychiatric and Behavioral Disturbances Associated with Dementia.

Fey, M. E., Richard, G. J., Geffner, D., Kamhi, A. G., Medwetsky, L., Paul, D., . . . Schooling, T. (2011). Auditory processing disorder and auditory/language interventions: an evidence-based systematic review. Lang Speech Hear Serv Sch, 42(3), 246-264. doi:10.1044/0161-1461(2010/10-0013)

Guttentag, O. E. (1949). On the clinical entity. Ann Intern Med, 31(3), 484-496. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/18137165

Hinchcliffe, R. (1992). King-Kopetzky syndrome: An auditory stress disorder? Journal of Audiological Medicine, 1, 89-98.

Iliadou, V., Bamiou, D. E., Kaprinis, S., Kandylis, D., & Kaprinis, G. (2009). Auditory Processing Disorders in children suspected of Learning Disabilities--a need for screening? Int J Pediatr Otorhinolaryngol, 73(7), 1029-1034. doi:10.1016/j.ijporl.2009.04.004

Jerger, J. (1960). Observations on auditory behavior in lesions of the central auditory pathways. AMA Arch Otolaryngol, 71, 797-806. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/14407164

Jerger, J. (2008). A Brief History of Audiology in the United States. In S. Kramer (Ed.), Audiology: Science to Practice (pp. 333-344). San Diego: Plural Publishing.

Jerger, J., & Musiek, F. (2000). Report of the Consensus Conference on the Diagnosis of Auditory Processing Disorders in School-Aged Children. J Am Acad Audiol, 11(9), 467-474. Retrieved from https://www.ncbi.nlm.nih.gov/pubmed/11057730

Katz, J., Basil, R. A., & Smith, J. M. (1963). A Staggered Spondaic Word Test for Detecting Central Auditory Lesions. Ann Otol Rhinol Laryngol, 72, 908-917. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/14088732

Keith, R. (2012). Technical Report SCAN-3 for Adolescents & Adults Tests for Auditory Processing Disorders. Retrieved from PsychCorp.com

King, P. F. (1954). Psychogenic deafness. J Laryngol Otol, 68(9), 623-635. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/13201864

Koors, P. D., Thacker, L. R., & Coelho, D. H. (2013). ABR in the diagnosis of vestibular schwannomas: a meta-analysis. Am J Otolaryngol, 34(3), 195-204. doi:10.1016/j.amjoto.2012.11.011

Kuk, F., Jackson, A., Keenan, D., & Lau, C. C. (2008). Personal amplification for school-age children with auditory processing disorders. J Am Acad Audiol, 19(6), 465-480. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/19253780

Kurdziel, S., Noffsinger, D., & Olsen, W. (1976). Performance by cortical lesion patients on 40 and 60% time-compressed materials. J Am Audiol Soc, 2(1), 3-7. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/965276

Meyer, S. C. (2021). The Return of the God Hypothesis: Three Scientific Discoveries Revealing the Mind Behind the Universe. United States: HarperCollins Publishers.

Meynell, G. G. (2006). John Locke and the preface to Thomas Sydenham's Observationes medicae. Med Hist, 50(1), 93-110. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/16502873

Middelweerd, M. J., Festen, J. M., & Plomp, R. (1990). Difficulties with speech intelligibility in noise in spite of a normal pure-tone audiogram. Audiology, 29(1), 1-7. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/2310349

Milner, B. (1958). Psychological defects produced by temporal lobe excision. Res Publ Assoc Res Nerv Ment Dis, 36, 244-257. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/13527787

Nilsson, M., Soli, S. D., & Sullivan, J. A. (1994). Development of the Hearing in Noise Test for the measurement of speech reception thresholds in quiet and in noise. J Acoust Soc Am, 95(2), 1085-1099. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/8132902

Papesh, M. A., Fowler, L., Pesa, S. R., & Frederick, M. T. (2023). Functional Hearing Difficulties in Veterans: Retrospective Chart Review of Auditory Processing Assessments in the VA Health Care System. Am J Audiol, 1-18. doi:10.1044/2022_AJA-22-00117

Pryce, H., Metcalfe, C., Hall, A., & Claire, L. S. (2010). Illness perceptions and hearing difficulties in King-Kopetzky syndrome: what determines help seeking? Int J Audiol, 49(7), 473-481. doi:10.3109/14992021003627892

Ransohoff, D. F., & Feinstein, A. R. (1978). Problems of spectrum and bias in evaluating the efficacy of diagnostic tests. N Engl J Med, 299(17), 926-930. doi:10.1056/NEJM197810262991705

Sanchez Longo, L. P., Forster, F. M., & Auth, T. L. (1957). A clinical test for sound localization and its applications. Neurology, 7(9), 655-663. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/13477338

Saunders, G. H., & Haggard, M. P. (1989). The clinical assessment of obscure auditory dysfunction--1. Auditory and psychological factors. Ear Hear, 10(3), 200-208. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/2744258

Saunders, G. H., & Haggard, M. P. (1992). The clinical assessment of "Obscure Auditory Dysfunction" (OAD) 2. Case control analysis of determining factors. Ear Hear, 13(4), 241-254. doi:10.1097/00003446-199208000-00006

Schaette, R., & McAlpine, D. (2011). Tinnitus with a normal audiogram: physiological evidence for hidden hearing loss and computational model. J Neurosci, 31(38), 13452-13457. doi:10.1523/JNEUROSCI.2156-11.2011

Schow, R. L., Dillon, H., & Seikel, J. A. (2021). Comment on Ahmmed (2021): The Search for Evidence-Based Auditory Processing Disorder Diagnostic Criteria. Am J Audiol, 1-3. doi:10.1044/2021_AJA-21-00100

Schow, R. L., Whitaker, M. M., Seikel, J. A., Brockett, J. E., & Domitz Vieira, D. M. (2020). Validity of the Multiple Auditory Processing Assessment-2: A Test of Auditory Processing Disorder. Lang Speech Hear Serv Sch, 51(4), 993-1006. doi:10.1044/2020_LSHSS-20-00001

Sinha, P. S. (1959). The role of the temporal lobe in hearing. (Master of Science). McGill University, Montreal Neurological Institute.

Spahr, A. J., Dorman, M. F., Litvak, L. M., Van Wie, S., Gifford, R. H., Loizou, P. C., . . . Cook, S. (2012). Development and validation of the AzBio sentence lists. Ear Hear, 33(1), 112-117. doi:10.1097/AUD.0b013e31822c2549

Steinberg, J. C., Montgomery, H. C., & Gardner, M. B. (1940). Results of the world's fair hearing tests. J. Acoust. Soc. Amer., 12, 533-562.

Stilma, W., Rijkenberg, S., Feijen, H. M., Maaskant, J. M., & Endeman, H. (2015). Validation of the Dutch version of the critical-care pain observation tool. Nurs Crit Care. doi:10.1111/nicc.12225

Vermiglio, A. J. (2008). The American English hearing in noise test. Int J Audiol, 47(6), 386-387. doi:10.1080/14992020801908251

Vermiglio, A. J. (2014). On the Clinical Entity in Audiology: (Central) Auditory Processing and Speech Recognition in Noise Disorders. J Am Acad Audiol, 25(9), 904-917. doi:10.3766/jaaa.25.9.11

Vermiglio, A. J. (2016). On diagnostic accuracy in audiology: Central site of lesion and central auditory processing disorder studies. Journal of the American Academy of Audiology, 27(02), 141-156.

Vermiglio, A. J. (2018). The Gold Standard and Auditory Processing Disorder. Perspectives of the ASHA Special Interest Groups, SIG 6, 3(Part 1), 6-17. doi:10.1044/persp3.SIG6.6

Vermiglio, A. J., & Fang, X. (2022). The World Health Organization (WHO) hearing impairment guidelines and a speech recognition in noise (SRN) disorder. Int J Audiol, 61(10), 818-825. doi:10.1080/14992027.2021.1976424

Vermiglio, A. J., Leclerc, L., Thornton, M., Osborne, H., Bonilla, E., & Fang, X. (2021). Diagnostic Accuracy of the AzBio Speech Recognition in Noise Test. J Speech Lang Hear Res, 64(8), 3303-3316. doi:10.1044/2021_JSLHR-20-00453

Willeford, J. A. (1976). Central Auditory Function in Children with Learning Disabilities. Audiology and Hearing Education, 2(2), 12-20.

Wilson, W. J., & Arnott, W. (2013). Using different criteria to diagnose (central) auditory processing disorder: how big a difference does it make? J Speech Lang Hear Res, 56(1), 63-70. doi:10.1044/1092-4388(2012/11-

Wilson, W. J. (2019). On the definition of APD and the need for a conceptual model of terminology. Int J Audiol, 1-8. doi:10.1080/14992027.2019.1600057

Wilson, W. J., Heine, C., & Harvey, L. A. (2004). Central auditory processing and central auditory processing disorder: Fundamental questions and considerations. The Australian and New Zealand journal of Audiology, 26(2), 80-93.

Wolff, C. A., Holmes, S. P., Young, B. D., Chen, A. V., Kent, M., Platt, S. R., . . . Levine, J. M. (2012). Magnetic resonance imaging for the differentiation of neoplastic, inflammatory, and cerebrovascular brain disease in dogs. J Vet Intern Med, 26(3), 589-597. doi:10.1111/j.1939-1676.2012.00899.x

Yathiraj, A., & Maggu, A. R. (2014). Validation of the Screening Test for Auditory Processing (STAP) on school-aged children. Int J Pediatr Otorhinolaryngol, 78(3), 479-488. doi:10.1016/j.ijporl.2013.12.025

Zhao, F., & Stephens, D. (2006). Distortion product otoacoustic emissions in patients with King-Kopetzky syndrome. Int J Audiol, 45(1), 34-39. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/16562562

Citation

Vermiglio, A.J. (2024). 20Q: Auditory processing disorders - is there a gold standard? AudiologyOnline, Article 28876. Available at www.audiologyonline.com