PENN STATE - Our brains process foreign-accented speech with better real-time accuracy if we can identify the accent we hear, according to a team of neurolinguists.

"Increased familiarity with an accent leads to better sentence processing," said Janet van Hell, professor of psychology and linguistics and co-director of the Center for Language Science, Penn State. "As you build up experience with foreign-accented speech, you train your acoustic system to better process the accented speech."

A native of the Netherlands, where the majority of people are bilingual in Dutch and English, van Hell noticed that her spoken interactions with people changed somewhat when she moved to central Pennsylvania.

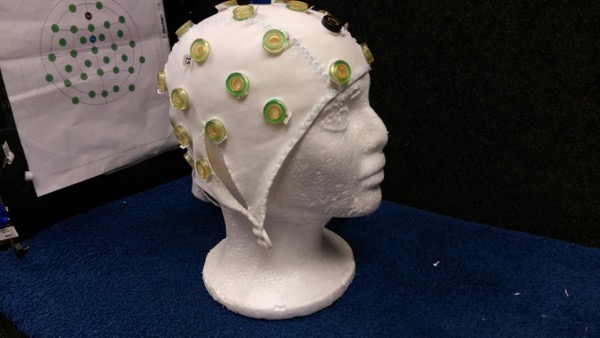

This is a view of the cap used to measure reaction to hearing language errors from native and non-native speakers. Listeners who could identify the non-native speaker's native language did better than those who could not. Image courtesy of Kimberly Cartier.

"My speaker identity changed," said van Hell. "I suddenly had a foreign accent, and I noticed that people were hearing me differently, that my interactions with people had changed because of my foreign accent. And I wanted to know why that is, scientifically."

Van Hell and her colleague Sarah Grey, a former Penn State postdoctoral researcher who is now assistant professor of modern languages and literature at Fordham University, compared how people process foreign-accented and native-accented speech. Their neurocognitive study measured neural signals associated with comprehension while participants listened to spoken sentences.

The researchers recorded study participants' brain activity with an electroencephalogram (EEG) while the participants listened to the sentences. After each sentence, listeners indicated whether they had heard a grammar or vocabulary error, which let the researchers judge overall sentence comprehension.

The listeners heard sentences spoken in both a neutral American-English accent and a Chinese-English accent. Thirty-nine college-aged, monolingual, native English speakers with little exposure to foreign accents participated in this study.

The researchers tested grammar comprehension using gendered personal pronouns, a distinction that spoken Chinese does not make, in sentences like "Thomas was planning to attend the meeting but she missed the bus to school."

They tested vocabulary usage by substituting words far apart in meaning into simple sentences, such as using "cactus" in place of "airplane," in sentences like "Kaitlyn traveled across the ocean in a cactus to attend the conference."

The listeners correctly identified both grammar and vocabulary errors at a similarly high level in American- and Chinese-accented speech, averaging 90 percent accuracy on the behavioral task for both accents.

However, while average accuracy was high, Grey and van Hell found that the listeners' brain responses differed between the two accents. In particular, the frontal negativity and N400 responses -- features of an EEG signal related to speech processing -- were different when processing errors in foreign- and native-accented sentences.

In a more detailed follow-up analysis, Grey and van Hell tried to explain these differences by connecting the EEG scans with a questionnaire asking listeners to identify the accents they heard.

When relating the EEG activity to the questionnaire responses, the researchers found that listeners who correctly identified the accent as Chinese-English showed brain responses to both grammar and vocabulary errors, and that these responses were the same for foreign and native accents.

Conversely, listeners who could not correctly identify the Chinese-English accent responded only to vocabulary errors, not grammar errors, when the errors were made in foreign-accented speech. Native-accented speech produced responses for both types of error. The researchers published their results in the Journal of Neurolinguistics.

Van Hell plans to expand on these results by also studying how our brains process differences in regional accents and dialects in our native language, looking specifically at dialects across Appalachia, and how we process foreign-accented speech while living in a foreign-speaking country.

The results of this study are relevant in the United States, van Hell argues, where many people are second-language speakers or listen to second-language speakers on a daily basis. But exposure to foreign-accented speech can vary widely based on local population density and educational resources.

The world is becoming more global, she adds, and it is time to learn how our brains process foreign-accented speech and to understand the fundamental neurocognitive mechanisms of foreign-accented speech recognition.

"At first you might be surprised or startled by foreign-accented speech," van Hell said, "but your neurocognitive system is able to adjust quickly with just a little practice, the same as identifying speech in a loud room. Our brains are much more flexible than we give them credit for."

The National Science Foundation funded this research.

Source: https://www.eurekalert.org/pub_releases/2017-04/ps-rfa042017.php