Mouse research could lead to better treatments for hearing loss.

Researchers at Johns Hopkins have mapped the sound-processing part of the mouse brain in a way that keeps both the proverbial forest and the trees in view. Their imaging technique allows zooming in and out on views of brain activity within mice, and it enabled the team to watch brain cells light up as mice "called" to each other. The results, which represent a step toward better understanding how our own brains process language, appear online July 31 in the journal Neuron.

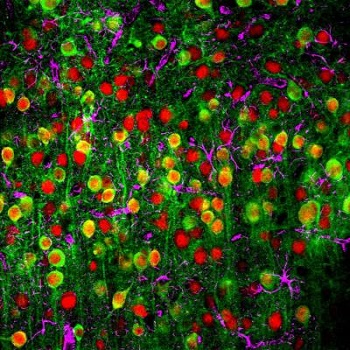

This is a two-photon microscopy image showing a calcium sensor (green), the nuclei of neurons (red) and supporting cells called astrocytes (magenta). Photo courtesy of John Issa/Johns Hopkins Medicine

In the past, researchers often studied sound processing in various animal brains by poking tiny electrodes into the auditory cortex, the part of the brain that processes sound. They then played tones and observed the response of nearby neurons, laboriously repeating the process over a gridlike pattern to figure out where the active neurons were. The neurons seemed to be laid out in neatly organized bands, each responding to a different tone. More recently, a technique called two-photon microscopy has allowed researchers to focus in on minute slices of the live mouse brain, observing activity in unprecedented detail. This newer approach has suggested that the well-manicured arrangement of bands might be an illusion. But, says David Yue, M.D., Ph.D., a professor of biomedical engineering and neuroscience at the Johns Hopkins University School of Medicine, "You could lose your way within the zoomed-in views afforded by two-photon microscopy and not know exactly where you are in the brain." Yue led the study along with Eric Young, Ph.D., also a professor of biomedical engineering and a researcher in Johns Hopkins' Institute for Basic Biomedical Sciences.

To get the bigger picture, John Issa, a graduate student in Yue's lab, used a mouse genetically engineered to produce a molecule that glows green in the presence of calcium. Since calcium levels rise in neurons when they become active, neurons in the mouse's auditory cortex glow green when activated by various sounds. Issa used a two-photon microscope to peer into the brains of live mice as they listened to sounds and saw which neurons lit up in response, piecing together a global map of a given mouse's auditory cortex. "With these mice, we were able to both monitor the activity of individual populations of neurons and zoom out to see how those populations fit into a larger organizational picture," he says.

With these advances, Issa and the rest of the research team were able to see the tidy tone bands identified in earlier electrode studies. In addition, the new imaging platform quickly revealed more sophisticated properties of the auditory cortex, particularly as mice listened to the chirps they use to communicate with each other. "Understanding how sound representation is organized in the brain is ultimately very important for better treating hearing deficits," Yue says. "We hope that mouse experiments like this can provide a basis for figuring out how our own brains process language and, eventually, how to help people with cochlear implants and similar interventions hear better."

Yue notes that the same approach could also be used to understand other parts of the brain as they react to outside stimuli, such as the visual cortex and the parts of the brain responsible for processing stimuli from limbs.

This work was supported by the Robert J. Kleberg, Jr. and Helen C. Kleberg Foundation, the National Institute of Neurological Disorders and Stroke (grant number R01NS073874), the National Institutes of Health's Medical Scientist Training Program, and the National Institute on Deafness and Other Communication Disorders (grant number T32DC000023).

Source: https://www.eurekalert.org/pub_releases/2014-07/jhm-nma072914.php