Machine Learning in Hearing Aids: Signia’s Approach to Improving the Wearer Experience

AudiologyOnline: Machine learning and artificial intelligence are terms you hear a lot these days to describe new hearing aid features. What gives?

Brian Taylor: Machine learning is a popular buzzword in the hearing aid industry these days. The term conjures images of futuristic technology, oozing with revelatory possibility. After all, who doesn’t want the drudgery of ordinary tasks, like cleaning your house, buying groceries (or making routine hearing aid adjustments), replaced by intelligent androids or really smart algorithms? Despite the recent marketing hype surrounding the terms, machine learning and artificial intelligence have been used in hearing devices for several years.

Machine learning, which is commonly known by data scientists to be the largest subfield of artificial intelligence, is perhaps best described as techniques for automatically detecting and using patterns in data (Bianco et al., 2019). Given that an individual’s soundscape is often a constantly changing pattern, and there is an almost infinite number of adjustments that can be made to the hearing aid to optimize sound quality and speech intelligibility across the wearer’s listening situations, machine learning has many possible applications in hearing aids.

AudiologyOnline: How has Signia applied machine learning in their devices?

Brian Taylor: Signia has been a leader in applying machine learning principles to hearing aids. As early as 2006, the Signia Centra was programmed to learn its wearer’s gain preferences. In the Centra device, gain still could be changed manually by the wearer, but the hearing aid, using basic machine learning principles, could automatically adjust gain once it had been trained by the wearer. With the first-of-its-kind Centra product, it was possible for wearers to train gain, and to conduct this training for different programs/memories—this was referred to as DataLearning.

The ability for the wearer to train hearing aid gain reduced the likelihood the device would need manual adjustment, which created a more seamless listening experience. While the idea was simple, the Centra provided important insights into how machine learning could benefit hearing aid manufacturers and wearers alike. This basic application of machine learning paved the way for more complex uses. For example, the Siemens Pure hearing aid, introduced in 2008, had a more advanced form of machine learning dubbed SoundLearning: level-dependent gain learning in four independent bands, which for the first time allowed additional learning for frequency shaping.
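To make the idea of level-dependent gain learning in four bands more concrete, here is a minimal sketch. It is not Signia's algorithm: the three input-level bins, the learning rate, the exponential-averaging update, and all names (`GainLearner`, `observe`, `gain`) are assumptions chosen only for illustration.

```python
from dataclasses import dataclass, field

BANDS = 4                               # four independent bands, as described above
LEVELS = ("soft", "average", "loud")    # assumed input-level bins


@dataclass
class GainLearner:
    """Hypothetical trainable-gain model: one learned offset per band and level."""
    rate: float = 0.2                   # assumed learning rate
    offsets: dict = field(default_factory=lambda: {
        (band, level): 0.0 for band in range(BANDS) for level in LEVELS})

    def observe(self, band, level, user_offset_db):
        """Move the learned offset part of the way toward the wearer's manual adjustment."""
        key = (band, level)
        self.offsets[key] += self.rate * (user_offset_db - self.offsets[key])

    def gain(self, band, level, prescribed_db):
        """Prescribed gain plus whatever has been learned for this band and level."""
        return prescribed_db + self.offsets[(band, level)]


# A wearer repeatedly asks for about 6 dB more gain for soft sounds in band 0.
learner = GainLearner()
for _ in range(30):
    learner.observe(0, "soft", 6.0)
```

After enough repetitions the learned offset for soft inputs in band 0 approaches 6 dB, while loud inputs and the other bands stay at the prescribed gain. That independence across levels and bands is the point of the sketch.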

One of the early studies (Palmer, 2012) with this innovative machine learning application involved the fitting of over 30 individuals, all new hearing aid users, wearing hearing aids programmed to the NAL target. A clinically relevant finding was that after 30-60 days of hearing aid learning, the average use-gain for average inputs for the group was essentially identical to what originally was programmed based on the NAL prescription. However, in blinded ratings, two-thirds of the participants favored their “learned” response. Given that the NAL is a fitting for “average,” we would expect roughly equal numbers of participants to have been fitted above and below their preferred listening levels. If the hearing aids learned each person’s preferred settings (more or less gain), it’s probable that the patients would prefer this learned fitting, yet the average gain for the group would stay the same.
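The reasoning above can be illustrated with a few lines of arithmetic. The numbers below are invented for illustration and are not data from Palmer (2012): a hypothetical prescribed gain of 20 dB and six wearers whose preferences are spread symmetrically around it.

```python
from statistics import mean

prescribed_db = 20.0                                      # hypothetical prescribed gain
preferred_offsets_db = [-4.0, -2.0, -1.0, 1.0, 2.0, 4.0]  # invented, symmetric around zero

# Each wearer trains the hearing aid to their own preferred gain.
learned_db = [prescribed_db + offset for offset in preferred_offsets_db]

group_mean_db = mean(learned_db)
individually_changed = sum(1 for gain in learned_db if gain != prescribed_db)

print(f"group mean after learning: {group_mean_db} dB")
print(f"wearers whose gain changed: {individually_changed} of {len(learned_db)}")
```

Every individual ends up with a different setting from the prescription, yet the group mean is unchanged. That is consistent with participants preferring the learned response even though the average gain matched the original target.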

AudiologyOnline: Okay, I understand that Signia has been using machine learning for more than a decade in their devices. Tell us more about some of the earlier implementations of machine learning in their devices.

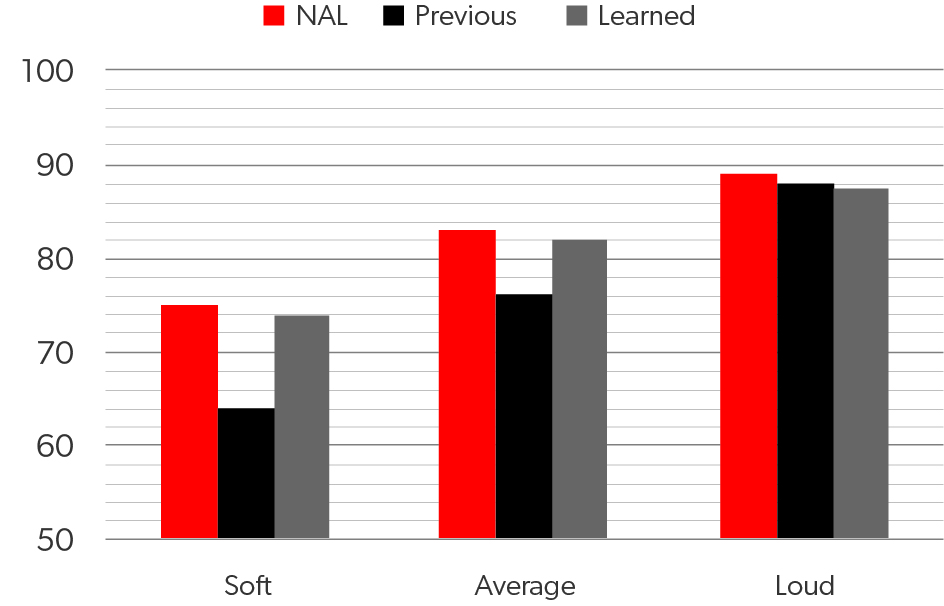

Brian Taylor: Let me share a second study (Mueller & Hornsby, 2014) using SoundLearning that clearly demonstrates how early iterations of machine learning not only improved patient satisfaction but also led to potentially life-altering improvements. In this research, the machine-learning hearing aids were fitted to individuals who were experienced wearers, but who had been using hearing aids fitted considerably below NAL targets (e.g., by ~10 dB for soft speech and ~5 dB for average speech). Following a few weeks of learning, on average, the individuals trained the hearing aids to within 2-3 dB of prescriptive gain for soft, and within 1 dB of prescriptive gain for average, again illustrating the benefits of input-level-specific learning (see “before and after” average output findings in Figure 1). At the conclusion of the learning, nearly half of the participants were using at least 10 dB more gain for soft inputs than they had been using prior to the use of the new hearing aids. These results suggest that when machine learning is applied to training gain for soft, average, and loud sounds, the wearer can, with minimum involvement from the clinician, optimize audibility on their own.

Figure 1. Average output (2000-4000 Hz) for the NAL-NL2 prescription, previous use, and following machine learning, for soft, average, and loud inputs. Note that following learning, average output matches that of the desired NAL-NL2 fitting.

AudiologyOnline: Those studies are about ten years old. What can you tell us about more recent iterations of Signia’s approach to machine learning and studies supporting its efficacy?

Brian Taylor: I realize these studies were conducted several years ago, but they demonstrate the virtuous cycle of research and how clinical studies build on each other to create better, more effective features that benefit wearers. So, please bear with me as I describe one additional study. In 2010, Signia’s machine learning advancements led to linking compression learning (SoundLearning) with automatic signal classification. That is, as a given signal was classified into one of six distinct categories, the use gain or frequency-specific gain adjustments made by the patient automatically generated learning within that specific category. This learning would then continue automatically whenever that category was detected, and all six categories would be trained accordingly. This meant that the wearer no longer had to change programs to successfully use machine-learned settings. Keidser and Alamudi (2013) showed that after a few weeks of hearing aid use, the learning was individualized for each of the six conditions, illustrating differences in preferences for such diverse situations as speech in background noise, listening to music, or communication in a car.
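As a rough illustration of linking learning to signal classification, the sketch below keeps a separate learned offset for each of six sound classes and updates only the class that the classifier currently reports. It is a simplified assumption, not the actual implementation: only three class names come from the interview, the other three are placeholders, and the running-mean update and the names `ClassSpecificLearner`, `train`, and `gain` are hypothetical.

```python
# Three class names are taken from the interview; the remaining three are placeholders.
CLASSES = ("speech_in_noise", "music", "car", "class_4", "class_5", "class_6")


class ClassSpecificLearner:
    """Hypothetical per-class learner: one running-mean gain offset per sound class."""

    def __init__(self, classes=CLASSES):
        self.mean_offset = {c: 0.0 for c in classes}
        self.count = {c: 0 for c in classes}

    def train(self, detected_class, adjustment_db):
        """Fold a wearer adjustment into the running mean for the detected class only."""
        self.count[detected_class] += 1
        n = self.count[detected_class]
        self.mean_offset[detected_class] += (adjustment_db - self.mean_offset[detected_class]) / n

    def gain(self, detected_class, base_gain_db):
        """Apply the offset learned for whichever class is currently detected."""
        return base_gain_db + self.mean_offset[detected_class]


learner = ClassSpecificLearner()
# Adjustments arrive tagged with the class the classifier detected at that moment.
for detected, adjustment in [("music", 3.0), ("car", -2.0), ("music", 5.0)]:
    learner.train(detected, adjustment)
```

Because the classifier's output selects which offset is trained and which is applied, the wearer never has to switch programs: the settings learned for music are used whenever music is detected, and the car settings whenever the car class is detected.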

AudiologyOnline: Thanks for providing us with some context around the implementation of machine learning in Signia devices. What can you tell us about the latest advances in machine learning and how they are employed in Signia’s latest products?

Brian Taylor: More recently, Signia has applied machine learning in other meaningful ways, contributing to a better overall wearer experience that drives more favorable outcomes. Here to unpack how Signia has implemented innovative features that use machine learning is Erik Høydal. Erik is Senior Concept Manager for Digital Solutions for WS Audiology. He is part of the team that has created, validated, and commercialized machine learning features found in the Signia AX platform.

AudiologyOnline: Erik, welcome to AudiologyOnline. Since you are new around here, could you tell us about your background and what you do at Signia?

Erik Høydal: Great to be here. With a background in clinical audiology and research, I joined Signia almost a decade ago. Having worked in scientific research, on platform launches, and as Lead Audiologist for Signia, I have always seen innovation as a common thread in my work. How can Signia leverage new technologies to improve and simplify the lives of people? That has been a central question for our team for many years. The introduction of new machine learning methods, like Deep Neural Networks, opens up whole new ways to think about hearing aid fittings and personalization, including the interaction between the professional and the wearer. I have recently moved to a role as Concept Manager in Digital Solutions to focus specifically on using these technologies to bring audiology and hearing devices to a new level. We have only seen the beginning of the potential in machine learning, so it is an incredibly exciting domain to work in these days.

AudiologyOnline: Let me start with a general question. How does Signia think about machine learning?

Erik Høydal: At Signia, we believe in using the latest technologies, including machine learning and artificial intelligence, to enhance the overall wearer experience and drive better outcomes for people with hearing difficulties. We see machine learning as a way to improve people’s hearing through intelligent automation and personalization.

Signia is committed to driving innovation in audiology by embracing the latest, most relevant technologies. Our focus is always on improving lives, not following trends. Deep Neural Networks (DNNs) have revolutionized many industries, including healthcare, with their ability to recognize patterns, make decisions, and, in the case of live DNNs, continuously improve as they learn from new data. In dermatology, for example, DNNs enhance screening accuracy for skin lesions, including malignancies. Signia introduced the world's first live DNN-powered hearing aid, through the Signia Assistant, in December 2019, transforming individual hearing optimization and providing professionals with unprecedented data-based insights. We will continue to leverage machine learning for intelligent automation and personalization, simplifying the lives of hearing aid wearers and enhancing the work of professionals in the field.

AudiologyOnline: In Brian’s introduction to the topic, he provided some historical context on Signia’s implementation of machine learning for the past 15-16 years. Brian reminded us that in 2020, Signia took machine learning one step further and introduced the Signia Assistant. Please describe how this feature uses machine learning and what makes Signia Assistant unique.

Erik Høydal: The Signia Assistant is an artificial intelligence-powered personal assistant that is designed to make the life of hearing aid wearers simpler and more convenient. It also provides much more targeted support and sound for each individual than was previously possible. This is done by using the latest application of machine learning, in the form of a live deep neural network.

A deep neural network is a type of artificial neural network with multiple layers that can learn and represent complex relationships between input and output. For Signia, this meant we could feed the Signia Assistant with all the audiological data we had in order to best solve a given problem for the individual wearer. But instead of dictating what works best ourselves in the research lab, we created a ranked list of different solutions that the Assistant could choose. The Assistant would favor our top picks, but also constantly update or improve them when new data was entered into the system. This means that the Assistant can identify which solutions perform best and re-arrange the ranked list based on data from people all over the world, as well as the individual wearer. Every interaction leads to an improvement of the system, while the Assistant also learns more about the preferences of the wearer.
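The ranked-list idea can be sketched in a few lines. To be clear, this is a plain success-rate tally and not a deep neural network, and it is not how Signia Assistant is implemented: the solution names, the starting pseudo-counts that stand in for the lab's "top picks", and the functions `rank` and `record_feedback` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Solution:
    """Hypothetical candidate adjustment with a running keep-rate."""
    name: str
    kept: float    # times wearers kept the solution (starts from expert pseudo-counts)
    shown: float   # times the solution was offered (starts from expert pseudo-counts)

    @property
    def score(self):
        return self.kept / self.shown


def rank(solutions):
    """Order solutions from highest to lowest keep-rate."""
    return sorted(solutions, key=lambda s: s.score, reverse=True)


def record_feedback(solution, was_kept):
    """Update a solution's tally after a wearer keeps or reverts it."""
    solution.shown += 1
    if was_kept:
        solution.kept += 1


# Starting order reflects the assumed expert ranking: a, then b, then c.
a = Solution("solution_a", kept=7, shown=10)
b = Solution("solution_b", kept=6, shown=10)
c = Solution("solution_c", kept=5, shown=10)

# Wearers revert solution_a three times and keep solution_b three times.
for _ in range(3):
    record_feedback(a, was_kept=False)
    record_feedback(b, was_kept=True)

print([s.name for s in rank([a, b, c])])
```

The expert ordering acts as a starting point that incoming feedback can overturn, which mirrors the description above of favoring the top picks while re-arranging the list as new data arrives. A real system would also need to weigh the individual wearer's history against data from all wearers, which this sketch leaves out.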

AudiologyOnline: That sounds like a good explanation for how Signia Assistant functions in theory. Can you give us some examples of how Signia Assistant works in real life?

Erik Høydal: Absolutely. Let me walk you through a scenario. Imagine Linda has just received a new pair of hearing aids and is attending a gathering with old friends a few days later. But when she arrives, she realizes the background music and chatter are too much for her to follow the conversation.

Linda opens the Signia app and clicks on the Assistant button. The Assistant asks Linda how it can help and she specifies that she is having trouble hearing what people are saying.

The Assistant analyzes the environment and recognizes that Linda is in a noisy place with a lot of background noise and music. It then determines the best solution based on previous experiences of what has worked well for others in similar situations. In just a few seconds, the Assistant changes the settings on Linda's hearing aids to improve her ability to hear speech.

The Assistant then asks Linda if she wants to keep the new settings, which she does. Linda is able to enjoy her evening with friends without missing out on anything, all thanks to the Signia Assistant, which solved her problem in just 30 seconds.

The second part, which was instrumental in why we developed Signia Assistant, is that all the changes made by the Assistant are displayed to the clinician when the wearer returns for the follow-up. This provides a data-based insight that serves as a solid foundation for further adjustments needed by the wearer. Not everyone can accurately describe sound or remember how their hearing was working last week. The Assistant removes the guesswork and replaces it with data-driven insights. It is the tool I dreamt of when I worked in the clinic, and I am very proud to see it live and working as well as we hoped for.

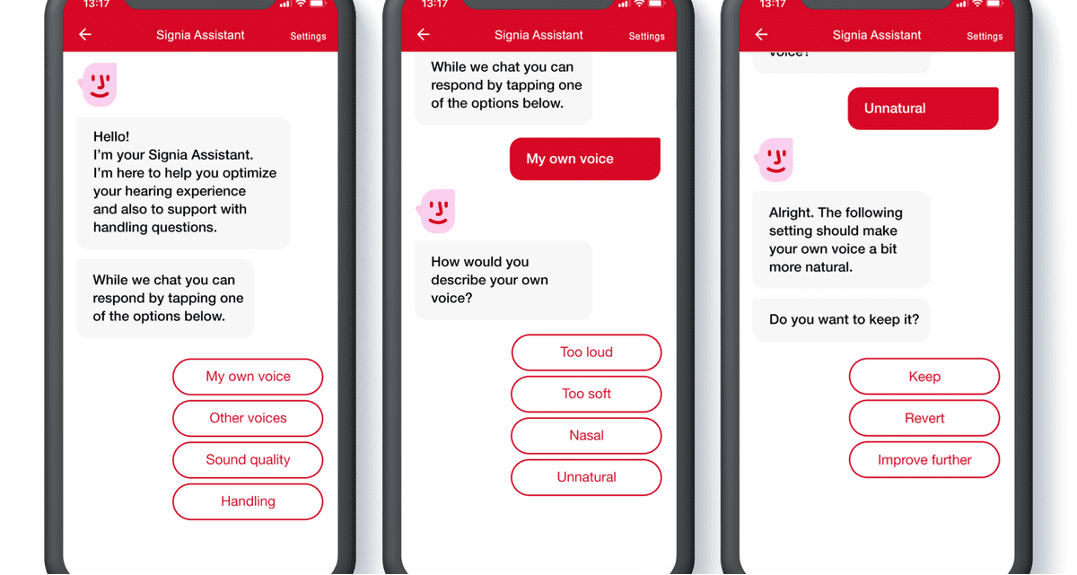

Figure 2 shows an example of the Signia Assistant interface available to the wearer on their smartphone via the Signia app.

Figure 2. An example of the Signia Assistant interface. The wearer specifies a problem, and a solution is presented, which the wearer can keep, revert, or further improve.

AudiologyOnline: Please share with us some of the studies you conducted to validate the effectiveness of Signia Assistant.

Erik Høydal: When you launch something as transformative as Signia Assistant, you of course want to ensure that it actually does what it is intended to do. Therefore, we conducted several studies with prototypes of the Assistant before it was launched. These studies evaluated the efficiency, usability, and perception of the Assistant. On efficiency, we found that people preferred the new sound or solution they received from the Assistant in about 70-75% of the cases. This result was replicated in several locations around the world. Both quantitative and qualitative research gave the same results and feedback. For usability, we made a lot of changes before launch, repeatedly reducing complexity to ensure that anyone who has ever sent a text message would be able to use the Assistant. By launch, our results indicated that we had achieved this. Based on usability data, I can say with confidence that the Signia Assistant is not a tool for the tech-savvy only; it is truly designed for everyone.

The final study I will mention evaluated the wearer's perception of the Assistant. One of the more interesting findings was the positive impact of wearers knowing they had support 24/7, both for handling issues, where the Assistant shows a video that solves the problem, and for social events where an adjustment might be beneficial. In a US study, 93% reported that access to the Signia Assistant would make them more confident that they could rely on their hearing aids in more situations. We know that many wearers can be anxious and hesitant entering social events, and if we can help them gain confidence in these important listening situations, it can improve their quality of life.

A last point I would like to highlight is a worry we often face on our team when launching a new product or feature: “Wearers will adjust their devices until the sound is comfortable and they are unconcerned about restoring audibility (hearing new sounds and getting acclimated to them).” First, we designed the Assistant not simply to turn down gain for every problem but to use alternative ways of altering sound quality instead. Second, we evaluated this repeatedly by conducting speech intelligibility tests before and after weeks of active use of the Assistant. Our analysis indicates that wearers are not just interested in turning down the gain of their devices so that it sounds comfortable; rather, they desire better audibility and sound quality in everyday listening situations. Further, we did not see a trend in our data where wearers reduced high-frequency gain, a trend many of us expected. We also found no decline in speech understanding after use of the Assistant, something you might expect to occur if wearers were turning down gain for high-frequency sounds. On the contrary, when asked, 80% of wearers reported they understood speech better after a period of prolonged use.

AudiologyOnline: It’s been more than two years since Signia Assistant was launched in 2020. What improvements to it have been made?

Erik Høydal: The advantage of using an advanced machine learning system with a deep neural network is that it gets smarter every day as wearers use it. That is, the more data that enters the machine learning system (the Assistant), the better the system can predict what the individual wearer might like. We can monitor the general satisfaction with each solution and the success rate for each problem stated.

We are seeing evidence that the system is getting smarter as more data is entered into the Assistant. For example, the overall success rate of the Assistant is improving. When we started collecting data in 2020, overall satisfaction with it was about 72%, but within the past year, the satisfaction rating of the Assistant has improved beyond 80%. That is a substantial improvement, and we believe it will only continue to improve as more wearers use it, and more fitting data is put into the Assistant.

We have also made great improvements to the handling part of the Assistant by adding new and improved videos that help people with specific handling topics for their devices. Those videos reinforce the counseling and orientation received from the hearing care professional and serve as an added layer of educational content that wearers can access at their convenience.

AudiologyOnline: Based on what you’ve shared so far, Signia Assistant sounds like a really unique feature. In fact, Brian shared with us offline that he believes that no other hearing aid manufacturer implements machine learning in quite this way. He also said he didn’t think Signia Assistant is as popular as it should be among clinicians. Perhaps this is a downside to being so unique – hearing care professionals do not have other similar experiences to draw from. What are two or three things that clinicians need to know about Signia Assistant that will improve its uptake in the field?

Erik Høydal: I think it just takes a while before people realize the power of such a tool. Honestly, we see great usage numbers in some parts of the world where the Assistant is prominently marketed as part of a broader package of professional services with 24/7 support. I would encourage hearing care professionals to think of Signia Assistant as part of a comprehensive 24/7 service package that could be included with the devices.

When it comes to how the Assistant actually helps improve a fitting, think of how new wearers tend to describe their trial period with hearing aids. Their input is often vague and typically limited to a few listening situations. This limited verbal feedback from the wearer is often the only basis hearing care professionals have when they make adjustments to the hearing aid.

The Assistant removes much of this guesswork. It provides a data-based foundation for clinicians to make adjustment decisions. Unless you plan to spend the weekend with the wearer yourself, the insights provided by the Assistant go beyond anything hearing care professionals have previously had available when adjusting hearing devices. Signia Assistant is simply a tool to make better-informed hearing aid adjustment decisions for each wearer.

I want to remind readers of how simple the Signia Assistant is to use. Although the Assistant is incredibly complex, our engineers deliberately made it easy to use. If you have a person in your clinic who can text and open an app, they will have no problem using the Assistant. In fact, I would argue that once many hearing aid wearers are taught how to use Signia Assistant, they feel more empowered in their ability to use their hearing aids effectively. We all know that an empowered wearer tends to experience higher levels of satisfaction and benefit.

Finally, I know it’s a cliché, but I want to remind hearing care professionals that no two hearing aid wearers are alike. This is where Signia Assistant excels, by considering individual differences among wearers with similar audiograms.

For instance, two people with identical hearing loss may have very different preferences for aided sound or amount of directionality. It is impossible to know exactly how much support a wearer needs or how sensitive they are to sounds in their daily life purely based on the audiogram. Hearing aids are typically fitted the same way for these individuals due to their matching audiograms. The Assistant allows us to finally gain insights into these audiological differences and use them for further decision making. Brian also wishes to add his thoughts on the matter.

Brian Taylor: Yes, Erik, your comments about two wearers with similar audiograms and very different aided preferences reminded me of something that machine learning in general, and live deep neural networks specifically, are quite adept at addressing: reducing the possibility of the clinician’s confirmation bias creeping into the fine-tuning process. After all, it is human nature to rely on past experience to inform current decision making in the clinic. We call that confirmation bias and it can lead to erroneous decisions when it comes to fine tuning devices. For example, we know from survey data (Anderson, Arehart & Souza, 2018) there is considerable variability in how audiologists fine tune hearing aids based on wearers’ description of the problem.

Unlike a well-intended clinician who might unknowingly be prone to confirmation bias, Signia Assistant can detect these differences in listening preferences between individuals with similar auditory profiles. Use of Signia Assistant can, for example, lead to two vastly different settings for compression or beamforming – preferred settings that would be impossible to detect with verbal feedback from the wearer. In that sense, you can think of the machine learning inside the Assistant as a co-pilot that promotes faster, more accurate fine tuning, freeing up time for the clinician to focus on patient-centered coaching and communication. Signia Assistant saves time by helping you and the wearer get the hearing aids fine-tuned to their preferences quickly and accurately. Machine learning that uses a live DNN, like what is found in Signia Assistant, complements the human touch provided by the hearing care professional.

AudiologyOnline: Erik and Brian, thanks so much for sharing your insights and expertise on this topic. If someone had a specific question about Signia’s application of machine learning or wanted to learn more about it, how can they contact you?

Erik Høydal: I think it is safe to say that Signia Assistant is both unique and effective. To support this statement, I would encourage them to visit the Signia professional library. A simple Google search will take you there. Here are two studies archived there that I would urge people to read if they want to learn more about Signia’s approach to machine learning: 1. https://hearingreview.com/hearing-products/testing-equipment/fitting-equipment/assistant 2. https://www.signia-library.com/wp-content/uploads/sites/137/2020/05/Signia-Assistant_Ensuring-consistent-support-and-individualized-care-through-the-Signia-Assistant.pdf

References

Anderson, M. C., Arehart, K. H., & Souza, P. E. (2018). Survey of Current Practice in the Fitting and Fine-Tuning of Common Signal-Processing Features in Hearing Aids for Adults. Journal of the American Academy of Audiology, 29(2), 118–124.

Bianco, M. J., Gerstoft, P., Traer, J., Ozanich, E., Roch, M. A., Gannot, S., & Deledalle, C. A. (2019). Machine learning in acoustics: Theory and applications. The Journal of the Acoustical Society of America, 146(5), 3590.

Keidser, G., & Alamudi, K. (2013). Real-life efficacy and reliability of training a hearing aid. Ear and Hearing, 34(5), 619–629.

Mueller, H. G., & Hornsby, B. W. Y. (2014, July). Trainable hearing aids: the influence of previous use-gain. AudiologyOnline, Article 12764. Retrieved from: https://www.audiologyonline.com

Palmer, C. V. (2012, August). Implementing a gain learning feature. AudiologyOnline, Article 11244. Retrieved from: https://www.audiologyonline.com