Question

I recently started using a new computer-based audiometer system which has word recognition files as part of the software. I’ve heard that some form of file compression might be used when these files are transferred from the original CD recording. Is it okay to use these files for my NU-6 word recognition testing, or should I continue to use the external CD-quality material I’ve always been using?

Answer

The short answer is “yes, we’re pretty certain that it’s okay to use the files from your new system,” but, for a couple of reasons, we’ll go into a bit more detail.

First, you’re asking a very common and relevant question. We know that different recordings of the same NU-6 word list can result in highly different word recognition scores (see Hornsby & Mueller, 2013).

For example, back in the 1980s, Fred Bess (1983) summarized some performance/intensity functions from recorded NU-6 materials. These were commercially available recordings, but they were recorded by different people. For one of the NU-6 recordings, when the test was delivered at 8 dB SL, the average score was 75% correct. But for a different recording of the very same NU-6 list, presented at the same 8 dB SL, the average score was only 15%, a difference of 60 percentage points between talkers saying the same words. If simply changing the talker will alter the results to this extent, then it certainly is reasonable to question whether file compression of the NU-6 material also will impact the findings. As we have said before (Mueller & Hornsby, 2020):

“The words are not the test, the test is the test.”

The question also extends beyond computer-based audiometer systems. As you know, there are more and more internet speech tests available, and for convenience, some audiologists might use their own portable system, where they have copied (and likely compressed) a standard CD recording for speech testing.

The second reason why our answer here will be a little long-winded is that we recently published some research on this very topic, along with our colleagues Todd Ricketts, Jodi Rokuson and Mary Dietrich (Mueller et al., 2022). The initial research and data collection were Dr. Rokuson’s AuD Capstone project. Please read the entire article at AJA. Here, we'll highlight a few key points.

Participants were 86 adults ranging in age from 19 to 93 years (mean = 65.5 years) who were being seen for routine hearing evaluation appointments at the Vanderbilt Bill Wilkerson Center Audiology Clinic, Nashville, TN. There was no exclusion for type of pathology, audiometric configuration, or degree of hearing loss, unless it was not possible to measure a speech recognition threshold. Patients who had severe cognitive disabilities (based on clinician judgment) were not included. Their hearing loss (pure-tone air conduction average of 500, 1000 and 2000 Hz) ranged from 5 to 102 dB.

The Auditec compact disc (CD) recording of the NU-6 Ordered by Difficulty, Version II words was used as the “uncompressed” (or original) test material. We used the iTunes AAC encoder to compress these original files at a fixed bit rate of 64 kbps, which was among the lowest used for audio streaming when the study was conducted. We believed that using this low bit rate would give us a better chance of seeing any negative effects of file compression that might be present. Our impression is that software algorithms have probably improved, not gotten worse, since we collected these data.
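To give a sense of how aggressive a 64 kbps bit rate is, the short Python sketch below compares it with the raw bit rate of CD audio. It assumes the standard CD audio format (44.1 kHz sampling, 16 bits per sample, two channels); those parameters and the helper names are illustrative assumptions and were not reported in the study.

```python
# Assumption: standard CD audio parameters (not stated in the study itself).
CD_SAMPLE_RATE_HZ = 44_100
CD_BITS_PER_SAMPLE = 16
CD_CHANNELS = 2

AAC_BITRATE_KBPS = 64  # fixed bit rate reported for the compressed files


def cd_bitrate_kbps():
    """Raw bit rate of uncompressed CD audio in kilobits per second."""
    return CD_SAMPLE_RATE_HZ * CD_BITS_PER_SAMPLE * CD_CHANNELS / 1000


def kilobytes_per_minute(bitrate_kbps):
    """Approximate storage needed for one minute of audio at a given bit rate."""
    return bitrate_kbps * 60 / 8


ratio = cd_bitrate_kbps() / AAC_BITRATE_KBPS

print(f"CD audio bit rate: {cd_bitrate_kbps():.1f} kbps")
print(f"Compressed bit rate: {AAC_BITRATE_KBPS} kbps")
print(f"Approximate reduction: {ratio:.1f} to 1")
print(f"Per minute: {kilobytes_per_minute(cd_bitrate_kbps()):.0f} kB "
      f"vs {kilobytes_per_minute(AAC_BITRATE_KBPS):.0f} kB")
```

Under these assumptions the compressed files carry roughly one twenty-second of the data of the original recording, which is why this bit rate is a fairly demanding test case.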

The compressed and uncompressed versions of the first 25 words from the NU-6 Lists 1-4 were organized into two counterbalanced playlists and imaged back onto CDs for presentation. Four different clinicians conducted word recognition testing, using these stimuli, in standard audiometric test suites as part of their routine audiologic evaluations. Speech presentation levels varied from 70-105 dB HL with the goal of optimizing audibility without exceeding the patient’s loudness discomfort level. The same presentation level was used for the compressed and uncompressed stimuli presented to the same ear. All but 2 participants were tested bilaterally, resulting in 170 compressed and uncompressed scores (scored in percent correct).

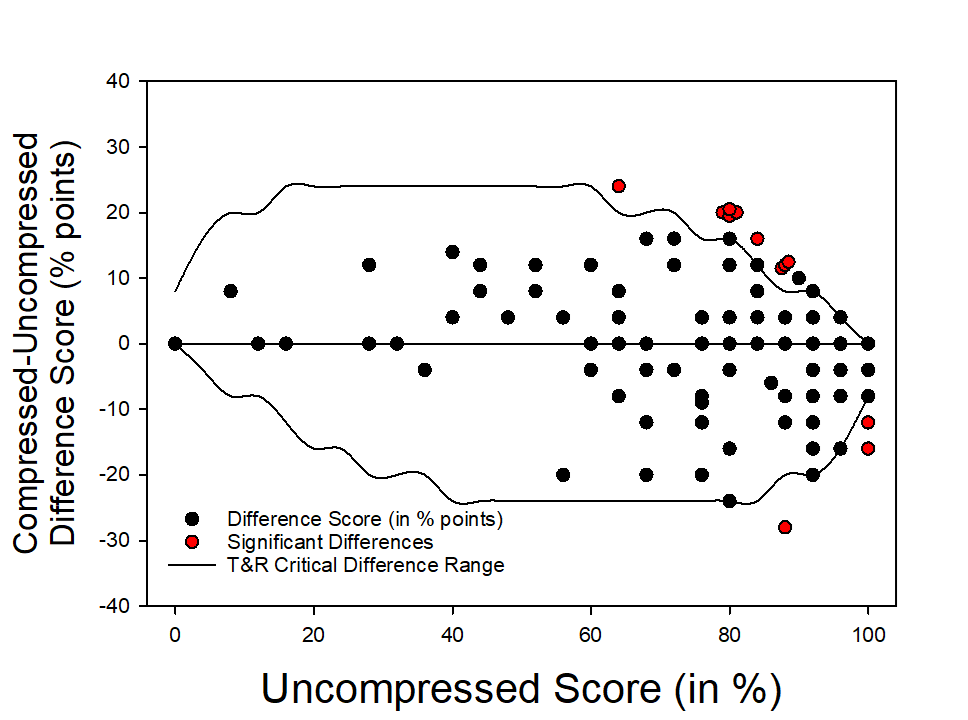

An initial analysis of our results revealed median scores from our sample were exactly the same in the uncompressed and compressed conditions (Median = 88%). While this strongly implies that the effects of file compression were small, from a clinical perspective it is important to know whether an individual’s score (not the mean or median) is different between conditions, and whether that difference has clinical significance. To assess the clinical relevance of individual differences across conditions we used Thornton and Raffin’s (1978) 95% critical difference ranges (for a 25-item word list) to identify individuals with scores that were significantly different in the compressed and uncompressed conditions. Of the 170 comparisons, significant differences were observed in only 7.1% of cases (N=12), approximating the 5% of expected cases occurring simply due to chance (Thornton and Raffin, 1978). All difference scores are displayed in Figure 1. The red circles show the individual cases that exceeded the 25-item critical difference range for a given score.

Figure 1. Difference scores between the compressed and uncompressed conditions (above the zero line, the compressed score was better; below the zero line, the uncompressed score was better). The reference (X-axis) is the uncompressed score. The upper and lower horizontal lines represent the Thornton and Raffin (1978) 95% critical difference range around a given uncompressed (X-axis) score. The red circles are the 12 cases that fell outside of these boundaries.
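For readers who want to check the arithmetic behind these numbers, here is a minimal Python sketch. It recomputes the number of comparisons, the observed rate of significant differences, and the number expected by chance at the 5% level. It also shows the standard deviation of a 25-word score under a simple binomial model, which is the idea behind critical difference ranges. This is an illustration only: it does not reproduce the published Thornton and Raffin tables, and the variable and function names are hypothetical.

```python
import math

N_PARTICIPANTS = 86
N_UNILATERAL = 2     # "all but 2" participants were tested bilaterally
N_SIGNIFICANT = 12   # comparisons outside the 95% critical difference range
N_WORDS = 25         # items per word list

# Two ears for bilateral participants, one ear for the other two.
n_comparisons = (N_PARTICIPANTS - N_UNILATERAL) * 2 + N_UNILATERAL

observed_rate = N_SIGNIFICANT / n_comparisons
expected_by_chance = 0.05 * n_comparisons


def binomial_sd_points(true_score_pct, n_words=N_WORDS):
    """SD (in percentage points) of a word score under a binomial model.

    Assumes each word is an independent trial with the same probability
    of being repeated correctly, which is a simplification.
    """
    p = true_score_pct / 100
    return 100 * math.sqrt(p * (1 - p) / n_words)


print(f"Comparisons: {n_comparisons}")
print(f"Observed significant: {observed_rate:.1%}")
print(f"Expected by chance at 5%: {expected_by_chance:.1f} cases")
print(f"SD of a 25-word score near 88%: {binomial_sd_points(88):.1f} points")
```

With only 25 words, each word is worth 4 percentage points, so a score can shift noticeably from one administration to the next even when nothing about the stimuli has changed.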

A review of the individual scores revealed that in nine of the 12 cases (75%) the better score occurred in the compressed condition. This might lead one to think that file compression had a beneficial effect on word recognition. However, in all 12 cases (100%), the better score occurred in the condition that was tested second, irrespective of the test condition (uncompressed or compressed). This pattern suggests that at least some of the significant differences we saw were more likely due to practice effects, based on testing order, than to true differences due to file compression. A more formal analysis of order effects revealed that, on average, scores improved about 4 percentage points on the second test (compressed or uncompressed). This was just enough of a change to shift a few individual scores outside Thornton and Raffin’s critical difference range. Further analyses found that, as expected, speech scores also were affected by age and degree of hearing loss, but these main effects were similar in the compressed and uncompressed conditions (i.e., there were no significant interactions between age, or PTA, and file compression).

In summary, these findings suggest it is unlikely that typically applied file compression of NU-6 materials will affect word recognition scores in such a way as to alter your clinical decision-making. We are not, however, advocating that clinicians routinely use aggressive file compression algorithms to shrink the size of their clinical audio files. In fact, we believe that using the original, uncompressed versions of audio tests is always the preferred option. First, all normative data used for interpreting speech test findings are based on data collected using the original, uncompressed stimuli. In addition, while we believe it unlikely, it is possible that the file compression used in the study described here could have a significant effect on some other speech test (e.g., speech in noise, CAPD tests, etc.). Finally, improvements in technology, such as increased file storage capabilities on individual devices and via cloud-based storage solutions, continue to reduce the need for file compression as a space-saving option.

References

Bess, F. H. (1983). Clinical assessment of speech recognition. In D. Konkle & W. Rintelmann (Eds.), Principles of speech audiometry (pp. 127-202). University Park Press.

Hornsby, B. W. Y., & Mueller, H. G. (2013, July). Monosyllabic word testing: Five simple steps to improve accuracy and efficiency. AudiologyOnline, Article 11978. Available at www.audiologyonline.com

Mueller, H. G., & Hornsby, B. W. Y. (2020). Word recognition testing - Let's just agree to do it right! AudiologyOnline, Article 26478. Available at www.audiologyonline.com

Mueller, H. G., Rokuson, J., Ricketts, T., Dietrich, M. S., & Hornsby, B. (2022). Is it okay to use compressed NU-6 files for clinical word recognition testing? American Journal of Audiology, 1-8. https://doi.org/10.1044/2022_AJA-21-00181

Thornton, A., & Raffin, M. (1978). Speech-discrimination scores modeled as a binomial variable. Journal of Speech and Hearing Research, 21(3), 507-518. https://doi.org/10.1044/jshr.2103.507

Resources

Text Course: 20Q: Word Recognition Testing – Let’s Just Agree to do it Right!

Article: Monosyllabic Word Testing: Five Simple Steps to Improve Accuracy and Efficiency