Learning Outcomes

As a result of this course, participants will be able to:

- Identify examples of real-life listening situations where directional processing would and would not be advantageous.

- Describe the rationales for the different microphone modes of Binaural Directionality II.

- Explain how Binaural Directionality II supports different listening strategies.

Introduction

The fact that two ears are better than one is well-established. The human auditory system integrates information from both ears, providing benefits in terms of loudness, localization, sound quality, noise suppression, speech clarity and listening in noise. The ability to selectively attend to particular sounds, like a single voice among many talkers, is one of the most significant benefits of binaural hearing.

Although absolute performance may be worse than with normal hearing, binaural advantages exist even in the presence of peripheral damage to the auditory system. In fact, if audibility of auditory cues is provided via amplification, binaural advantages for those with hearing impairment are nearly as substantial as for normal-hearing listeners. However, even with bilaterally fit hearing instruments, some cues that enable binaural hearing can be disturbed.

Binaural Directionality II with Spatial Sense supports binaural auditory processing. Using 4th generation 2.4 GHz wireless technology and introduced in the ReSound LiNX2, Binaural Directionality II enables the listener to make use of better ear and awareness listening strategies in a natural way. It is supplemented by Spatial Sense, which is modeled on natural processes in the peripheral auditory system in order to deliver the best signals to the brain, enhancing localization and sound quality. In this way, the brain can effortlessly carry out what no hearing instrument system can ever do. Hearing instrument wearers can easily orient themselves in their environment, selectively pay attention to the sounds of interest to them, and shift their attention among sounds.

Binaural Processing and Hearing Instruments

In reference to hearing instruments, the term “binaural processing” has come to refer to the use of information exchanged between devices to enhance signal processing for the benefit of the hearing instrument user. However, most examples of binaural processing in hearing instruments are based on the assumption that the sound-of-interest to the hearing instrument wearer can be accurately determined by the hearing instrument system. Not only is this a grossly incorrect assumption, it also unnecessarily prevents the brain from carrying out binaural auditory processing in a natural way.

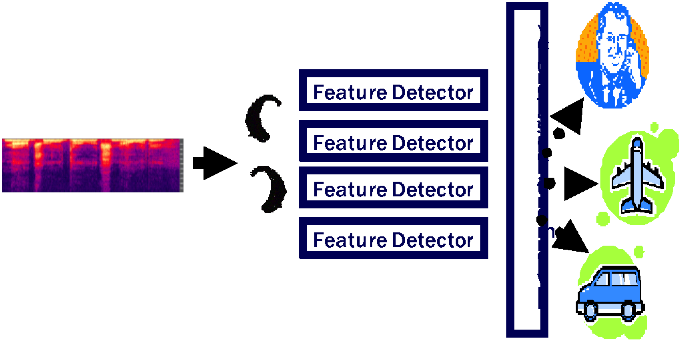

Binaural processing in hearing instruments typically uses communication between the devices to identify and enhance the loudest speech signal in the environment. Modern hearing instrument technology has made it possible not only to use hearing instrument technologies to preferentially amplify sounds coming from any direction in relation to the user, but to do this automatically. Figure 1 illustrates the logic of such a system. Acoustic features are extracted from the signal picked up by each hearing instrument. These features are compared and analyzed, resulting in an “acoustic scene”. The acoustic scene categorizes the types of sounds in the environment as well as the general direction of certain sounds, speech in particular. Based on the acoustic scene, the hearing instrument system applies technologies including directionality, noise reduction, and gain to preferentially amplify the most intense speech signal.

Figure 1. Most hearing instruments with binaural processing attempt to identify and enhance the loudest speech in the environment without regard for listener intent.

The rationale for this approach is that it supports a better ear listening strategy by amplifying speech on one ear or from one direction while attenuating noise on the opposite ear or from other directions. On the surface, this makes sense. It emulates the naturally occurring phenomenon whereby listeners move their heads or lean toward a speaker-of-interest to enhance the audibility of the speech on the side with the best signal-to-noise ratio. Such a strategy can improve signal-to-noise ratio in the better ear by 8 dB or more (Bronkhorst & Plomp, 1988). However, a problem arises in that the system decides on behalf of the hearing instrument wearer what the most salient sound in the environment is at any given time.

Nearly any listening situation provides examples of why hearing instrument binaural processing carried out in this way creates disadvantages for the listener. This is because real-world listening environments are dynamic. The signal of interest and competing noises may move, and they may change. The talker who is of interest one moment is the competing noise source the next. A noisy restaurant is often used as an example of when hearing instrument binaural processing as described above would be advantageous. As long as the situation remains static, and there is only one person talking from one direction that the hearing instrument wearer wants to hear, this approach can be desirable. But as the talker of interest switches from the right to the left side of the listener and back again, and as a new talker of interest – like a waiter – appears from behind, the listener is restricted by the information the hearing instrument system has decided is important.

Everyone who fits hearing instruments is familiar with the term “directional benefit”. This quantifies the improvement in signal-to-noise ratio for a signal occurring from a particular direction (usually in front of the listener) that is provided by directional processing. But what happens when the signal-of-interest comes from a different direction, as will more often than not be the case at some point in real-world listening situations? Not only will the off-axis signal not be preferentially enhanced, but it will actually be suppressed. The degree to which this occurs depends on the angle of arrival and the directional characteristics of the system. When off-axis signals are the signals of interest, the effect of directional processing becomes a “directional deficit”, meaning that it is detrimental to audibility of the desired sound.
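The dependence of the directional deficit on angle of arrival can be illustrated with an idealized first-order directional microphone. This is only a sketch: the textbook free-field pattern `a + (1 - a) * cos(theta)` is an assumption used for illustration, not the measured pattern of any particular hearing instrument on a real head, and the function names and the -60 dB floor are hypothetical.

```python
import math


def first_order_response(angle_deg: float, a: float) -> float:
    """Magnitude response of an idealized first-order directional microphone.

    a = 1.0 is omnidirectional, a = 0.5 is a cardioid,
    a = 0.25 is a hypercardioid. The on-axis (0 degree) response is 1.0.
    """
    theta = math.radians(angle_deg)
    return abs(a + (1.0 - a) * math.cos(theta))


def attenuation_db(angle_deg: float, a: float, floor_db: float = -60.0) -> float:
    """Level at angle_deg relative to the on-axis level, in dB.

    Values are clipped at floor_db so that a perfect null does not
    produce minus infinity.
    """
    r = first_order_response(angle_deg, a)
    if r <= 0.0:
        return floor_db
    return max(20.0 * math.log10(r), floor_db)


if __name__ == "__main__":
    # Cardioid: speech arriving from the side or rear is attenuated,
    # which is the "directional deficit" when that speech is the target.
    for angle in (0, 45, 90, 135, 180):
        print(f"{angle:3d} deg: {attenuation_db(angle, 0.5):6.1f} dB")
```

In this idealized model a talker at 90 degrees is attenuated by about 6 dB with a cardioid pattern, while an omnidirectional pattern (`a = 1.0`) leaves the same talker unattenuated.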

One of the strategies listeners make use of that is commonly thought to mitigate the directional deficit and limiting nature of hearing instrument binaural processing is orienting behavior via head movements. This refers to small head movements which listeners naturally carry out to make sense of their auditory environment and help them locate and focus on signals of interest. Head movements provide additional acoustic cues that the brain can efficiently use to this end. In the case where hearing instrument technology is applied that enhances a particular signal, and the actual signal-of-interest occurs from a different direction, it is assumed that compensatory head movements will allow the user to quickly re-orient to the desired sound. So in the noisy restaurant example, this behavior is assumed to enable the user to follow a conversation occurring around him or her.

Brimijoin and colleagues (2014) tracked head movements of listeners fit with directional microphones that provided either high or low in situ directionality to test the idea that directionality might complicate natural orienting behavior. They asked participants to locate a particular talker in a background of speech babble. Their results showed that not only did it take longer for listeners wearing highly directional microphones to locate the speaker of interest, but that they also exhibited larger head movements and even moved their heads away from the speaker of interest before locating the target. This represents a clear difference in the strategy employed when wearing highly directional microphones versus microphones providing little-to-no directionality. Rather than a simple orienting movement, listeners were performing a more complex search behavior when wearing the highly directional microphones. The authors suggested that the longer, more complex search behavior could result in more of a new target signal being lost in situations such as a multitalker conversation in the noisy restaurant scenario. In all, this switch in strategy leads to more effortful listening, which is in complete contrast to the desired effect of the processing.

Supporting True Binaural Processing

Surround Sound by ReSound is the proprietary signal processing system that is driven by a philosophy of creating a natural hearing experience. Surround Sound by ReSound technologies are inspired by natural hearing processes and seek to leverage technology to support - not replace - natural hearing. Binaural Directionality II with Spatial Sense exemplifies this philosophy. It encompasses a binaural microphone steering strategy that enables hearing instrument wearers to use different listening strategies, and builds on this with processing to enhance an auditory sense of space designed in accordance with knowledge of auditory physiology and open ear acoustics. The design necessarily includes the human auditory system, including the brain, as an integral part of the binaural feature.

Binaural Listening Strategies

As previously described, one strategy that listeners employ is referred to as the better ear strategy. According to this strategy, listeners will accommodate their position relative to the desired sound to maximize audibility of that sound, and rely on the ear with the best representation (SNR) of that sound. The directivity patterns of both ears contribute to this ability to focus and were discussed at length by Zurek (1993). An extension to the better ear strategy model includes the omnidirectional directivity effects of binaural listening to describe the listener’s ability to remain connected and aware of the surrounding soundscape. Where the head shadow effect plays a role in improving the signal-to-noise ratio in one of the two ears, the awareness strategy looks at how the two ears, due to their geometric location on the head, allow for the head to be acoustically transparent and keep the listener connected to their listening environment. The listener can make use of either the better ear strategy or the awareness strategy at will.

An analogy to these two strategies exists in the visual domain. With the right eye closed, people with normal visual processing will see a solid image of the left side of their nose, with the nose blocking visual information occurring to the right of the nose. The opposite occurs if the left eye is closed. The nose is analogous to the head shadow in the auditory domain, where some acoustic information from one side of the head is not available to the opposite ear. With both eyes open, each side of the nose is visible but transparent, such that a fused image of the entire visual field from each eye is also visible. It is possible to choose to focus on the nose or to ignore this visual information and focus on something else in the visual field. In the auditory domain, binaural listening strategies enable focusing on a particular sound by taking advantage of improved SNR or monitoring the entire auditory scene by means of the head’s acoustic transparency.

Binaural Directionality II

With Binaural Directionality II, the brain receives all sound inputs and can choose to attend to certain signals in the auditory scene, making use of both the better ear and awareness listening strategies. These strategies can be used by the aided listener only when the sound environment is made completely accessible by the hearing instruments. Binaural Directionality II steers the microphone configuration of two hearing instruments to support binaural sound processing by the brain. It is the only truly binaural strategy, taking advantage of scientifically proven listening strategies incorporating acoustic effects and auditory spatial attention strategies (Zurek, 1993; Akeroyd, 2004; Edmonds & Culling, 2005; Shinn-Cunningham, Ihlefeld, & Satyavarta, 2005; Simon & Levitt, 2007).

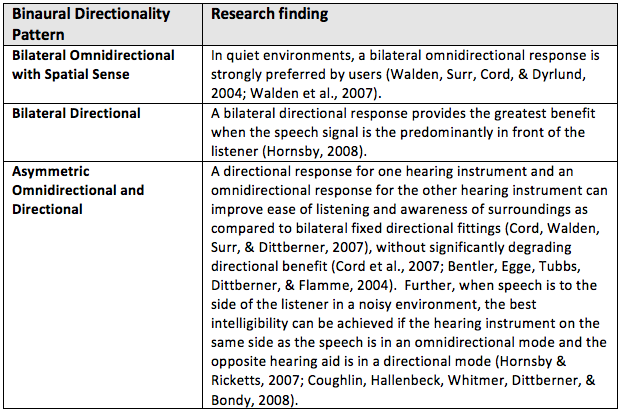

Binaural Directionality II uses ReSound’s 4th generation 2.4 GHz wireless technology on the SmartRange platform to coordinate the microphone modes between both ears for an optimal binaural response. Front and rear speech detectors on each hearing instrument estimate the location of speech with respect to the listener. The environment is also analyzed for the presence or absence of noise. Through wireless transmission, the decision to switch the microphone mode for one or both of the hearing instruments is made based on the inputs received by the four speech detectors in the binaural set of devices. The possible outcomes are a bilateral omnidirectional response with Spatial Sense, a bilateral directional response, or an asymmetric directional response with directionality on either the left or the right ear. These outcomes were derived from external research regarding the optimal microphone responses of two hearing instruments in different sound environments. Table 1 provides the justification for each possible binaural microphone response.
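The steering decision described above can be sketched as a small decision function. This is a hypothetical illustration, not the actual ReSound algorithm: it assumes the outputs of the four speech detectors have already been fused into a single estimated speech location, that the omnidirectional ear in an asymmetric response is the one facing the speech, and that the system falls back to the bilateral omnidirectional response when no rule applies. All names (`SpeechLocation`, `MicMode`, `select_mic_mode`) are invented for this sketch.

```python
from enum import Enum


class SpeechLocation(Enum):
    # Hypothetical summary of what the front and rear speech
    # detectors on both instruments jointly report.
    NONE = "none"
    FRONT = "front"
    LEFT = "left"
    RIGHT = "right"
    REAR = "rear"


class MicMode(Enum):
    BILATERAL_OMNI = "bilateral omnidirectional with Spatial Sense"
    BILATERAL_DIRECTIONAL = "bilateral directional"
    LEFT_DIRECTIONAL = "asymmetric: left directional, right omnidirectional"
    RIGHT_DIRECTIONAL = "asymmetric: right directional, left omnidirectional"


def select_mic_mode(speech: SpeechLocation, noise_present: bool) -> MicMode:
    """Pick a binaural microphone response for the estimated scene."""
    if not noise_present:
        # Quiet surroundings: keep the whole soundscape accessible.
        return MicMode.BILATERAL_OMNI
    if speech is SpeechLocation.FRONT:
        # Speech in front with noise present: maximize directional benefit.
        return MicMode.BILATERAL_DIRECTIONAL
    if speech is SpeechLocation.RIGHT:
        # Assumption: the ear facing the speech stays omnidirectional.
        return MicMode.LEFT_DIRECTIONAL
    if speech is SpeechLocation.LEFT:
        return MicMode.RIGHT_DIRECTIONAL
    # Assumption: with no speech or speech from behind, avoid
    # suppressing off-axis sounds.
    return MicMode.BILATERAL_OMNI


if __name__ == "__main__":
    print(select_mic_mode(SpeechLocation.FRONT, noise_present=True).value)
    print(select_mic_mode(SpeechLocation.RIGHT, noise_present=True).value)
```

The point of the sketch is the shape of the decision: directionality is applied only when both the noise estimate and the speech location justify it, and the default keeps both ears open.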

Table 1. Research study findings on optimal binaural microphone response were instrumental in developing the four bilateral microphone responses of Binaural Directionality II.

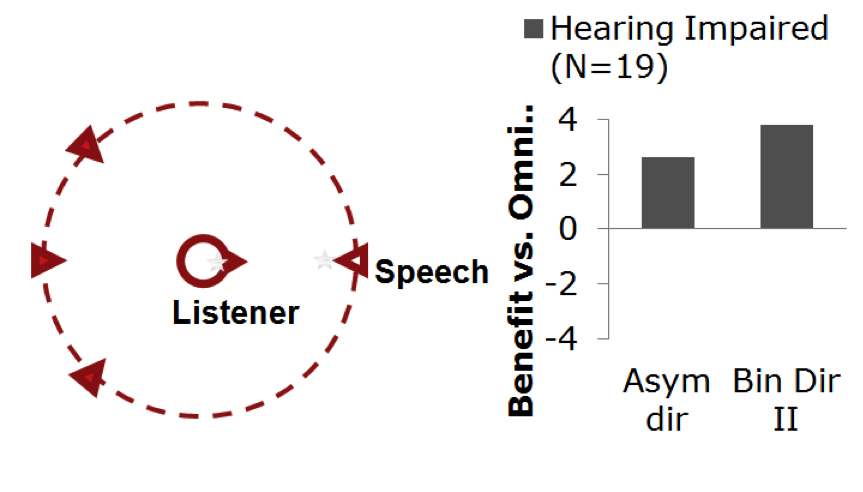

In-house data on speech recognition in noise tasks support the rationales for the different microphone modes. Hearing impaired listeners were tested with different microphone mode configurations in conditions that theoretically would favor a particular response. When speech is presented from in front of the listener and noise from behind, a bilateral directional response is expected to provide the most benefit, and Binaural Directionality II will switch to bilateral directionality under these conditions. Figure 2 illustrates how this microphone mode provides the most SNR improvement.

Figure 2. With speech in front of the listener and noise from behind, a bilateral directional response provides the most directional benefit. Binaural Directionality II will switch to a bilateral directional microphone mode under these conditions.

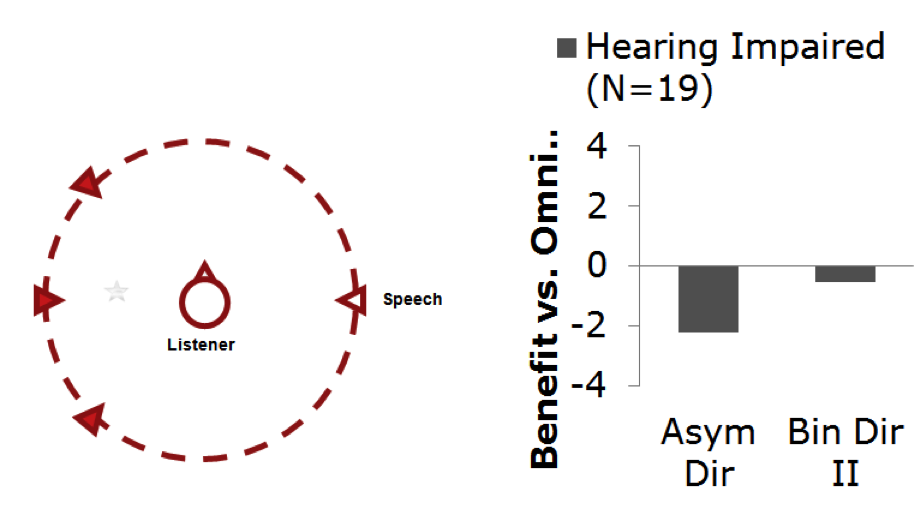

In contrast, speech occurring from another location than in front can result in a directional deficit. When speech was presented from one side of the listener and noise from the other, a directional deficit was demonstrated for the fixed asymmetrical directional condition. In this condition, the right ear was always directional and the left ear was always omnidirectional. Binaural Directionality II also provided an asymmetric response in these conditions, except that the system was steered to provide directionality on the left ear and omnidirectionality on the right. This ensures audibility of the speech, allowing the wearer to make use of the awareness listening strategy. As expected, performance with Binaural Directionality II in this condition was not significantly different than omnidirectional.

Figure 3. When speech is presented from one side and noise from the other, a directional deficit was noted for the asymmetrical condition, in which the right ear was always directional. Binaural Directionality II also provides an asymmetrical response in this situation, but with directionality on the left ear. This erases the directional deficit, resulting in performance not significantly different from omnidirectionality.

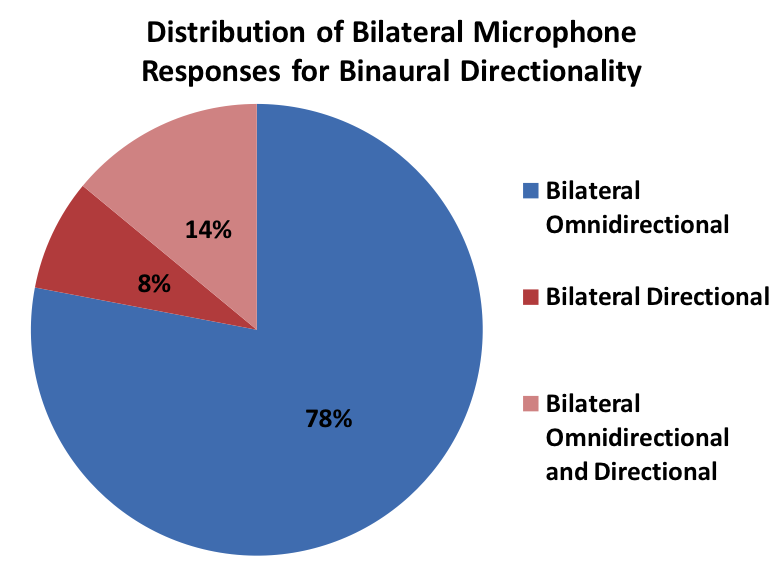

With a microphone steering strategy, it is critical that the optimum mode is selected in each listening environment. Datalogging results from 29 trial participants who wore the hearing aids for a 4-week period support that the steering of microphone modes provides the desired response in varying listening situations. These results indicated that the hearing instruments were in the Bilateral Omnidirectional mode with Spatial Sense 78% of the time, and were in some form of directional mode (Bilateral Directional or Asymmetric Directional) 22% of the use time. This is roughly in agreement with published research stating that omnidirectional processing is appropriate roughly 70% of the time and directional processing is beneficial the remaining 30% of the time (Shinn-Cunningham et al., 2005). In addition, it is exactly in agreement with survey results from hearing aid users with switchable directionality who understood and used the directional feature. These users reported on average that they spent 78% of their use time in a bilateral omnidirectional microphone configuration and the remaining 22% of the time in a bilateral directional configuration (Cord, Surr, Walden, & Olson, 2002).

Figure 4. Results of datalogging showing average use time in different microphone mode configurations with Binaural Directionality II.

Enhancing Localization and Sound Quality with Spatial Sense

As the datalogging results indicate, users fit with Binaural Directionality II are likely to spend much of their hearing instrument use time in relatively quiet conditions where a bilateral omnidirectional response will be selected. A strong preference for omnidirectionality in quiet listening conditions has also been demonstrated, and this preference is likely driven by sound quality. Surround Sound by ReSound has been proven top-rated in terms of sound quality (Jespersen, 2014), but could it be even better?

Consider sound quality in reproduced sound, such as when listening to music or speech through stereo headphones connected to a player device. If it is a stereo recording, it may be possible to perceive the sound as being more to the left, to the right or in the center. However, it will sound as if these sounds are occurring within the head. Even if the quality of the sound reproduction is judged very highly, it won’t sound as if the listener is actually in the real listening situation. The reproduction thus lacks an aspect of naturalness, because it lacks the spaciousness of a real listening situation.

Spatial hearing refers to the listener’s ability to segregate the incoming stream of sound into auditory objects, resulting in an internal representation of the auditory scene. An auditory object is a perceptual estimate of the sensory inputs that are coming from a distinct physical item in the external world (Shinn-Cunningham & Best, 2008). For example, auditory objects in a kitchen auditory scene might include the sound of the refrigerator door opening, the sound of the water running in the sink, and the sound of an onion being chopped. The ability to form these auditory objects and place them in space allows the listener to rapidly and fluidly choose and shift attention among these objects. Furthermore, the formation of an auditory scene provides a natural-sounding listening experience.

The auditory system must construct this spatial representation by combining multiple cues from the acoustic input. These include differences in time of arrival of sounds at each ear (Interaural Time Difference – ITD), differences in level of sounds arriving at each ear (Interaural Level Difference – ILD) as well as spectral “pinna” cues. Head movements also are important contributors as the auditory system quickly analyzes how the relationships among these cues change. Disrupting any of these cues interferes with spatial hearing, and it is known that hearing aids may distort some or all of them.
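To give a sense of the magnitude of the ITD cue, the following sketch uses Woodworth's spherical-head approximation, a common textbook model that is swapped in here for illustration and does not appear in the source. The head radius of 8.75 cm and speed of sound of 343 m/s are assumed typical values, and the function name is hypothetical. ILD is strongly frequency dependent and has no comparably simple formula, so it is not modeled.

```python
import math


def woodworth_itd_seconds(
    azimuth_deg: float,
    head_radius_m: float = 0.0875,  # assumed average adult head radius
    speed_of_sound_m_s: float = 343.0,  # assumed, air at about 20 degrees C
) -> float:
    """Approximate ITD for a distant source using a rigid spherical head.

    azimuth_deg is measured from straight ahead (0) toward one ear (90);
    the approximation is intended for 0 to 90 degrees.
    """
    theta = math.radians(azimuth_deg)
    return (head_radius_m / speed_of_sound_m_s) * (theta + math.sin(theta))


if __name__ == "__main__":
    for azimuth in (0, 30, 60, 90):
        itd_us = woodworth_itd_seconds(azimuth) * 1e6
        print(f"{azimuth:2d} deg: {itd_us:5.0f} microseconds")
```

Under these assumptions the ITD grows from zero for a source straight ahead to roughly 650 microseconds for a source directly to one side, which shows how small the timing differences are that the auditory system relies on.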

Spatial Sense is a unique Surround Sound by ReSound technology that accounts for the three hearing instrument-related issues that can interfere with spatial cues:

- Placement of the microphones above the pinna in Behind-the-Ear (BTE) and Receiver-in-the-Ear (RIE) styles removes spectral pinna cues (Orton & Preves, 1979; Westerman & Topholm, 1985).

- Placement of the microphones above the pinna in BTE and RIE styles distorts ILD (Udesen, Piechowiak, Gran, & Dittberner, 2013).

- Independently functioning Wide Dynamic Range Compression in two bilaterally fit hearing instruments can distort ILD (Kollmeier, Peissig, & Hohmann, 1993).

How Spatial Sense Works

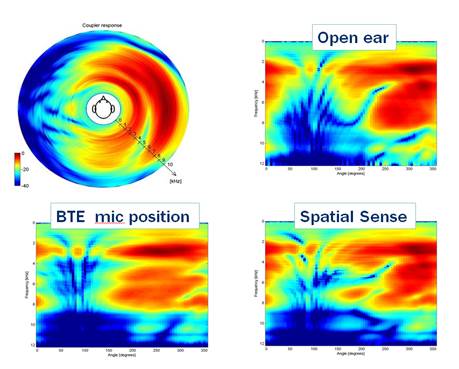

Spatial Sense integrates two technologies to preserve the acoustic cues for spatial hearing: pinna restoration and binaural compression. Pinna restoration compensates for the loss of spectral pinna cues and allows an accurate estimate of the true ILD at the eardrum of the hearing instrument wearer. This processing uses dual microphones and works on the same principle as directional processing. But whereas the goal of directional processing is to maximize SNR improvement for sounds coming from a particular direction, the goal of pinna restoration is to provide directivity characteristics that resemble those of the open ear canal for sounds coming from any angle. An example of how the pinna restoration algorithm in Spatial Sense works is shown in Figure 5. These measurements are done on the right ear. The upper panels show the attenuation (red = no attenuation; blue = much attenuation) in the open ear canal for sounds reaching the ear canal from all angles around the head. Note that the least attenuation occurs on the right side, and the most occurs on the left side. This is due mainly to the head shadow effect. For angles from in front to behind the head on the right side, the pattern of attenuation is largely due to the pinna. The lower left panel shows the pattern of attenuation when the hearing aid microphone is placed above the pinna as is the case for BTE and RIE instruments. The distinctive pattern on the same side of the head is radically changed from the open ear condition. Compare this to the bottom right panel, which is measured with the microphone position above the pinna and Spatial Sense activated. The characteristic pattern of attenuation on the right side of the head is well-preserved.

Figure 5. The angle-dependent attenuation of different frequency sounds for the open right ear, BTE microphone position and Spatial Sense pinna restoration. Dark red indicates little-to-no attenuation. Note how the characteristic attenuation pattern for the open ear is emulated by Spatial Sense.

The other component of Spatial Sense is a binaural compression algorithm. This type of processing seeks to preserve ILD cues that might be reduced when WDRC is applied independently in a pair of bilaterally fit devices. Because sounds reaching the ear furthest from a sound source will be less intense than sounds reaching the ear nearest to the sound source, WDRC will apply relatively more gain to the softer sound at the far ear, which reduces the level difference between the ears. While ILD, which is most salient in the high frequencies, is not the dominant localization cue, preserving the natural relationship between ITD and ILD cues is a main determinant of spatialization (Weinrich, 1992; MacPherson & Sabin, 2007).
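A short worked example shows how independent compression shrinks the ILD. It is a sketch under stated assumptions: a single-channel compressor with a hypothetical 20 dB linear gain, a 50 dB SPL kneepoint and a 2:1 compression ratio, none of which come from the source or describe a specific fitting.

```python
def wdrc_gain_db(
    input_db: float,
    linear_gain_db: float = 20.0,  # assumed gain below the kneepoint
    knee_db: float = 50.0,  # assumed compression kneepoint
    ratio: float = 2.0,  # assumed compression ratio
) -> float:
    """Gain of a simple WDRC stage: linear below the knee, compressed above."""
    if input_db <= knee_db:
        return linear_gain_db
    # Above the knee the output grows by only 1/ratio dB per input dB,
    # so the gain falls as the input level rises.
    return linear_gain_db - (input_db - knee_db) * (1.0 - 1.0 / ratio)


def output_ild_independent(near_db: float, far_db: float) -> float:
    """Output ILD when each ear applies WDRC without regard to the other."""
    near_out = near_db + wdrc_gain_db(near_db)
    far_out = far_db + wdrc_gain_db(far_db)
    return near_out - far_out


if __name__ == "__main__":
    near, far = 70.0, 60.0  # a 10 dB ILD at the microphones
    print("input ILD: ", near - far)
    print("output ILD:", output_ild_independent(near, far))
```

With these values the near ear receives 10 dB of gain and the far ear 15 dB, so a 10 dB ILD at the input becomes a 5 dB ILD at the output. With a 2:1 ratio, the ILD is halved whenever both ears are above the kneepoint.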

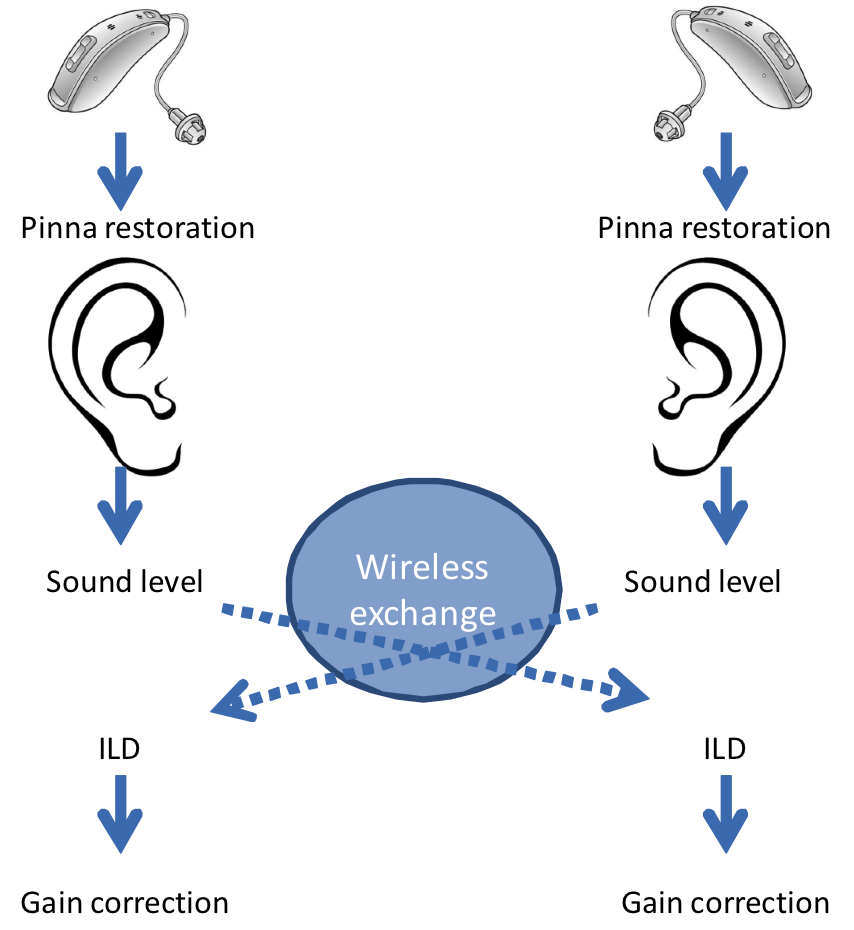

The binaural compression component of Spatial Sense relies in part on the accuracy of the pinna restoration and in part on the wireless exchange of data between the two hearing instruments. The magnitude of the sound pressure at the eardrum is strongly affected by the angle to the sound source, resulting in ILD of up to 20 dB as a function of incident angle at high frequencies (Shaw, 1974; Shaw & Vaillancourt, 1985). Udesen and colleagues (2013) reported the effects on ILD of 46 different microphone locations in and around the outer ear. While humans are sensitive to ILD changes as small as 0.5 dB, the measurements revealed ILD errors of 30 dB and greater depending on the interaction of angle of sound incidence and microphone position. Some of the largest errors occurred for positions typical of BTE and RIE hearing instruments. Because a binaural compression algorithm seeks to preserve ILD, it is critical to be able to estimate the ILD that would correspond to that of the open ear. As demonstrated in Figure 5, pinna restoration enables this capability.

Consistent with the ReSound philosophy that drives Surround Sound by ReSound, binaural compression is inspired by the physiology of the normally functioning ear. Many hearing care practitioners are familiar with the effects of efferent innervation in the auditory system. These effects can be demonstrated via measurements of otoacoustic emissions (OAE). If one ear is stimulated, inhibition of outer hair cell activity - as reflected in reduced amplitude of OAE - is observed (Berlin, Hood, Hurley, Wen, & Kemp, 1993; Maison, Micheyl, & Collet, 1998). This behavior suggests a reduction in sensitivity for the ear furthest from salient sounds, which indicates that noise suppression may be a benefit. This type of auditory efferent activity occurs when a signal is carried from the stimulated ear to the brain, and the brain then sends a control signal to the opposite ear. It relies on the crossing of information from both ears to both sides of the brain. In the case of hearing loss due to hair cell damage, it can be assumed that auditory efferent activity is reduced. The ReSound approach to binaural compression is unique in that it uses the wireless ear-to-ear communication in the hearing instruments to emulate the crossing of signals from ear-to-ear via the brain (Kates, 2004). The correction to hearing instrument gain in order to preserve ILD will be carried out on the ear with the least intense signal. This is to emulate the inhibitory effects of auditory efferents.
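The idea of correcting gain at the ear with the least intense signal can be sketched as follows. This is a minimal illustration, not the ReSound or patented implementation: it assumes a single-channel compressor with hypothetical parameters, that each device knows the other's input level via the wireless link, and that the correction fully restores the input ILD. A real system would operate per frequency band and on estimated open-ear levels.

```python
def wdrc_gain_db(
    input_db: float,
    linear_gain_db: float = 20.0,  # assumed gain below the kneepoint
    knee_db: float = 50.0,  # assumed compression kneepoint
    ratio: float = 2.0,  # assumed compression ratio
) -> float:
    """Gain of a simple WDRC stage: linear below the knee, compressed above."""
    if input_db <= knee_db:
        return linear_gain_db
    return linear_gain_db - (input_db - knee_db) * (1.0 - 1.0 / ratio)


def binaural_gains_db(left_db: float, right_db: float) -> tuple[float, float]:
    """Return (left_gain, right_gain) with the input ILD preserved.

    left_db and right_db stand in for the levels the two devices
    would exchange wirelessly (hypothetical interface).
    """
    left_gain = wdrc_gain_db(left_db)
    right_gain = wdrc_gain_db(right_db)

    input_ild = left_db - right_db
    output_ild = (left_db + left_gain) - (right_db + right_gain)
    # How many dB of ILD independent compression would remove.
    ild_loss = abs(input_ild) - abs(output_ild)

    # Apply the correction only at the ear with the softer signal,
    # loosely analogous to efferent inhibition of the far ear.
    if left_db >= right_db:
        right_gain -= ild_loss
    else:
        left_gain -= ild_loss
    return left_gain, right_gain


if __name__ == "__main__":
    left_gain, right_gain = binaural_gains_db(70.0, 60.0)
    print("gains:", left_gain, right_gain)
    print("output ILD:", (70.0 + left_gain) - (60.0 + right_gain))
```

With a 70 dB input on the left and 60 dB on the right, independent compression would give gains of 10 and 15 dB. The sketch lowers the right-ear gain to 10 dB, so the 10 dB ILD survives at the output and the louder ear's gain is left untouched.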

Figure 6 illustrates how Spatial Sense is modeled after the natural ear, including pinna restoration for an accurate estimate of ILD, wireless exchange of information to emulate the crossing of signals between ears, and the correction of ILD based on the ear with the least intense signal to emulate the inhibitory effects of auditory efferents.

Figure 6. The wireless link between hearing aids is analogous to the crossing of signals between ears in the auditory system. This helps to emulate ILD preservation in a way most similar to normal auditory processes.

Evidence for Spatial Sense

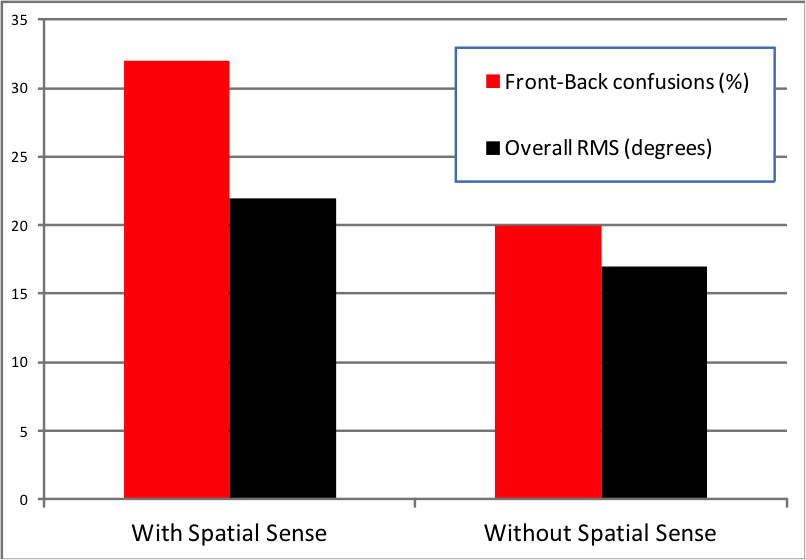

Laboratory trials of localization performance with hearing impaired listeners showed a clear benefit of Spatial Sense over omnidirectional processing. Figure 7 shows the improvement for 10 participants both in terms of reduction of front-back confusions as well as overall localization errors for sounds coming from multiple angles around the listener. While the improvement in front-back localization can be attributed to the pinna restoration alone, the overall improvement in localization relies on the combination of algorithms in Spatial Sense.

Figure 7. Errors in front-back localization and overall localization errors for sounds coming from multiple angles were reduced with Spatial Sense.

Nine participants also wore hearing instruments programmed with Binaural Directionality and Binaural Directionality II with Spatial Sense in a single-blinded crossover design. They completed the Speech, Spatial and Qualities of Hearing Scale (SSQ) (Gatehouse & Noble, 2004) and a subjective rating questionnaire for each condition. One general finding is that ratings clustered in the positive direction regardless of whether they were evaluating Binaural Directionality or Binaural Directionality II with Spatial Sense. This supports the superior performance and excellent sound quality of existing products such as the ReSound LiNX and ReSound Verso, which feature Binaural Directionality. No significant differences were observed between the conditions except for “ease of listening” on the SSQ and tonal quality on the subjective rating questionnaire. In both cases Binaural Directionality II with Spatial Sense was assigned the significantly higher rating.

Summary

The brain can only process and analyze the sound environment based on the inputs received from the ears. Traditional wireless solutions for transmission between two hearing instruments can optimize the audibility and beamforming characteristics of a fitting, but do not necessarily lead to a natural, binaural processing of sound. The underlying assumption made by such systems is that the signal of interest is stable and predictable, which is not the case in the majority of real-world listening situations. The Surround Sound by ReSound signal processing system is guided by the philosophy of providing a natural hearing experience. Therefore, Binaural Directionality II with Spatial Sense allows for the brain to receive the best possible representation of the sound, by focusing on the user and natural sound processing as opposed to the hearing instruments and their prescribed “signal of interest.” With this approach, the user determines the signal of interest. In addition, Spatial Sense preserves localization cues to allow for true spatialization and the most natural listening experience ever with hearing instruments.

References

Akeroyd, M.A. (2004). The across frequency independence of equalization of interaural time delay in the equalization cancellation model of binaural unmasking. J Acoust Soc Am, 116, 1135–48.

Bentler, R.A., Egge, J.L.M., Tubbs, J.L., Dittberner, A.B., & Flamme, G.A. (2004). Quantification of directional benefit across different polar response patterns. J Am Acad Audiol, 15, 649-59.

Berlin, C.I., Hood, L.J., Hurley, A.E., Wen, H., & Kemp, D.T. (1993). Binaural noise suppresses linear click-evoked otoacoustic emissions more than ipsilateral or contralateral noise. Hearing Research, 87, 96-103.

Brimijoin, W.O., Whitmer, W.M., McShefferty, D., & Akeroyd, M.A. (2014). The effect of hearing aid microphone mode on performance in an auditory orienting task. Ear Hear, 35(5), e204-e212.

Bronkhorst, A.W., & Plomp, R. (1988). The effect of head induced interaural time and level differences on speech intelligibility in noise. J Acoust Soc Am, 83, 1508-1516.

Cord, M.T., Surr, R.K., Walden, B.E., & Olson, L. (2002). Performance of directional microphone hearing aids in everyday life. J Am Acad Audiol, 13, 295-307.

Cord, M.T., Walden, B.E., Surr, R.K., & Dittberner, A.B. (2007). Field evaluation of an asymmetric directional microphone fitting. J Am Acad Audiol, 18, 245-56.

Coughlin, M., Hallenbeck, S., Whitmer, W., Dittberner, A., & Bondy, J. (2008, April). Directional benefit and signal-of-interest location. Seminar presented at: American Academy of Audiology convention. Charlotte, NC.

Edmonds, B.A., & Culling, J.F. (2005). The spatial unmasking of speech: evidence for within-channel processing of interaural time delay. J Acoust Soc Am, 117, 3069–78.

Gatehouse, S., & Noble, W. (2004). The Speech, Spatial and Qualities of Hearing Scale. Int J Audiol, 43(2), 85-99.

Hornsby, B. (2008, June). Effects of noise configuration and noise type on binaural benefit with asymmetric directional fittings. Seminar presented at 155th Meeting of the Acoustical Society of America. Paris, France.

Hornsby, B., & Ricketts, T. (2007). Effects of noise source configuration on directional benefit using symmetric and asymmetric directional hearing aid fittings. Ear Hear, 28, 177-86.

Jespersen, C.T. (2014). Independent study identifies a method for evaluating hearing instrument sound quality. Hear Rev, 21(3), 36-40.

Kates, J.M. (2004). Binaural compression system. US Patent Application 20040190734 A1.

Kollmeier, B., Peissig, J., & Hohmann, V. (1993). Real-time multiband dynamic range compression and noise reduction for binaural hearing aids. Journal of Rehabilitation Research and Development, 30, 82-94.

MacPherson, E.A., & Sabin, A.T. (2007). Binaural weighting of monaural spectral cues for sound localization. J Acoust Soc Am, 121(6), 3677-3688.

Maison, S., Micheyl, C., & Collet, L. (1998). Contralateral frequency-modulated tones suppress transient-evoked otoacoustic emissions in humans. Hearing Research, 117, 114-118.

Orton, J.F., & Preves, D. (1979). Localization as a function of hearing aid microphone placement. Hearing Instruments, 30(1), 18-21.

Shaw, E.A.G. (1974). Transformation of sound pressure level from the free field to the eardrum in the horizontal plane. J Acoust Soc Am, 56, 1848-1861.

Shaw, E.A.G., & Vaillancourt, M.M. (1985). Transformation of sound pressure level from the free field to the eardrum presented in numerical form. J Acoust Soc Am, 78, 1120-1123.

Shinn-Cunningham, B.G., & Best, V. (2008). Selective attention in normal and impaired hearing. Trends Amplif, 12(4), 283-299.

Shinn-Cunningham, B., Ihlefeld, A., & Satyavarta, E.L. (2005). Bottom-up and top-down influences on spatial unmasking. Acta Acustica united with Acustica, 91, 967-79.

Simon, H., & Levitt, H. (2007). Effect of dual sensory loss on auditory localization: Implications for intervention. Trends Amplif, 11, 259-72.

Udesen, J., Piechowiak, T., Gran, F., & Dittberner, A. (2013, August). Degradation of spatial sound by the hearing aid. In T. Dau, S. Santurette, J.S. Dalsgaard, L. Tanebjaerg, T. Andersen, & T. Poulsen (eds), Proceedings of ISAAR 2013: Auditory plasticity – listening with the Brain, 4th symposium on auditory and audiological research. Nyborg, Denmark.

Walden, B., Surr, R., Cord, M., & Dyrlund, O. (2004). Predicting hearing aid microphone preference in everyday listening. J Am Acad Audiol, 15, 365-96.

Walden, B., Surr, R., Cord, M., Grant, K., Summers, V., & Dittberner, A. (2007). The robustness of hearing aid microphone preferences in everyday environments. J Am Acad Audiol, 18, 358-79.

Weinrich, S.G. (1992, March). Improved externalization and frontal perception of headphone signals. Presentation at the 92nd Convention of the Audio Engineering Society, Vienna, Austria.

Westerman, S., & Topholm, J. (1985). Comparing BTEs and ITEs for localizing speech. Hearing Instruments, 36(2), 20-24.

Zurek, P.M. (1993). Binaural advantages and directional effects in speech intelligibility. In G. Studebaker & I. Hochberg (Eds.), Acoustical factors affecting hearing aid performance. Boston: College-Hill.

Citation

Groth, J. (2016, May). Hearing aid directionality with binaural processing. AudiologyOnline, Article 17272. Retrieved from www.audiologyonline.com