From the Desk of Gus Mueller

Michael Jackson had just released Thriller, Chariots of Fire was showing at the theaters, a college quarterback named John Elway was making news at Stanford, and I was attending the ASHA convention in Toronto. It was November 1982. I recall walking down the aisle in the exhibit hall and hearing my name called. I was being summoned to the Bernafon booth, where they had on display a new probe-tube microphone real-ear system. It was a prototype of what would soon become the Rastronics CCI-10, named after the developer Steen Rasmussen.

Gus Mueller

At the time, probe-microphone measures had been around for a while; we were conducting hearing aid research at Walter Reed Medical Center using this technique. But the only system available, an interface developed by Dave Preves, required placing the microphone itself down in the ear canal. This worked okay for research, but wasn’t practical for clinical applications. This new Rastronics system, where only a small silicone tube went down into the ear canal, was pretty slick, and I was impressed. The future for clinical probe-mic measures looked bright.

And bright it was. Following the introduction of the Rastronics, we soon saw similar models from Acoustimed, Bosch, Madsen and Frye. Audiologists were delighted to throw out the tedious and unreliable functional gain measures that they had been using to verify prescriptive fittings, and quickly latched onto this new thing called “insertion gain.” One model was even named the “Insertion Gain Optimizer”—how do you get better than that?

By the mid-1980s, probe-mic testing was beginning to be considered the clinical norm for verification; terminology and procedures were being standardized, and early validity and reliability studies were very encouraging. Many assumed that it would only be a few more years before all hearing aids would be verified in this manner. After all, who wouldn’t want to know the aided SPL being delivered to their patients' ears?

All this happened 30 years ago. How are we doing today?

Gus Mueller, Ph.D.

Contributing Editor

January 2014

To browse the complete collection of 20Q with Gus Mueller articles, please visit www.audiologyonline.com/20Q

20Q: Real-Ear Probe-Microphone Measures — 30 Years of Progress?

1. You mention progress? I just saw a new system where the core processing is a small device with a USB connection to a laptop. That sure beats the big cumbersome systems of years ago.

You’re right. Our systems of today are smaller and more sophisticated, but there are different ways of assessing progress. How about a mobile phone analogy? Like probe-mic equipment, the cell phones of today are cuter, with considerably more advanced technology than what we had in the mid-1980s. But here’s a different type of progress to consider. In 1991, 3% of the U.S. population had a cell phone—today that number is at 91%. By comparison, a 1991 ASHA survey revealed that 45% of audiologists used probe-mic measures for fitting hearing aids. Care to guess what that use rate is today?

2. I see where you’re going, but the cell phone comparison is a little lame. You can’t call your mom on Mother’s Day with your probe-mic equipment!

True, but you could call her with a landline phone, and that would get the job done very nicely. That’s the difference: when it comes to fitting hearing aids, there is no suitable alternative to probe-mic measures. And I might add that at one time, several of us assumed that probe-mic testing would be as common among audiologists as cell phone use is today among the general population.

3. So what is the average use rate today?

Hard to say, but probably no better than it was when that 1991 survey was conducted. Erin Picou and I conducted a survey a couple of years ago, and I doubt that things have changed much since then (Mueller & Picou, 2010). When we combined audiologists and hearing instrument specialists, we found that routine use (>50% of the time) of probe-mic testing for hearing aid verification was around 40%, essentially identical to what I found in a 2005 survey (Mueller, 2005). And the true rate is probably not as high as the survey showed. I’ve been doing these surveys for many years and have found that respondents often report doing test procedures more frequently than they really do, so we inserted a “lie detector” question to account for this. Based on the findings from this question, I’d estimate the use rate to be no higher than 30%. In preparing for your questions, I’ve also conducted some informal surveys on this topic in just the past few weeks. One group that has a pretty good idea of what really goes on when hearing aids are fitted is the manufacturers’ sales reps and trainers, who go office to office each week. Their best guess was 20-30%. I also talked to individuals involved in the sale of probe-mic equipment, and they weighed in at around 30-35%. The use rate of probe-mic verification in the VA is pretty high, and the VA of course fits a large percentage of hearing aids, so if you count them, the overall rate would be somewhat higher.

4. I would guess that the increased number of retail outlets dispensing hearing aids is one of the factors driving down the average use of probe-mic verification?

You could guess that, but I’m pretty sure you’d be wrong. In fact, my bet is that it’s just the opposite—this is where you’ll find the highest use rate. Most of these dispensing outlets emphasize best practices, and of course probe-mic verification has been part of every best practice guideline for fitting hearing aids in the past 20 or more years. At dispensing outlets such as Costco, I doubt that you would keep your job if you didn’t follow their fitting protocol, which indeed calls for probe-mic verification.

I didn’t know that. But in many of these cases, the company buys the equipment for the audiologist. Some audiologists might like to do the testing but just don’t have the equipment.

Well, that certainly is a common reason given for not conducting probe-mic verification, but it really doesn’t hold water. First, in the survey that I mentioned earlier (Mueller & Picou, 2010), we looked separately at the use rate for the audiologists who had the equipment. Routine use of probe-mic went up only 10 percentage points (from 45% to 55%). That is, 45% of those who had the equipment still didn’t use it routinely. Moreover, I’ve talked to reps from hearing equipment sales companies who tell me they could set someone up with a used probe-mic system on a lease-to-buy deal for as little as $100/month. That’s a pretty minor investment for fitting hearing aids that retail for several thousand dollars.
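Because these survey numbers mix two kinds of change, a small arithmetic sketch may help. Going from 45% to 55% routine use is a 10 percentage point change, which is roughly a 22% relative increase. The lease figure can likewise be spread across fittings; the clinic volume used below (8 fittings per month) is a purely hypothetical assumption, not a survey result, and the function names are illustrative only.

```python
def percentage_point_change(before_pct: float, after_pct: float) -> float:
    """Absolute difference between two rates, in percentage points."""
    return after_pct - before_pct


def relative_change_pct(before_pct: float, after_pct: float) -> float:
    """Change relative to the starting rate, in percent."""
    if before_pct == 0:
        raise ValueError("before_pct must be non-zero")
    return (after_pct - before_pct) / before_pct * 100.0


def lease_cost_per_fitting(monthly_lease: float, fittings_per_month: int) -> float:
    """Spread a monthly equipment lease over the fittings done that month."""
    if fittings_per_month <= 0:
        raise ValueError("fittings_per_month must be positive")
    return monthly_lease / fittings_per_month


if __name__ == "__main__":
    # Survey rates as quoted in the text (45% vs. 55% routine use).
    print(percentage_point_change(45, 55))        # 10
    print(round(relative_change_pct(45, 55), 1))  # 22.2
    # HYPOTHETICAL clinic volume of 8 fittings/month (assumption, not survey data).
    print(lease_cost_per_fitting(100, 8))         # 12.5
```

Even at this modest hypothetical volume, the lease works out to a small fraction of the retail price of a single hearing aid.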

6. So why do you think that audiologists are reluctant to use probe-mic measures when fitting hearing aids?

Good question. I did a pretty detailed discussion of most of the possible reasons in an article a few years back, and I don’t think anything has changed significantly, so I won’t go through them all again (see review by Mueller, 2005). That article included the opinions of several probe-mic luminaries but we didn’t really reach a conclusion. One of the opinion leaders cited in that article stated:

I do not believe that probe-mic and other methods of verification will be part of the routine practice of dispensing hearing aids until there is some form of legislation or mandates from third-party payers that reimbursement for hearing aids will not occur unless there is documentation that some method of verification of the fitting has been performed.

On a similar note, another expert said:

If the fitting process were to be unbundled, with separate charges made for tests as well as for the basic hearing instrument, then the addition of a real-ear check would have a cash incentive.

It would be nice to think that audiologists would do this testing because it is the right thing to do, not because it was mandated or billable. As you may recall, Catherine Palmer (2009) pointed out that not conducting probe-mic verification could be considered a breach of the Code of Ethics of many professional organizations. For example, Principle 4 of the American Academy of Audiology's Code (AAA, 2011) states: “Members shall provide only services and products that are in the best interest of those served.” We all want to be ethical, so I have to believe that people think that the fitting will be okay based on using alternative verification procedures. What that alternative approach would be, I can only guess. Comparative speech testing? The patient saying, “sounds good?” The patient not returning the hearing aids for credit? None of these seem valid to me.

7. I have to fess up. I am one of those people who own the equipment but rarely use it. I tried doing probe for a while, but I didn’t really find it made any difference.

It's interesting that you say “doing probe,” and I hear that term a lot. This generalization is one of the problems. Probe-mic measures are not a way to fit hearing aids. They are a way to verify your way of fitting hearing aids. That means that you need some predetermined gold standard to verify against.

Let me provide an example to illustrate my point. If you wanted to build a birdhouse for your backyard, you would probably start by downloading a blueprint. You’d then run off to Home Depot and buy all the necessary materials, get all the wood cut to the correct specifications, and assemble it. Along the way you’d frequently use a tape measure. Later that evening, when your friends admired the design and construction of your newly built birdhouse, you wouldn’t credit the tape measure; you’d credit the blueprint. And that’s pretty much the way fitting hearing aids goes: you start with a blueprint and then use probe-mic testing to verify that you got things right.

8. I get it. But I’ve heard that there really isn’t a blueprint that works very well, which is why I skip probe-mic measures and simply make adjustments based on my clinical experience.

First, I don’t understand why you don’t want to know the amplified ear canal SPL. If nothing else, probe-mic measures will tell you if you have made speech signals audible—this is Hearing Aid Fitting 101. Your clinical experience can't tell you that, and this relates to the ethical practice comments I made earlier. Sending your patient out the door with speech inaudible, when it easily could have been made audible with a few mouse clicks, is not "providing services that are in the best interest of those served.” Secondly, you have to start somewhere. You’re usually working with hearing aids with a gazillion channels, several compression kneepoints and ratios, and a 40-60 dB range for gain. Why not start with a validated prescriptive approach, which will provide you with input-level-specific fitting targets for speech signals, which then can be verified?

9. I assume you’re referring to the NAL-NL2. Doesn’t this call for more gain than what most patients want to use?

You have two validated approaches to choose from, the NAL-NL2 and the DSLv5; both should get you started pretty close to where you want to end up. Regarding your “too much gain” comment, I’m curious what you are basing that on. In the development stages, the researchers behind both of these prescriptive methods considered preferred loudness levels in determining the amount of gain prescribed. Granted, these are average values, so the prescribed gain will indeed be too loud for some patients, but it should also be too soft for an equal number of patients.

Rather than speculate, we can look at research. In a recent report from Keidser and Alamudi (2013), 26 hearing-impaired individuals (experienced hearing aid users) were fitted with new trainable hearing aids, which were initially programmed to NAL-NL2. After three weeks of training, the researchers examined the trained settings for both low and high frequencies, for six different listening situations (the training was situation specific). The participants did tend to train down from the NAL-NL2, but only by a minimal amount. For example, for the high frequencies in the speech-in-quiet condition, the average gain reduction was 1.5 dB (95% range = 0 to -4 dB), and for the speech-in-noise condition, the average gain reduction was only 2 dB (95% range = 0.5 to -4.5 dB). The trained gain for the low frequencies in these listening conditions was even closer to the original NAL-NL2 settings.

Perhaps even more compelling data is from another study using trainable hearing aids conducted by Catherine Palmer (Palmer, 2012). The participants in this study were 36 new users of hearing aids. One group of 18 was fitted to the NAL-NL1, used this gain prescription for a month, and then trained the hearing aids for the following month. The second group of 18 was also fitted to the NAL-NL1, but started training immediately, and trained for two months. Importantly, these individuals were using hearing aids which had the potential to be trained up or down by 16 dB—providing ample opportunity for them to zero in on their preferred loudness levels. In general, after two months of hearing aid use, both groups ended up very close to the NAL-NL1. Palmer reports that the Speech Intelligibility Index (SII) for soft speech was reduced a modest 2% for the first group, and 4% for the group that started training at the initial fitting.
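Because the SII is essentially an importance-weighted audibility measure, a simplified sketch may show why a couple of dB of trained-down gain moves it only modestly. This assumes the core band-audibility rule of the ANSI S3.5-1997 SII (speech peaks taken as 15 dB above the average band level, over a 30 dB dynamic range) and deliberately omits masking spread and level-distortion corrections. The four bands, the equal importance weights, and all levels are hypothetical; they are not ANSI band-importance values and not data from Palmer (2012).

```python
from typing import Sequence


def band_audibility(speech_db: float, threshold_db: float) -> float:
    """Fraction of a 30 dB speech range (peaks assumed 15 dB above the
    average band level) that lies above threshold, clamped to [0, 1]."""
    return min(max((speech_db + 15.0 - threshold_db) / 30.0, 0.0), 1.0)


def simplified_sii(speech_db: Sequence[float],
                   thresholds_db: Sequence[float],
                   importance: Sequence[float]) -> float:
    """Importance-weighted sum of band audibilities (simplified: no masking
    spread or level distortion). Speech and thresholds share one SPL reference."""
    if not (len(speech_db) == len(thresholds_db) == len(importance)):
        raise ValueError("all inputs need one value per band")
    if abs(sum(importance) - 1.0) > 1e-9:
        raise ValueError("importance weights must sum to 1")
    return sum(w * band_audibility(s, t)
               for s, t, w in zip(speech_db, thresholds_db, importance))


if __name__ == "__main__":
    # HYPOTHETICAL bands (think 500, 1000, 2000, 4000 Hz) with equal importance.
    thresholds = [45, 50, 60, 70]
    importance = [0.25, 0.25, 0.25, 0.25]
    aided = [60, 58, 55, 50]
    trained_down = [level - 2 for level in aided]  # 2 dB less gain in every band
    print(round(simplified_sii(aided, thresholds, importance), 3))         # 0.525
    print(round(simplified_sii(trained_down, thresholds, importance), 3))  # 0.475
```

How much a uniform gain change lowers the index depends on how many bands sit in the partially audible range; bands that are fully audible or fully inaudible do not change at all.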

If the NAL prescribed gain were indeed excessive, as you suggest, we would expect all or most of the individuals in these two studies to train the gain to a substantially lower level. They didn’t; these data suggest that the NAL prescription is a reasonable starting place.

10. I’ll think about that. What about the DSLv5 prescriptive algorithm?

I’m not familiar with a study where this fitting algorithm has been used with trainable hearing aids, but the work of Polonenko et al. (2010) addresses the issue of preferred loudness. Their research showed that the DSLv5 adult algorithm approximated the preferred listening levels of their participants within 2.6 dB on average. That’s pretty good. In general, for adult patients, I wouldn’t expect the results to be very different for the NAL-NL2 and DSLv5. For typical audiograms, for average level inputs, the two prescriptions are quite similar, especially for the 500 to 4000 Hz range, where we usually focus our hearing aid fitting attention. In 2012, Earl Johnson wrote a 20Q article that provides a detailed comparison of the two procedures. Take a look at Earl’s Figure 2 and you’ll see the similarities I mention.

11. That’s interesting, but to be honest I simply use the manufacturer’s recommended fitting—my rep told me that works the best with their products. Seem reasonable?

The question is, is it best for the patient? Maybe not. Most manufacturers do have their own fitting method, commonly referred to as the “proprietary fit,” “default fit,” or “first fit.” There are several reasons for this, some involving the specific signal processing of different instruments, and others relating to a fitting strategy that differs from the NAL or the DSL. Regarding the latter, many audiologists fitting hearing aids prefer a “first fit” that is immediately acceptable to the patient, or at least more acceptable than what typically occurs with the NAL or DSL. Initial acceptance by the patient, therefore, plays a pretty big role when the manufacturer’s proprietary fitting is developed. In general, especially for new hearing aid users, less gain than normally prescribed tends to be more acceptable. To the patient, less gain sounds more like no hearing aid at all, which is what they are used to. I’ve never seen a manufacturer’s proprietary fitting that calls for more gain than the NAL or DSL. So on the day of the fitting, if your goal is to have the patient say something like “Gee, this sounds really natural and normal to me,” then a proprietary fitting might be the way to go (although the hearing aids may not be providing optimal benefit). That explains why you’re not using probe-mic measures: you really don’t have anything to verify.

In addition to basic audibility of speech, we also know that one of the primary reasons people obtain hearing aids is to understand speech in background noise. The leading reasons that people who own hearing aids never use them are minimal benefit and problems understanding speech in background noise (Kochkin, 2000). Providing the patient optimum speech understanding in background noise, therefore, is also a factor to consider when the hearing aids are programmed on the day of the fitting.

12. Are you suggesting that the manufacturer’s proprietary fitting might not optimize speech understanding in background noise?

A recent study has looked at this. Ron Leavitt and Carol Flexer (2012) fit hearing-impaired individuals (typical downward sloping losses) with seven different pairs of hearing aids and conducted aided QuickSIN testing. The QuickSIN sentences were presented at 57 dB SPL (slightly below average speech). Six of the seven pairs of hearing aids were the premier models from the six leading hearing aid companies, with special features such as directional microphone technology and noise reduction activated. The seventh pair was a set of 10-year-old analog, single-channel, omnidirectional hearing aids with no noise reduction features. Each of the six premier hearing aids was evaluated first while programmed to the manufacturer’s first fit, and then while programmed to the NAL-NL1. The old analog hearing aids were tested only while programmed to the NAL-NL1.

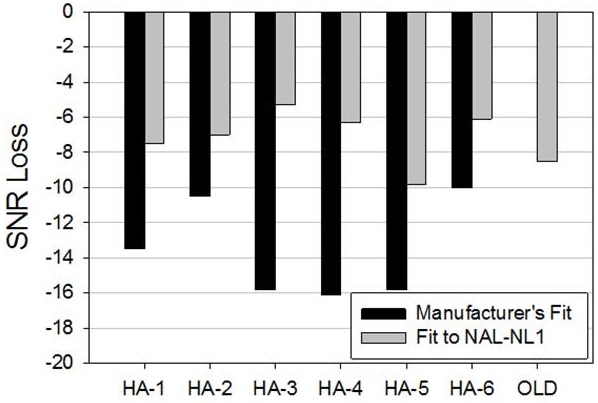

The results of this study are plotted in Figure 1, which shows the mean QuickSIN scores of the participants for all the aided conditions. There is a lot of good information here, so let’s walk through it. As a reminder, the QuickSIN is scored in SNR Loss: the amount by which the SNR must be increased, relative to normal-hearing listeners, for the patient (or group, in this case) to obtain 50% correct for the sentences. The way I have the scores plotted in Figure 1, a -10 dB SNR would indicate that mean performance is 10 dB worse than expected for normal-hearing individuals. For now, we’re concerned with the relative, not so much the absolute, scores.

Figure 1. Mean QuickSIN scores (SNR-Loss) for hearing-impaired individuals fitted with seven different pairs of hearing aids. One pair (labeled "old") consisted of 10-year-old analog single-channel products; the other six pairs were the premier models (circa 2012) of the six leading hearing aid manufacturers. The old hearing aids (far right bar) were only programmed to NAL-NL1. The other hearing aids were tested while programmed to the manufacturer’s proprietary fit and also while programmed to the NAL-NL1 (adapted from Leavitt & Flexer, 2012).
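For readers less familiar with QuickSIN scoring, here is a minimal sketch of list scoring as we understand it from the test's manual: each list has six sentences with five key words each, presented at SNRs from 25 dB down to 0 dB in 5 dB steps, and SNR Loss is 25.5 minus the total key words correct (an SNR-50 of 27.5 minus the total, less roughly 2 dB for normal-hearing listeners). The function names and the sample responses are hypothetical. Because Figure 1 plots SNR Loss as a negative number, the sketch also shows that sign flip.

```python
from statistics import mean
from typing import Sequence

QUICKSIN_SNRS_DB = (25, 20, 15, 10, 5, 0)  # one sentence per SNR
KEY_WORDS_PER_SENTENCE = 5


def quicksin_snr_loss(words_correct: Sequence[int]) -> float:
    """SNR Loss (dB) for one list: 25.5 minus total key words correct."""
    if len(words_correct) != len(QUICKSIN_SNRS_DB):
        raise ValueError("expected one score per sentence (6 sentences)")
    if any(not 0 <= w <= KEY_WORDS_PER_SENTENCE for w in words_correct):
        raise ValueError("each sentence score must be 0-5 key words")
    return 25.5 - sum(words_correct)


def mean_snr_loss(lists: Sequence[Sequence[int]]) -> float:
    """Average SNR Loss across several lists (averaging lists is common practice)."""
    return mean(quicksin_snr_loss(scores) for scores in lists)


if __name__ == "__main__":
    # HYPOTHETICAL key-word scores for two lists.
    lists = [[5, 5, 5, 4, 2, 0], [5, 5, 4, 3, 1, 0]]
    loss = mean_snr_loss(lists)
    print(loss)   # 6.0  (dB worse than normal hearing)
    print(-loss)  # -6.0 (sign convention used in Figure 1)
```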

Looking first at the far right bar, you see that the mean SNR-Loss for the old analog instruments was around 8 dB. Compare this to the mean performance for the manufacturers’ recommended fitting for the six different new premier hearing aids (dark bars). The results for HA-6 are fairly similar to those of the old analog hearing aids. However, note that when the participants used HA-3, HA-4 or HA-5, their scores were about 8 dB worse than with the old instruments. Let’s put this into practical terms: You have a patient who has been using 10-year-old analog hearing aids, with no noise reduction algorithms, programmed to the NAL-NL1. He now purchases a pair of new high-end products with all the special features, and you program these new hearing aids to the manufacturer’s recommended first fit. If that fellow goes back to his favorite pub, where in the past he was just able to follow the conversation, he now will somehow have to turn the pub noise down by 8 dB (good luck!), or convince his buddies to speak 8 dB louder, just to perform as well as he did with his old hearing aids. My guess is he’ll either stop going to the pub or go back to using his old hearing aids, and you'll have a return for credit on your hands.

Falling in the “To No One’s Surprise” category are the findings when the premier hearing aids were programmed to the NAL-NL1 rather than the manufacturer's recommended first fit. Now most of the new products perform 2 dB or more better than the old analog instruments. Audibility is a wonderful thing. With HA-3, for example, the mean QuickSIN score improved by over 10 dB simply by changing the programming from the manufacturer's fit to NAL. It’s a good example of why the person fitting the hearing instruments is usually more critical than the technology.

13. You’ve got my attention. But, that study was from a couple years ago. Do you think we’d see those same results today?

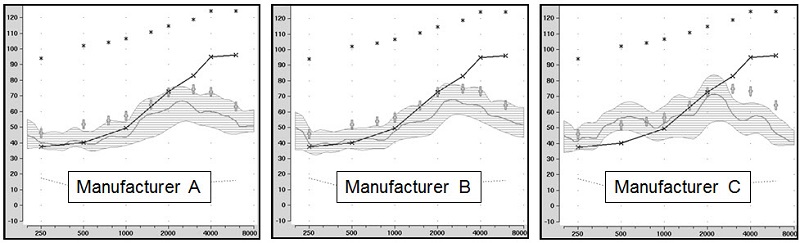

Good point. Manufacturers do sometimes change their first fits when new products are introduced, so it could be that some changes have occurred since those data were collected. In October 2013, I worked on a project with Elizabeth Stangl at the University of Iowa Hearing Aid Lab, and we looked at the default fittings for the premier hearing aid from each of the Big 6 manufacturers. These findings should be representative of the hearing aids you are fitting today. We simply entered a typical downward sloping hearing loss (e.g., 30 dB in the lows to 75 dB in the highs), used the manufacturer’s recommended default settings, and measured the real-ear output for a real-speech input (55 dB SPL male speech signal from the Verifit). As a reference, we compared the manufacturer’s fitting to the NAL-NL2 prescriptive targets for this speech signal.

Figure 2. Shown are the speechmapping findings for the premier hearing aid of three major manufacturers, programmed to the manufacturer’s recommended proprietary algorithm. The test stimulus was the male talker of the Verifit system, presented at 55 dB SPL. Also shown are the sample patient’s audiogram (upward sloping solid line) and the NAL-NL2 targets for this speech input (frequency specific crosses). Chart courtesy of Elizabeth Stangl, AuD.

Figure 2 shows the findings for three of the six manufacturers. The findings for the other three were similar, although their deviations from NAL-NL2 targets were not quite as great. For those of us who think closer to NAL is better, I’m showing you the three worst examples. Notice that for both Manufacturer A and B, the deviation from NAL-NL2 is large (10 dB or more), and importantly, even the peaks of the speech signal are audible only through 2500 Hz or so. Manufacturer C actually maintains fairly good audibility up to 2500 Hz, but then drops to 20 dB below NAL-NL2 for the higher frequencies. Even if we ignore the NAL-NL2 fitting targets and simply look at this patient’s thresholds, I would think that audiologists would want to send their patients out the door with more audibility for speech than shown here. And if you’re not measuring the hearing aid output in the real ear, how would you know?
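To make the audibility point concrete, here is a sketch of the two checks you might read off a speechmap: how far the measured average speech output falls from target at each frequency, and whether the measured speech peaks reach threshold. All values are hypothetical, chosen only to resemble the pattern described for Manufacturers A and B; levels are assumed to be in ear canal dB SPL, as on a typical speechmapping display, and the 10 dB flag is an arbitrary illustration rather than a clinical criterion.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class BandResult:
    freq_hz: int
    deviation_db: float  # measured average speech minus target (negative = below target)
    peaks_audible: bool  # measured speech peaks at or above threshold


def speechmap_check(freqs: Sequence[int], ltass_db: Sequence[float],
                    peaks_db: Sequence[float], targets_db: Sequence[float],
                    thresholds_db: Sequence[float]) -> List[BandResult]:
    """Per-frequency deviation from target and peak audibility."""
    columns = (freqs, ltass_db, peaks_db, targets_db, thresholds_db)
    if len({len(c) for c in columns}) != 1:
        raise ValueError("all inputs need one value per frequency")
    return [BandResult(f, avg - tgt, pk >= thr)
            for f, avg, pk, tgt, thr in zip(*columns)]


def highest_audible_freq(results: Sequence[BandResult]) -> Optional[int]:
    """Highest frequency at which the speech peaks reach threshold, if any."""
    audible = [r.freq_hz for r in results if r.peaks_audible]
    return max(audible) if audible else None


if __name__ == "__main__":
    freqs = [500, 1000, 1500, 2000, 2500, 3000, 4000]
    ltass = [62, 60, 57, 54, 50, 45, 40]       # HYPOTHETICAL measured average
    peaks = [74, 72, 69, 66, 62, 57, 52]       # HYPOTHETICAL measured peaks
    targets = [63, 64, 64, 64, 63, 62, 60]     # HYPOTHETICAL NAL-NL2 targets
    thresholds = [45, 50, 55, 60, 62, 68, 75]  # HYPOTHETICAL thresholds in SPL
    results = speechmap_check(freqs, ltass, peaks, targets, thresholds)
    print([r.deviation_db for r in results])  # [-1, -4, -7, -10, -13, -17, -20]
    print(highest_audible_freq(results))       # 2500
    print([r.freq_hz for r in results if r.deviation_db <= -10])  # [2000, 2500, 3000, 4000]
```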

Editor's Note: If you're new to using probe-mic measures and observing the print-out of probe-mic equipment, see the Appendix at the end of this article, which has a sample screen shot labeling the typical items in the display.

14. The manufacturer I use has the option of clicking “NAL-NL2” in the fitting software, so if I use that rather than their proprietary first fit could I skip probe-mic measures?

Fitting someone to a given prescriptive algorithm is based on the SPL you deliver to the eardrum, not on what it says on a software button or the simulated curve that you see on your computer screen. Research suggests it is risky to assume you have a NAL fit in the patient's ear just because you made that selection in the fitting software. In 2007, Aazh and Moore used four different types of hearing aids and programmed them to the manufacturer’s NAL-NL1 using the software selection method. When probe-mic verification was conducted, only 36% of fittings were within a very generous +/-10 dB of NAL targets. And it doesn’t appear that things have gotten any better. Just two years ago, Aazh, Moore and Prasher (2012) conducted a similar study with open fittings. They reported that of the 51 fittings, after programming to the manufacturer’s NAL in the software, only 29% matched NAL-NL1 targets within +/-10 dB.
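The “within +/-10 dB” criterion can be expressed as a simple pass/fail rule. The sketch below assumes a fitting passes only if every measured frequency is within tolerance; that is our reading for illustration, and the exact frequencies and rules used by Aazh and colleagues may differ. The function names and the four fittings are hypothetical.

```python
from typing import Sequence, Tuple


def within_tolerance(measured_db: Sequence[float], target_db: Sequence[float],
                     tol_db: float = 10.0) -> bool:
    """True if every frequency is within +/- tol_db of its target."""
    if len(measured_db) != len(target_db):
        raise ValueError("measured and target need the same frequencies")
    return all(abs(m - t) <= tol_db for m, t in zip(measured_db, target_db))


def pass_rate_pct(fittings: Sequence[Tuple[Sequence[float], Sequence[float]]],
                  tol_db: float = 10.0) -> float:
    """Percentage of (measured, target) pairs that meet the tolerance."""
    if not fittings:
        raise ValueError("no fittings supplied")
    passed = sum(within_tolerance(m, t, tol_db) for m, t in fittings)
    return 100.0 * passed / len(fittings)


if __name__ == "__main__":
    target = [62, 64, 63, 60]  # HYPOTHETICAL targets at four frequencies
    fittings = [
        ([60, 62, 58, 50], target),  # worst miss is -10 dB: passes
        ([55, 55, 50, 40], target),  # misses by 13 and 20 dB: fails
        ([63, 66, 61, 55], target),  # passes
        ([58, 57, 52, 45], target),  # misses by 11 and 15 dB: fails
    ]
    print(pass_rate_pct(fittings))  # 50.0
```

Note how forgiving the rule is: the first fitting passes even though it is 10 dB short in the highest band.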

15. Is this discrepancy present for all manufacturers or just some?

I suspect it varies somewhat among manufacturers, and with some, it varies depending on what product you are fitting and whether you are fitting a BTE or a custom instrument. We know, of course, that because of patient-specific factors such as the real-ear-to-coupler difference (RECD) or microphone location effects (MLEs), we would not expect an exact match to the NAL targets when we do probe-mic verification. The software calculations are for the average ear, so we would expect to see deviations of a few dB above and below target, influenced mostly by the residual volume of the ear canal. But interestingly, the deviation from NAL targets that we see across all manufacturers is always in the direction of under-fitting, and to a greater degree than we would expect from any RECD or MLE factors.
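The role of the RECD and MLE can be seen in the conventional coupler-to-real-ear prediction, in which the predicted real-ear output at each frequency is the input level plus 2-cc coupler gain plus the RECD plus the MLE. The sketch below uses hypothetical values to show that replacing an assumed average RECD with an individual one shifts predicted output by only a few dB, and in either direction depending on residual ear canal volume. That is why RECD differences alone would not be expected to produce a consistent under-fit of 10 dB or more.

```python
from typing import List, Sequence


def predicted_rear(input_spl: Sequence[float], coupler_gain: Sequence[float],
                   recd: Sequence[float], mle: Sequence[float]) -> List[float]:
    """Predicted real-ear aided response (dB SPL) per frequency:
    input + 2-cc coupler gain + RECD + MLE."""
    columns = (input_spl, coupler_gain, recd, mle)
    if len({len(c) for c in columns}) != 1:
        raise ValueError("all inputs need one value per frequency")
    return [i + g + r + m for i, g, r, m in zip(*columns)]


if __name__ == "__main__":
    # HYPOTHETICAL values at 1000, 2000 and 4000 Hz.
    speech_in = [55, 55, 55]
    gain_2cc = [20, 30, 35]
    mle = [2, 4, 3]
    average_recd = [5, 8, 12]  # assumed "average ear" values
    smaller_ear = [7, 10, 15]  # less residual volume -> larger RECD
    larger_ear = [3, 6, 9]     # more residual volume -> smaller RECD
    print(predicted_rear(speech_in, gain_2cc, average_recd, mle))  # [82, 97, 105]
    print(predicted_rear(speech_in, gain_2cc, smaller_ear, mle))   # [84, 99, 108]
    print(predicted_rear(speech_in, gain_2cc, larger_ear, mle))    # [80, 95, 102]
```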

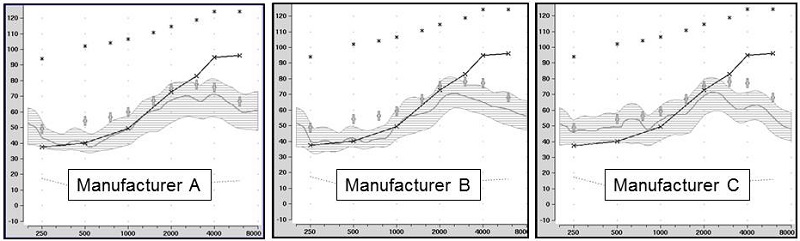

I do have more real-ear measures to show you that will help make this latter point. These again were collected at the Hearing Aid Lab at the University of Iowa a few months ago. As with the proprietary fittings I discussed earlier, we tested the premier model of each manufacturer (mini-BTE RICs with standard domes) using the male speechmapping signal of the Verifit (55 dB SPL). The results for three of the instruments are shown in Figure 3. One of the other manufacturers was reasonably close to the NAL-NL2 targets, but what you see here is pretty common. There is the trend of under-fitting, and note that 10 dB below NAL is typical for all three of these manufacturers. Manufacturer C is actually 15 dB below NAL-NL2 targets for the 3000-4000 Hz region. And by the way, all three of these were a close match to NAL targets on the fitting software screen, which is why you cannot fit using the fitting software screen.

Figure 3. Shown are the speechmapping findings for the premier hearing aid of three major manufacturers, programmed using the manufacturer’s NAL-NL2 option in the fitting software. The test stimulus was the male talker of the Verifit system, presented at 55 dB SPL. Also shown are the sample patient’s audiogram (upward sloping solid line) and the NAL-NL2 targets for this speech input (frequency-specific crosses). Chart courtesy of Elizabeth Stangl, AuD.

16. If I really do this extra work, is there any proof that my patients will do better in the real world with their verified NAL fittings?

A minor correction: probe-mic verification isn’t “extra work.” In almost all settings outside of the government, the patient is, directly or indirectly, paying you to get the fitting right. But this is a good question, and something that hasn’t been researched as much as you would think. One recent study that looked at this issue was done by Abrams, Chisolm, McManus, & McArdle (2012). In a cross-over design, these researchers compared the manufacturer’s first fit to a verified prescriptive NAL-NL1 fitting (n=22). Although the “verified” fitting was still somewhat below the NAL-NL1, it was significantly closer to the prescribed targets than what was obtained with the manufacturers’ recommended fitting (as you would predict from our earlier discussion). The participants used the hearing aids with the two different fittings in the real world, and following each trial, completed the APHAB. After using both fittings, the participants selected their preferred fitting. The APHAB mean group results showed significant benefit with the verified NAL fitting for speech in quiet, in reverberation, and in background noise. Of the 22 participants, 17 selected the verified NAL as their preferred fitting. For several of the fittings, however, the verified NAL and the initial-fit were not significantly different, so it is also important to note that of the 13 subjects whose RMS error was significantly closer to prescribed target for the verified NAL fit than for the initial-fit approach, 11 preferred the verified fit at the conclusion of the study. While maybe not overwhelming, these data certainly do suggest that on average, fittings approaching NAL target will provide more real-world benefit, at least when compared to under-fitting, which is the most common alternative.
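RMS error, the fit-to-target metric mentioned above, is the square root of the mean squared difference between measured output and target across frequencies. A minimal sketch follows; the levels are hypothetical, and which frequencies enter the average is an assumption here, not necessarily what Abrams and colleagues used.

```python
import math
from typing import Sequence


def rms_error_db(measured_db: Sequence[float], target_db: Sequence[float]) -> float:
    """Root-mean-square deviation from target, in dB, across frequencies."""
    if len(measured_db) != len(target_db) or not measured_db:
        raise ValueError("need matching, non-empty measured and target values")
    squared = [(m - t) ** 2 for m, t in zip(measured_db, target_db)]
    return math.sqrt(sum(squared) / len(squared))


if __name__ == "__main__":
    target = [60, 62, 63, 60]     # HYPOTHETICAL NAL-NL1 targets
    first_fit = [57, 54, 51, 45]  # HYPOTHETICAL: 3-15 dB under target
    verified = [59, 60, 59, 54]   # HYPOTHETICAL: 1-6 dB under target
    print(round(rms_error_db(first_fit, target), 1))  # 10.5
    print(round(rms_error_db(verified, target), 1))   # 3.8
```

Because the differences are squared, large misses such as a 15 dB high-frequency shortfall dominate the error.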

17. So far we’ve pretty much just talked about using probe-mic to verify prescriptive fittings. Are there other things I should be measuring with this equipment?

You bet. Where do I start? Special hearing aid processing algorithms, wireless connectivity, FM systems, telecoils, CROS fittings, tinnitus maskers, the occlusion effect, and the list goes on. It’s pretty much only limited by your imagination. We’ve been talking about many of these measures for 20 years or so (e.g., Mueller & Bryant, 1991; Mueller & Hawkins, 1992; Mueller, 1995), and some step-by-step protocols are in a monograph I wrote a few years back (Mueller, 2001a). Of course, as new technologies emerge, we need to develop new methods to verify them in the clinic.

One thing that I’d suggest you do routinely, which isn’t new at all, is real-ear verification of the maximum power output (MPO) using a swept pure tone (e.g., input of 85 dB SPL). This isn’t a replacement for doing aided behavioral loudness measures, but it will give you the frequency-specific information that you need to make precise adjustments. Because today we have multiple channels of AGCo, with several easily adjustable kneepoints, this measure can be used to verify the adjustments that you make to different frequency regions. You can more or less shape the MPO to match your loudness discomfort targets, just as you adjust gain and compression to match your prescriptive targets for different speech input levels. There’s a description of this process, with some case studies, in Mueller (2011).
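The MPO check described above amounts to comparing the measured real-ear MPO (for example, from an 85 dB SPL swept tone) with a frequency-specific ceiling derived from the patient's loudness discomfort levels. The sketch below simply reports where, and by how much, the measured MPO exceeds that ceiling. The values are hypothetical, and converting LDLs into ear canal SPL targets is assumed to have been done already.

```python
from typing import Dict, Sequence


def mpo_excess_db(freqs_hz: Sequence[int], measured_mpo_db: Sequence[float],
                  mpo_targets_db: Sequence[float]) -> Dict[int, float]:
    """Frequencies where measured real-ear MPO exceeds its target, with the excess in dB."""
    if not (len(freqs_hz) == len(measured_mpo_db) == len(mpo_targets_db)):
        raise ValueError("all inputs need one value per frequency")
    return {f: m - t
            for f, m, t in zip(freqs_hz, measured_mpo_db, mpo_targets_db)
            if m > t}


if __name__ == "__main__":
    freqs = [500, 1000, 2000, 3000, 4000]
    measured = [100, 104, 110, 112, 108]  # HYPOTHETICAL real-ear MPO, dB SPL
    targets = [102, 104, 106, 108, 110]   # HYPOTHETICAL LDL-based ceilings, dB SPL
    print(mpo_excess_db(freqs, measured, targets))  # {2000: 4, 3000: 4}
```

In this made-up case you would lower the AGCo settings in the channels covering 2000 and 3000 Hz and then re-measure.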

18. You mentioned special hearing aid features. I assume you’re talking about things like directional technology? And if so, how about a couple tips on conducting this testing?

Directional technology is certainly one of the many features you want to assess on the patient’s ear, and the way I see it, verification of features is all part of a hearing aid fitting. I’ll do a quick review of the verification process for a few of the more common features, and give you some references where you can pick up some finer points.

Directional technology. There are at least three reasons why you might want to assess this technology in the real ear. First, is the directional effect what I would expect from this product? There are still directional products that arrive from the manufacturer not functioning as directional, and on follow-up visits, you’re going to run into cases where the microphone ports have been plugged with dirt or debris, often making the product omnidirectional. Second, what effect does the positioning of the hearing aid on the head have on the directional processing? This can be especially critical with mini-BTEs, where positioning the hearing aid for comfort or cosmetics might work in opposition to good directivity (the on-head microphone port alignment is critical). Sometimes you may choose to fit for comfort over optimal directivity, but you’ll want to know the acoustic consequences of your decision, as it will affect patient counseling. And third, what effect does the tightness of the fitting have on the directional processing? The directivity index (DI) of an open fitting might be only half of what you would obtain from the same product fitted with the earcanal closed. And, with the common use of custom ear tips, you might be doing more open fittings than you think. Most open fittings have little directivity below 1500 Hz, and you’d probably want to know this.

The general protocol for conducting this testing is to measure the real-ear output, as you would with speechmapping, from the standard 0-degree azimuth and then from a 180-degree azimuth (this works best if the patient is in a swivel chair). You simply look at the difference in output between the two measures; the output should, of course, be considerably lower for the 180-degree condition. You can find a detailed step-by-step protocol in Mueller (2001b).
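The arithmetic behind this comparison is a per-frequency subtraction. The Python sketch below assumes each measure is stored as a dictionary of frequency (Hz) to real-ear output (dB SPL). The 3 dB cutoff used to flag a possibly omnidirectional product and the 1000-4000 Hz averaging band are arbitrary assumptions for illustration, not published criteria.

```python
def front_to_back_ratio(output_0deg, output_180deg):
    """Per-frequency FBR (dB): real-ear output with the signal at 0 degrees
    minus the output with the signal at 180 degrees."""
    return {f: output_0deg[f] - output_180deg[f]
            for f in output_0deg if f in output_180deg}


def looks_omnidirectional(fbr, min_db=3.0, band_hz=(1000, 4000)):
    """Rough, hypothetical flag: True if the mean FBR within band_hz is
    below min_db, which might point to plugged microphone ports or a
    product that is not actually in directional mode."""
    low, high = band_hz
    band = [v for f, v in fbr.items() if low <= f <= high]
    if not band:
        return False  # nothing measured in the band; no basis for a flag
    return sum(band) / len(band) < min_db


if __name__ == "__main__":
    # Hypothetical measurements.
    front = {500: 70, 1000: 75, 2000: 78, 4000: 72}
    back = {500: 68, 1000: 66, 2000: 65, 4000: 62}
    fbr = front_to_back_ratio(front, back)
    print(fbr)                         # {500: 2, 1000: 9, 2000: 13, 4000: 10}
    print(looks_omnidirectional(fbr))  # False
```

Keeping the per-frequency values, rather than a single number, makes it easy to see the weak low-frequency directivity typical of open fittings.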

A few things to remember when you’re conducting this testing:

- Put the hearing aid on “fixed directional.” If you leave it in the automatic mode it’s possible that the test signal won’t trigger the directional processing. The intensity threshold to trigger the switch to directional may be higher than the input you are using, and some systems will not switch to directional if the input is classified as speech.

- This measure is to determine if a product is working as it should, not to compare one product to another. The specific polar pattern of a given product may make it appear superior to another directional product during testing conditions, when in fact it could have worse directivity in a diffuse noise field, the primary condition of interest. See Mueller (2001b) for a complete description of this.

- Because we do not present the 0-degree and 180-degree signals simultaneously, AGCi will cause the difference between the front and back signals to be smaller than it would be in a real-world condition. See Mueller (2001c) for an explanation of why this happens.

One final thing to note. When conducting probe-mic measures, we’ve typically used the front-to-back ratio (FBR) to look at directionality. Recent research, however, suggests that it might be better to look at the front-to-side ratio (FSR) (Wu and Bentler, 2012). In their research, they found that the FSR predicted the directivity index (DI) and performance on the HINT more accurately than did the FBR. They suggest that clinicians use the FSR to monitor hearing aid directivity. I personally haven’t tried this out, but it’s something you might want to consider. Wu and Bentler provide some guidelines in their article.
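If you want to try the FSR, the calculation is the same kind of subtraction with a side azimuth in place of 180 degrees. This Python sketch assumes a 90-degree measure, optionally averaged with a 270-degree measure; that averaging choice is an assumption made for this illustration only, so check Wu and Bentler (2012) for the exact definition and procedure they recommend.

```python
def front_to_side_ratio(front, side_90, side_270=None):
    """Per-frequency FSR (dB): front (0 degree) output minus side output.

    Inputs map frequency (Hz) to real-ear output (dB SPL). If a 270-degree
    measure is given, the two side outputs are averaged in dB, which is an
    assumption for this illustration only.
    """
    fsr = {}
    for f, front_db in front.items():
        if f not in side_90:
            continue
        side_db = side_90[f]
        if side_270 is not None:
            if f not in side_270:
                continue
            side_db = (side_db + side_270[f]) / 2
        fsr[f] = front_db - side_db
    return fsr


if __name__ == "__main__":
    # Hypothetical measurements.
    front = {1000: 75, 2000: 78}
    print(front_to_side_ratio(front, {1000: 70, 2000: 71}))  # {1000: 5, 2000: 7}
    print(front_to_side_ratio(front, {1000: 70, 2000: 71},
                              {1000: 72, 2000: 73}))         # {1000: 4.0, 2000: 6.0}
```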

Digital noise reduction. Some of the same reasons I mentioned earlier regarding the probe-mic assessment of directivity also apply to digital noise reduction (DNR). The magnitude of the noise reduction varies considerably among manufacturers. A medium setting for one manufacturer might mean 8 dB, whereas for another, it’s only 3 dB. The onset and offset of the DNR algorithms also vary considerably among manufacturers. This too is easy to observe when you conduct probe-mic measures and can be beneficial in patient counseling. As with directional, open fittings have a substantial impact on the degree of DNR for the lower frequencies—in a truly open fitting, there will be no functional DNR in the lower frequencies regardless of the strength of the setting. This too is important for patient counseling, as it’s the low-frequency DNR that is the most perceptually noticeable.

With directional, we talked about using probe-mic measures for quality control purposes. That probably isn’t necessary for DNR. That is, if you know that product ABC from manufacturer XYZ has 8 dB of DNR at the max setting, you can be pretty confident that all the ABC products you fit will have this same amount of DNR. So your main interest in conducting the testing at time of the fitting is to examine the effects the acoustic coupling has on the DNR. I might add, most patients are quite impressed when they see the output of the noise signal automatically dropping in intensity on the computer screen. It very quickly answers the question: “Are you sure the noise reduction of my hearing aids is working?”

When conducting this testing, you simply would have the hearing aid adjusted to “user settings,” conduct a measure with DNR off, and then a second measure with DNR on. Of course, it’s critical that the input signal is something that the hearing aid classifies as noise, so you wouldn’t use the same signal you had just used for speechmapping. Most probe-mic equipment has one or more noise signals that can be used for this purpose.
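The DNR-off versus DNR-on comparison reduces to a per-frequency difference, and averaging that difference over a low-frequency band shows how much of the reduction survives an open fitting. This Python sketch assumes both measures are dictionaries of frequency (Hz) to real-ear output (dB SPL) for the same noise signal; the function names and the example values (a hypothetical open fitting) are illustrative only.

```python
def dnr_reduction(output_dnr_off, output_dnr_on):
    """Per-frequency noise reduction (dB): real-ear output for the noise
    signal with DNR off minus the output with DNR on."""
    return {f: output_dnr_off[f] - output_dnr_on[f]
            for f in output_dnr_off if f in output_dnr_on}


def mean_in_band(values_by_freq, low_hz, high_hz):
    """Mean of the values whose frequency lies in [low_hz, high_hz],
    or None if no frequency falls in that band."""
    band = [v for f, v in values_by_freq.items() if low_hz <= f <= high_hz]
    return sum(band) / len(band) if band else None


if __name__ == "__main__":
    # Hypothetical open fitting: little measurable DNR in the low frequencies.
    off = {250: 70, 500: 72, 1000: 74, 2000: 70, 4000: 65}
    on = {250: 70, 500: 71, 1000: 68, 2000: 63, 4000: 59}
    reduction = dnr_reduction(off, on)
    print(reduction)                          # {250: 0, 500: 1, 1000: 6, 2000: 7, 4000: 6}
    print(mean_in_band(reduction, 250, 500))  # 0.5
```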

Automatic feedback reduction. Today, even entry-level hearing aids have sophisticated phase-cancellation feedback reduction circuits. But the effectiveness of these systems can vary by 10 dB or more among manufacturers, or among different models from the same manufacturer. How good is the feedback system you’re fitting to your patients? With an investment of two minutes of testing, your probe-mic system will tell you.

The process for determining the effectiveness of the feedback system is pretty straightforward. After you have conducted speechmapping and have the hearing aid adjusted appropriately, presumably to validated prescriptive targets, turn off the feedback reduction system and increase overall gain gradually until feedback occurs. The patient can help you determine when this happens. Run a typical 65 dB SPL speechmapping curve for this setting. Now turn the feedback reduction circuit on, and continue to gradually increase gain until feedback again occurs. Run another speechmapping curve for this setting. The difference between these two curves is what is commonly referred to as “added gain before feedback,” or AGBF. I suspect you will find that it is only 2-3 dB for some instruments and 12-15 dB for others. There are some patient-specific interactions, but in general, if it’s a good feedback reduction system, you will see good results for almost everyone. One potential limitation of this approach is that if you have a good system and are fitting a mild-gain instrument, you may max out gain before you obtain feedback with the circuit on. In this case, you won’t have a true measure of the AGBF, but you can still be reassured that your patient won’t have feedback issues.
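The AGBF calculation is the difference between the two speechmapping curves. The Python sketch below assumes each curve is a dictionary of frequency (Hz) to output (dB SPL), and it carries a flag for the maxed-out-gain case so the result can be reported as a lower bound. Summarizing with a mean across frequencies is an assumption for illustration; you might prefer to look at the region where feedback actually occurred.

```python
def added_gain_before_feedback(curve_off, curve_on, gain_maxed_out=False):
    """AGBF from two 65 dB SPL speechmapping curves.

    curve_off: output (dB SPL by frequency) at the highest gain reached
               before feedback with the feedback reduction OFF.
    curve_on:  the same, with feedback reduction ON.
    If gain ran out before feedback occurred with the circuit on, pass
    gain_maxed_out=True: the result is then only a lower bound on AGBF.
    """
    per_freq = {f: curve_on[f] - curve_off[f] for f in curve_off if f in curve_on}
    if not per_freq:
        raise ValueError("the two curves share no test frequencies")
    mean_db = sum(per_freq.values()) / len(per_freq)
    return {"per_frequency": per_freq, "mean_db": mean_db,
            "lower_bound_only": gain_maxed_out}


if __name__ == "__main__":
    # Hypothetical curves.
    off = {1000: 85, 2000: 88, 3000: 86, 4000: 80}
    on = {1000: 95, 2000: 100, 3000: 98, 4000: 91}
    print(added_gain_before_feedback(off, on)["mean_db"])  # 11.25
```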

Frequency lowering. I won’t go into a lot of detail here regarding the probe-mic assessment of this feature, as we have discussed this pretty thoroughly in two other 20Q articles (Scollie, 2013; Mueller, Alexander, & Scollie, 2013). You’ll find some sample screenshots in the Scollie (2013) article.

The general notion regarding this technology is to take high-frequency speech signals presumed to be inaudible to the patient and lower them into a region where there is better hearing and a greater chance of audibility. A 6000 Hz signal, for example, might be moved to 3000 Hz, either through compression or linear transformation. The question at hand for the audiologist doing the fitting is: How do I know if I made the signal audible after it was lowered? Conventional speechmapping or a real-ear MPO sweep will tell you if the signals for the higher regions have been lowered (you’ll see a drop in the output for this region), but this testing will not tell you the status of the signals in the lower region where they were placed, as they have now blended in with the original speech signals from that same region. Some probe-mic equipment has special test signals that allow us to specifically examine the lowered signals and compare the intensity of these signals to the patient's thresholds. You could also use recorded speech signals or live-voice high-frequency speech sounds to obtain a general idea of audibility. Again, see the 20Q articles I mentioned earlier for details on this, as well as Glista and Scollie (2009).
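To make the audibility question concrete, here is a hedged Python sketch with two parts: where a high-frequency input lands after lowering, and whether the measured level of the lowered signal exceeds threshold there. The log-domain compression rule is one common way of describing nonlinear frequency compression and is assumed here; it is not taken from any specific manufacturer. The function names, the 2000 Hz cutoff, and the threshold values (in ear-canal dB SPL) are hypothetical.

```python
import math


def lowered_frequency(f_in_hz, cutoff_hz, ratio):
    """Destination frequency under an assumed log-domain compression rule:
    inputs at or below cutoff_hz are unchanged; above it, the distance from
    the cutoff (in log frequency) is divided by ratio."""
    if f_in_hz <= cutoff_hz:
        return f_in_hz
    return cutoff_hz * (f_in_hz / cutoff_hz) ** (1.0 / ratio)


def lowered_signal_audibility(level_db_spl, dest_hz, thresholds_db_spl):
    """Sensation level (dB) of a lowered signal: its measured ear-canal
    level minus the patient's threshold (also in ear-canal dB SPL) at the
    audiometric frequency nearest dest_hz. Returns (audible, sensation_level)."""
    nearest_hz = min(thresholds_db_spl, key=lambda f: abs(f - dest_hz))
    sensation_level = level_db_spl - thresholds_db_spl[nearest_hz]
    return sensation_level > 0, sensation_level


if __name__ == "__main__":
    # Ratio chosen so that 6000 Hz lands at 3000 Hz with a 2000 Hz cutoff.
    ratio = math.log(3) / math.log(1.5)
    dest = lowered_frequency(6000, 2000, ratio)
    print(round(dest))  # 3000
    thresholds = {2000: 80, 3000: 95, 4000: 100}  # hypothetical
    print(lowered_signal_audibility(90, dest, thresholds))  # (False, -5)
```

In this made-up example the signal was moved but is still 5 dB below threshold at its new location, which is exactly the situation only a real-ear measure will reveal.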

19. Thanks, a lot of information. Your final comments on frequency lowering intrigue me. I fit frequency compression quite a bit, and it never occurred to me to look at what was going on in the ear canal with my probe-mic system. Think I’m still okay?

Let’s see, you told me earlier that you’ve been using the manufacturer’s proprietary algorithms, so I’d say no, you’re probably not okay. Your patients probably don’t even know that the feature has been activated. Of the four features I mentioned, frequency lowering is the one where probe-mic verification is most essential.

Go back and look at the proprietary fits that I showed you in Figure 2. Note that even the peaks of speech are not audible at 3000 Hz, and this is a patient with only a 75 dB loss at this frequency. The new lowered signal is not going to receive any more gain than what was originally programmed for this region. So basically, if you were using one of these proprietary algorithms and implementing frequency compression, you’d be taking speech signals that were not audible in the 5000-6000 Hz range and making them inaudible in the 2500-3000 Hz range. To the patient, inaudible sounds like inaudible.

Of course, we’re still looking for the evidence that shows that frequency lowering is beneficial, but we’ll never know if this is a useful feature unless the technology is fitted in the manner that it was intended.

20. Understood. So, you’ve pointed out that not much has changed in 30 years. Any final thoughts on how we can encourage more audiologists to make probe-mic verification part of their routine fitting protocol?

That’s a tough one to answer. Many of us have been promoting this testing from the outset. We’ve conducted workshops and written articles, monographs and books. We even have a probe-mic song! But still, this verification process has not been widely utilized. I think each audiologist needs to look at the evidence and make a decision regarding what is the right way to fit hearing aids. When they do that, I think they will see that probe-mic verification is a vital key to the process. There really is no suitable alternative.

Editor’s Note: If you’re interested in probe-mic measures, we have two courses on this topic here at AudiologyOnline recorded by this month’s 20Q author, Gus Mueller. If you’re just getting started, this course reviews the basic probe-mic procedures and the clinical applications:

Probe-Mic Measures: Yes, They are Still the Best Way to Verify Hearing Aid Performance!

This second course reviews speechmapping applications, with fitting tips, and is geared more for the individual already conducting probe-mic measures:

Speech Mapping - Clinical Tips

References

Aazh, H. & Moore, B.C. (2007). The value of routine real ear measurement of the gain of digital hearing aids. Journal of the American Academy of Audiology, 18(8), 653-664.

Aazh, H., Moore, B.C., & Prasher, D. (2012). The accuracy of matching target insertion gains with open-fit hearing aids. American Journal of Audiology, 21(2), 175-180.

Abrams, H.B., Chisolm, T.H., McManus, M., & McArdle, R. (2012). Initial-fit approach versus verified prescription: comparing self-perceived hearing aid benefit. Journal of the American Academy of Audiology, 23(10), 768-778.

American Academy of Audiology. (2011, April). Code of ethics. Retrieved from: https://www.audiology.org/resources/documentlibrary/Pages/codeofethics.aspx

Johnson, E. (2012, April). 20Q: Same or different - Comparing the latest NAL and DSL prescriptive targets. AudiologyOnline, Article 769. Retrieved from: https://www.audiologyonline.com/

Keidser, G. & Alamudi, K. (2013). Real-life efficacy and reliability of training a hearing aid. Ear and Hearing, 34(5), 619-629.

Kochkin, S. (2000). Why my hearing aids are in the drawer: The consumer’s perspective. Hearing Journal, 50(2), 34-42.

Leavitt, R., & Flexer, C. (2012). The importance of audibility in successful amplification of hearing loss. Hearing Review, 19(13), 20-23.

Mueller, H.G. (1995). Probe-microphone measurements: Unplugged. Hearing Journal, 48(1), 10-12, 34-36.

Mueller, H.G. (2001a). Probe-microphone measurements: 20 years of progress. Trends in Amplification, 5(2), 3-39.

Mueller, H.G. (2001b, Jan). Using probe mics to measure directionality. AudiologyOnline, Ask the Expert #764. Retrieved from: https://www.audiologyonline.com/

Mueller, H.G. (2001c, Feb). Directionality and WDRC. AudiologyOnline, Ask the Expert #763. Retrieved from: https://www.audiologyonline.com/

Mueller, H.G. (2005). Probe-mic measures: Hearing aid fitting’s most neglected element. Hearing Journal, 57(10), 33-41.

Mueller, H.G. (2011, June). How loud is too loud? Using loudness discomfort level measures for hearing aid fitting and verification, Part 2. AudiologyOnline, Article 824. Retrieved from: https://www.audiologyonline.com/

Mueller, H.G., Alexander, J.M., & Scollie, S. (2013, June). Frequency lowering—The whole shebang. AudiologyOnline, Article 11913. Retrieved from: https://www.audiologyonline.com/

Mueller, H.G. & Bryant, M. (1991). Some overlooked uses of probe microphone measures. Seminars in Hearing, 12(1), 73-92.

Mueller, H.G. & Hawkins, D.B. (1992). Assessment of fitting arrangements, special circuitry, and features. In H.G. Mueller, D. Hawkins, J. Northern (Eds.). Probe microphone measurements: Hearing aid selection and assessment (pp. 92, 201-227). San Diego: Singular Press.

Mueller, H.G. & Picou, E.M. (2010). Survey examines popularity of real-ear probe-microphone measures. Hearing Journal, 63(5), 27-32.

Palmer, C. (2009). Best practice: It’s a matter of ethics. Audiology Today, 21(5), 31-35.

Palmer, C. (2012, August). Implementing a gain learning feature. AudiologyOnline, Article 11244. Retrieved from: https://www.audiologyonline.com/

Polonenko, M.J., Scollie, S.D., Moodie, S., Seewald, R.C., Laurnagaray, D., Shantz, J., & Richards, A. (2010). Fit to targets, preferred listening levels, and self-reported outcomes for the DSL v5.0a hearing aid prescription for adults. International Journal of Audiology, 49(8), 550-560.

Scollie, S. (2013, May). 20Q: The Ins and outs of frequency lowering amplification. AudiologyOnline, Article 11863. Retrieved from: https://www.audiologyonline.com/

Wu, Y.H. & Bentler, R.A. (2012). Clinical measures of hearing aid directivity: assumption, accuracy, and reliability. Ear and Hearing, 33(1), 44-56.

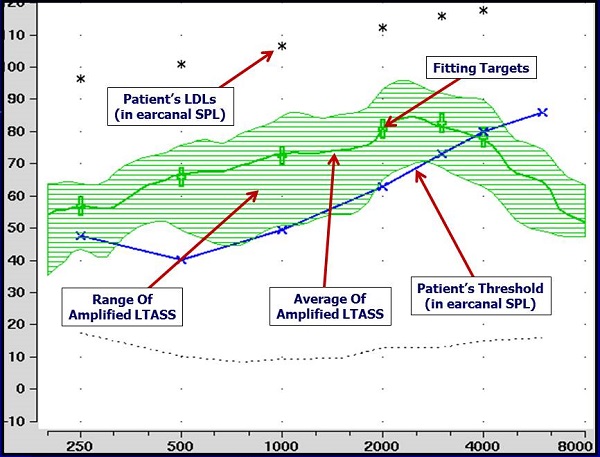

Appendix

Example of hearing aid output using a real-speech input with match to NAL-NL2 prescriptive targets for 65-dB input.

Cite this content as:

Mueller, H.G. (2014, January). 20Q: Real-ear probe-microphone measures - 30 years of progress? AudiologyOnline, Article 12410. Retrieved from: https://www.audiologyonline.com