Japanese people influenced less by lip movements when listening to another speaker: Evidence from a neuroimaging study.

KUMAMOTO UNIVERSITY - Which parts of a person's face do you look at when you listen to them speak? Lip movements affect how a listener perceives the voice information arriving at the ears, but native Japanese speakers are mostly unaffected by that part of the face. Recent research from Japan has revealed a clear difference in brain network activation between two groups of people, native English speakers and native Japanese speakers, during face-to-face vocal communication.

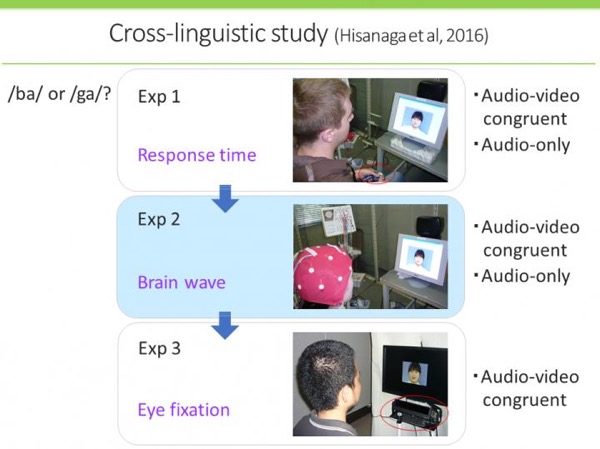

Kumamoto University researchers compared the McGurk effect in native English speakers and native Japanese speakers and found a major difference: English speakers "listen with their eyes" and Japanese speakers use only their ears. Audio-video and audio-only tests were performed in the first two experiments, and an audio-video test was performed in the third experiment. CREDIT: DR. KAORU SEKIYAMA

It is known that visual speech information, such as lip movement, affects the perception of voice information from the ears when listening to someone face-to-face. For example, lip movement can help a person to hear better under noisy conditions. Conversely, dubbed movie content, where the lip movement conflicts with a speaker's voice, gives a listener the illusion of hearing another sound. This illusion is called the "McGurk effect."

According to an analysis of previous behavioral studies, native Japanese speakers are not influenced by visual lip movements as much as native English speakers. To examine this phenomenon further, researchers from Kumamoto University measured and analyzed gaze patterns, brain waves, and reaction times for speech identification in two groups: 20 native Japanese speakers and 20 native English speakers.

The difference was clear. When natural speech is paired with lip movement, native English speakers focus their gaze on a speaker's lips before the emergence of any sound. The gaze of native Japanese speakers, however, is not as fixed. Furthermore, native English speakers were able to understand speech faster by combining the audio and visual cues, whereas native Japanese speakers showed delayed speech understanding when lip motion was in view.

"Native English speakers attempt to narrow down candidates for incoming sounds by using information from the lips which start moving a few hundreds of milliseconds before vocalizations begin. Native Japanese speakers, on the other hand, place their emphasis only on hearing, and visual information seems to require extra processing," explained Kumamoto University's Professor Kaoru Sekiyama, who led the research.

Kumamoto University researchers then teamed up with researchers from Sapporo Medical University and Japan's Advanced Telecommunications Research Institute International (ATR) to measure and analyze brain activation patterns using functional magnetic resonance imaging (fMRI). Their goal was to elucidate differences in brain activity between speakers of the two languages.

The functional connectivity in the brain between the area that deals with hearing and the area that deals with visual motion information, the primary auditory and middle temporal areas respectively, was stronger in native English speakers than in native Japanese speakers. This result strongly suggests that auditory and visual information are associated with each other at an early stage of information processing in an English speaker's brain, whereas the association is made at a later stage in a Japanese speaker's brain. Both the functional connectivity between the auditory and visual areas and the manner in which the two types of information are processed together were shown to differ clearly between speakers of the two languages.

"It has been said that video materials produce better results when studying a foreign language. However, it has also been reported that video materials do not have a very positive effect for native Japanese speakers," said Professor Sekiyama. "It may be that there are unique ways in which Japanese people process audio information, which are related to what we have shown in our recent research, that are behind this phenomenon."

These findings were published in the nature.com journal Scientific Reports on August 11th and October 13th, 2016.

References

J. Shinozaki, N. Hiroe, M. Sato, T. Nagamine, K. Sekiyama et al., "Impact of language on functional connectivity for audiovisual speech integration," Sci. Rep., vol. 6, p. 31388, Aug. 2016. DOI: 10.1038/srep31388.

S. Hisanaga, K. Sekiyama, T. Igasaki, and N. Murayama, "Language/Culture Modulates Brain and Gaze Processes in Audiovisual Speech Perception," Sci. Rep., vol. 6, p. 35265, Oct. 2016. DOI: 10.1038/srep35265.

Source: https://www.eurekalert.org/pub_releases/2016-11/ku-hwy111416.php